Zhiyang Li

KG-ViP: Bridging Knowledge Grounding and Visual Perception in Multi-modal LLMs for Visual Question Answering

Jan 14, 2026

Abstract:Multi-modal Large Language Models (MLLMs) for Visual Question Answering (VQA) often suffer from dual limitations: knowledge hallucination and insufficient fine-grained visual perception. Crucially, we identify that commonsense graphs and scene graphs provide precisely complementary solutions to these respective deficiencies by providing rich external knowledge and capturing fine-grained visual details. However, prior works typically treat them in isolation, overlooking their synergistic potential. To bridge this gap, we propose KG-ViP, a unified framework that empowers MLLMs by fusing scene graphs and commonsense graphs. The core of the KG-ViP framework is a novel retrieval-and-fusion pipeline that utilizes the query as a semantic bridge to progressively integrate both graphs, synthesizing a unified structured context that facilitates reliable multi-modal reasoning. Extensive experiments on FVQA 2.0+ and MVQA benchmarks demonstrate that KG-ViP significantly outperforms existing VQA methods.
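As a rough illustration of the retrieval-and-fusion idea, the Python sketch below uses the words of the query to select scene-graph triples, then pulls commonsense triples that mention the selected entities, and merges both into one text context. The triple format, the word-overlap matching, and the function name `fuse_graphs` are assumptions made for illustration; this is not the KG-ViP implementation.

```python
def fuse_graphs(query, scene_triples, commonsense_triples):
    """Toy query-guided fusion of two triple lists (hypothetical helper)."""
    query_words = set(query.lower().replace("?", "").split())

    # Step 1: keep scene-graph triples whose subject or object is named in the query.
    scene_hits = [t for t in scene_triples
                  if t[0] in query_words or t[2] in query_words]

    # Step 2: entities of the retained scene triples bridge to the commonsense graph.
    entities = {t[0] for t in scene_hits} | {t[2] for t in scene_hits}
    knowledge_hits = [t for t in commonsense_triples if t[0] in entities]

    # Step 3: serialize both sets into one structured context string.
    lines = [f"{s} {r} {o}" for s, r, o in scene_hits + knowledge_hits]
    return "\n".join(lines)


# Made-up example data, not taken from any benchmark.
scene = [("dog", "on", "sofa"), ("lamp", "near", "window")]
common = [("sofa", "used_for", "sitting"), ("lamp", "used_for", "lighting")]
context = fuse_graphs("What is the dog lying on?", scene, common)
print(context)
```

In this toy run only the triples connected to the query survive, so the context handed to the model stays short and relevant.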

Perceptual Quality Assessment of 3D Gaussian Splatting: A Subjective Dataset and Prediction Metric

Nov 11, 2025

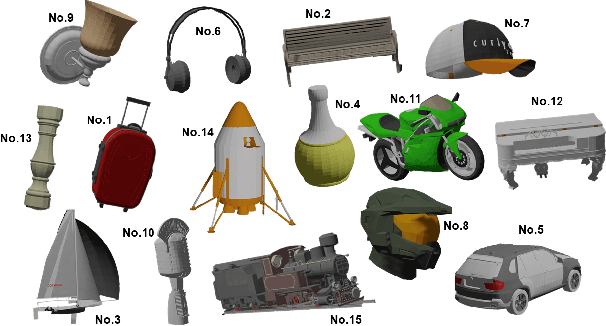

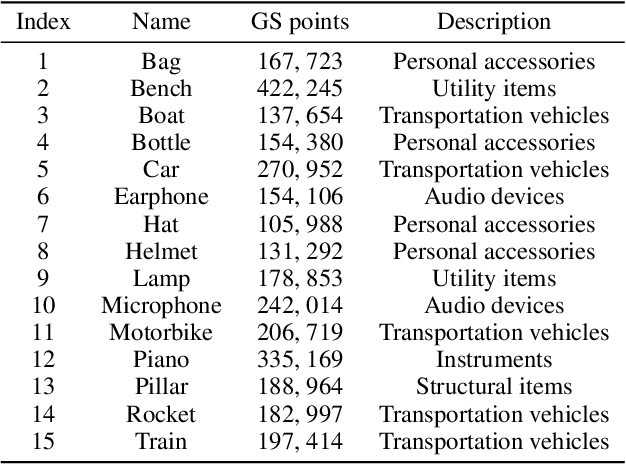

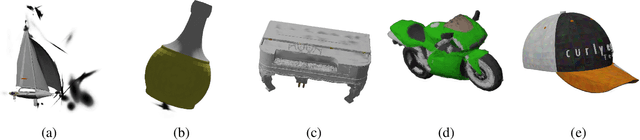

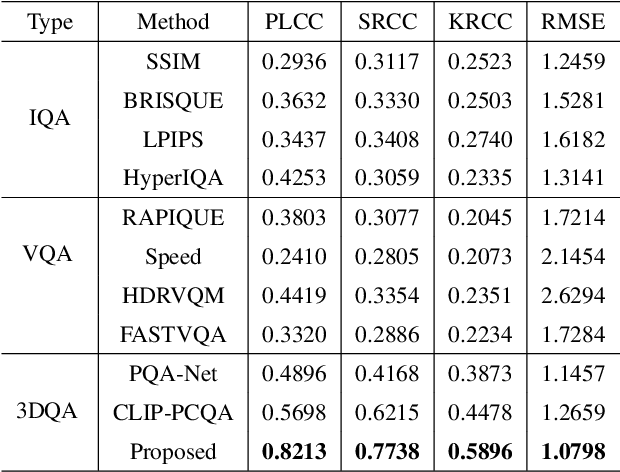

Abstract:With the rapid advancement of 3D visualization, 3D Gaussian Splatting (3DGS) has emerged as a leading technique for real-time, high-fidelity rendering. While prior research has emphasized algorithmic performance and visual fidelity, the perceptual quality of 3DGS-rendered content, especially under varying reconstruction conditions, remains largely underexplored. In practice, factors such as viewpoint sparsity, limited training iterations, point downsampling, noise, and color distortions can significantly degrade visual quality, yet their perceptual impact has not been systematically studied. To bridge this gap, we present 3DGS-QA, the first subjective quality assessment dataset for 3DGS. It comprises 225 degraded reconstructions across 15 object types, enabling a controlled investigation of common distortion factors. Based on this dataset, we introduce a no-reference quality prediction model that directly operates on native 3D Gaussian primitives, without requiring rendered images or ground-truth references. Our model extracts spatial and photometric cues from the Gaussian representation to estimate perceived quality in a structure-aware manner. We further benchmark existing quality assessment methods, spanning both traditional and learning-based approaches. Experimental results show that our method consistently achieves superior performance, highlighting its robustness and effectiveness for 3DGS content evaluation. The dataset and code are made publicly available at https://github.com/diaoyn/3DGSQA to facilitate future research in 3DGS quality assessment.

Discovering Galaxy Features via Dataset Distillation

Nov 29, 2023

Abstract:In many applications, Neural Nets (NNs) have classification performance on par with or even exceeding human capacity. Moreover, it is likely that NNs leverage underlying features that might differ from those humans perceive to classify. Can we "reverse-engineer" pertinent features to enhance our scientific understanding? Here, we apply this idea to the notoriously difficult task of galaxy classification: NNs have reached high performance for this task, but what does a neural net (NN) "see" when it classifies galaxies? Are there morphological features that the human eye might overlook that could help with the task and provide new insights? Can we visualize tracers of early evolution, or additionally incorporated spectral data? We present a novel way to summarize and visualize galaxy morphology through the lens of neural networks, leveraging Dataset Distillation, a recent deep-learning methodology with the primary objective to distill knowledge from a large dataset and condense it into a compact synthetic dataset, such that a model trained on this synthetic dataset achieves performance comparable to a model trained on the full dataset. We curate a class-balanced, medium-size high-confidence version of the Galaxy Zoo 2 dataset, and proceed with dataset distillation from our accurate NN-classifier to create synthesized prototypical images of galaxy morphological features, demonstrating its effectiveness. Of independent interest, we introduce a self-adaptive version of the state-of-the-art Matching Trajectory algorithm to automate the distillation process, and show enhanced performance on computer vision benchmarks.

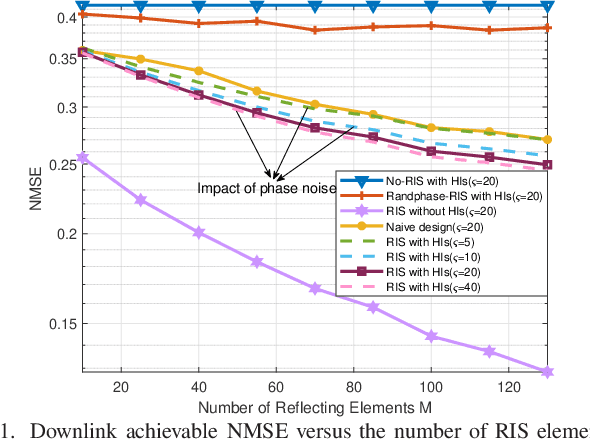

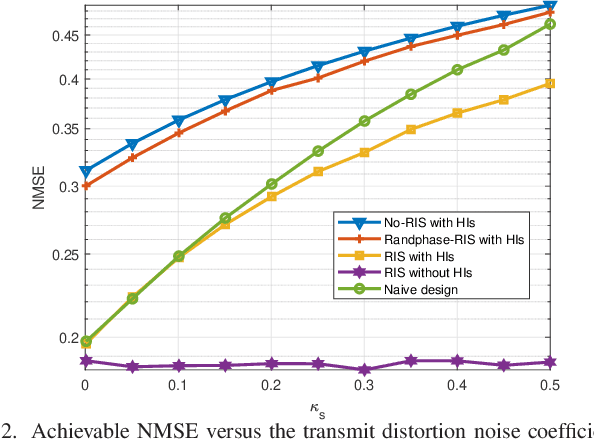

MSE-Based Transceiver Designs for RIS-Aided Communications With Hardware Impairments

Aug 16, 2022

Abstract:It is challenging to precisely configure the phase shifts of the reflecting elements at the reconfigurable intelligent surface (RIS) due to inherent hardware impairments (HIs). In this paper, the mean square error (MSE) performance is investigated in an RIS-aided single-user multiple-input multiple-output (MIMO) communication system with transceiver HIs and RIS phase noise. We aim to jointly optimize the transmit precoder, linear received equalizer, and RIS reflecting matrices to minimize the MSE. To tackle this problem, an iterative algorithm is proposed, wherein the beamforming matrices are alternately optimized. Specifically, for the beamforming optimization subproblem, we derive the closed-form expression of the optimal precoder and equalizer matrices. Then, for the phase shift optimization subproblem, an efficient algorithm based on the majorization-minimization (MM) method is proposed. Simulation results show that the proposed MSE-based RIS-aided transceiver scheme dramatically outperforms the conventional system algorithms that do not consider HIs at both the transceiver and the RIS.

* Accepted by IEEE Communications Letters
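To make the idea of a closed-form MSE-optimal equalizer concrete, here is a minimal scalar sketch in Python. It assumes a single-antenna link y = h(f s + eta) + n with unit-power symbol s and transmit distortion eta of variance `kappa_t * |f|^2`. This distortion model and all names are illustrative assumptions, far simpler than the MIMO system with RIS phase noise studied in the paper.

```python
def mmse_equalizer(h, f, noise_var, kappa_t=0.0):
    """Scalar MMSE equalizer g and its MSE for y = h*(f*s + eta) + n.

    eta is transmit distortion with variance kappa_t*|f|^2 (assumed model).
    """
    signal = abs(h * f) ** 2
    distortion = kappa_t * abs(h) ** 2 * abs(f) ** 2
    total = signal + distortion + noise_var
    g = (h * f).conjugate() / total
    mse = 1.0 - signal / total
    return g, mse


def mse_of(g, h, f, noise_var, kappa_t=0.0):
    """MSE E|g*y - s|^2 for an arbitrary equalizer g under the same model."""
    distortion = kappa_t * abs(h) ** 2 * abs(f) ** 2
    return abs(g * h * f - 1) ** 2 + abs(g) ** 2 * (distortion + noise_var)


# Made-up channel, precoder, and noise values.
h, f, noise_var, kappa_t = 0.8 + 0.6j, 1.0, 0.1, 0.05
g_opt, mse_opt = mmse_equalizer(h, f, noise_var, kappa_t)
_, mse_ideal = mmse_equalizer(h, f, noise_var)  # same link without distortion
print(mse_opt, mse_ideal)
```

Perturbing `g_opt` in any direction raises `mse_of`, and the distortion-aware MSE is higher than the ideal-hardware one, which is the gap a design that ignores impairments would misjudge.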

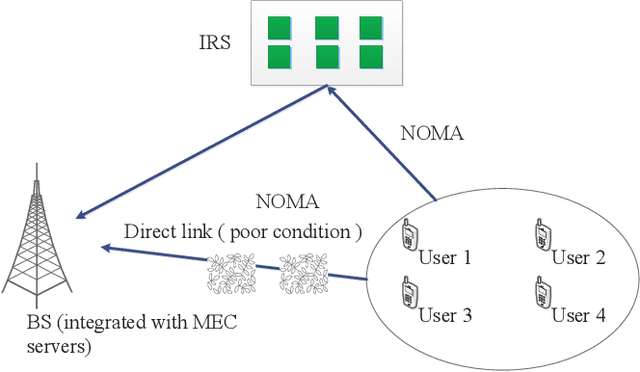

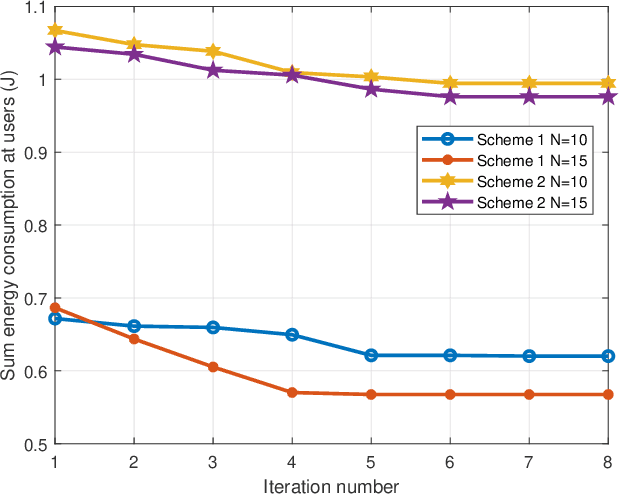

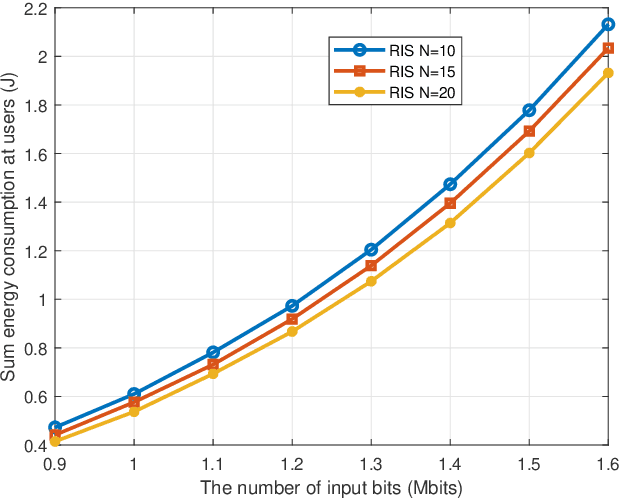

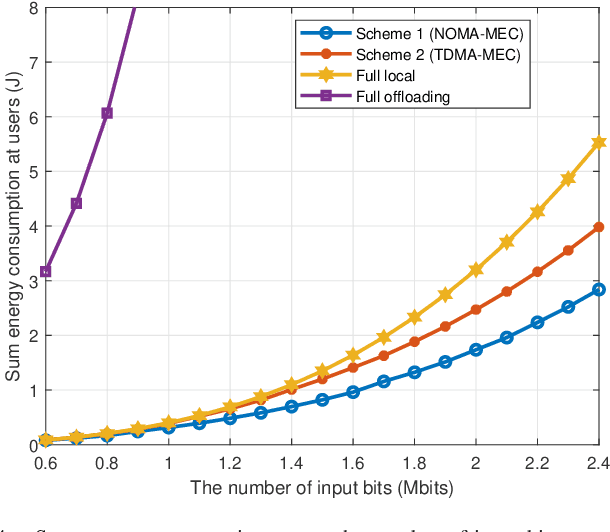

Energy Efficient Reconfigurable Intelligent Surface Enabled Mobile Edge Computing Networks with NOMA

Apr 30, 2021

Abstract:Reconfigurable intelligent surface (RIS) has emerged as a promising technology for achieving high spectrum and energy efficiency in future wireless communication networks. In this paper, we investigate an RIS-aided single-cell multi-user mobile edge computing (MEC) system where an RIS is deployed to support the communication between a base station (BS) equipped with MEC servers and multiple single-antenna users. To utilize the scarce frequency resource efficiently, we assume that users communicate with BS based on a non-orthogonal multiple access (NOMA) protocol. Each user has a computation task which can be computed locally or partially/fully offloaded to the BS. We aim to minimize the sum energy consumption of all users by jointly optimizing the passive phase shifters, the size of transmission data, transmission rate, power control, transmission time and the decoding order. Since the resulting problem is non-convex, we use the block coordinate descent method to alternately optimize two separated subproblems. More specifically, we use the dual method to tackle a subproblem with given phase shift and obtain the closed-form solution; and then we utilize penalty method to solve another subproblem for given power control. Moreover, in order to demonstrate the effectiveness of our proposed algorithm, we propose three benchmark schemes: the time-division multiple access (TDMA)-MEC scheme, the full local computing scheme and the full offloading scheme. We use an alternating 1-D search method and the dual method that can solve the TDMA-based transmission problem well. Numerical results demonstrate that the proposed scheme can increase the energy efficiency and achieve significant performance gains over the three benchmark schemes.
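The role of the decoding order can be seen in a small Python sketch of uplink NOMA with successive interference cancellation (SIC). The function names, the flat-channel model, and the numbers are assumptions chosen for illustration; the sketch does not reproduce the paper's optimization.

```python
import math


def noma_uplink_rates(powers, gains, noise, order):
    """Spectral efficiency (bit/s/Hz) of each user under SIC.

    `order` lists user indices in the order the receiver decodes them.
    """
    rates = [0.0] * len(powers)
    remaining = sum(p * g for p, g in zip(powers, gains))  # total received power
    for k in order:
        rx = powers[k] * gains[k]
        remaining -= rx  # users decoded later still act as interference
        rates[k] = math.log2(1.0 + rx / (remaining + noise))
    return rates


def offload_energy(bits, bandwidth_hz, rate, power):
    """Transmit energy in joules to offload `bits`: E = p * t."""
    t = bits / (bandwidth_hz * rate)
    return power * t


# Made-up transmit powers (W), channel gains, and noise power.
powers, gains, noise = [0.2, 0.1], [1.0, 0.5], 0.01
rates_a = noma_uplink_rates(powers, gains, noise, order=[0, 1])
rates_b = noma_uplink_rates(powers, gains, noise, order=[1, 0])
print(rates_a, rates_b)
```

Swapping the order changes the individual rates, and therefore each user's offloading time and energy, while the sum rate stays the same. This is one reason the decoding order appears as an optimization variable.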

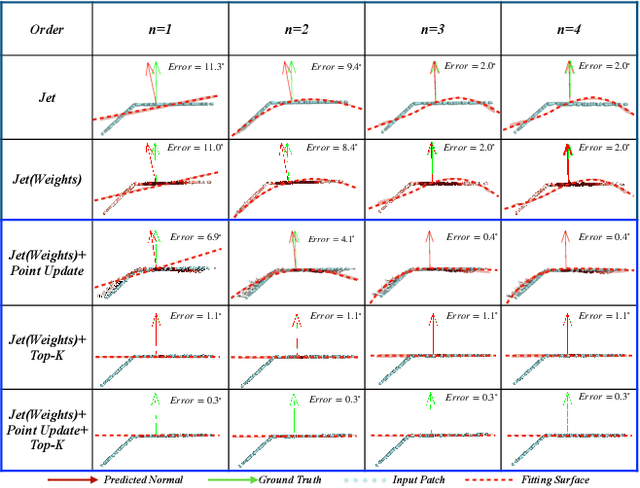

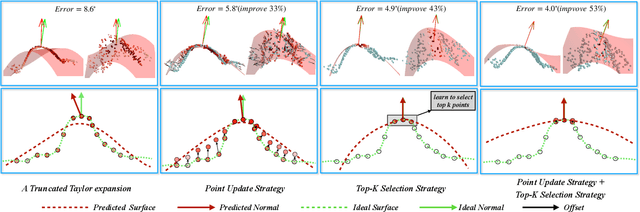

Improvement of Normal Estimation for PointClouds via Simplifying Surface Fitting

Apr 21, 2021

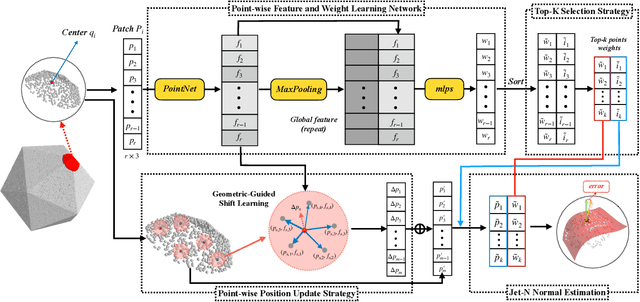

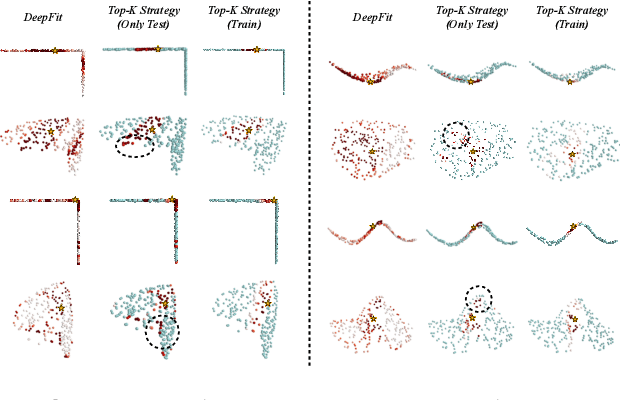

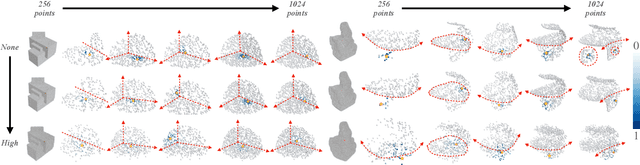

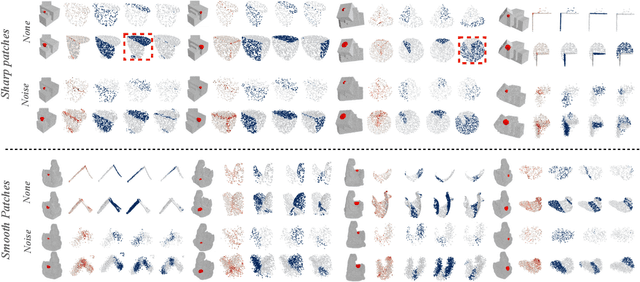

Abstract:With the rapid development of neural networks in recent years, the task of normal estimation has once again attracted attention. By introducing neural networks into classic methods based on problem-specific knowledge, the adaptability of normal estimation algorithms to noise and scale has been greatly improved. However, the compatibility between neural networks and the traditional methods has not been considered. In the spirit of Occam's razor, that simpler is better, we observe that a more simplified surface fitting process can significantly improve the accuracy of normal estimation. In this paper, two simple-yet-effective strategies are proposed to address the compatibility between neural networks and the surface fitting process. First, a dynamic top-k selection strategy is introduced to better focus on the most critical points of a given patch; the points selected by our learning method tend to fit a surface by way of a simple tangent plane, which can dramatically improve the normal estimation results for patches with sharp corners or complex patterns. Then, we propose a point update strategy before local surface fitting, which smooths the sharp boundary of the patch to simplify the surface fitting process, significantly reducing the fitting distortion and improving the accuracy of the predicted point normal. The experiments analyze the effectiveness of our proposed strategies and demonstrate that our method achieves SOTA results, with higher estimation accuracy than most existing approaches.
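The effect of top-k selection on plane fitting can be sketched in a few lines of Python. The example assumes per-point weights are already available (in the paper they would come from a learned network), uses a fixed k rather than a dynamic one, and fits a height-field plane z = ax + by + c by least squares. The function names and data are hypothetical.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def topk_plane_normal(points, weights, k):
    """Fit z = a*x + b*y + c to the k highest-weight points; return the unit normal."""
    keep = sorted(range(len(points)), key=lambda i: weights[i], reverse=True)[:k]
    pts = [points[i] for i in keep]
    sx = sum(p[0] for p in pts)
    sy = sum(p[1] for p in pts)
    sz = sum(p[2] for p in pts)
    sxx = sum(p[0] * p[0] for p in pts)
    syy = sum(p[1] * p[1] for p in pts)
    sxy = sum(p[0] * p[1] for p in pts)
    sxz = sum(p[0] * p[2] for p in pts)
    syz = sum(p[1] * p[2] for p in pts)
    # Normal equations of the least-squares fit, solved with Cramer's rule.
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, float(len(pts))]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    coeffs = []
    for col in range(3):
        M = [row[:] for row in A]
        for r in range(3):
            M[r][col] = rhs[r]
        coeffs.append(det3(M) / d)
    a, b, _ = coeffs
    norm = math.sqrt(a * a + b * b + 1.0)
    return (-a / norm, -b / norm, 1.0 / norm)


# Four points on the plane z = 0 plus one low-weight outlier (made-up data).
points = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (1, 1, 0), (2, 2, 3)]
weights = [0.9, 0.8, 0.85, 0.95, 0.1]
n_top4 = topk_plane_normal(points, weights, k=4)
n_all = topk_plane_normal(points, weights, k=5)
print(n_top4, n_all)
```

With k = 4 the outlier is dropped and the normal points straight along the z axis; with all five points the fitted plane tilts noticeably.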

Fast and Accurate Normal Estimation for Point Cloud via Patch Stitching

Mar 31, 2021

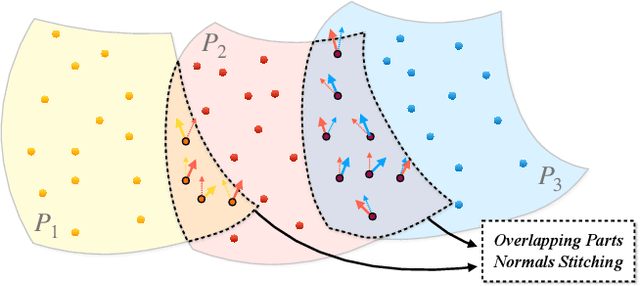

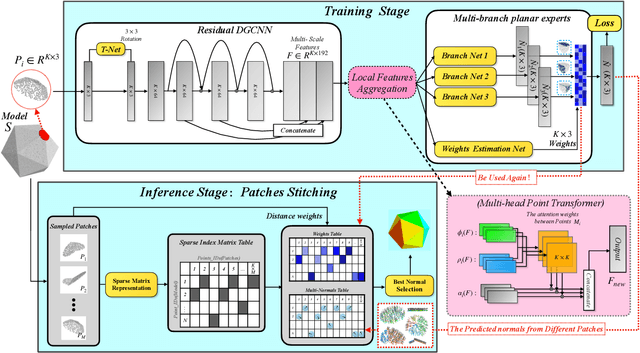

Abstract:This paper presents an effective normal estimation method adopting multi-patch stitching for an unstructured point cloud. The majority of learning-based approaches encode a local patch around each point of a whole model and estimate the normals in a point-by-point manner. In contrast, we suggest a more efficient pipeline, in which we introduce a patch-level normal estimation architecture to process a series of overlapping patches. Additionally, a multi-normal selection method based on weights, dubbed as multi-patch stitching, integrates the normals from the overlapping patches. To reduce the adverse effects of sharp corners or noise in a patch, we introduce an adaptive local feature aggregation layer to focus on an anisotropic neighborhood. We then utilize a multi-branch planar experts module to break the mutual influence between underlying piecewise surfaces in a patch. At the stitching stage, we use the learned weights of multi-branch planar experts and distance weights between points to select the best normal from the overlapping parts. Furthermore, we put forward constructing a sparse matrix representation to reduce large-scale retrieval overheads for the loop iterations dramatically. Extensive experiments demonstrate that our method achieves SOTA results with the advantage of lower computational costs and higher robustness to noise over most of the existing approaches.
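The stitching step can be pictured as a per-point selection among candidate normals from overlapping patches. The Python sketch below scores each candidate by a learned weight multiplied by a distance weight and keeps the best one. The Gaussian distance weight, the `sigma` parameter, and the function name are assumptions for illustration, not the paper's exact formulation.

```python
import math


def stitch_normal(candidates, sigma=1.0):
    """Pick one normal for a point covered by several patches.

    `candidates` holds (normal, learned_weight, distance_to_patch_center) tuples.
    """
    def score(candidate):
        _, learned_weight, distance = candidate
        # Assumed distance weight: candidates from nearby patch centers count more.
        return learned_weight * math.exp(-(distance ** 2) / (2.0 * sigma ** 2))

    return max(candidates, key=score)[0]


# Two overlapping patches propose different normals for the same point (made-up values).
candidates = [
    ((0.0, 0.0, 1.0), 0.9, 2.0),  # confident, but the point is far from this patch center
    ((0.0, 1.0, 0.0), 0.6, 0.2),  # less confident, but the point is near this patch center
]
best = stitch_normal(candidates)
print(best)
```

Here the second candidate wins because its distance weight outweighs the first candidate's higher learned weight.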
