Tim Pearce

MineWorld: a Real-Time and Open-Source Interactive World Model on Minecraft

Apr 11, 2025

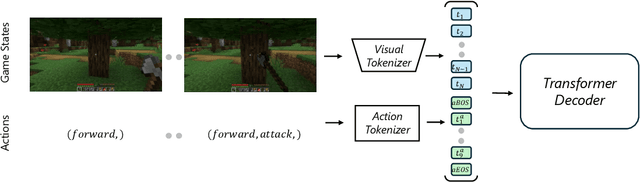

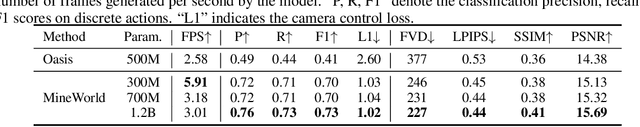

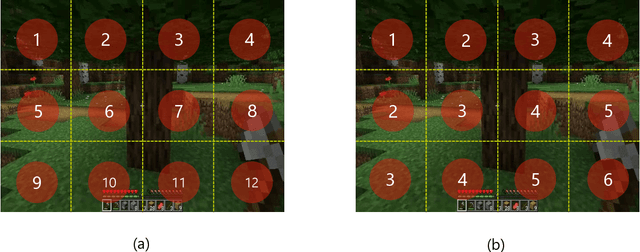

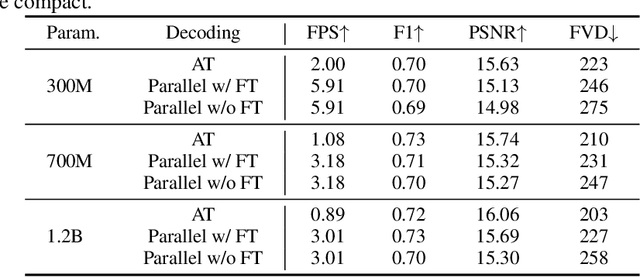

Abstract:World modeling is a crucial task for enabling intelligent agents to effectively interact with humans and operate in dynamic environments. In this work, we propose MineWorld, a real-time interactive world model on Minecraft, an open-ended sandbox game which has been utilized as a common testbed for world modeling. MineWorld is driven by a visual-action autoregressive Transformer, which takes paired game scenes and corresponding actions as input, and generates consequent new scenes following the actions. Specifically, by transforming visual game scenes and actions into discrete token ids with an image tokenizer and an action tokenizer correspondingly, we consist the model input with the concatenation of the two kinds of ids interleaved. The model is then trained with next token prediction to learn rich representations of game states as well as the conditions between states and actions simultaneously. In inference, we develop a novel parallel decoding algorithm that predicts the spatial redundant tokens in each frame at the same time, letting models in different scales generate $4$ to $7$ frames per second and enabling real-time interactions with game players. In evaluation, we propose new metrics to assess not only visual quality but also the action following capacity when generating new scenes, which is crucial for a world model. Our comprehensive evaluation shows the efficacy of MineWorld, outperforming SoTA open-sourced diffusion based world models significantly. The code and model have been released.

Fast Autoregressive Video Generation with Diagonal Decoding

Mar 18, 2025

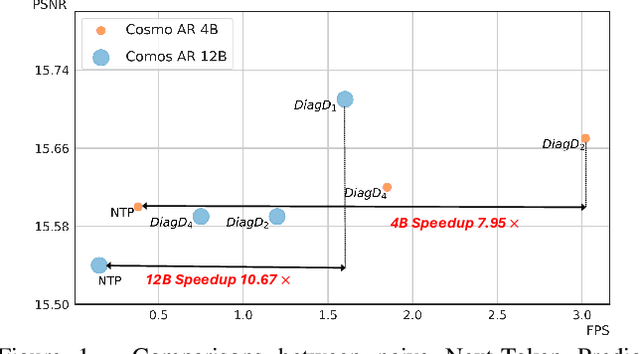

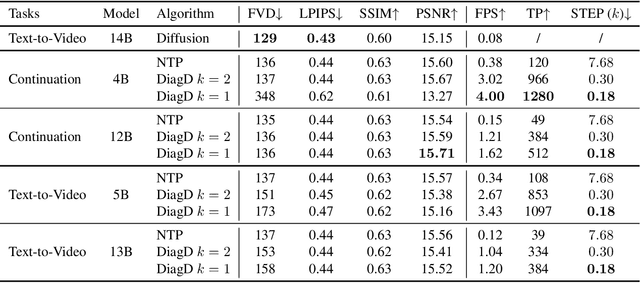

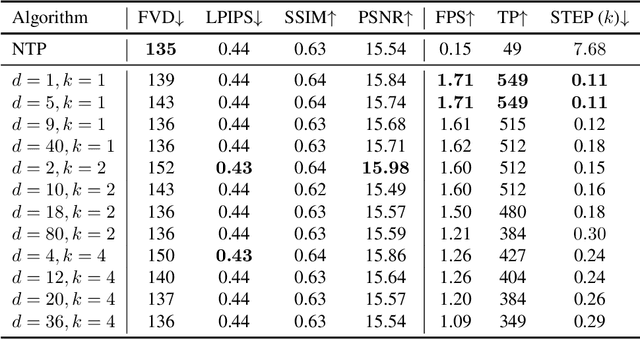

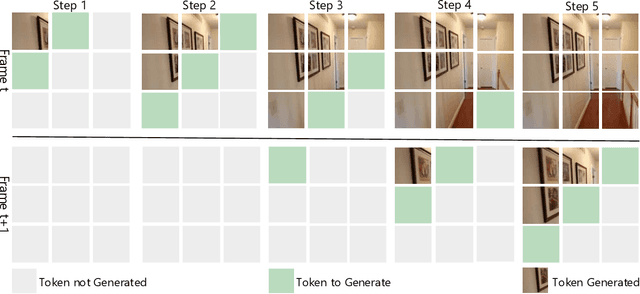

Abstract:Autoregressive Transformer models have demonstrated impressive performance in video generation, but their sequential token-by-token decoding process poses a major bottleneck, particularly for long videos represented by tens of thousands of tokens. In this paper, we propose Diagonal Decoding (DiagD), a training-free inference acceleration algorithm for autoregressively pre-trained models that exploits spatial and temporal correlations in videos. Our method generates tokens along diagonal paths in the spatial-temporal token grid, enabling parallel decoding within each frame as well as partially overlapping across consecutive frames. The proposed algorithm is versatile and adaptive to various generative models and tasks, while providing flexible control over the trade-off between inference speed and visual quality. Furthermore, we propose a cost-effective finetuning strategy that aligns the attention patterns of the model with our decoding order, further mitigating the training-inference gap on small-scale models. Experiments on multiple autoregressive video generation models and datasets demonstrate that DiagD achieves up to $10\times$ speedup compared to naive sequential decoding, while maintaining comparable visual fidelity.

Scaling Laws for Pre-training Agents and World Models

Nov 07, 2024

Abstract:The performance of embodied agents has been shown to improve by increasing model parameters, dataset size, and compute. This has been demonstrated in domains from robotics to video games, when generative learning objectives on offline datasets (pre-training) are used to model an agent's behavior (imitation learning) or their environment (world modeling). This paper characterizes the role of scale in these tasks more precisely. Going beyond the simple intuition that `bigger is better', we show that the same types of power laws found in language modeling (e.g. between loss and optimal model size), also arise in world modeling and imitation learning. However, the coefficients of these laws are heavily influenced by the tokenizer, task \& architecture -- this has important implications on the optimal sizing of models and data.

Reconciling Kaplan and Chinchilla Scaling Laws

Jun 12, 2024Abstract:Kaplan et al. [2020] (`Kaplan') and Hoffmann et al. [2022] (`Chinchilla') studied the scaling behavior of transformers trained on next-token language prediction. These studies produced different estimates for how the number of parameters ($N$) and training tokens ($D$) should be set to achieve the lowest possible loss for a given compute budget ($C$). Kaplan: $N_\text{optimal} \propto C^{0.73}$, Chinchilla: $N_\text{optimal} \propto C^{0.50}$. This note finds that much of this discrepancy can be attributed to Kaplan counting non-embedding rather than total parameters, combined with their analysis being performed at small scale. Simulating the Chinchilla study under these conditions produces biased scaling coefficients close to Kaplan's. Hence, this note reaffirms Chinchilla's scaling coefficients, by explaining the cause of Kaplan's original overestimation.

Diffusion for World Modeling: Visual Details Matter in Atari

May 20, 2024

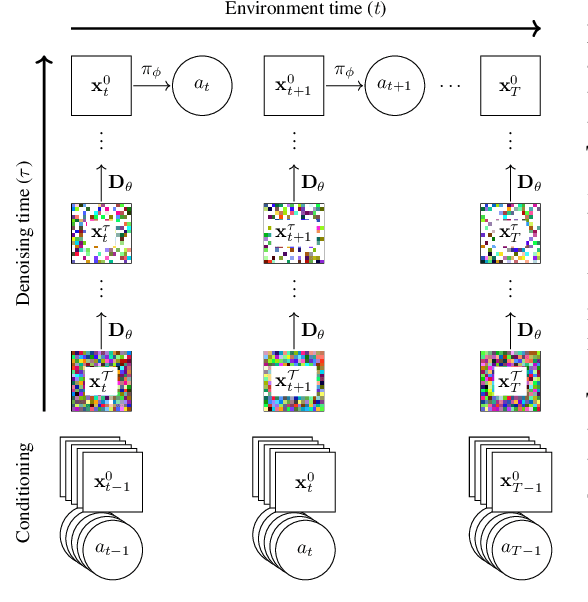

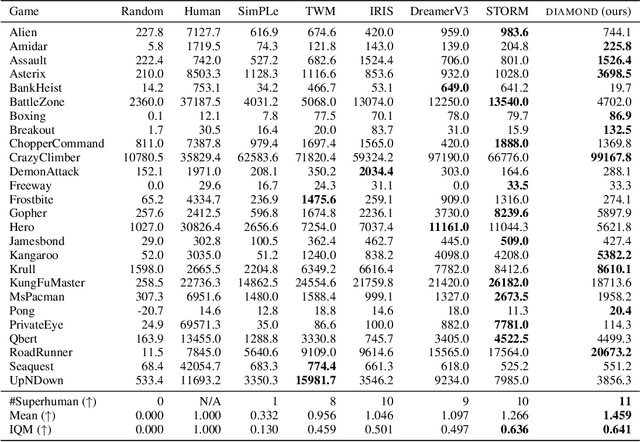

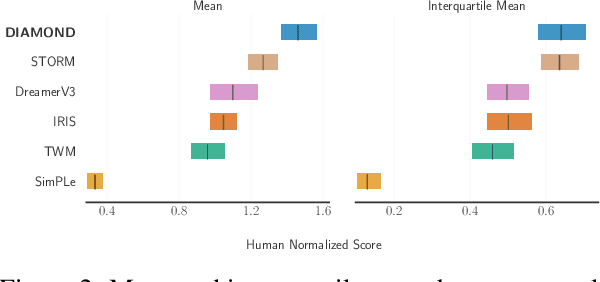

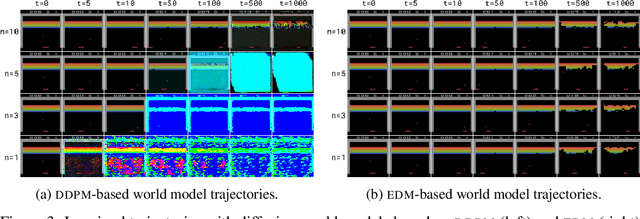

Abstract:World models constitute a promising approach for training reinforcement learning agents in a safe and sample-efficient manner. Recent world models predominantly operate on sequences of discrete latent variables to model environment dynamics. However, this compression into a compact discrete representation may ignore visual details that are important for reinforcement learning. Concurrently, diffusion models have become a dominant approach for image generation, challenging well-established methods modeling discrete latents. Motivated by this paradigm shift, we introduce DIAMOND (DIffusion As a Model Of eNvironment Dreams), a reinforcement learning agent trained in a diffusion world model. We analyze the key design choices that are required to make diffusion suitable for world modeling, and demonstrate how improved visual details can lead to improved agent performance. DIAMOND achieves a mean human normalized score of 1.46 on the competitive Atari 100k benchmark; a new best for agents trained entirely within a world model. To foster future research on diffusion for world modeling, we release our code, agents and playable world models at https://github.com/eloialonso/diamond.

C-GAIL: Stabilizing Generative Adversarial Imitation Learning with Control Theory

Feb 26, 2024

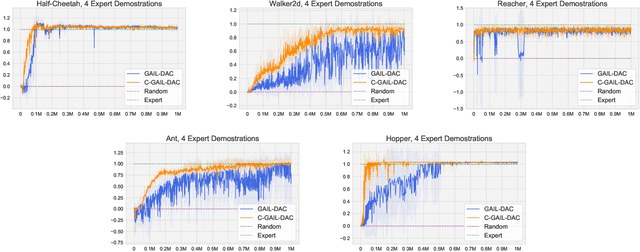

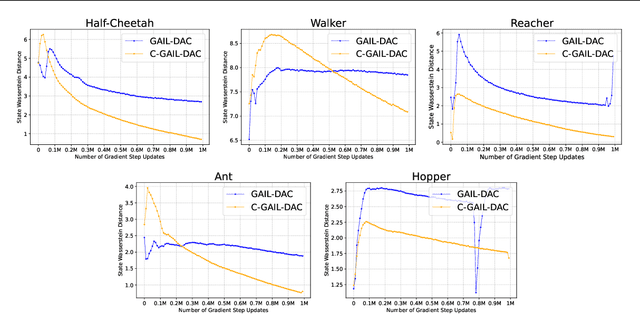

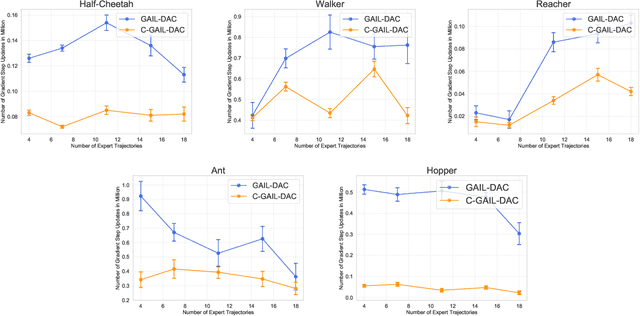

Abstract:Generative Adversarial Imitation Learning (GAIL) trains a generative policy to mimic a demonstrator. It uses on-policy Reinforcement Learning (RL) to optimize a reward signal derived from a GAN-like discriminator. A major drawback of GAIL is its training instability - it inherits the complex training dynamics of GANs, and the distribution shift introduced by RL. This can cause oscillations during training, harming its sample efficiency and final policy performance. Recent work has shown that control theory can help with the convergence of a GAN's training. This paper extends this line of work, conducting a control-theoretic analysis of GAIL and deriving a novel controller that not only pushes GAIL to the desired equilibrium but also achieves asymptotic stability in a 'one-step' setting. Based on this, we propose a practical algorithm 'Controlled-GAIL' (C-GAIL). On MuJoCo tasks, our controlled variant is able to speed up the rate of convergence, reduce the range of oscillation and match the expert's distribution more closely both for vanilla GAIL and GAIL-DAC.

Coalitional Bargaining via Reinforcement Learning: An Application to Collaborative Vehicle Routing

Oct 26, 2023Abstract:Collaborative Vehicle Routing is where delivery companies cooperate by sharing their delivery information and performing delivery requests on behalf of each other. This achieves economies of scale and thus reduces cost, greenhouse gas emissions, and road congestion. But which company should partner with whom, and how much should each company be compensated? Traditional game theoretic solution concepts, such as the Shapley value or nucleolus, are difficult to calculate for the real-world problem of Collaborative Vehicle Routing due to the characteristic function scaling exponentially with the number of agents. This would require solving the Vehicle Routing Problem (an NP-Hard problem) an exponential number of times. We therefore propose to model this problem as a coalitional bargaining game where - crucially - agents are not given access to the characteristic function. Instead, we implicitly reason about the characteristic function, and thus eliminate the need to evaluate the VRP an exponential number of times - we only need to evaluate it once. Our contribution is that our decentralised approach is both scalable and considers the self-interested nature of companies. The agents learn using a modified Independent Proximal Policy Optimisation. Our RL agents outperform a strong heuristic bot. The agents correctly identify the optimal coalitions 79% of the time with an average optimality gap of 4.2% and reduction in run-time of 62%.

Fair collaborative vehicle routing: A deep multi-agent reinforcement learning approach

Oct 26, 2023Abstract:Collaborative vehicle routing occurs when carriers collaborate through sharing their transportation requests and performing transportation requests on behalf of each other. This achieves economies of scale, thus reducing cost, greenhouse gas emissions and road congestion. But which carrier should partner with whom, and how much should each carrier be compensated? Traditional game theoretic solution concepts are expensive to calculate as the characteristic function scales exponentially with the number of agents. This would require solving the vehicle routing problem (NP-hard) an exponential number of times. We therefore propose to model this problem as a coalitional bargaining game solved using deep multi-agent reinforcement learning, where - crucially - agents are not given access to the characteristic function. Instead, we implicitly reason about the characteristic function; thus, when deployed in production, we only need to evaluate the expensive post-collaboration vehicle routing problem once. Our contribution is that we are the first to consider both the route allocation problem and gain sharing problem simultaneously - without access to the expensive characteristic function. Through decentralised machine learning, our agents bargain with each other and agree to outcomes that correlate well with the Shapley value - a fair profit allocation mechanism. Importantly, we are able to achieve a reduction in run-time of 88%.

* Final, published version can be found here: https://www.sciencedirect.com/science/article/pii/S0968090X23003662

TiZero: Mastering Multi-Agent Football with Curriculum Learning and Self-Play

Feb 21, 2023

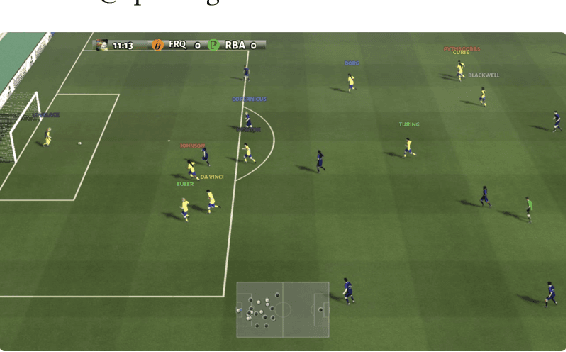

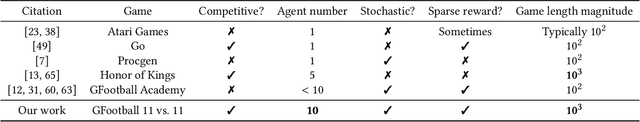

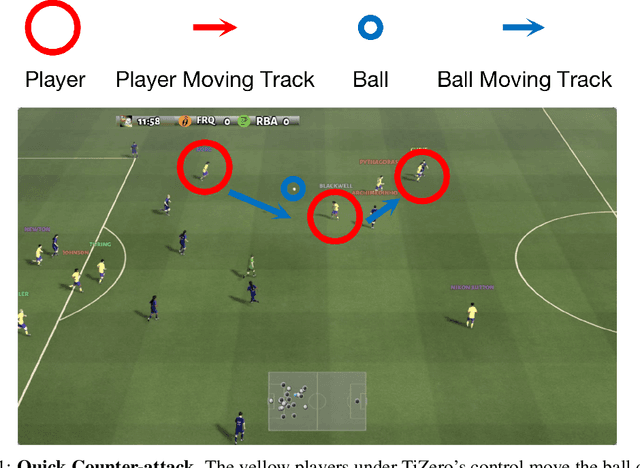

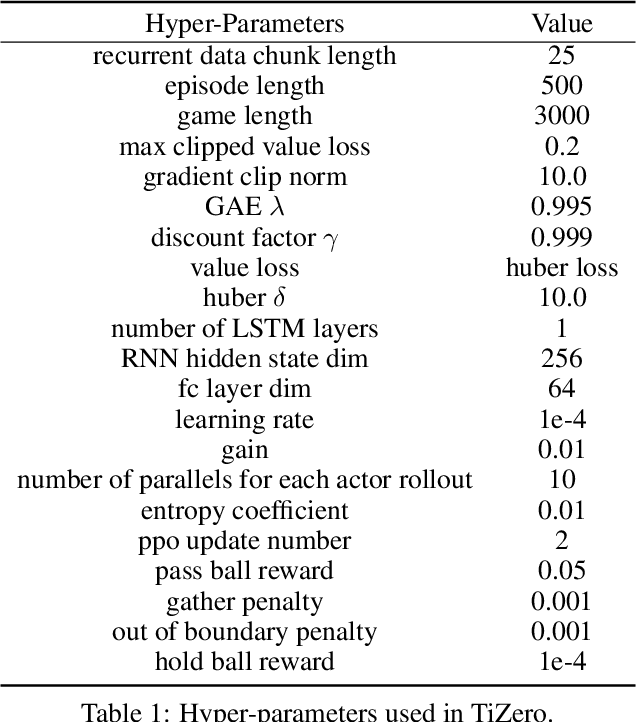

Abstract:Multi-agent football poses an unsolved challenge in AI research. Existing work has focused on tackling simplified scenarios of the game, or else leveraging expert demonstrations. In this paper, we develop a multi-agent system to play the full 11 vs. 11 game mode, without demonstrations. This game mode contains aspects that present major challenges to modern reinforcement learning algorithms; multi-agent coordination, long-term planning, and non-transitivity. To address these challenges, we present TiZero; a self-evolving, multi-agent system that learns from scratch. TiZero introduces several innovations, including adaptive curriculum learning, a novel self-play strategy, and an objective that optimizes the policies of multiple agents jointly. Experimentally, it outperforms previous systems by a large margin on the Google Research Football environment, increasing win rates by over 30%. To demonstrate the generality of TiZero's innovations, they are assessed on several environments beyond football; Overcooked, Multi-agent Particle-Environment, Tic-Tac-Toe and Connect-Four.

Imitating Human Behaviour with Diffusion Models

Jan 25, 2023

Abstract:Diffusion models have emerged as powerful generative models in the text-to-image domain. This paper studies their application as observation-to-action models for imitating human behaviour in sequential environments. Human behaviour is stochastic and multimodal, with structured correlations between action dimensions. Meanwhile, standard modelling choices in behaviour cloning are limited in their expressiveness and may introduce bias into the cloned policy. We begin by pointing out the limitations of these choices. We then propose that diffusion models are an excellent fit for imitating human behaviour, since they learn an expressive distribution over the joint action space. We introduce several innovations to make diffusion models suitable for sequential environments; designing suitable architectures, investigating the role of guidance, and developing reliable sampling strategies. Experimentally, diffusion models closely match human demonstrations in a simulated robotic control task and a modern 3D gaming environment.

* Published in ICLR 2023

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge