Stephen Mak

Fair collaborative vehicle routing: A deep multi-agent reinforcement learning approach

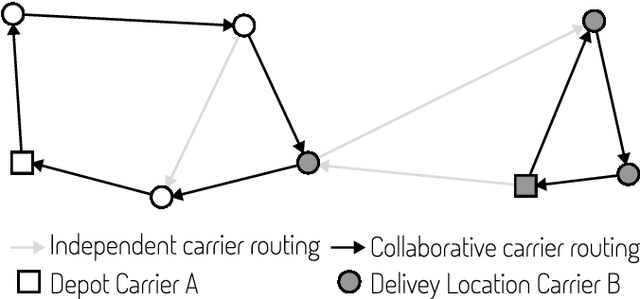

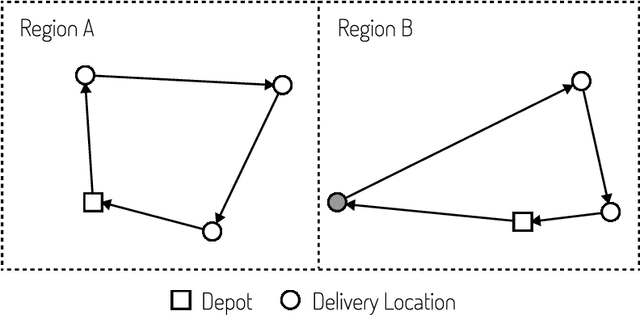

Oct 26, 2023

Abstract: Collaborative vehicle routing occurs when carriers collaborate through sharing their transportation requests and performing transportation requests on behalf of each other. This achieves economies of scale, thus reducing cost, greenhouse gas emissions and road congestion. But which carrier should partner with whom, and how much should each carrier be compensated? Traditional game theoretic solution concepts are expensive to calculate as the characteristic function scales exponentially with the number of agents. This would require solving the vehicle routing problem (NP-hard) an exponential number of times. We therefore propose to model this problem as a coalitional bargaining game solved using deep multi-agent reinforcement learning, where - crucially - agents are not given access to the characteristic function. Instead, we implicitly reason about the characteristic function; thus, when deployed in production, we only need to evaluate the expensive post-collaboration vehicle routing problem once. Our contribution is that we are the first to consider both the route allocation problem and gain sharing problem simultaneously - without access to the expensive characteristic function. Through decentralised machine learning, our agents bargain with each other and agree to outcomes that correlate well with the Shapley value - a fair profit allocation mechanism. Importantly, we are able to achieve a reduction in run-time of 88%.

* Final, published version can be found here: https://www.sciencedirect.com/science/article/pii/S0968090X23003662

Coalitional Bargaining via Reinforcement Learning: An Application to Collaborative Vehicle Routing

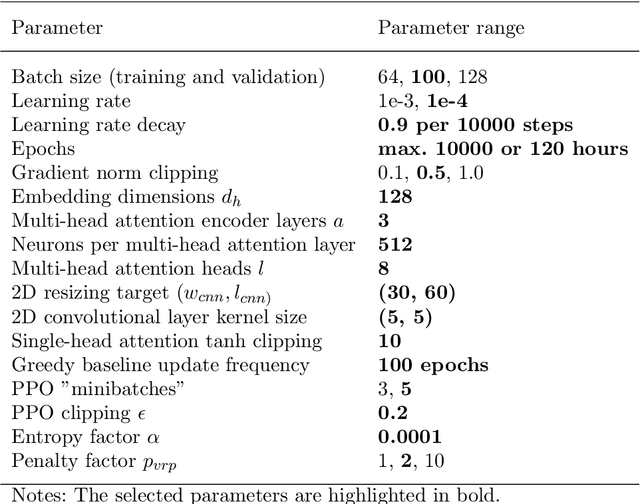

Oct 26, 2023

Abstract: Collaborative Vehicle Routing is where delivery companies cooperate by sharing their delivery information and performing delivery requests on behalf of each other. This achieves economies of scale and thus reduces cost, greenhouse gas emissions, and road congestion. But which company should partner with whom, and how much should each company be compensated? Traditional game theoretic solution concepts, such as the Shapley value or nucleolus, are difficult to calculate for the real-world problem of Collaborative Vehicle Routing due to the characteristic function scaling exponentially with the number of agents. This would require solving the Vehicle Routing Problem (an NP-Hard problem) an exponential number of times. We therefore propose to model this problem as a coalitional bargaining game where - crucially - agents are not given access to the characteristic function. Instead, we implicitly reason about the characteristic function, and thus eliminate the need to evaluate the VRP an exponential number of times - we only need to evaluate it once. Our contribution is that our decentralised approach is both scalable and considers the self-interested nature of companies. The agents learn using a modified Independent Proximal Policy Optimisation. Our RL agents outperform a strong heuristic bot. The agents correctly identify the optimal coalitions 79% of the time with an average optimality gap of 4.2% and reduction in run-time of 62%.
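To illustrate why the characteristic function is the bottleneck, here is a minimal sketch of an exact Shapley value computation by enumerating coalitions. It is not the method from the paper. The carrier names, the `TOY_GAINS` table, and the helper `toy_gain` are hypothetical stand-ins: in the real setting each lookup would be a full vehicle routing solve, and the sketch counts how many distinct coalitions get evaluated.

```python
from itertools import combinations
from math import factorial

# Hypothetical collaboration gains for three carriers (illustrative numbers only).
# In collaborative vehicle routing, each entry would require solving a VRP.
TOY_GAINS = {
    frozenset(): 0.0,
    frozenset("A"): 0.0,
    frozenset("B"): 0.0,
    frozenset("C"): 0.0,
    frozenset("AB"): 6.0,
    frozenset("AC"): 4.0,
    frozenset("BC"): 2.0,
    frozenset("ABC"): 9.0,
}

evaluated = set()  # distinct coalitions whose value was requested


def toy_gain(coalition):
    """Stand-in characteristic function: a table lookup instead of a VRP solve."""
    evaluated.add(coalition)
    return TOY_GAINS[coalition]


def shapley_values(players, value):
    """Exact Shapley values: weighted average of each player's marginal gains."""
    n = len(players)
    phi = {p: 0.0 for p in players}
    for p in players:
        others = [q for q in players if q != p]
        for k in range(n):  # k = size of the coalition before p joins
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = frozenset(subset)
                phi[p] += weight * (value(s | {p}) - value(s))
    return phi


carriers = ["A", "B", "C"]
phi = shapley_values(carriers, toy_gain)
print(phi)             # per-carrier share of the total gain
print(len(evaluated))  # number of distinct coalitions evaluated: 2 ** len(carriers)
```

With three carriers the table has only 8 entries, but the count doubles with every extra carrier, which is the exponential growth the abstract refers to.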

Unlocking Carbon Reduction Potential with Reinforcement Learning for the Three-Dimensional Loading Capacitated Vehicle Routing Problem

Jul 22, 2023

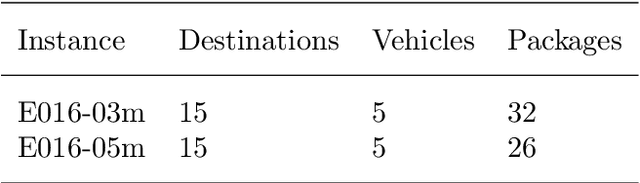

Abstract: Heavy goods vehicles are vital backbones of the supply chain delivery system but also contribute significantly to carbon emissions with only 60% loading efficiency in the United Kingdom. Collaborative vehicle routing has been proposed as a solution to increase efficiency, but challenges remain to make this a possibility. One key challenge is the efficient computation of viable solutions for co-loading and routing. Current operations research methods suffer from non-linear scaling with increasing problem size and are therefore bound to limited geographic areas to compute results in time for day-to-day operations. This only allows for local optima in routing and leaves global optimisation potential untouched. We develop a reinforcement learning model to solve the three-dimensional loading capacitated vehicle routing problem in approximately linear time. While this problem has been studied extensively in operations research, no publications on solving it with reinforcement learning exist. We demonstrate the favourable scaling of our reinforcement learning model and benchmark our routing performance against state-of-the-art methods. The model performs within an average gap of 3.83% to 8.10% compared to established methods. Our model not only represents a promising first step towards large-scale logistics optimisation with reinforcement learning but also lays the foundation for this research stream.

Towards Accelerating Benders Decomposition via Reinforcement Learning Surrogate Models

Jul 17, 2023

Abstract: Stochastic optimization (SO) attempts to offer optimal decisions in the presence of uncertainty. Often, the classical formulation of these problems becomes intractable due to (a) the number of scenarios required to capture the uncertainty and (b) the discrete nature of real-world planning problems. To overcome these tractability issues, practitioners turn to decomposition methods that divide the problem into smaller, more tractable sub-problems. The focal decomposition method of this paper is Benders decomposition (BD), which decomposes stochastic optimization problems on the basis of scenario independence. In this paper we propose a method of accelerating BD with the aid of a surrogate model in place of an NP-hard integer master problem. Through the acceleration method we observe 30% faster average convergence when compared to other accelerated BD implementations. We introduce a reinforcement learning agent as a surrogate and demonstrate how it can be used to solve a stochastic inventory management problem.

Will bots take over the supply chain? Revisiting Agent-based supply chain automation

Sep 03, 2021

Abstract: Agent-based systems have the capability to fuse information from many distributed sources and create better plans faster. This feature makes agent-based systems naturally suitable to address the challenges in Supply Chain Management (SCM). Although agent-based supply chain systems have been proposed since early 2000, industrial uptake of them has been lagging. The reasons quoted include the immaturity of the technology, a lack of interoperability with supply chain information systems, and a lack of trust in Artificial Intelligence (AI). In this paper, we revisit the agent-based supply chain and review the state of the art. We find that agent-based technology has matured, and other supporting technologies that are penetrating supply chains are filling in gaps, leaving the concept applicable to a wider range of functions. For example, the ubiquity of IoT technology helps agents "sense" the state of affairs in a supply chain and opens up new possibilities for automation. Digital ledgers help securely transfer data between third parties, making agent-based information sharing possible, without the need to integrate Enterprise Resource Planning (ERP) systems. Learning functionality in agents enables agents to move beyond automation and towards autonomy. We note this convergence effect through conceptualising an agent-based supply chain framework, reviewing its components, and highlighting research challenges that need to be addressed in moving forward.

* 38 pages, 5 figures
