Susan M. Resnick

from the iSTAGING consortium, for the ADNI

MetaVoxel: Joint Diffusion Modeling of Imaging and Clinical Metadata

Dec 12, 2025Abstract:Modern deep learning methods have achieved impressive results across tasks from disease classification, estimating continuous biomarkers, to generating realistic medical images. Most of these approaches are trained to model conditional distributions defined by a specific predictive direction with a specific set of input variables. We introduce MetaVoxel, a generative joint diffusion modeling framework that models the joint distribution over imaging data and clinical metadata by learning a single diffusion process spanning all variables. By capturing the joint distribution, MetaVoxel unifies tasks that traditionally require separate conditional models and supports flexible zero-shot inference using arbitrary subsets of inputs without task-specific retraining. Using more than 10,000 T1-weighted MRI scans paired with clinical metadata from nine datasets, we show that a single MetaVoxel model can perform image generation, age estimation, and sex prediction, achieving performance comparable to established task-specific baselines. Additional experiments highlight its capabilities for flexible inference. Together, these findings demonstrate that joint multimodal diffusion offers a promising direction for unifying medical AI models and enabling broader clinical applicability.

Pitfalls of defacing whole-head MRI: re-identification risk with diffusion models and compromised research potential

Jan 31, 2025Abstract:Defacing is often applied to head magnetic resonance image (MRI) datasets prior to public release to address privacy concerns. The alteration of facial and nearby voxels has provoked discussions about the true capability of these techniques to ensure privacy as well as their impact on downstream tasks. With advancements in deep generative models, the extent to which defacing can protect privacy is uncertain. Additionally, while the altered voxels are known to contain valuable anatomical information, their potential to support research beyond the anatomical regions directly affected by defacing remains uncertain. To evaluate these considerations, we develop a refacing pipeline that recovers faces in defaced head MRIs using cascaded diffusion probabilistic models (DPMs). The DPMs are trained on images from 180 subjects and tested on images from 484 unseen subjects, 469 of whom are from a different dataset. To assess whether the altered voxels in defacing contain universally useful information, we also predict computed tomography (CT)-derived skeletal muscle radiodensity from facial voxels in both defaced and original MRIs. The results show that DPMs can generate high-fidelity faces that resemble the original faces from defaced images, with surface distances to the original faces significantly smaller than those of a population average face (p < 0.05). This performance also generalizes well to previously unseen datasets. For skeletal muscle radiodensity predictions, using defaced images results in significantly weaker Spearman's rank correlation coefficients compared to using original images (p < 10-4). For shin muscle, the correlation is statistically significant (p < 0.05) when using original images but not statistically significant (p > 0.05) when any defacing method is applied, suggesting that defacing might not only fail to protect privacy but also eliminate valuable information.

Brain age identification from diffusion MRI synergistically predicts neurodegenerative disease

Oct 29, 2024

Abstract:Estimated brain age from magnetic resonance image (MRI) and its deviation from chronological age can provide early insights into potential neurodegenerative diseases, supporting early detection and implementation of prevention strategies. Diffusion MRI (dMRI), a widely used modality for brain age estimation, presents an opportunity to build an earlier biomarker for neurodegenerative disease prediction because it captures subtle microstructural changes that precede more perceptible macrostructural changes. However, the coexistence of macro- and micro-structural information in dMRI raises the question of whether current dMRI-based brain age estimation models are leveraging the intended microstructural information or if they inadvertently rely on the macrostructural information. To develop a microstructure-specific brain age, we propose a method for brain age identification from dMRI that minimizes the model's use of macrostructural information by non-rigidly registering all images to a standard template. Imaging data from 13,398 participants across 12 datasets were used for the training and evaluation. We compare our brain age models, trained with and without macrostructural information minimized, with an architecturally similar T1-weighted (T1w) MRI-based brain age model and two state-of-the-art T1w MRI-based brain age models that primarily use macrostructural information. We observe difference between our dMRI-based brain age and T1w MRI-based brain age across stages of neurodegeneration, with dMRI-based brain age being older than T1w MRI-based brain age in participants transitioning from cognitively normal (CN) to mild cognitive impairment (MCI), but younger in participants already diagnosed with Alzheimer's disease (AD). Approximately 4 years before MCI diagnosis, dMRI-based brain age yields better performance than T1w MRI-based brain ages in predicting transition from CN to MCI.

Predicting Age from White Matter Diffusivity with Residual Learning

Nov 06, 2023Abstract:Imaging findings inconsistent with those expected at specific chronological age ranges may serve as early indicators of neurological disorders and increased mortality risk. Estimation of chronological age, and deviations from expected results, from structural MRI data has become an important task for developing biomarkers that are sensitive to such deviations. Complementary to structural analysis, diffusion tensor imaging (DTI) has proven effective in identifying age-related microstructural changes within the brain white matter, thereby presenting itself as a promising additional modality for brain age prediction. Although early studies have sought to harness DTI's advantages for age estimation, there is no evidence that the success of this prediction is owed to the unique microstructural and diffusivity features that DTI provides, rather than the macrostructural features that are also available in DTI data. Therefore, we seek to develop white-matter-specific age estimation to capture deviations from normal white matter aging. Specifically, we deliberately disregard the macrostructural information when predicting age from DTI scalar images, using two distinct methods. The first method relies on extracting only microstructural features from regions of interest. The second applies 3D residual neural networks (ResNets) to learn features directly from the images, which are non-linearly registered and warped to a template to minimize macrostructural variations. When tested on unseen data, the first method yields mean absolute error (MAE) of 6.11 years for cognitively normal participants and MAE of 6.62 years for cognitively impaired participants, while the second method achieves MAE of 4.69 years for cognitively normal participants and MAE of 4.96 years for cognitively impaired participants. We find that the ResNet model captures subtler, non-macrostructural features for brain age prediction.

Rapid Brain Meninges Surface Reconstruction with Layer Topology Guarantee

Apr 13, 2023

Abstract:The meninges, located between the skull and brain, are composed of three membrane layers: the pia, the arachnoid, and the dura. Reconstruction of these layers can aid in studying volume differences between patients with neurodegenerative diseases and normal aging subjects. In this work, we use convolutional neural networks (CNNs) to reconstruct surfaces representing meningeal layer boundaries from magnetic resonance (MR) images. We first use the CNNs to predict the signed distance functions (SDFs) representing these surfaces while preserving their anatomical ordering. The marching cubes algorithm is then used to generate continuous surface representations; both the subarachnoid space (SAS) and the intracranial volume (ICV) are computed from these surfaces. The proposed method is compared to a state-of-the-art deformable model-based reconstruction method, and we show that our method can reconstruct smoother and more accurate surfaces using less computation time. Finally, we conduct experiments with volumetric analysis on both subjects with multiple sclerosis and healthy controls. For healthy and MS subjects, ICVs and SAS volumes are found to be significantly correlated to sex (p<0.01) and age (p<0.03) changes, respectively.

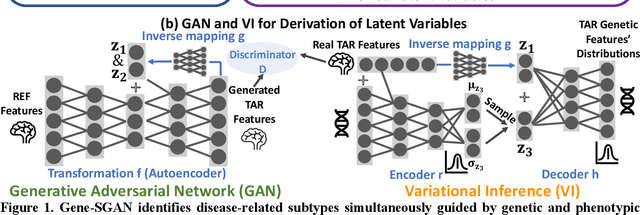

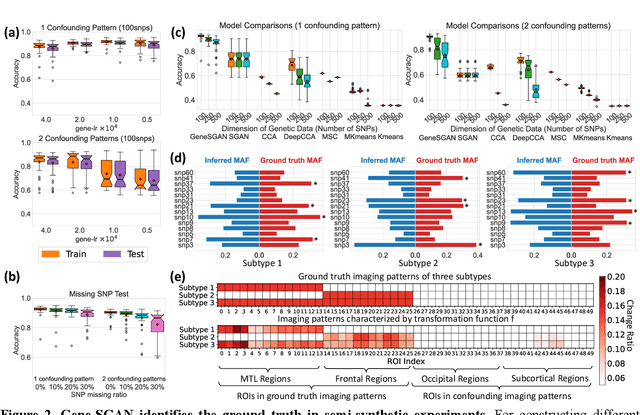

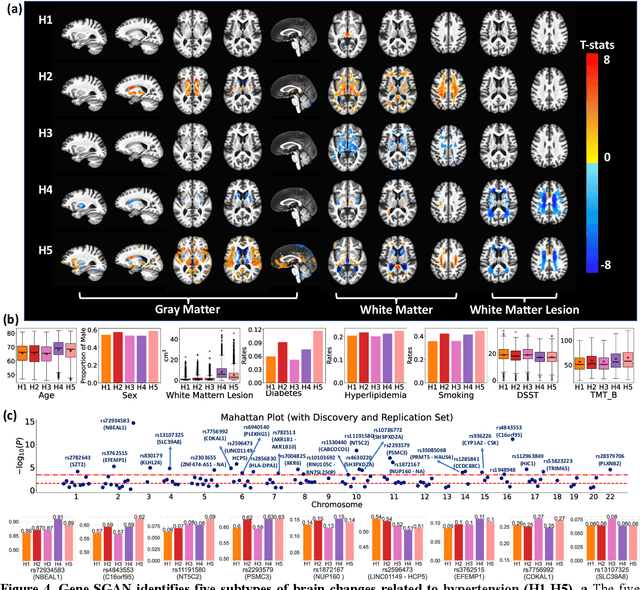

Gene-SGAN: a method for discovering disease subtypes with imaging and genetic signatures via multi-view weakly-supervised deep clustering

Jan 25, 2023

Abstract:Disease heterogeneity has been a critical challenge for precision diagnosis and treatment, especially in neurologic and neuropsychiatric diseases. Many diseases can display multiple distinct brain phenotypes across individuals, potentially reflecting disease subtypes that can be captured using MRI and machine learning methods. However, biological interpretability and treatment relevance are limited if the derived subtypes are not associated with genetic drivers or susceptibility factors. Herein, we describe Gene-SGAN - a multi-view, weakly-supervised deep clustering method - which dissects disease heterogeneity by jointly considering phenotypic and genetic data, thereby conferring genetic correlations to the disease subtypes and associated endophenotypic signatures. We first validate the generalizability, interpretability, and robustness of Gene-SGAN in semi-synthetic experiments. We then demonstrate its application to real multi-site datasets from 28,858 individuals, deriving subtypes of Alzheimer's disease and brain endophenotypes associated with hypertension, from MRI and SNP data. Derived brain phenotypes displayed significant differences in neuroanatomical patterns, genetic determinants, biological and clinical biomarkers, indicating potentially distinct underlying neuropathologic processes, genetic drivers, and susceptibility factors. Overall, Gene-SGAN is broadly applicable to disease subtyping and endophenotype discovery, and is herein tested on disease-related, genetically-driven neuroimaging phenotypes.

HACA3: A Unified Approach for Multi-site MR Image Harmonization

Dec 12, 2022Abstract:The lack of standardization is a prominent issue in magnetic resonance (MR) imaging. This often causes undesired contrast variations due to differences in hardware and acquisition parameters. In recent years, MR harmonization using image synthesis with disentanglement has been proposed to compensate for the undesired contrast variations. Despite the success of existing methods, we argue that three major improvements can be made. First, most existing methods are built upon the assumption that multi-contrast MR images of the same subject share the same anatomy. This assumption is questionable since different MR contrasts are specialized to highlight different anatomical features. Second, these methods often require a fixed set of MR contrasts for training (e.g., both Tw-weighted and T2-weighted images must be available), which limits their applicability. Third, existing methods generally are sensitive to imaging artifacts. In this paper, we present a novel approach, Harmonization with Attention-based Contrast, Anatomy, and Artifact Awareness (HACA3), to address these three issues. We first propose an anatomy fusion module that enables HACA3 to respect the anatomical differences between MR contrasts. HACA3 is also robust to imaging artifacts and can be trained and applied to any set of MR contrasts. Experiments show that HACA3 achieves state-of-the-art performance under multiple image quality metrics. We also demonstrate the applicability of HACA3 on downstream tasks with diverse MR datasets acquired from 21 sites with different field strengths, scanner platforms, and acquisition protocols.

Disentangling A Single MR Modality

May 10, 2022

Abstract:Disentangling anatomical and contrast information from medical images has gained attention recently, demonstrating benefits for various image analysis tasks. Current methods learn disentangled representations using either paired multi-modal images with the same underlying anatomy or auxiliary labels (e.g., manual delineations) to provide inductive bias for disentanglement. However, these requirements could significantly increase the time and cost in data collection and limit the applicability of these methods when such data are not available. Moreover, these methods generally do not guarantee disentanglement. In this paper, we present a novel framework that learns theoretically and practically superior disentanglement from single modality magnetic resonance images. Moreover, we propose a new information-based metric to quantitatively evaluate disentanglement. Comparisons over existing disentangling methods demonstrate that the proposed method achieves superior performance in both disentanglement and cross-domain image-to-image translation tasks.

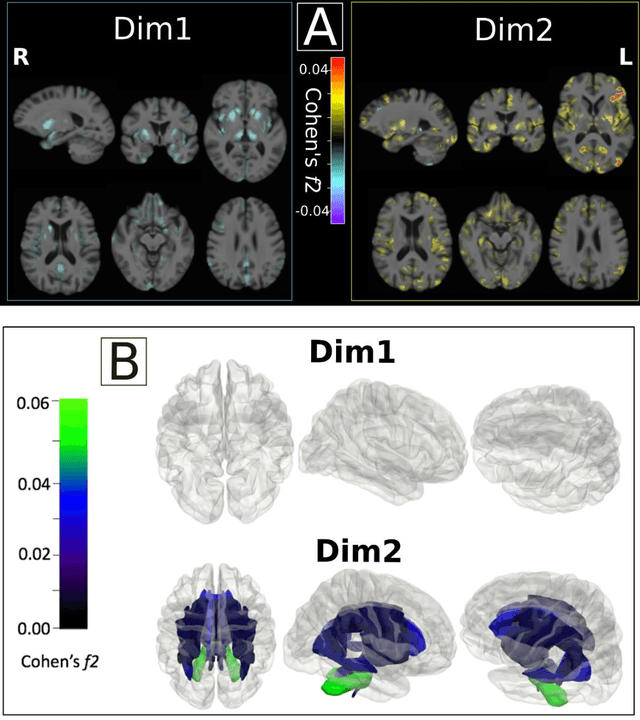

Multidimensional representations in late-life depression: convergence in neuroimaging, cognition, clinical symptomatology and genetics

Oct 25, 2021

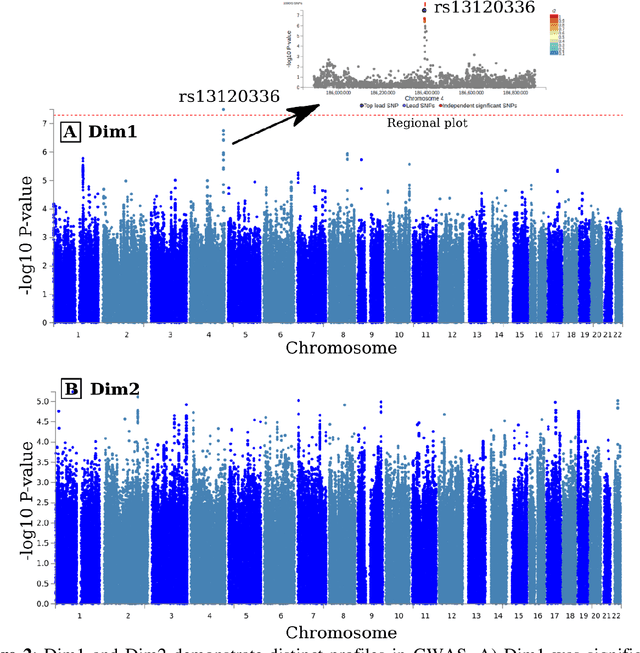

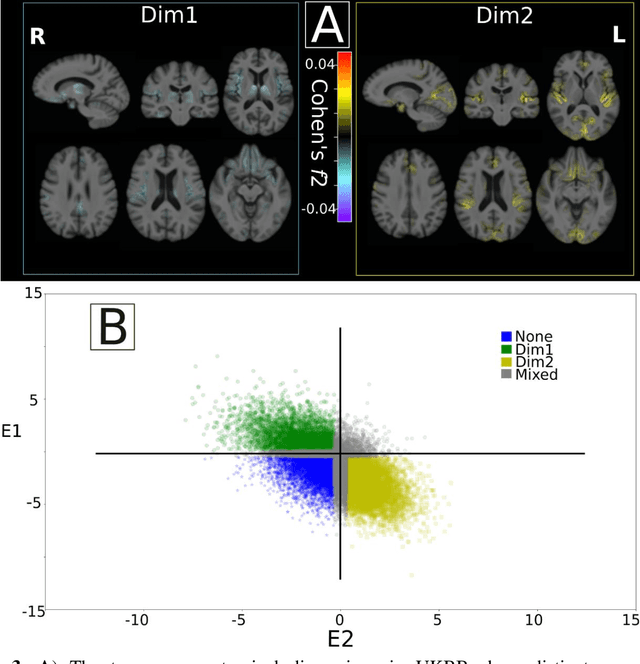

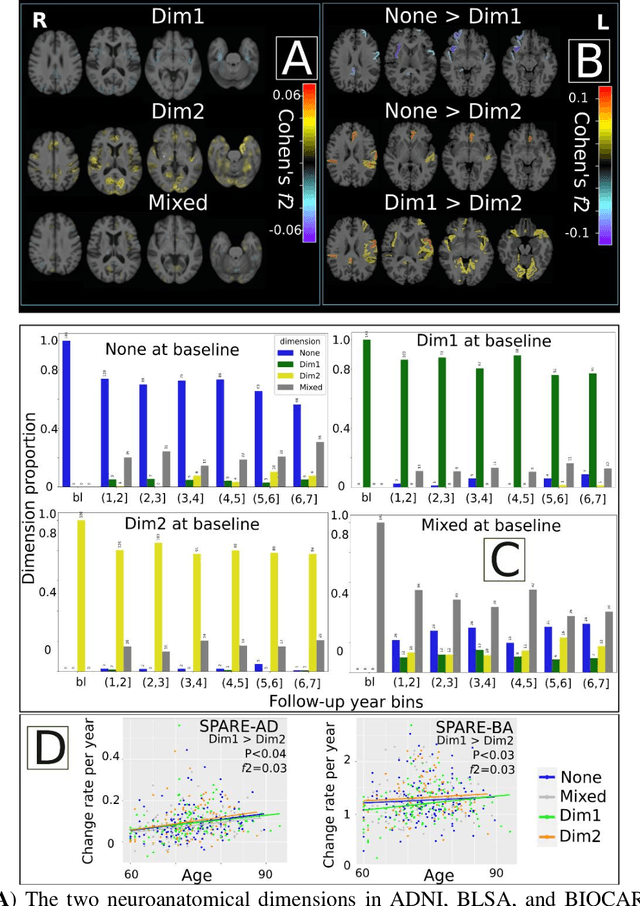

Abstract:Late-life depression (LLD) is characterized by considerable heterogeneity in clinical manifestation. Unraveling such heterogeneity would aid in elucidating etiological mechanisms and pave the road to precision and individualized medicine. We sought to delineate, cross-sectionally and longitudinally, disease-related heterogeneity in LLD linked to neuroanatomy, cognitive functioning, clinical symptomatology, and genetic profiles. Multimodal data from a multicentre sample (N=996) were analyzed. A semi-supervised clustering method (HYDRA) was applied to regional grey matter (GM) brain volumes to derive dimensional representations. Two dimensions were identified, which accounted for the LLD-related heterogeneity in voxel-wise GM maps, white matter (WM) fractional anisotropy (FA), neurocognitive functioning, clinical phenotype, and genetics. Dimension one (Dim1) demonstrated relatively preserved brain anatomy without WM disruptions relative to healthy controls. In contrast, dimension two (Dim2) showed widespread brain atrophy and WM integrity disruptions, along with cognitive impairment and higher depression severity. Moreover, one de novo independent genetic variant (rs13120336) was significantly associated with Dim 1 but not with Dim 2. Notably, the two dimensions demonstrated significant SNP-based heritability of 18-27% within the general population (N=12,518 in UKBB). Lastly, in a subset of individuals having longitudinal measurements, Dim2 demonstrated a more rapid longitudinal decrease in GM and brain age, and was more likely to progress to Alzheimers disease, compared to Dim1 (N=1,413 participants and 7,225 scans from ADNI, BLSA, and BIOCARD datasets).

Disentangling Alzheimer's disease neurodegeneration from typical brain aging using machine learning

Sep 08, 2021

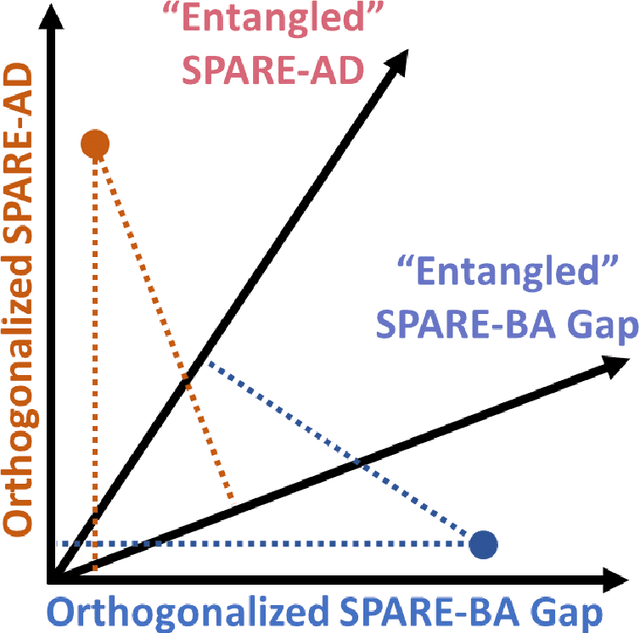

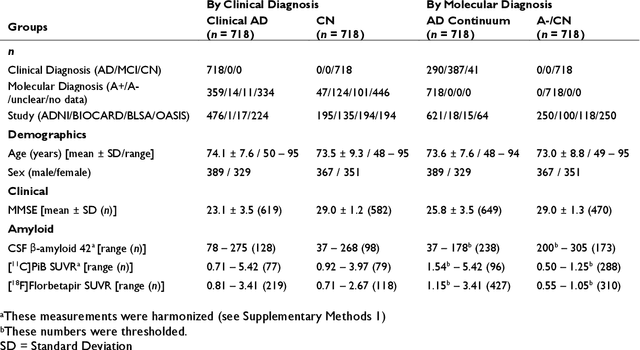

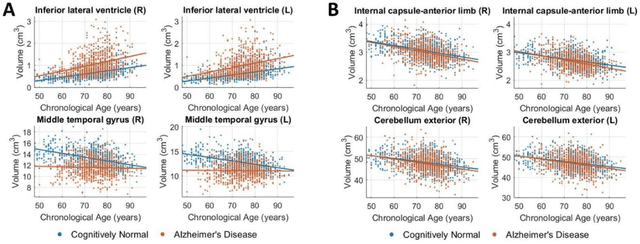

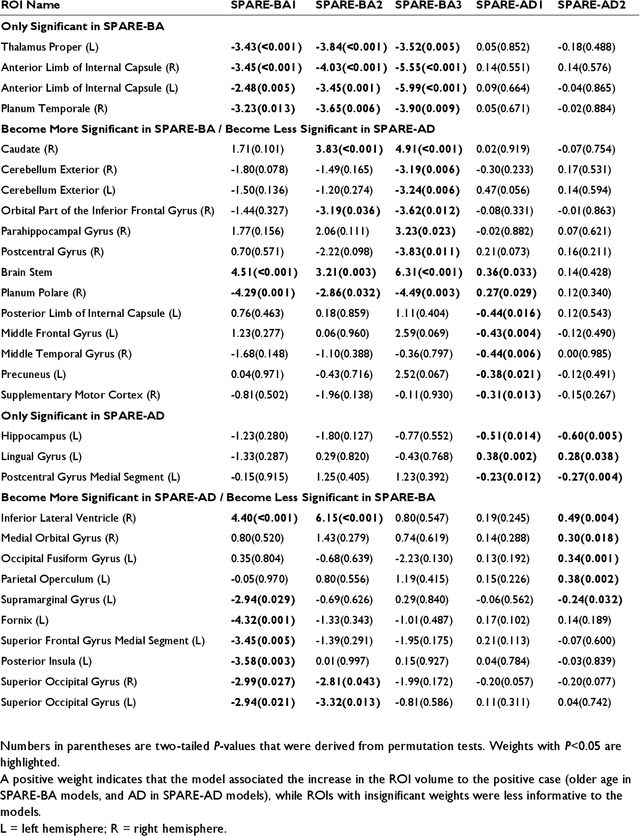

Abstract:Neuroimaging biomarkers that distinguish between typical brain aging and Alzheimer's disease (AD) are valuable for determining how much each contributes to cognitive decline. Machine learning models can derive multi-variate brain change patterns related to the two processes, including the SPARE-AD (Spatial Patterns of Atrophy for Recognition of Alzheimer's Disease) and SPARE-BA (of Brain Aging) investigated herein. However, substantial overlap between brain regions affected in the two processes confounds measuring them independently. We present a methodology toward disentangling the two. T1-weighted MRI images of 4,054 participants (48-95 years) with AD, mild cognitive impairment (MCI), or cognitively normal (CN) diagnoses from the iSTAGING (Imaging-based coordinate SysTem for AGIng and NeurodeGenerative diseases) consortium were analyzed. First, a subset of AD patients and CN adults were selected based purely on clinical diagnoses to train SPARE-BA1 (regression of age using CN individuals) and SPARE-AD1 (classification of CN versus AD). Second, analogous groups were selected based on clinical and molecular markers to train SPARE-BA2 and SPARE-AD2: amyloid-positive (A+) AD continuum group (consisting of A+AD, A+MCI, and A+ and tau-positive CN individuals) and amyloid-negative (A-) CN group. Finally, the combined group of the AD continuum and A-/CN individuals was used to train SPARE-BA3, with the intention to estimate brain age regardless of AD-related brain changes. Disentangled SPARE models derived brain patterns that were more specific to the two types of the brain changes. Correlation between the SPARE-BA and SPARE-AD was significantly reduced. Correlation of disentangled SPARE-AD was non-inferior to the molecular measurements and to the number of APOE4 alleles, but was less to AD-related psychometric test scores, suggesting contribution of advanced brain aging to these scores.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge