Sen He

Bridging Structure and Appearance: Topological Features for Robust Self-Supervised Segmentation

Dec 30, 2025Abstract:Self-supervised semantic segmentation methods often fail when faced with appearance ambiguities. We argue that this is due to an over-reliance on unstable, appearance-based features such as shadows, glare, and local textures. We propose \textbf{GASeg}, a novel framework that bridges appearance and geometry by leveraging stable topological information. The core of our method is Differentiable Box-Counting (\textbf{DBC}) module, which quantifies multi-scale topological statistics from two parallel streams: geometric-based features and appearance-based features. To force the model to learn these stable structural representations, we introduce Topological Augmentation (\textbf{TopoAug}), an adversarial strategy that simulates real-world ambiguities by applying morphological operators to the input images. A multi-objective loss, \textbf{GALoss}, then explicitly enforces cross-modal alignment between geometric-based and appearance-based features. Extensive experiments demonstrate that GASeg achieves state-of-the-art performance on four benchmarks, including COCO-Stuff, Cityscapes, and PASCAL, validating our approach of bridging geometry and appearance via topological information.

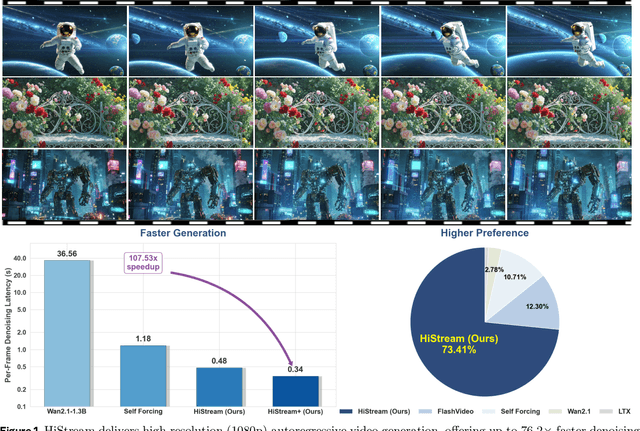

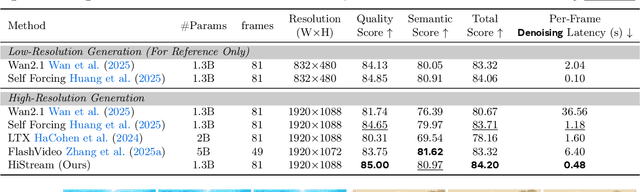

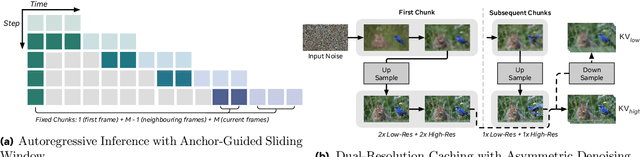

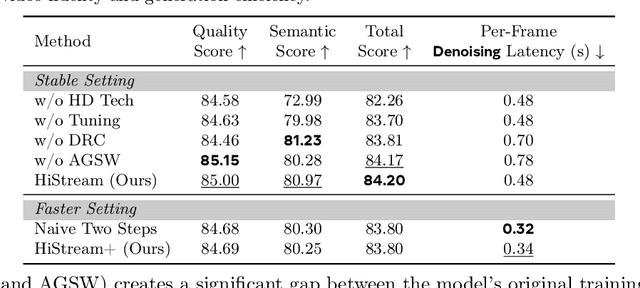

HiStream: Efficient High-Resolution Video Generation via Redundancy-Eliminated Streaming

Dec 24, 2025

Abstract:High-resolution video generation, while crucial for digital media and film, is computationally bottlenecked by the quadratic complexity of diffusion models, making practical inference infeasible. To address this, we introduce HiStream, an efficient autoregressive framework that systematically reduces redundancy across three axes: i) Spatial Compression: denoising at low resolution before refining at high resolution with cached features; ii) Temporal Compression: a chunk-by-chunk strategy with a fixed-size anchor cache, ensuring stable inference speed; and iii) Timestep Compression: applying fewer denoising steps to subsequent, cache-conditioned chunks. On 1080p benchmarks, our primary HiStream model (i+ii) achieves state-of-the-art visual quality while demonstrating up to 76.2x faster denoising compared to the Wan2.1 baseline and negligible quality loss. Our faster variant, HiStream+, applies all three optimizations (i+ii+iii), achieving a 107.5x acceleration over the baseline, offering a compelling trade-off between speed and quality, thereby making high-resolution video generation both practical and scalable.

OneStory: Coherent Multi-Shot Video Generation with Adaptive Memory

Dec 08, 2025

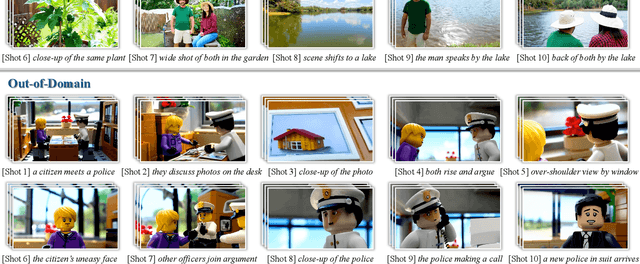

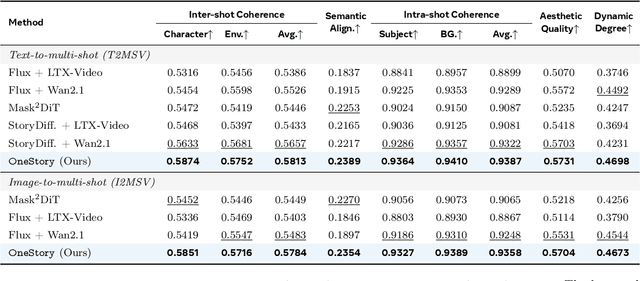

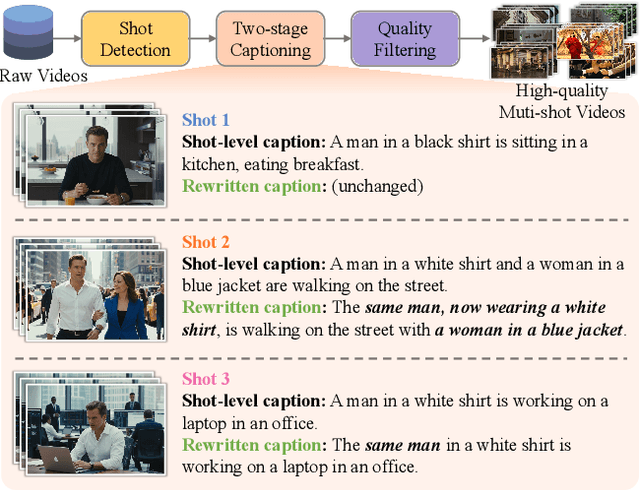

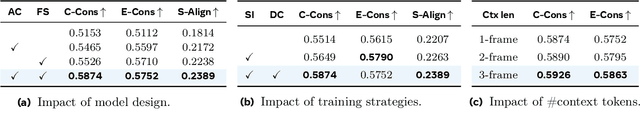

Abstract:Storytelling in real-world videos often unfolds through multiple shots -- discontinuous yet semantically connected clips that together convey a coherent narrative. However, existing multi-shot video generation (MSV) methods struggle to effectively model long-range cross-shot context, as they rely on limited temporal windows or single keyframe conditioning, leading to degraded performance under complex narratives. In this work, we propose OneStory, enabling global yet compact cross-shot context modeling for consistent and scalable narrative generation. OneStory reformulates MSV as a next-shot generation task, enabling autoregressive shot synthesis while leveraging pretrained image-to-video (I2V) models for strong visual conditioning. We introduce two key modules: a Frame Selection module that constructs a semantically-relevant global memory based on informative frames from prior shots, and an Adaptive Conditioner that performs importance-guided patchification to generate compact context for direct conditioning. We further curate a high-quality multi-shot dataset with referential captions to mirror real-world storytelling patterns, and design effective training strategies under the next-shot paradigm. Finetuned from a pretrained I2V model on our curated 60K dataset, OneStory achieves state-of-the-art narrative coherence across diverse and complex scenes in both text- and image-conditioned settings, enabling controllable and immersive long-form video storytelling.

Mixture of States: Routing Token-Level Dynamics for Multimodal Generation

Nov 15, 2025Abstract:We introduce MoS (Mixture of States), a novel fusion paradigm for multimodal diffusion models that merges modalities using flexible, state-based interactions. The core of MoS is a learnable, token-wise router that creates denoising timestep- and input-dependent interactions between modalities' hidden states, precisely aligning token-level features with the diffusion trajectory. This router sparsely selects the top-$k$ hidden states and is trained with an $ε$-greedy strategy, efficiently selecting contextual features with minimal learnable parameters and negligible computational overhead. We validate our design with text-to-image generation (MoS-Image) and editing (MoS-Editing), which achieve state-of-the-art results. With only 3B to 5B parameters, our models match or surpass counterparts up to $4\times$ larger. These findings establish MoS as a flexible and compute-efficient paradigm for scaling multimodal diffusion models.

DynamicLip: Shape-Independent Continuous Authentication via Lip Articulator Dynamics

Jan 02, 2025Abstract:Biometrics authentication has become increasingly popular due to its security and convenience; however, traditional biometrics are becoming less desirable in scenarios such as new mobile devices, Virtual Reality, and Smart Vehicles. For example, while face authentication is widely used, it suffers from significant privacy concerns. The collection of complete facial data makes it less desirable for privacy-sensitive applications. Lip authentication, on the other hand, has emerged as a promising biometrics method. However, existing lip-based authentication methods heavily depend on static lip shape when the mouth is closed, which can be less robust due to lip shape dynamic motion and can barely work when the user is speaking. In this paper, we revisit the nature of lip biometrics and extract shape-independent features from the lips. We study the dynamic characteristics of lip biometrics based on articulator motion. Building on the knowledge, we propose a system for shape-independent continuous authentication via lip articulator dynamics. This system enables robust, shape-independent and continuous authentication, making it particularly suitable for scenarios with high security and privacy requirements. We conducted comprehensive experiments in different environments and attack scenarios and collected a dataset of 50 subjects. The results indicate that our system achieves an overall accuracy of 99.06% and demonstrates robustness under advanced mimic attacks and AI deepfake attacks, making it a viable solution for continuous biometric authentication in various applications.

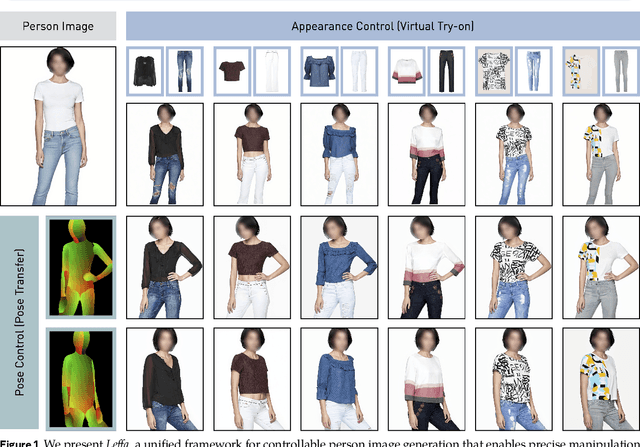

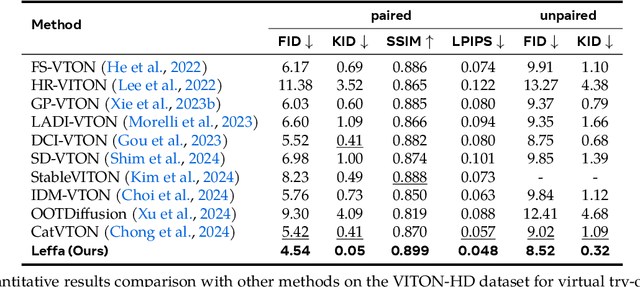

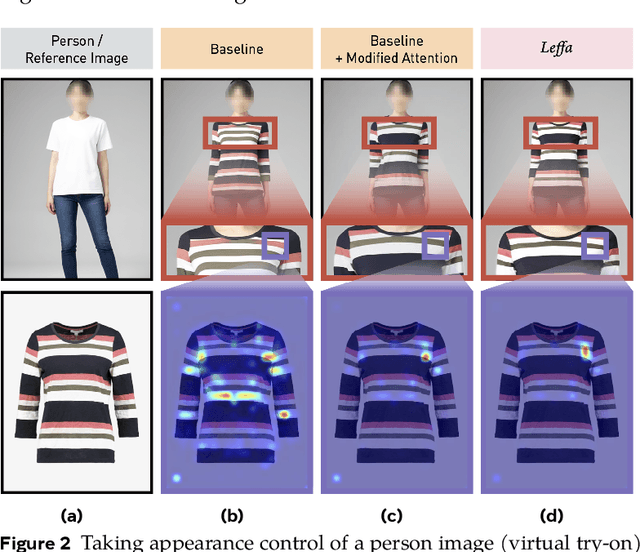

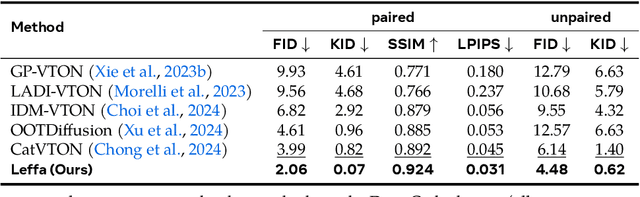

Learning Flow Fields in Attention for Controllable Person Image Generation

Dec 12, 2024

Abstract:Controllable person image generation aims to generate a person image conditioned on reference images, allowing precise control over the person's appearance or pose. However, prior methods often distort fine-grained textural details from the reference image, despite achieving high overall image quality. We attribute these distortions to inadequate attention to corresponding regions in the reference image. To address this, we thereby propose learning flow fields in attention (Leffa), which explicitly guides the target query to attend to the correct reference key in the attention layer during training. Specifically, it is realized via a regularization loss on top of the attention map within a diffusion-based baseline. Our extensive experiments show that Leffa achieves state-of-the-art performance in controlling appearance (virtual try-on) and pose (pose transfer), significantly reducing fine-grained detail distortion while maintaining high image quality. Additionally, we show that our loss is model-agnostic and can be used to improve the performance of other diffusion models.

LAA-Net: A Physical-prior-knowledge Based Network for Robust Nighttime Depth Estimation

Dec 05, 2024

Abstract:Existing self-supervised monocular depth estimation (MDE) models attempt to improve nighttime performance by using GANs to transfer nighttime images into their daytime versions. However, this can introduce inconsistencies due to the complexities of real-world daytime lighting variations, which may finally lead to inaccurate estimation results. To address this issue, we leverage physical-prior-knowledge about light wavelength and light attenuation during nighttime. Specifically, our model, Light-Attenuation-Aware Network (LAA-Net), incorporates physical insights from Rayleigh scattering theory for robust nighttime depth estimation: LAA-Net is trained based on red channel values because red light preserves more information under nighttime scenarios due to its longer wavelength. Additionally, based on Beer-Lambert law, we introduce Red Channel Attenuation (RCA) loss to guide LAA-Net's training. Experiments on the RobotCar-Night, nuScenes-Night, RobotCar-Day, and KITTI datasets demonstrate that our model outperforms SOTA models.

Adaptive Caching for Faster Video Generation with Diffusion Transformers

Nov 04, 2024

Abstract:Generating temporally-consistent high-fidelity videos can be computationally expensive, especially over longer temporal spans. More-recent Diffusion Transformers (DiTs) -- despite making significant headway in this context -- have only heightened such challenges as they rely on larger models and heavier attention mechanisms, resulting in slower inference speeds. In this paper, we introduce a training-free method to accelerate video DiTs, termed Adaptive Caching (AdaCache), which is motivated by the fact that "not all videos are created equal": meaning, some videos require fewer denoising steps to attain a reasonable quality than others. Building on this, we not only cache computations through the diffusion process, but also devise a caching schedule tailored to each video generation, maximizing the quality-latency trade-off. We further introduce a Motion Regularization (MoReg) scheme to utilize video information within AdaCache, essentially controlling the compute allocation based on motion content. Altogether, our plug-and-play contributions grant significant inference speedups (e.g. up to 4.7x on Open-Sora 720p - 2s video generation) without sacrificing the generation quality, across multiple video DiT baselines.

MarDini: Masked Autoregressive Diffusion for Video Generation at Scale

Oct 26, 2024

Abstract:We introduce MarDini, a new family of video diffusion models that integrate the advantages of masked auto-regression (MAR) into a unified diffusion model (DM) framework. Here, MAR handles temporal planning, while DM focuses on spatial generation in an asymmetric network design: i) a MAR-based planning model containing most of the parameters generates planning signals for each masked frame using low-resolution input; ii) a lightweight generation model uses these signals to produce high-resolution frames via diffusion de-noising. MarDini's MAR enables video generation conditioned on any number of masked frames at any frame positions: a single model can handle video interpolation (e.g., masking middle frames), image-to-video generation (e.g., masking from the second frame onward), and video expansion (e.g., masking half the frames). The efficient design allocates most of the computational resources to the low-resolution planning model, making computationally expensive but important spatio-temporal attention feasible at scale. MarDini sets a new state-of-the-art for video interpolation; meanwhile, within few inference steps, it efficiently generates videos on par with those of much more expensive advanced image-to-video models.

Hyper-VolTran: Fast and Generalizable One-Shot Image to 3D Object Structure via HyperNetworks

Jan 05, 2024

Abstract:Solving image-to-3D from a single view is an ill-posed problem, and current neural reconstruction methods addressing it through diffusion models still rely on scene-specific optimization, constraining their generalization capability. To overcome the limitations of existing approaches regarding generalization and consistency, we introduce a novel neural rendering technique. Our approach employs the signed distance function as the surface representation and incorporates generalizable priors through geometry-encoding volumes and HyperNetworks. Specifically, our method builds neural encoding volumes from generated multi-view inputs. We adjust the weights of the SDF network conditioned on an input image at test-time to allow model adaptation to novel scenes in a feed-forward manner via HyperNetworks. To mitigate artifacts derived from the synthesized views, we propose the use of a volume transformer module to improve the aggregation of image features instead of processing each viewpoint separately. Through our proposed method, dubbed as Hyper-VolTran, we avoid the bottleneck of scene-specific optimization and maintain consistency across the images generated from multiple viewpoints. Our experiments show the advantages of our proposed approach with consistent results and rapid generation.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge