Bodo Rosenhahn

From Gameplay Traces to Game Mechanics: Causal Induction with Large Language Models

Jan 30, 2026

Abstract:Deep learning agents can achieve high performance in complex game domains, often without understanding the underlying causal game mechanics. To address this, we investigate Causal Induction: the ability to infer governing laws from observational data, by tasking Large Language Models (LLMs) with reverse-engineering Video Game Description Language (VGDL) rules from gameplay traces. To reduce redundancy, we select nine representative games from the General Video Game AI (GVGAI) framework using semantic embeddings and clustering. We compare two approaches to VGDL generation: direct code generation from observations, and a two-stage method that first infers a structural causal model (SCM) and then translates it into VGDL. Both approaches are evaluated across multiple prompting strategies and controlled context regimes, varying the amount and form of information provided to the model, from raw gameplay observations alone to partial VGDL specifications. Results show that the SCM-based approach more often produces VGDL descriptions closer to the ground truth than direct generation, achieving preference win rates of up to 81% in blind evaluations and yielding fewer logically inconsistent rules. These learned SCMs can be used for downstream use cases such as causal reinforcement learning, interpretable agents, and procedurally generating novel but logically consistent games.

Naga: Vedic Encoding for Deep State Space Models

Nov 17, 2025

Abstract:This paper presents Naga, a deep State Space Model (SSM) encoding approach inspired by structural concepts from Vedic mathematics. The proposed method introduces a bidirectional representation for time series by jointly processing forward and time-reversed input sequences. These representations are then combined through an element-wise (Hadamard) interaction, resulting in a Vedic-inspired encoding that enhances the model's ability to capture temporal dependencies across distant time steps. We evaluate Naga on multiple long-term time series forecasting (LTSF) benchmarks, including ETTh1, ETTh2, ETTm1, ETTm2, Weather, Traffic, and ILI. The experimental results show that Naga outperforms 28 current state-of-the-art models and demonstrates improved efficiency compared to existing deep SSM-based approaches. The findings suggest that incorporating structured, Vedic-inspired decomposition can provide an interpretable and computationally efficient alternative for long-range sequence modeling.
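The forward/time-reversed processing and Hadamard combination described in this abstract can be illustrated with a minimal sketch. It is not the Naga architecture: the scalar recurrence `linear_scan` is a hypothetical stand-in for a deep SSM layer, the parameters `a` and `b` are illustrative, and re-aligning the backward output to the original time order before multiplying is an assumption the abstract does not confirm.

```python
def linear_scan(x, a=0.5, b=1.0):
    """Toy scalar state-space recurrence h_t = a*h_{t-1} + b*x_t.

    Hypothetical stand-in for a deep SSM layer; a and b are illustrative.
    """
    h = 0.0
    out = []
    for value in x:
        h = a * h + b * value
        out.append(h)
    return out


def bidirectional_hadamard(x, a=0.5, b=1.0):
    """Encode x forward and time-reversed, then combine element-wise."""
    forward = linear_scan(x, a, b)
    # Scan the reversed sequence, then flip the result back so that
    # index t of both encodings refers to the same time step (assumption).
    backward = linear_scan(x[::-1], a, b)[::-1]
    # Hadamard (element-wise) product of the two encodings.
    return [f * g for f, g in zip(forward, backward)]


if __name__ == "__main__":
    print(bidirectional_hadamard([1.0, 2.0]))
```

In this toy version each output position mixes a summary of the past (forward scan) with a summary of the future (backward scan), which is the intuition behind combining both directions multiplicatively.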

Discovering State Equivalences in UCT Search Trees By Action Pruning

Oct 30, 2025

Abstract:One approach to enhance Monte Carlo Tree Search (MCTS) is to improve its sample efficiency by grouping/abstracting states or state-action pairs and sharing statistics within a group. Though state-action pair abstractions are mostly easy to find in algorithms such as On the Go Abstractions in Upper Confidence bounds applied to Trees (OGA-UCT), almost no state abstractions are found in either noisy or large action space settings due to constraining conditions. We provide theoretical and empirical evidence for this claim, and we slightly alleviate this state abstraction problem by proposing a weaker state abstraction condition that trades a minor loss in accuracy for finding many more abstractions. We name this technique Ideal Pruning Abstractions in UCT (IPA-UCT); in our experiments it outperforms OGA-UCT (and any of its derivatives) across a large range of test domains and iteration budgets. IPA-UCT uses an abstraction framework, which we name IPA, that differs from the Abstraction of State-Action Pairs (ASAP) framework used by OGA-UCT. Furthermore, we show that both IPA and ASAP are special cases of a more general framework that we call p-ASAP, which itself is a special case of the ASASAP framework.
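To make "sharing statistics within a group" concrete, here is a minimal sketch of the standard UCB1 selection score evaluated on pooled statistics. It is neither OGA-UCT nor IPA-UCT: how those algorithms detect abstractions is not described in the abstract, so the grouping is simply given as input, and the helper names `pooled_stats` and `ucb1` are hypothetical.

```python
import math


def pooled_stats(group):
    """Sum returns and visit counts over nodes assumed to be equivalent.

    group: list of (total_return, visits) pairs, one per node in the group.
    """
    total_return = sum(ret for ret, _ in group)
    visits = sum(n for _, n in group)
    return total_return, visits


def ucb1(total_return, visits, parent_visits, c=math.sqrt(2)):
    """Standard UCB1 score: mean return plus an exploration bonus."""
    if visits == 0:
        return math.inf  # unvisited actions are tried first
    mean = total_return / visits
    return mean + c * math.sqrt(math.log(parent_visits) / visits)


if __name__ == "__main__":
    # Two state-action pairs assumed to belong to the same abstract group.
    ret, n = pooled_stats([(3.0, 4), (1.0, 2)])
    print(ucb1(ret, n, parent_visits=20))
```

Pooling increases the effective visit count of each group member, which shrinks the exploration bonus and sharpens the mean estimate; this is the sample-efficiency gain that abstractions aim for.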

Neural Guided Sampling for Quantum Circuit Optimization

Oct 14, 2025

Abstract:Translating a general quantum circuit onto a specific hardware topology with a reduced set of available gates, also known as transpilation, comes with a substantial increase in the length of the equivalent circuit. Due to decoherence, the quality of the computational outcome can degrade seriously with increasing circuit length. Thus, there is major interest in reducing a quantum circuit to an equivalent circuit whose gate count is as low as possible. One way to address efficient transpilation is based on approaches known from stochastic optimization, e.g. random sampling and token replacement strategies. Here, a core challenge is that these methods can suffer from poor sampling efficiency, causing long and energy-consuming optimization times. As a remedy, we propose in this work 2D neural guided sampling. Given a 2D representation of a quantum circuit, a neural network predicts groups of gates in the quantum circuit that are likely reducible. This yields a sampling prior that can heavily reduce the compute time for quantum circuit reduction. In several experiments, we demonstrate that our method is superior to results obtained from different Qiskit or BQSKit optimization levels.

Benchmarking M-LTSF: Frequency and Noise-Based Evaluation of Multivariate Long Time Series Forecasting Models

Oct 06, 2025

Abstract:Understanding the robustness of deep learning models for multivariate long-term time series forecasting (M-LTSF) remains challenging, as evaluations typically rely on real-world datasets with unknown noise properties. We propose a simulation-based evaluation framework that generates parameterizable synthetic datasets, where each dataset instance corresponds to a different configuration of signal components, noise types, signal-to-noise ratios, and frequency characteristics. These configurable components aim to model real-world multivariate time series data without the ambiguity of unknown noise. This framework enables fine-grained, systematic evaluation of M-LTSF models under controlled and diverse scenarios. We benchmark four representative architectures: S-Mamba (state-space), iTransformer (transformer-based), R-Linear (linear), and Autoformer (decomposition-based). Our analysis reveals that all models degrade severely when lookback windows cannot capture complete periods of seasonal patterns in the data. S-Mamba and Autoformer perform best on sawtooth patterns, while R-Linear and iTransformer favor sinusoidal signals. White and Brownian noise universally degrade performance as the signal-to-noise ratio decreases, while S-Mamba shows a specific vulnerability to trend noise and iTransformer to seasonal noise. Further spectral analysis shows that S-Mamba and iTransformer achieve superior frequency reconstruction. This controlled approach, based on our synthetic and principle-driven testbed, offers deeper insights into model-specific strengths and limitations through the aggregation of MSE scores and provides concrete guidance for model selection based on signal characteristics and noise conditions.
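The idea of a parameterizable synthetic series with a controlled signal-to-noise ratio can be sketched as follows. This is an illustrative sketch, not the benchmark's generator: the function names, the single-channel setup, and the choice to rescale white Gaussian noise so that the empirical SNR matches the target exactly are all assumptions.

```python
import math
import random


def sine(n, period, amplitude=1.0):
    """Sinusoidal seasonal component with the given period (in steps)."""
    return [amplitude * math.sin(2 * math.pi * t / period) for t in range(n)]


def sawtooth(n, period, amplitude=1.0):
    """Sawtooth seasonal component rising from -amplitude within each period."""
    return [amplitude * (2 * ((t % period) / period) - 1) for t in range(n)]


def power(x):
    """Mean squared value of a series."""
    return sum(v * v for v in x) / len(x)


def add_white_noise(signal, snr_db, rng):
    """Add white Gaussian noise scaled to a target SNR in decibels."""
    noise = [rng.gauss(0.0, 1.0) for _ in signal]
    # SNR_dB = 10 * log10(P_signal / P_noise)  =>  P_noise = P_signal / 10^(SNR_dB/10)
    target_noise_power = power(signal) / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / power(noise))
    return [s + scale * e for s, e in zip(signal, noise)]


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    clean = sine(200, period=20)
    noisy = add_white_noise(clean, snr_db=10.0, rng=rng)
    residual = [a - b for a, b in zip(noisy, clean)]
    print(10 * math.log10(power(clean) / power(residual)))
```

Because the clean signal and the noise are generated separately, the true period and SNR of every instance are known, which is what allows a lookback window to be compared against the seasonal period in a controlled way.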

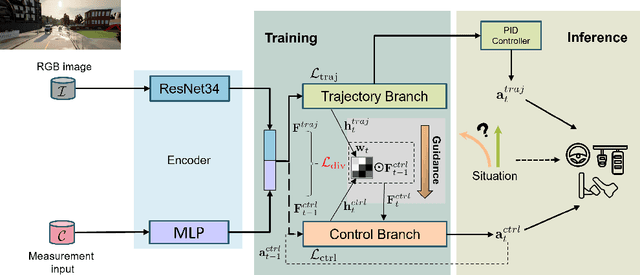

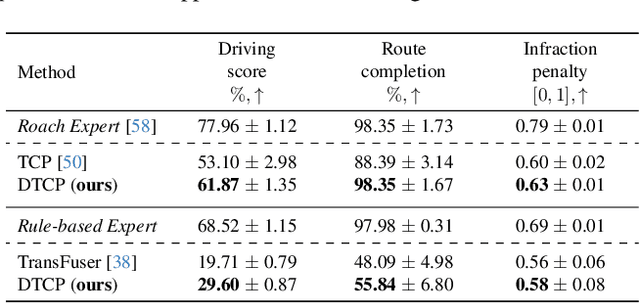

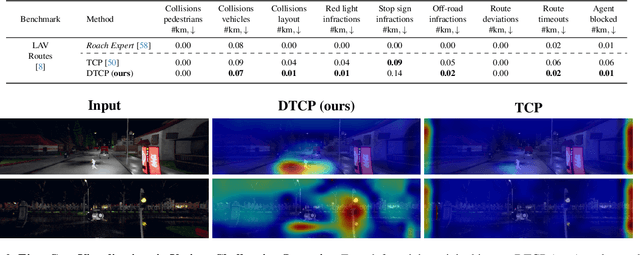

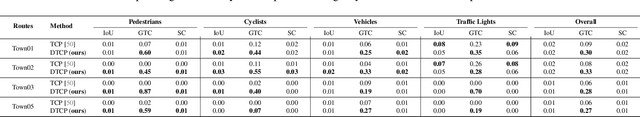

Interpretable Decision-Making for End-to-End Autonomous Driving

Aug 26, 2025

Abstract:Trustworthy AI is mandatory for the broad deployment of autonomous vehicles. Although end-to-end approaches derive control commands directly from raw data, interpreting these decisions remains challenging, especially in complex urban scenarios. This is mainly attributed to very deep neural networks with non-linear decision boundaries, making it challenging to grasp the logic behind AI-driven decisions. This paper presents a method to enhance interpretability while optimizing control commands in autonomous driving. To address this, we propose loss functions that promote the interpretability of our model by generating sparse and localized feature maps. The feature activations allow us to explain which image regions contribute to the predicted control command. We conduct comprehensive ablation studies on the feature extraction step and validate our method on the CARLA benchmarks. We also demonstrate that our approach improves interpretability, which correlates with reducing infractions, yielding a safer, high-performance driving model. Notably, our monocular, non-ensemble model surpasses the top-performing approaches from the CARLA Leaderboard by achieving lower infraction scores and the highest route completion rate, all while ensuring interpretability.

UncertainSAM: Fast and Efficient Uncertainty Quantification of the Segment Anything Model

May 08, 2025Abstract:The introduction of the Segment Anything Model (SAM) has paved the way for numerous semantic segmentation applications. For several tasks, quantifying the uncertainty of SAM is of particular interest. However, the ambiguous nature of the class-agnostic foundation model SAM challenges current uncertainty quantification (UQ) approaches. This paper presents a theoretically motivated uncertainty quantification model based on a Bayesian entropy formulation jointly respecting aleatoric, epistemic, and the newly introduced task uncertainty. We use this formulation to train USAM, a lightweight post-hoc UQ method. Our model traces the root of uncertainty back to under-parameterised models, insufficient prompts or image ambiguities. Our proposed deterministic USAM demonstrates superior predictive capabilities on the SA-V, MOSE, ADE20k, DAVIS, and COCO datasets, offering a computationally cheap and easy-to-use UQ alternative that can support user-prompting, enhance semi-supervised pipelines, or balance the tradeoff between accuracy and cost efficiency.

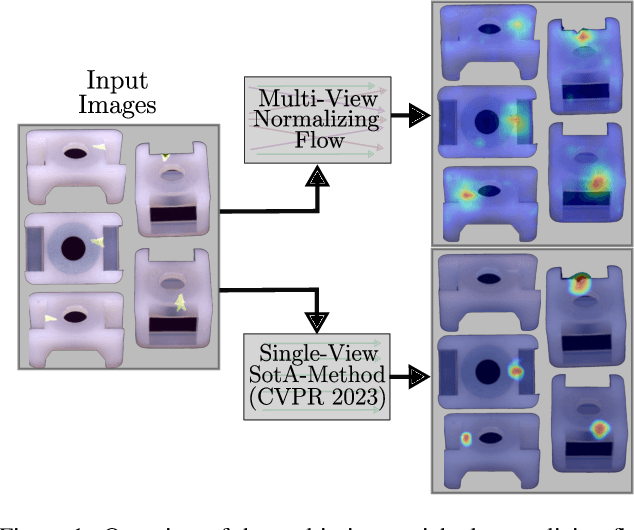

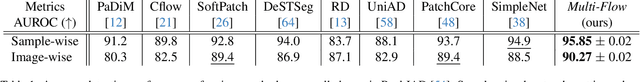

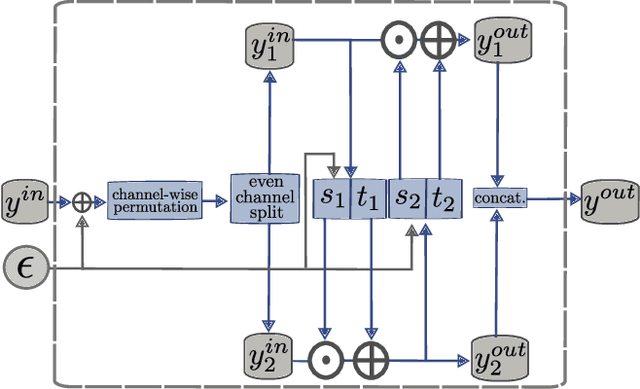

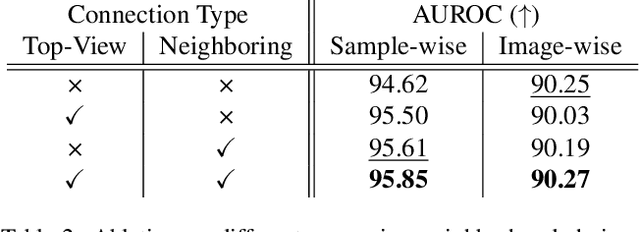

Multi-Flow: Multi-View-Enriched Normalizing Flows for Industrial Anomaly Detection

Apr 04, 2025

Abstract:With more well-performing anomaly detection methods proposed, many of the single-view tasks have been solved to a relatively good degree. However, real-world production scenarios often involve complex industrial products, whose properties may not be fully captured by one single image. While normalizing flow based approaches already work well in single-camera scenarios, they currently do not make use of the priors in multi-view data. We aim to bridge this gap by using these flow-based models as a strong foundation and propose Multi-Flow, a novel multi-view anomaly detection method. Multi-Flow makes use of a novel multi-view architecture, whose exact likelihood estimation is enhanced by fusing information across different views. For this, we propose a new cross-view message-passing scheme, letting information flow between neighboring views. We empirically validate it on the real-world multi-view data set Real-IAD and reach a new state-of-the-art, surpassing current baselines in both image-wise and sample-wise anomaly detection tasks.

S4D-Bio Audio Monitoring of Bone Cement Disintegration in Pulsating Fluid Jet Surgery under Laboratory Conditions

Mar 04, 2025

Abstract:This study investigates a pulsating fluid jet as a novel, precise, minimally invasive and cold technique for bone cement removal. We utilize the pulsating fluid jet device to remove bone cement from samples designed to mimic clinical conditions. The effectiveness of long nozzles was tested to enable minimally invasive procedures. Audio signal monitoring, complemented by the State Space Model (SSM) S4D-Bio, was employed to optimize the fluid jet parameters dynamically, addressing challenges like visibility obstruction from splashing. Within our experiments, we generate a comprehensive dataset correlating various process parameters and their equivalent audio signals to material erosion. The use of SSMs yields precise control over the predictive erosion process, achieving 98.93% accuracy. The study demonstrates, on the one hand, that the pulsating fluid jet device, coupled with advanced audio monitoring techniques, is a highly effective tool for precise bone cement removal. On the other hand, this study presents the first application of SSMs in biomedical surgery technology, marking a significant advancement in the application. This research significantly advances biomedical engineering by integrating machine learning with the pulsating fluid jet as a surgical technology, offering a novel, minimally invasive, cold and adaptive approach for bone cement removal in orthopedic applications.

S4ConvD: Adaptive Scaling and Frequency Adjustment for Energy-Efficient Sensor Networks in Smart Buildings

Feb 28, 2025

Abstract:Predicting energy consumption in smart buildings is challenging due to dependencies in sensor data and the variability of environmental conditions. We introduce S4ConvD, a novel convolutional variant of Deep State Space Models (Deep-SSMs) that minimizes reliance on extensive preprocessing steps. S4ConvD is designed to optimize runtime in resource-constrained environments. By implementing adaptive scaling and frequency adjustments, the model is shown to capture complex temporal patterns in building energy dynamics. Experiments on the ASHRAE Great Energy Predictor III dataset reveal that S4ConvD outperforms current benchmarks. Additionally, S4ConvD benefits from significant improvements in GPU runtime through the use of Block Tiling optimization techniques. Thus, S4ConvD has the potential for practical deployment in real-time energy modeling. Furthermore, the complete codebase and dataset are accessible on GitHub, fostering open-source contributions and facilitating further research. Our method also promotes resource-efficient model execution, enhancing both energy forecasting and the potential integration of renewable energy sources into smart grid systems.
