Rebecca Fahrig

Rigid and non-rigid motion compensation in weight-bearing cone-beam CT of the knee using inertial measurements

Feb 24, 2021

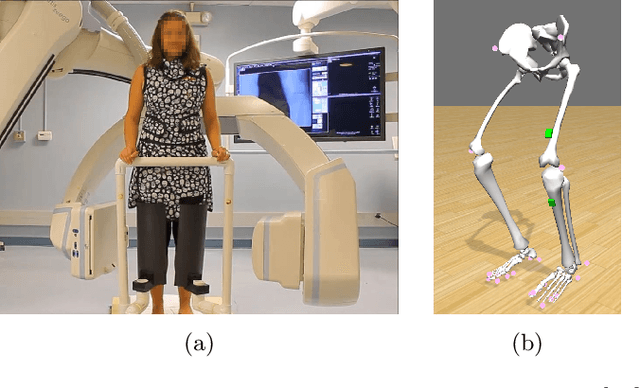

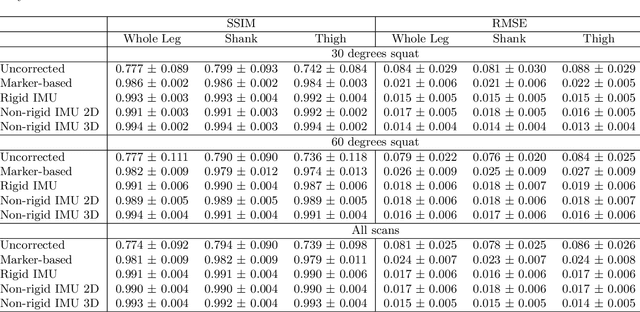

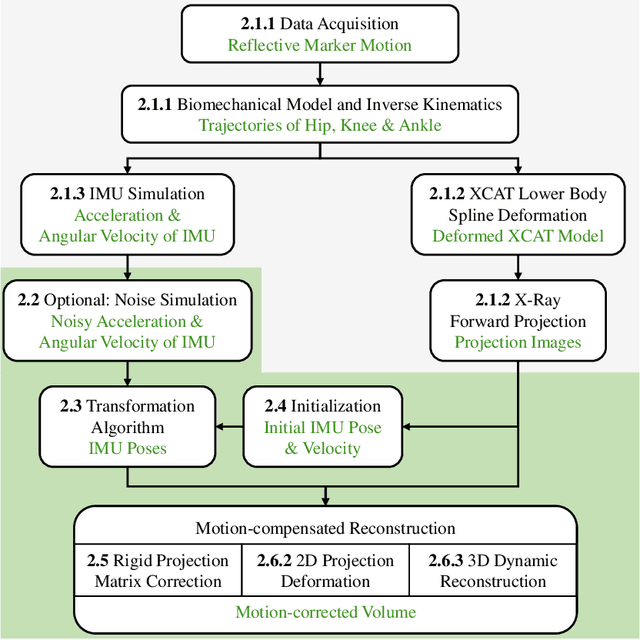

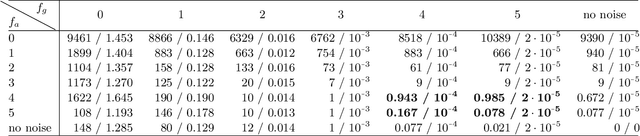

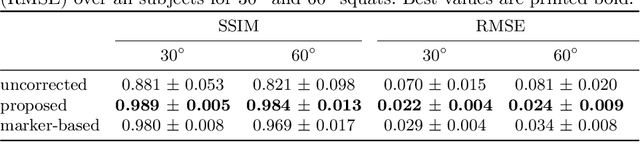

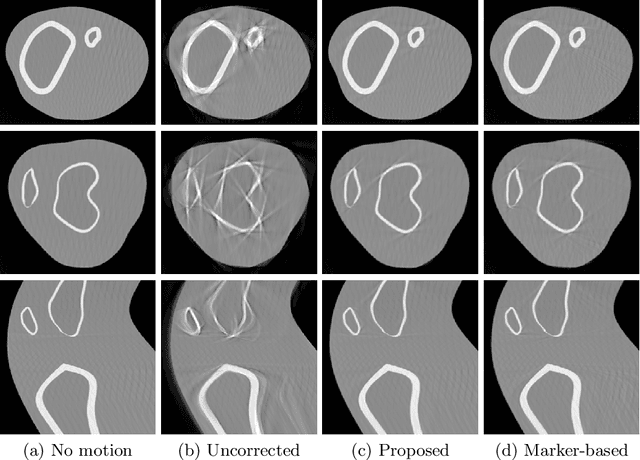

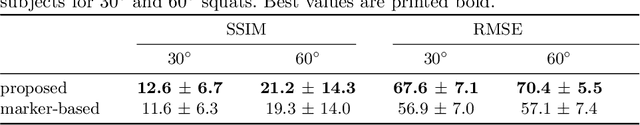

Abstract:Involuntary subject motion is the main source of artifacts in weight-bearing cone-beam CT of the knee. To achieve image quality sufficient for clinical diagnosis, the motion needs to be compensated. We propose to use inertial measurement units (IMUs) attached to the leg for motion estimation. We perform a simulation study using real motion recorded with an optical tracking system. Three IMU-based correction approaches are evaluated, namely rigid motion correction, non-rigid 2D projection deformation and non-rigid 3D dynamic reconstruction. We present an initialization process based on the system geometry. With an IMU noise simulation, we investigate the applicability of the proposed methods in real applications. All proposed IMU-based approaches correct motion at least as well as a state-of-the-art marker-based approach. The structural similarity index and the root mean squared error between motion-free and motion-corrected volumes are improved by 24-35% and 78-85%, respectively, compared with the uncorrected case. The noise analysis shows that the noise levels of commercially available IMUs need to be improved by a factor of $10^5$, which is currently only achieved by specialized hardware not robust enough for the application. The presented study confirms the feasibility of this novel approach and defines improvements necessary for a real application.
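The abstract reports improvements as percentages relative to the uncorrected case. As a minimal sketch of how such a figure could be computed for the root mean squared error, the snippet below assumes that "improvement" means the relative reduction of the error with respect to the motion-free reference. The function names and this definition are illustrative assumptions, not taken from the paper.

```python
import numpy as np

def rmse(reference, volume):
    """Root mean squared error between a motion-free reference and a volume."""
    reference = np.asarray(reference, dtype=float)
    volume = np.asarray(volume, dtype=float)
    return float(np.sqrt(np.mean((reference - volume) ** 2)))

def relative_improvement(err_uncorrected, err_corrected):
    """Percentage by which an error metric drops after correction.

    Assumption: improvement = 100 * (uncorrected - corrected) / uncorrected.
    """
    return 100.0 * (err_uncorrected - err_corrected) / err_uncorrected

# Toy volumes standing in for reconstructions (hypothetical data).
reference = np.zeros((4, 4, 4))
uncorrected = np.ones((4, 4, 4))
corrected = np.full((4, 4, 4), 0.2)
gain = relative_improvement(rmse(reference, uncorrected),
                            rmse(reference, corrected))
```

For a similarity metric such as the structural similarity index, where higher is better, the sign of the numerator would have to be flipped.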

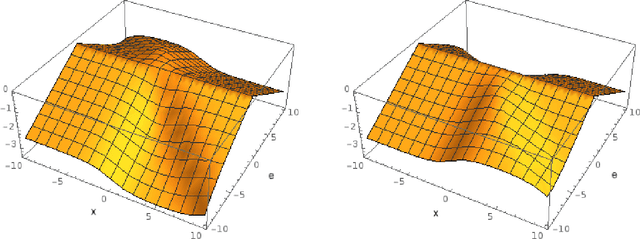

X-ray Scatter Estimation Using Deep Splines

Jan 22, 2021

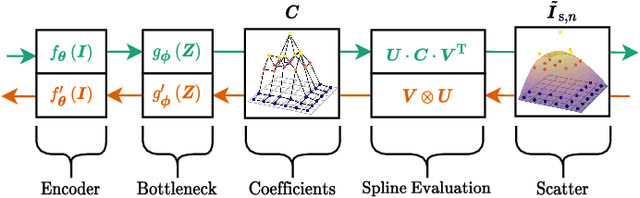

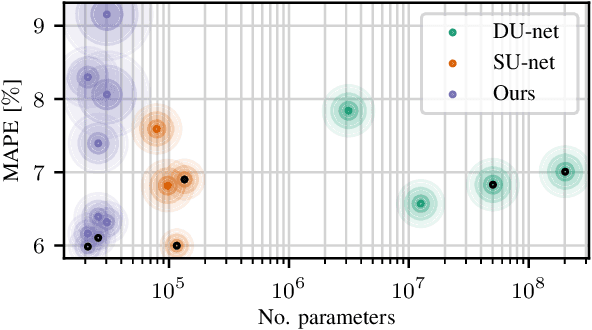

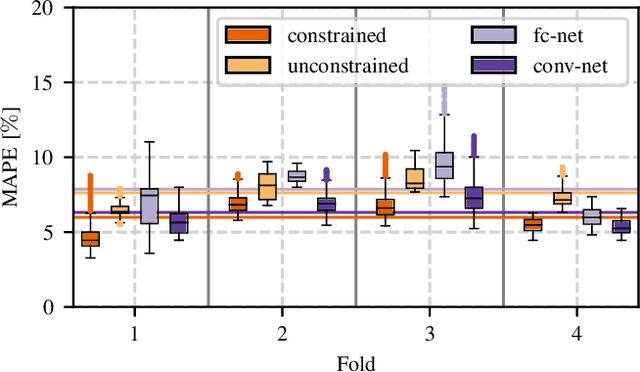

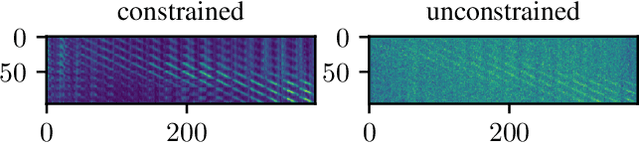

Abstract:Algorithmic X-ray scatter compensation is a desirable technique in flat-panel X-ray imaging and cone-beam computed tomography. State-of-the-art U-net based image translation approaches yielded promising results. As there are no physics constraints applied to the output of the U-Net, it cannot be ruled out that it yields spurious results. Unfortunately, those may be misleading in the context of medical imaging. To overcome this problem, we propose to embed B-splines as a known operator into neural networks. This inherently limits their predictions to well-behaved and smooth functions. In a study using synthetic head and thorax data as well as real thorax phantom data, we found that our approach performed on par with U-net when comparing both algorithms based on quantitative performance metrics. However, our approach not only reduces runtime and parameter complexity, but we also found it much more robust to unseen noise levels. While the U-net responded with visible artifacts, our approach preserved the X-ray signal's frequency characteristics.
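To illustrate why a B-spline operator limits predictions to smooth functions, the following sketch expands a small coefficient vector through a fixed uniform cubic B-spline basis. It is a 1D NumPy illustration under the assumption that a network would predict only the coefficients; the function names, the 1D setting, and the sampling range are hypothetical and not the authors' implementation.

```python
import numpy as np

def cubic_bspline(x):
    """Uniform cubic B-spline basis function with support (-2, 2)."""
    ax = np.abs(x)
    inner = 2.0 / 3.0 - ax**2 + 0.5 * ax**3   # valid for |x| < 1
    outer = (2.0 - ax) ** 3 / 6.0             # valid for 1 <= |x| < 2
    return np.where(ax < 1.0, inner, np.where(ax < 2.0, outer, 0.0))

def bspline_matrix(num_coeffs, num_samples):
    """Fixed (non-trainable) matrix mapping coefficients to samples.

    Samples are restricted to [1, num_coeffs - 2] so that every sample
    sees all basis functions overlapping it (rows sum to one).
    """
    t = np.linspace(1.0, num_coeffs - 2.0, num_samples)
    k = np.arange(num_coeffs)
    return cubic_bspline(t[:, None] - k[None, :])

def spline_layer(coeffs, num_samples):
    """Known operator: the output is always a smooth cubic spline."""
    coeffs = np.asarray(coeffs, dtype=float)
    return bspline_matrix(coeffs.shape[-1], num_samples) @ coeffs

# Even arbitrary (e.g. noisy) coefficients yield a twice-differentiable curve.
rng = np.random.default_rng(0)
smooth_output = spline_layer(rng.normal(size=8), num_samples=50)
```

Because the basis matrix is fixed, any gradient-based training would only adjust the few coefficients, which is one way to read the reduced parameter complexity mentioned above.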

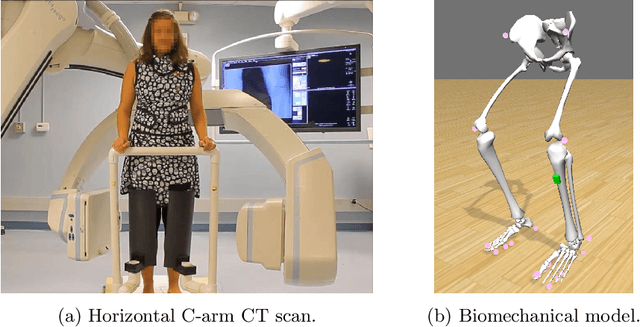

Inertial Measurements for Motion Compensation in Weight-bearing Cone-beam CT of the Knee

Jul 09, 2020

Abstract:Involuntary motion during weight-bearing cone-beam computed tomography (CT) scans of the knee causes artifacts in the reconstructed volumes, making them unusable for clinical diagnosis. Currently, image-based or marker-based methods are applied to correct for this motion, but these often require long execution or preparation times. We propose to attach an inertial measurement unit (IMU) containing an accelerometer and a gyroscope to the leg of the subject in order to measure the motion during the scan and correct for it. To validate this approach, we present a simulation study using real motion measured with an optical 3D tracking system. With this motion, an XCAT numerical knee phantom is non-rigidly deformed during a simulated CT scan, creating motion-corrupted projections. A biomechanical model is animated with the same tracked motion in order to generate measurements of an IMU placed below the knee. In our proposed multi-stage algorithm, these signals are transformed to the global coordinate system of the CT scan and applied for motion compensation during reconstruction. Our proposed approach can effectively reduce motion artifacts in the reconstructed volumes. Compared to the motion-corrupted case, the average structural similarity index and root mean squared error with respect to the no-motion case improved by 13-21% and 68-70%, respectively. These results are qualitatively and quantitatively on par with a state-of-the-art marker-based method we compared our approach to. The presented study shows the feasibility of this novel approach and yields promising results towards a purely IMU-based motion compensation in C-arm CT.
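The step of transforming IMU signals to the global coordinate system can be sketched generically as follows. This is not the paper's multi-stage algorithm: it assumes the sensor orientation is already known as one rotation matrix per sample, that the accelerometer reports specific force (so gravity has to be added back in the global frame), and that the sensor starts at rest. All names and the gravity vector are illustrative assumptions.

```python
import numpy as np

def cumulative_trapezoid(values, dt):
    """Cumulative trapezoidal integral along the first axis, starting at zero."""
    values = np.asarray(values, dtype=float)
    out = np.zeros_like(values)
    out[1:] = np.cumsum(0.5 * (values[1:] + values[:-1]) * dt, axis=0)
    return out

def imu_to_global_displacement(specific_force_local, rotations, dt,
                               gravity=(0.0, 0.0, -9.81)):
    """Rotate accelerometer samples to the global frame and integrate twice.

    specific_force_local: (N, 3) accelerometer readings in the sensor frame.
    rotations: (N, 3, 3) sensor-to-global rotation matrices (assumed known).
    Assumes zero initial velocity and zero initial displacement.
    """
    force_global = np.einsum("nij,nj->ni", rotations,
                             np.asarray(specific_force_local, dtype=float))
    acc_global = force_global + np.asarray(gravity, dtype=float)
    velocity = cumulative_trapezoid(acc_global, dt)
    return cumulative_trapezoid(velocity, dt)

# Hypothetical example: sensor rotated 90 degrees about z, accelerating
# at 2 m/s^2 along its own x axis for one second.
dt = 0.01
num = 101
rot_z90 = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
rotations = np.repeat(rot_z90[None], num, axis=0)
readings = np.tile([2.0, 0.0, 9.81], (num, 1))
displacement = imu_to_global_displacement(readings, rotations, dt)
```

Double integration amplifies sensor noise over time, which is one reason noise levels matter so much for this kind of approach.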

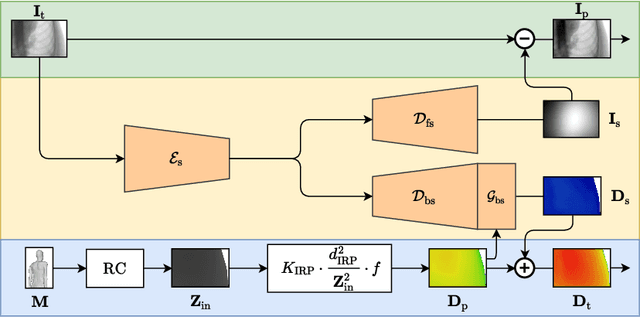

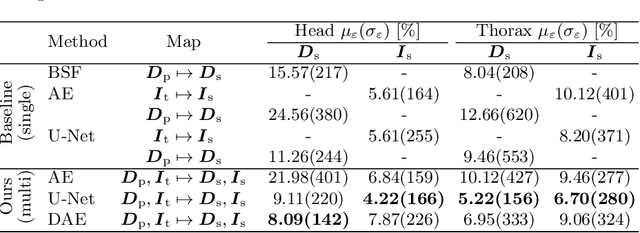

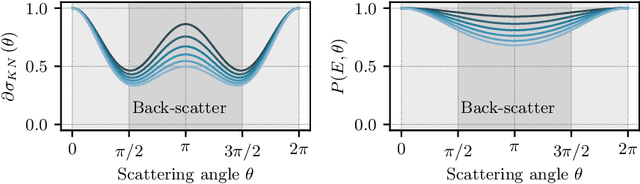

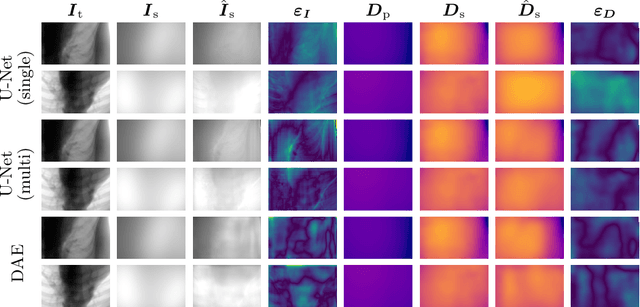

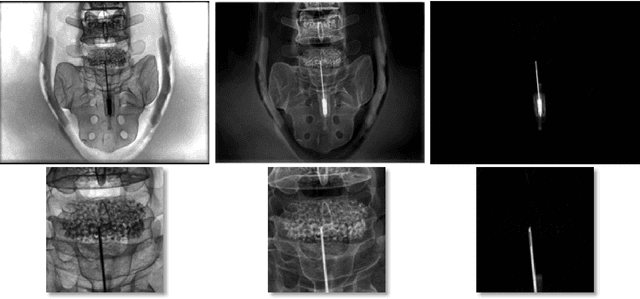

Simultaneous Estimation of X-ray Back-Scatter and Forward-Scatter using Multi-Task Learning

Jul 08, 2020

Abstract:Scattered radiation is a major concern impacting X-ray image-guided procedures in two ways. First, back-scatter significantly contributes to patient (skin) dose during complicated interventions. Second, forward-scattered radiation reduces contrast in projection images and introduces artifacts in 3-D reconstructions. While conventionally employed anti-scatter grids improve image quality by blocking X-rays, the additional attenuation due to the anti-scatter grid at the detector needs to be compensated for by a higher patient entrance dose. This also increases the room dose affecting the staff caring for the patient. For skin dose quantification, back-scatter is usually accounted for by applying pre-determined scalar back-scatter factors or linear point spread functions to a primary kerma forward projection onto a patient surface point. However, as patients come in different shapes, the generalization of conventional methods is limited. Here, we propose a novel approach combining conventional techniques with learning-based methods to simultaneously estimate the forward-scatter reaching the detector as well as the back-scatter affecting the patient skin dose. Knowing the forward-scatter, we can correct X-ray projections, while a good estimate of the back-scatter component facilitates an improved skin dose assessment. To simultaneously estimate forward-scatter as well as back-scatter, we propose a multi-task approach for joint back- and forward-scatter estimation by combining X-ray physics with neural networks. We show that, in theory, highly accurate scatter estimation in both cases is possible. In addition, we identify research directions for our multi-task framework and learning-based scatter estimation in general.

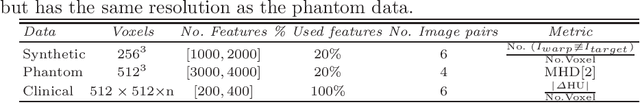

An Investigation of Feature-based Nonrigid Image Registration using Gaussian Process

Jan 12, 2020

Abstract:For a wide range of clinical applications, such as adaptive treatment planning or intraoperative image update, feature-based deformable registration (FDR) approaches are widely employed because of their simplicity and low computational complexity. FDR algorithms estimate a dense displacement field by interpolating a sparse field, which is given by the established correspondence between selected features. In this paper, we consider the deformation field as a Gaussian Process (GP), where the selected features are regarded as prior information on the valid deformations. Using GP, we are able to estimate both the dense displacement field and a corresponding uncertainty map at once. Furthermore, we evaluated the performance of different hyperparameter settings for squared exponential kernels with synthetic, phantom and clinical data. The quantitative comparison shows that GP-based interpolation performs on par with state-of-the-art B-spline interpolation. The greatest clinical benefit of GP-based interpolation is that it gives a reliable estimate of the mathematical uncertainty of the calculated dense displacement map.
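The idea of obtaining a dense displacement field and an uncertainty map at once can be sketched with standard GP regression and a squared exponential kernel. The snippet below is a textbook formulation in NumPy, not the authors' code; the hyperparameter values, the zero-mean prior, and the function names are assumptions made for illustration.

```python
import numpy as np

def sq_exp_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared exponential kernel between point sets a (M, D) and b (N, D)."""
    d2 = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return signal_var * np.exp(-0.5 * d2 / length_scale**2)

def gp_interpolate(feat_pos, feat_disp, query_pos,
                   length_scale=1.0, signal_var=1.0, noise_var=1e-6):
    """Posterior mean displacement and variance at the query positions.

    feat_pos: (N, D) feature locations, feat_disp: (N, D) sparse displacements.
    Returns (M, D) interpolated displacements and an (M,) variance map.
    """
    K = sq_exp_kernel(feat_pos, feat_pos, length_scale, signal_var)
    K = K + noise_var * np.eye(len(feat_pos))
    Ks = sq_exp_kernel(query_pos, feat_pos, length_scale, signal_var)
    mean = Ks @ np.linalg.solve(K, feat_disp)
    var = signal_var - np.sum(Ks * np.linalg.solve(K, Ks.T).T, axis=1)
    return mean, np.maximum(var, 0.0)

# Hypothetical sparse correspondences in 2D.
feat_pos = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
feat_disp = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
query_pos = np.vstack([feat_pos, [[50.0, 50.0]]])
mean, var = gp_interpolate(feat_pos, feat_disp, query_pos)
```

In this sketch the variance is close to zero at the feature locations and approaches the prior variance far away from any feature, which is the behavior an uncertainty map is meant to expose.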

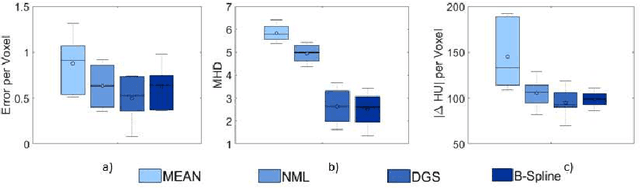

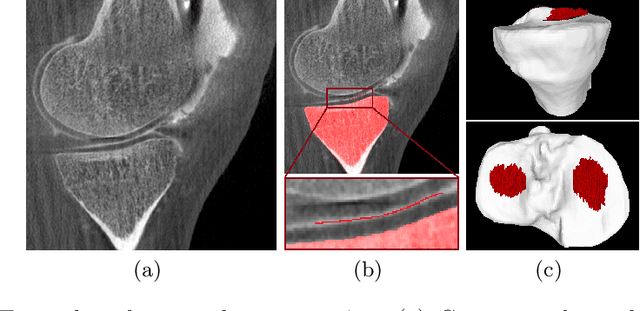

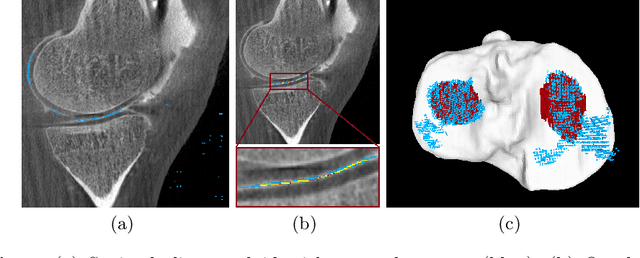

Multi-Channel Volumetric Neural Network for Knee Cartilage Segmentation in Cone-beam CT

Dec 03, 2019

Abstract:Analyzing knee cartilage thickness and strain under load can help to further the understanding of the effects of diseases like osteoarthritis. A precise segmentation of the cartilage is a necessary prerequisite for this analysis. This segmentation task has mainly been addressed in Magnetic Resonance Imaging, and was rarely investigated on contrast-enhanced Computed Tomography, where contrast agent visualizes the border between femoral and tibial cartilage. To overcome the main drawback of manual segmentation, namely its high time investment, we propose to use a 3D Convolutional Neural Network for this task. The presented architecture consists of a V-Net with SELU activation and a Tversky loss function. Due to the high imbalance between very few cartilage pixels and many background pixels, a high false positive rate is to be expected. To reduce this rate, the two largest segmented point clouds are extracted using a connected component analysis, since they most likely represent the medial and lateral tibial cartilage surfaces. The resulting segmentations are compared to manual segmentations, and achieve on average a recall of 0.69, which confirms the feasibility of this approach.
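For readers unfamiliar with the Tversky loss, a minimal NumPy sketch follows. It uses the common definition in which false positives and false negatives are weighted separately; the weights `alpha` and `beta` and the smoothing term `eps` are illustrative assumptions, since the abstract does not state the values used.

```python
import numpy as np

def tversky_loss(pred, target, alpha=0.3, beta=0.7, eps=1e-7):
    """Tversky loss for soft or binary segmentations.

    alpha weights false positives, beta weights false negatives
    (assumed values; alpha = beta = 0.5 reduces to the Dice loss).
    """
    pred = np.asarray(pred, dtype=float).ravel()
    target = np.asarray(target, dtype=float).ravel()
    tp = np.sum(pred * target)
    fp = np.sum(pred * (1.0 - target))
    fn = np.sum((1.0 - pred) * target)
    index = (tp + eps) / (tp + alpha * fp + beta * fn + eps)
    return float(1.0 - index)

# Hypothetical prediction with two false positives and no false negatives.
target = np.array([1, 0, 0, 0])
pred = np.array([1, 1, 1, 0])
loss_fp_cheap = tversky_loss(pred, target, alpha=0.3, beta=0.7)
loss_fp_costly = tversky_loss(pred, target, alpha=0.7, beta=0.3)
```

Choosing `beta` larger than `alpha` penalizes missed foreground more than spurious foreground, which is a common choice when the foreground class is very small.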

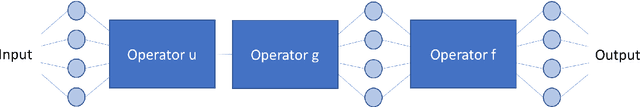

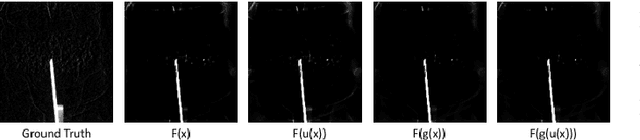

Precision Learning: Towards Use of Known Operators in Neural Networks

Oct 12, 2018

Abstract:In this paper, we consider the use of prior knowledge within neural networks. In particular, we investigate the effect of a known transform within the mapping from input data space to the output domain. We demonstrate that use of known transforms is able to change maximal error bounds. In order to explore the effect further, we consider the problem of X-ray material decomposition as an example to incorporate additional prior knowledge. We demonstrate that inclusion of a non-linear function known from the physical properties of the system is able to reduce prediction errors, thereby improving prediction quality from SSIM values of 0.54 to 0.88. This approach is applicable to a wide set of applications in physics and signal processing that provide prior knowledge on such transforms. Maximal error estimation and network understanding could also be facilitated within the context of precision learning.

* accepted at ICPR 2018

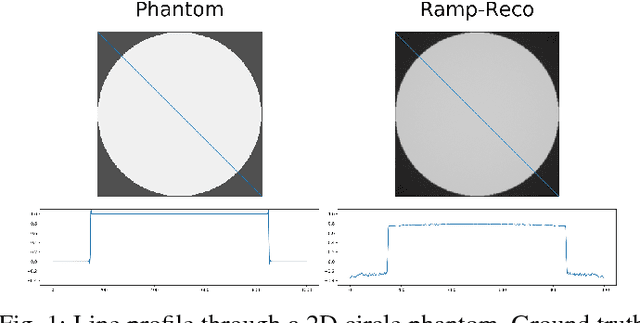

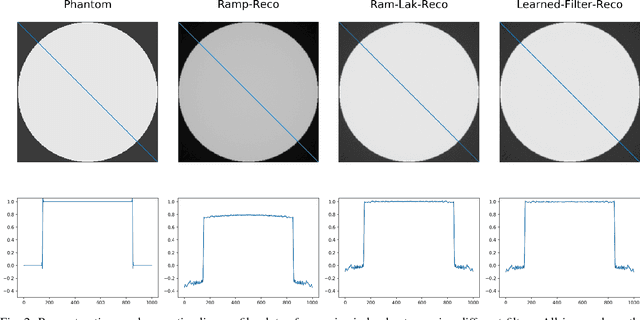

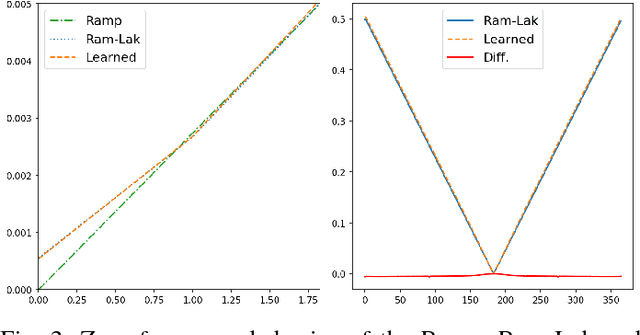

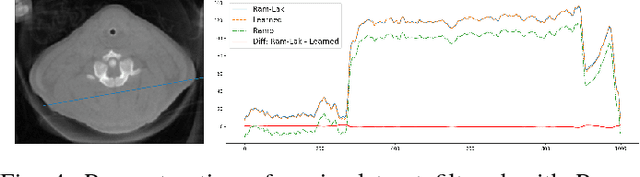

Precision Learning: Reconstruction Filter Kernel Discretization

Jul 09, 2018

Abstract:In this paper, we present substantial evidence that a deep neural network will intrinsically learn the appropriate way to discretize the ideal continuous reconstruction filter. Currently, the Ram-Lak filter or heuristic filters which impose different noise assumptions are used for filtered back-projection. All of these, however, inhibit a fully data-driven reconstruction deep learning approach. In addition, the heuristic filters are not chosen in an optimal sense. To tackle this issue, we propose a formulation to directly learn the reconstruction filter. The filter is initialized with the ideal Ramp filter as a strong pre-training and learned in frequency domain. We compare the learned filter with the Ram-Lak and the Ramp filter on a numerical phantom as well as on a real CT dataset. The results show that the network properly discretizes the continuous Ramp filter and converges towards the Ram-Lak solution. In our view these observations are interesting to gain a better understanding of deep learning techniques and traditional analytic techniques such as Wiener filtering and discretization theory. Furthermore, this will allow fully trainable data-driven reconstruction deep learning approaches.
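The difference between the sampled continuous ramp and the Ram-Lak discretization can be made concrete with a small NumPy sketch. It uses the standard textbook Ram-Lak kernel for unit detector spacing and compares its discrete Fourier transform to the sampled ramp $|f|$. The kernel length and function names are assumptions for illustration; no learning is performed here.

```python
import numpy as np

def ram_lak_kernel(num_taps):
    """Spatial-domain Ram-Lak kernel for unit detector spacing (odd length)."""
    half = num_taps // 2
    n = np.arange(-half, half + 1)
    h = np.zeros(n.shape, dtype=float)
    h[n == 0] = 0.25
    odd = (n % 2) != 0
    h[odd] = -1.0 / (np.pi**2 * n[odd].astype(float) ** 2)
    return n, h

def frequency_responses(h):
    """DFT of the centered kernel and the sampled ideal ramp |f|."""
    freqs = np.fft.fftfreq(len(h))
    # Move the kernel center to index 0 so the spectrum is real-valued.
    response = np.fft.fft(np.fft.ifftshift(h)).real
    return freqs, response, np.abs(freqs)

n, h = ram_lak_kernel(257)
freqs, ram_lak_response, ramp = frequency_responses(h)
dc_offset = ram_lak_response[0] - ramp[0]
```

The sampled ramp is exactly zero at the zero frequency, whereas the finite-length Ram-Lak kernel keeps a small positive value there. Away from the zero frequency the two responses nearly coincide.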

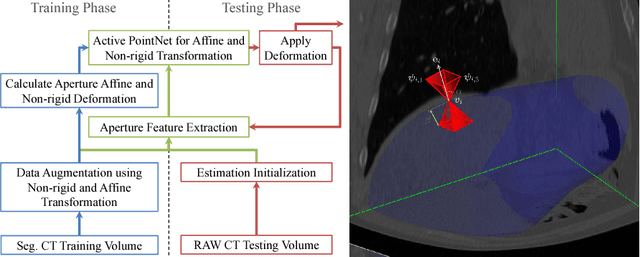

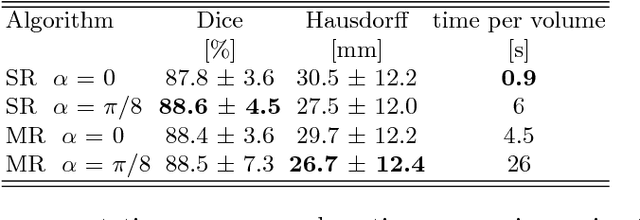

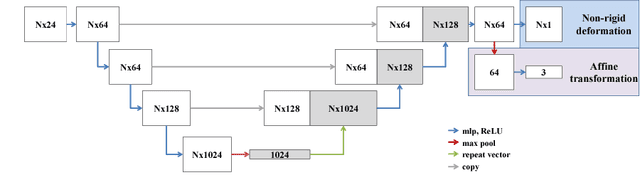

Action Learning for 3D Point Cloud Based Organ Segmentation

Jun 14, 2018

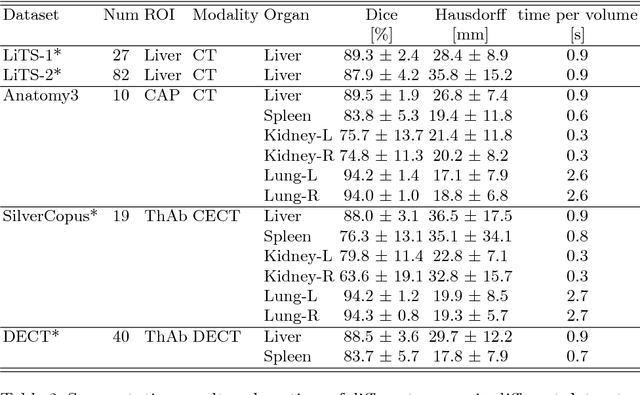

Abstract:We propose a novel point cloud based 3D organ segmentation pipeline utilizing deep Q-learning. In order to preserve shape properties, the learning process is guided using a statistical shape model. The trained agent directly predicts piece-wise linear transformations for all vertices in each iteration. This mapping between the ideal transformation for an object outline estimation is learned based on image features. To this end, we introduce aperture features that extract gray values by sampling the 3D volume within the cone centered around the associated vertex and its normal vector. Our approach is also capable of estimating a hierarchical pyramid of non-rigid deformations for multi-resolution meshes. In the application phase, we use a marginal approach to gradually estimate affine as well as non-rigid transformations. We performed extensive evaluations to highlight the robust performance of our approach on a variety of challenge data as well as clinical data. Additionally, our method has a run time ranging from 0.3 to 2.7 seconds to segment each organ. Furthermore, we show that the proposed method can be applied to different organs, X-ray based modalities, and scanning protocols without the need for transfer learning. As we learn actions, even unseen reference meshes can be processed, as demonstrated in an example with the Visible Human. From this we conclude that our method is robust, and we believe that it can be successfully applied to many more applications, in particular in the interventional imaging space.
