Mario Amrehn

A Semi-Automated Usability Evaluation Framework for Interactive Image Segmentation Systems

Sep 01, 2019

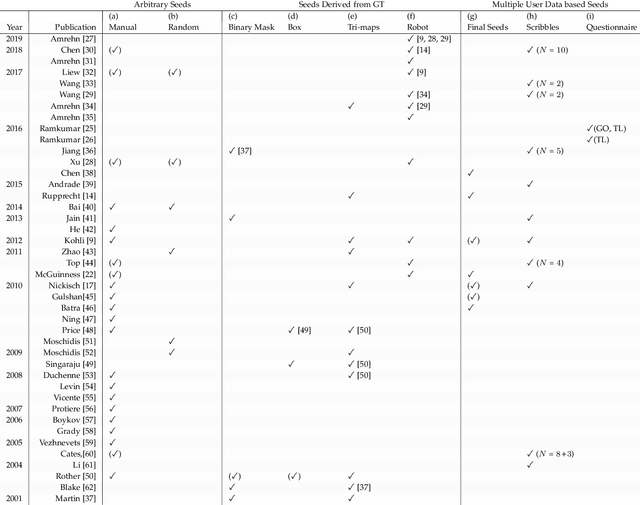

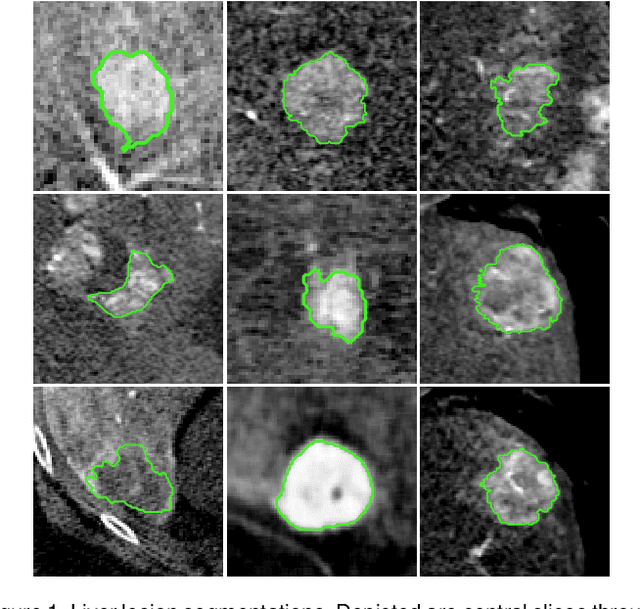

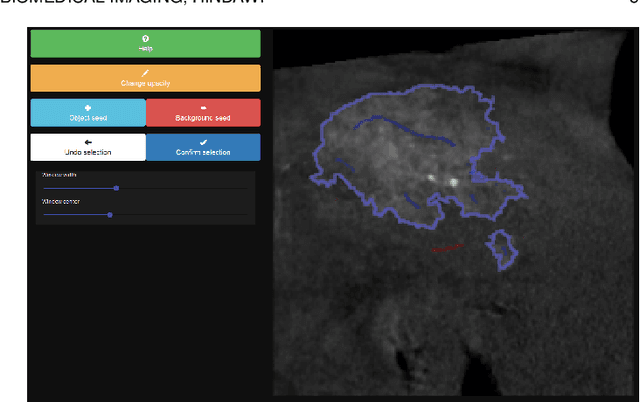

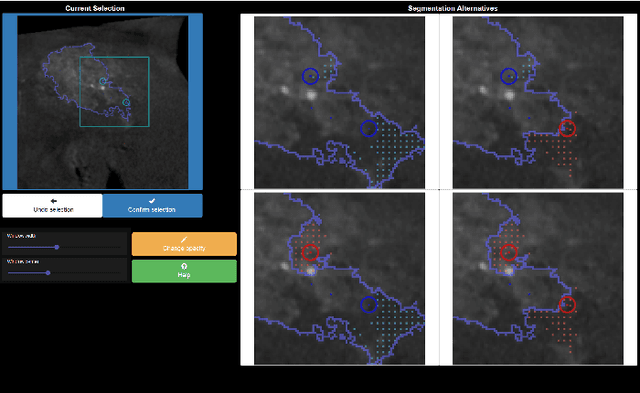

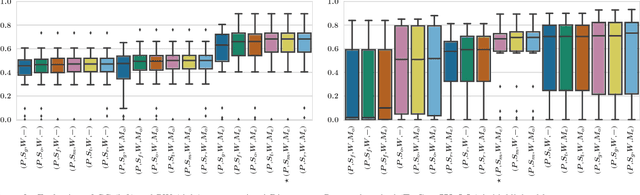

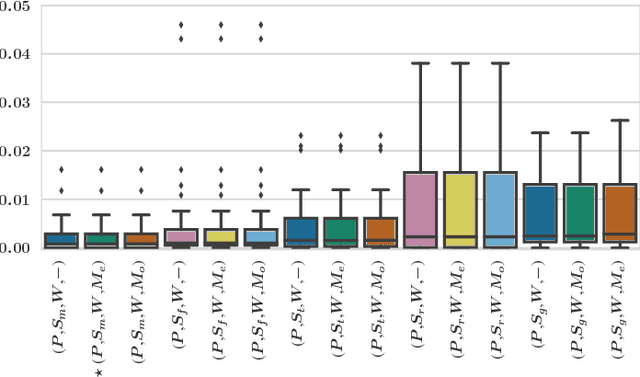

Abstract: For complex segmentation tasks, the achievable accuracy of fully automated systems is inherently limited. Specifically, when a precise segmentation result is desired for a small number of given data sets, semi-automatic methods exhibit a clear benefit for the user. The optimization of human-computer interaction (HCI) is an essential part of interactive image segmentation. Nevertheless, publications introducing novel interactive segmentation systems (ISS) often lack an objective comparison of HCI aspects. It is demonstrated that even when the underlying segmentation algorithm is the same throughout interactive prototypes, their user experience may vary substantially. As a result, users prefer simple interfaces as well as a considerable degree of freedom to control each iterative step of the segmentation. In this article, an objective method for the comparison of ISS is proposed, based on extensive user studies. A summative qualitative content analysis is conducted via abstraction of visual and verbal feedback given by the participants. A direct assessment of the segmentation system is executed by the users via the system usability scale (SUS) and AttrakDiff-2 questionnaires. Furthermore, an approximation of the findings regarding usability aspects in those studies is introduced, derived solely from the system-measurable user actions during their usage of interactive segmentation prototypes. The prediction of all questionnaire results has an average relative error of 8.9%, which is close to the expected precision of the questionnaire results themselves. This automated evaluation scheme may significantly reduce the resources necessary to investigate each variation of a prototype's user interface (UI) features and segmentation methodologies.
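As a rough illustration of the two quantities mentioned in the abstract above, the following sketch computes a SUS score with the standard SUS scoring rule and a mean relative error between predicted and measured questionnaire scores. The function names are hypothetical, and the exact error definition used in the article is not stated in the abstract, so the second function is only one plausible reading.

```python
def sus_score(responses):
    """Return the SUS score (0-100) for ten responses on a 1-5 scale."""
    if len(responses) != 10 or any(r < 1 or r > 5 for r in responses):
        raise ValueError("SUS expects ten responses in the range 1..5")
    total = 0
    for i, r in enumerate(responses):
        # Items 1, 3, 5, ... (index 0, 2, ...) are positively worded,
        # the remaining items are negatively worded.
        total += (r - 1) if i % 2 == 0 else (5 - r)
    return total * 2.5


def mean_relative_error(predicted, measured):
    """Mean of |predicted - measured| / |measured| over paired values.

    Assumption: this is one common definition of average relative error,
    not necessarily the one used in the article.
    """
    if len(predicted) != len(measured) or not measured:
        raise ValueError("need two non-empty sequences of equal length")
    errors = [abs(p - m) / abs(m) for p, m in zip(predicted, measured)]
    return sum(errors) / len(errors)
```

For example, `sus_score([3] * 10)` describes a participant who answers every item neutrally, and `mean_relative_error` could be applied to a list of predicted and a list of questionnaire-derived SUS scores per participant.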

The Random Forest Classifier in WEKA: Discussion and New Developments for Imbalanced Data

Jan 04, 2019

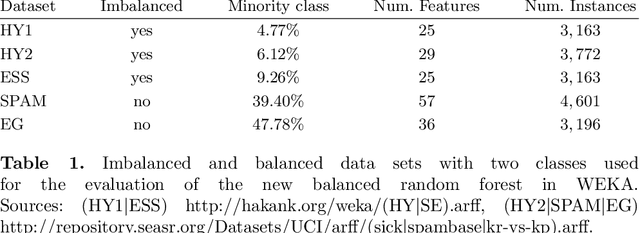

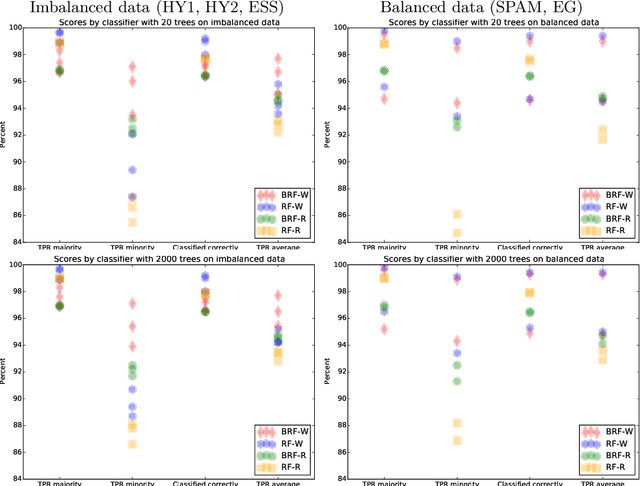

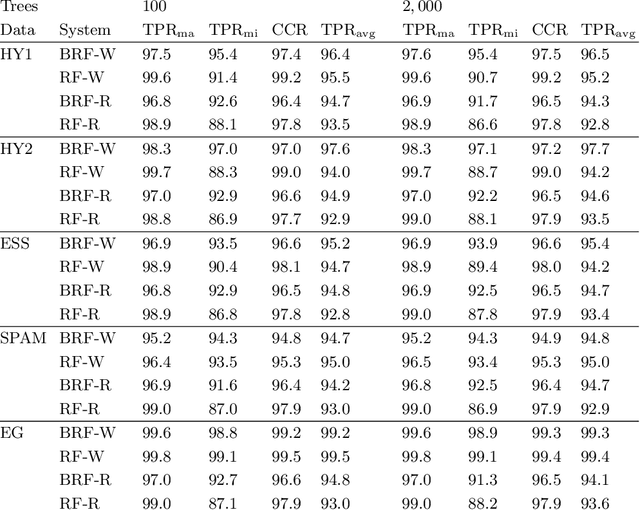

Abstract: Data analysis and machine learning have become an integral part of the modern scientific methodology, providing automated techniques to predict further information based on observations. One of these classification and regression techniques is the random forest approach. These decision-tree-based predictors are best known for their good computational performance and scalability. However, in the case of severely imbalanced training data, as often seen in medical studies' data with large control groups, the training algorithm or the sampling process has to be altered in order to improve the prediction quality for minority classes. In this work, a balanced random forest approach for WEKA is proposed. Furthermore, the prediction quality of the unmodified random forest implementation and the new balanced random forest version for WEKA are evaluated against reference implementations in R. Two-class problems on balanced data sets and imbalanced medical studies' data are investigated. A superior prediction quality using the proposed method for imbalanced data is shown compared to the other three techniques.
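One common way to alter the sampling process for imbalanced data is a class-balanced bootstrap, in which every tree is trained on a sample containing equally many instances of each class. The sketch below illustrates only that general idea in Python; it is an assumption about what "balanced" means here, it is not the WEKA implementation proposed in the paper, and `balanced_bootstrap` is a hypothetical helper name.

```python
import random


def balanced_bootstrap(labels, rng):
    """Return indices of a class-balanced bootstrap sample for one tree.

    labels: sequence of class labels, one per training instance.
    rng:    a random.Random instance, passed in for reproducibility.
    """
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    # Size of the smallest class determines the per-class sample size.
    n_minority = min(len(indices) for indices in by_class.values())
    sample = []
    for indices in by_class.values():
        # Draw with replacement, the same number from every class.
        sample.extend(rng.choices(indices, k=n_minority))
    return sample
```

With 95 control and 5 case instances, each call yields 10 indices, 5 per class, so the minority class is no longer swamped within an individual tree's training sample.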

Action Learning for 3D Point Cloud Based Organ Segmentation

Jun 14, 2018

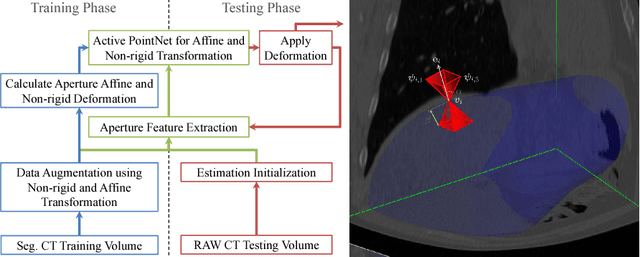

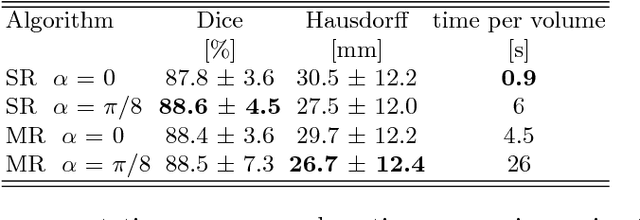

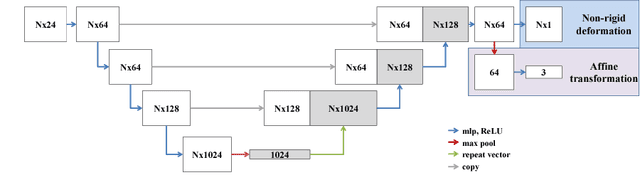

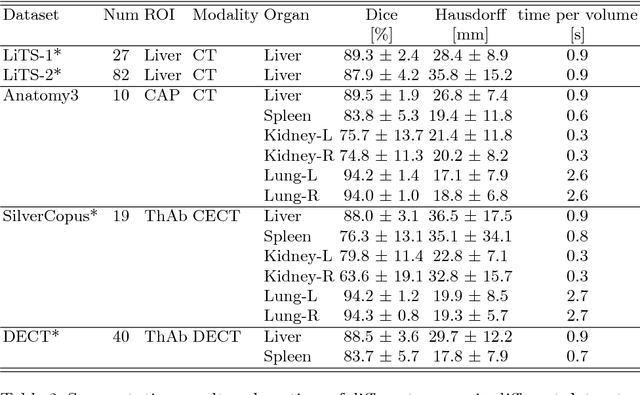

Abstract: We propose a novel point-cloud-based 3D organ segmentation pipeline utilizing deep Q-learning. In order to preserve shape properties, the learning process is guided using a statistical shape model. The trained agent directly predicts piece-wise linear transformations for all vertices in each iteration. This mapping between the ideal transformation for an object outline estimation is learned based on image features. To this end, we introduce aperture features that extract gray values by sampling the 3D volume within the cone centered around the associated vertex and its normal vector. Our approach is also capable of estimating a hierarchical pyramid of non-rigid deformations for multi-resolution meshes. In the application phase, we use a marginal approach to gradually estimate affine as well as non-rigid transformations. We performed extensive evaluations to highlight the robust performance of our approach on a variety of challenge data as well as clinical data. Our method has a run time ranging from 0.3 to 2.7 seconds to segment each organ. In addition, we show that the proposed method can be applied to different organs, X-ray based modalities, and scanning protocols without the need for transfer learning. As we learn actions, even unseen reference meshes can be processed, as demonstrated in an example with the Visible Human. From this we conclude that our method is robust, and we believe that it can be successfully applied to many more applications, in particular in the interventional imaging space.

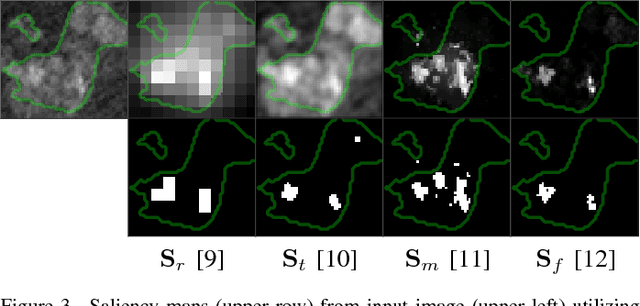

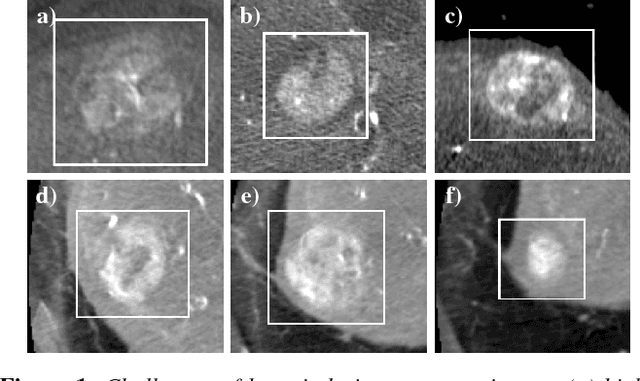

Robust Seed Mask Generation for Interactive Image Segmentation

Nov 20, 2017

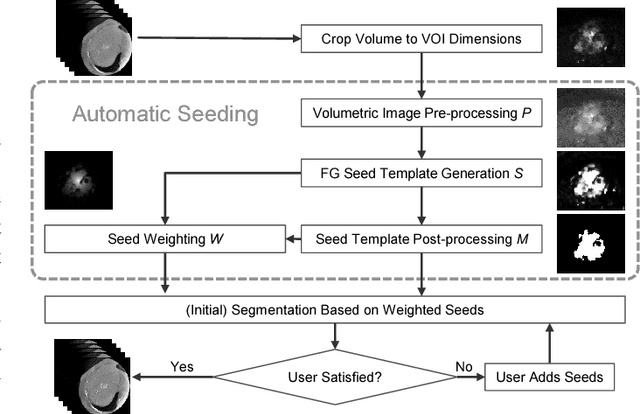

Abstract: In interactive medical image segmentation, anatomical structures are extracted from reconstructed volumetric images. The first iterations of user interaction traditionally consist of drawing pictorial hints as an initial estimate of the object to extract. Only after this time-consuming first phase does the efficient selective refinement of current segmentation results begin. Erroneously labeled seeds, especially near the border of the object, are challenging for a human to detect and replace, and may substantially impact the overall segmentation quality. We propose an automatic seeding pipeline as well as a configuration based on saliency recognition, in order to skip the time-consuming initial interaction phase during segmentation. A median Dice score of 68.22% is reached before the first user interaction on the test data set with an error rate in seeding of only 0.088%.
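For readers unfamiliar with the metric reported above, the Dice score of a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|). A minimal Python sketch follows; it operates on flattened binary masks, and the convention of returning 1.0 for two empty masks is an assumption of this example, not something stated in the abstract.

```python
def dice_score(pred, truth):
    """Dice coefficient 2|A∩B| / (|A| + |B|) for two flat binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of elements")
    intersection = sum(1 for p, t in zip(pred, truth) if p and t)
    size_sum = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Assumed convention: two empty masks count as a perfect match.
    if size_sum == 0:
        return 1.0
    return 2.0 * intersection / size_sum
```

A volumetric mask can be flattened voxel by voxel before calling `dice_score`; multiplying the result by 100 gives the percentage form used in the abstract.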

UI-Net: Interactive Artificial Neural Networks for Iterative Image Segmentation Based on a User Model

Sep 11, 2017

Abstract: For complex segmentation tasks, fully automatic systems are inherently limited in their achievable accuracy for extracting relevant objects. Especially in cases where only a few data sets need to be processed for a highly accurate result, semi-automatic segmentation techniques exhibit a clear benefit for the user. One area of application is medical image processing during an intervention for a single patient. We propose a learning-based cooperative segmentation approach which includes the computing entity as well as the user in the task. Our system builds upon a state-of-the-art fully convolutional artificial neural network (FCN) as well as an active user model for training. During the segmentation process, a user of the trained system can iteratively add hints in the form of pictorial scribbles as seed points into the FCN system to achieve an interactive and precise segmentation result. The segmentation quality of interactive FCNs is evaluated. Iterative FCN approaches can yield superior results compared to networks without the user input channel component, due to a consistent improvement in segmentation quality after each interaction.

* This work is submitted to the 2017 Eurographics Workshop on Visual Computing for Biology and Medicine
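The UI-Net abstract above mentions a user input channel through which scribbles enter the network. One plausible way to realize such a channel is to stack the image with binary seed maps before passing it to the FCN. The following Python sketch shows that encoding only; the three-channel layout, the separate foreground and background maps, and the name `build_network_input` are assumptions of this example and are not taken from the paper.

```python
def build_network_input(image, fg_seeds, bg_seeds):
    """Stack a 2D gray image with two binary seed maps into 3 channels.

    image:    list of rows of gray values (height x width).
    fg_seeds: iterable of (row, col) positions scribbled as foreground.
    bg_seeds: iterable of (row, col) positions scribbled as background.
    """
    height, width = len(image), len(image[0])
    fg = [[0.0] * width for _ in range(height)]
    bg = [[0.0] * width for _ in range(height)]
    for r, c in fg_seeds:
        fg[r][c] = 1.0
    for r, c in bg_seeds:
        bg[r][c] = 1.0
    # Channel order (assumed): image, foreground seeds, background seeds.
    return [image, fg, bg]
```

In an iterative setting, the seed lists would grow after each interaction and the stacked input would be rebuilt before the next forward pass.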
