William M. Wells III

A Novel Streamline-based diffusion MRI Tractography Registration Method with Probabilistic Keypoint Detection

Mar 04, 2025

Abstract:Registration of diffusion MRI tractography is an essential step for analyzing group similarities and variations in the brain's white matter (WM). Streamline-based registration approaches can leverage the 3D geometric information of fiber pathways to enable spatial alignment after registration. Existing methods usually rely on optimizing spatial distances to identify the optimal transformation. However, such methods overlook point connectivity patterns within the streamline itself, limiting their ability to identify anatomical correspondences across tractography datasets. In this work, we propose a novel unsupervised approach using deep learning to perform streamline-based dMRI tractography registration. The overall idea is to identify corresponding keypoint pairs across subjects for spatial alignment of tractography datasets. We model tractography as point clouds to leverage the graph connectivity along streamlines. We propose a novel keypoint detection method for streamlines, framed as a probabilistic classification task to identify anatomically consistent correspondences across unstructured streamline sets. In the experiments, we compare against several existing methods and show highly effective and efficient tractography registration performance.
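
To illustrate how corresponding keypoint pairs can drive spatial alignment, here is a minimal sketch that estimates a rigid transform from matched 3D keypoints using the Kabsch (SVD) method. The rigid model and the function name `rigid_from_pairs` are assumptions made for illustration; the abstract does not state which transformation model the authors use.

```python
import numpy as np

def rigid_from_pairs(src, dst):
    """Least-squares rigid transform (R, t) with dst ~= src @ R.T + t.

    src, dst: (N, 3) arrays of corresponding keypoints (hypothetical inputs).
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Correct for a possible reflection so that det(R) = +1.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t
```

Given the estimated `R` and `t`, every streamline point `p` of the moving subject would be mapped to `R @ p + t`.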

Learning to Match 2D Keypoints Across Preoperative MR and Intraoperative Ultrasound

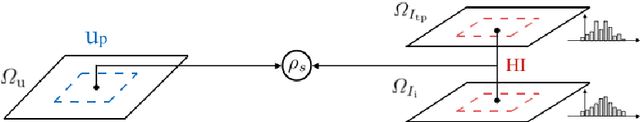

Sep 12, 2024

Abstract:We propose a texture-invariant 2D keypoint descriptor specifically designed for matching preoperative Magnetic Resonance (MR) images with intraoperative Ultrasound (US) images. We introduce a matching-by-synthesis strategy, where intraoperative US images are synthesized from MR images, accounting for multiple MR modalities and intraoperative US variability. We build our training set by enforcing keypoint localization over all images, then train a patient-specific descriptor network that learns texture-invariant discriminant features in a supervised contrastive manner, leading to robust keypoint descriptors. Our experiments on real cases with ground truth show the effectiveness of the proposed approach, which outperforms state-of-the-art methods and achieves 80.35% matching precision on average.

Registration of Longitudinal Spine CTs for Monitoring Lesion Growth

Feb 14, 2024

Abstract:Accurate and reliable registration of longitudinal spine images is essential for assessment of disease progression and surgical outcome. Implementing a fully automatic and robust registration is crucial for clinical use; however, it is challenging because lesions cause substantial changes in shape and appearance. In this paper we present a novel method to automatically align longitudinal spine CTs and accurately assess lesion progression. Our method follows a two-step pipeline: vertebrae are first automatically localized and labeled, and their 3D surfaces are generated using a deep learning model; the surfaces are then longitudinally aligned using Gaussian mixture model surface registration. We tested our approach on 37 vertebrae from 5 patients, with baseline CTs and 3-, 6-, and 12-month follow-ups, leading to 111 registrations. Our experiments showed accurate registration with an average Hausdorff distance of 0.65 mm and an average Dice score of 0.92.
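
For readers unfamiliar with the two reported metrics, the following sketch shows one common way to compute a Dice score between binary masks and a symmetric Hausdorff distance between point sets. The function names and the brute-force distance computation are illustrative assumptions, not the authors' evaluation code.

```python
import numpy as np

def dice_score(mask_a, mask_b):
    """Dice overlap between two binary masks of the same shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    denom = a.sum() + b.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated here as perfect agreement
    return 2.0 * np.logical_and(a, b).sum() / denom

def hausdorff_distance(points_p, points_q):
    """Symmetric Hausdorff distance between (N, D) and (M, D) point sets."""
    p = np.asarray(points_p, dtype=float)
    q = np.asarray(points_q, dtype=float)
    # Pairwise Euclidean distances; fine for small sets, O(N*M) memory.
    d = np.linalg.norm(p[:, None, :] - q[None, :, :], axis=-1)
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))
```

If surface points are given in millimetres, `hausdorff_distance` returns millimetres as well.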

Learning Expected Appearances for Intraoperative Registration during Neurosurgery

Oct 03, 2023

Abstract:We present a novel method for intraoperative patient-to-image registration by learning Expected Appearances. Our method uses preoperative imaging to synthesize patient-specific expected views through a surgical microscope for a predicted range of transformations. Our method estimates the camera pose by minimizing the dissimilarity between the intraoperative 2D view through the optical microscope and the synthesized expected texture. In contrast to conventional methods, our approach transfers the processing tasks to the preoperative stage, thereby reducing the impact of low-resolution, distorted, and noisy intraoperative images, which often degrade registration accuracy. We applied our method in the context of neuronavigation during brain surgery. We evaluated our approach on synthetic data and on retrospective data from 6 clinical cases. Our method outperformed state-of-the-art methods and achieved accuracies that met current clinical standards.

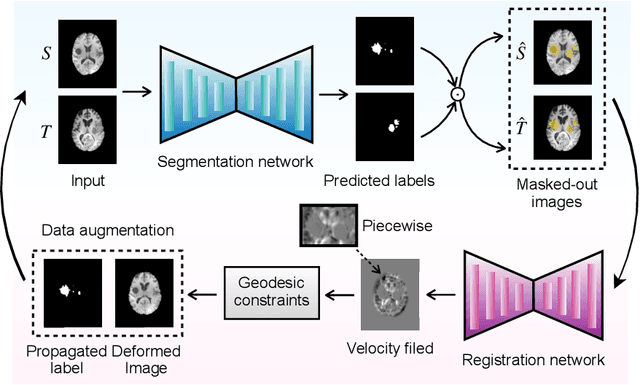

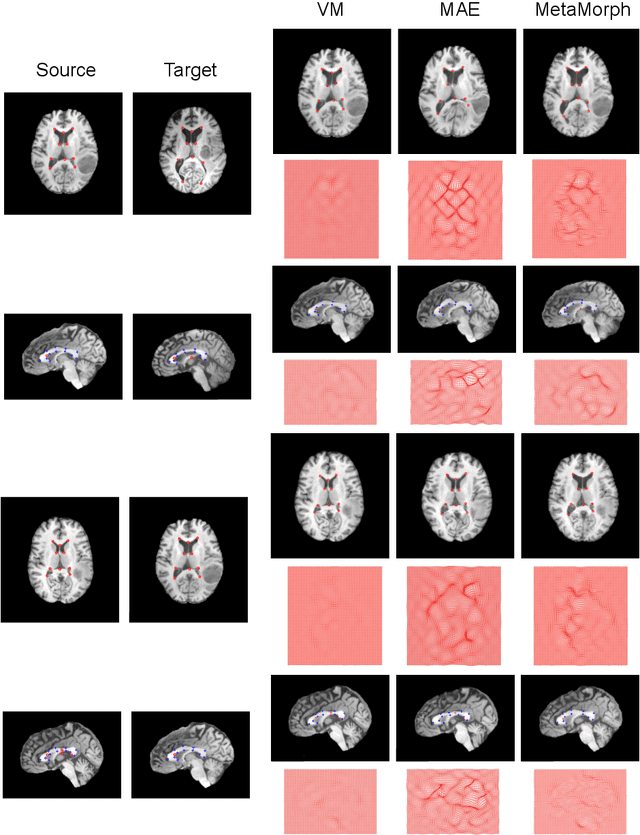

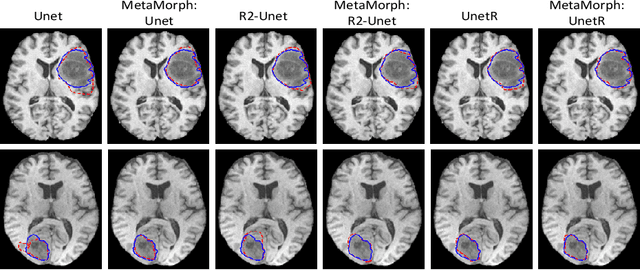

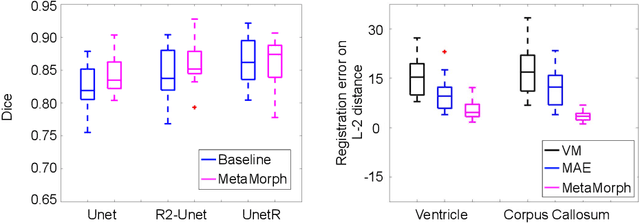

MetaMorph: Learning Metamorphic Image Transformation With Appearance Changes

Mar 08, 2023

Abstract:This paper presents a novel predictive model, MetaMorph, for metamorphic registration of images with appearance changes (e.g., caused by brain tumors). In contrast to previous learning-based registration methods that have little or no control over appearance changes, our model introduces a new regularization that can effectively suppress the negative effects of regions with appearance changes. In particular, we develop a piecewise regularization on the tangent space of diffeomorphic transformations (also known as initial velocity fields) via learned segmentation maps of abnormal regions. The geometric transformation and appearance changes are treated as joint tasks that are mutually beneficial. Our model MetaMorph is more robust and accurate when searching for an optimal registration solution under the guidance of segmentation, which in turn improves the segmentation performance by providing appropriately augmented training labels. We validate MetaMorph on real 3D human brain tumor magnetic resonance imaging (MRI) scans. Experimental results show that our model outperforms the state-of-the-art learning-based registration models. The proposed MetaMorph has great potential in various image-guided clinical interventions, e.g., real-time image-guided navigation systems for tumor removal surgery.

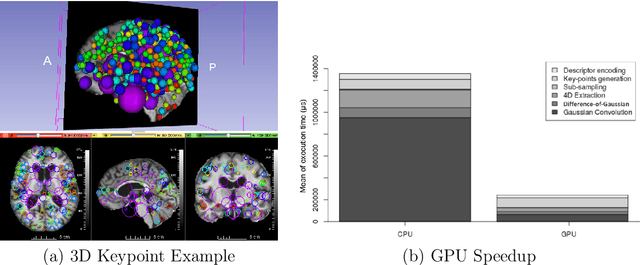

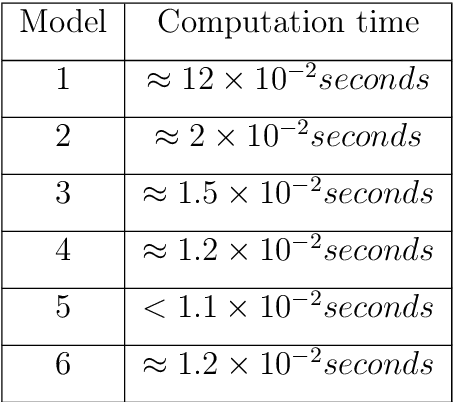

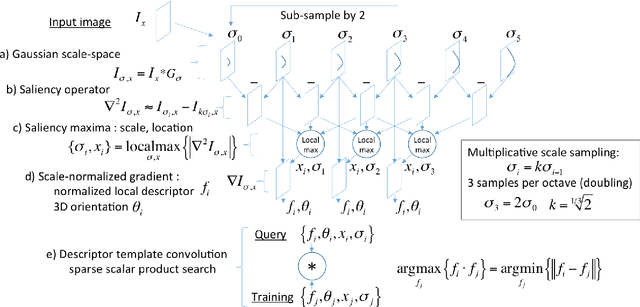

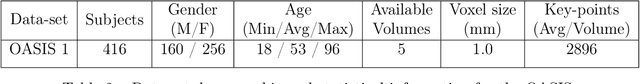

GPU optimization of the 3D Scale-invariant Feature Transform Algorithm and a Novel BRIEF-inspired 3D Fast Descriptor

Dec 19, 2021

Abstract:This work details a highly efficient implementation of the 3D scale-invariant feature transform (SIFT) algorithm, for the purpose of machine learning from large sets of volumetric medical image data. The primary operations of the 3D SIFT code are implemented on a graphics processing unit (GPU), including convolution, sub-sampling, and 4D peak detection from scale-space pyramids. The performance improvements are quantified in keypoint detection and image-to-image matching experiments, using 3D MRI human brain volumes of different people. Computationally efficient 3D keypoint descriptors are proposed based on the Binary Robust Independent Elementary Feature (BRIEF) code, including a novel descriptor we call Ranked Robust Independent Elementary Features (RRIEF), and compared to the original 3D SIFT-Rank method \citep{toews2013efficient}. The GPU implementation affords a speedup of approximately 7X beyond an optimized CPU implementation, where computation time is reduced from 1.4 seconds to 0.2 seconds for 3D volumes of size (145, 174, 145) voxels with approximately 3000 keypoints. Notable speedups include the convolution operation (20X), 4D peak detection (3X), sub-sampling (3X), and difference-of-Gaussian pyramid construction (2X). Efficient descriptors offer a speedup of 2X and a memory savings of 6X compared to standard SIFT-Rank descriptors, at the cost of fewer keypoint correspondences, revealing a trade-off between computational efficiency and algorithmic performance. The speedups gained by our implementation will allow for more efficient analysis of larger data sets. Our optimized GPU implementation of the 3D SIFT-Rank extractor is available at https://github.com/CarluerJB/3D_SIFT_CUDA.
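
As background on why BRIEF-style descriptors are cheap to compute and store, the sketch below builds a generic binary descriptor from random pairwise voxel intensity comparisons and matches descriptors by Hamming distance. It illustrates the general BRIEF idea only; it is not the RRIEF descriptor and is unrelated to the code in the linked repository. The sampling pattern, function names, and parameters are assumptions.

```python
import numpy as np

def make_pairs(n_bits, radius, seed=0):
    """Random voxel offset pairs inside a cube of half-width `radius`."""
    rng = np.random.default_rng(seed)
    return rng.integers(-radius, radius + 1, size=(n_bits, 2, 3))

def brief3d(volume, center, pairs):
    """Binary descriptor: one bit per intensity comparison I(p) < I(q).

    Assumes `center` lies at least `radius` voxels from the volume border.
    """
    c = np.asarray(center)
    p = c + pairs[:, 0, :]
    q = c + pairs[:, 1, :]
    a = volume[p[:, 0], p[:, 1], p[:, 2]]
    b = volume[q[:, 0], q[:, 1], q[:, 2]]
    return a < b

def hamming(desc_a, desc_b):
    """Number of differing bits between two binary descriptors."""
    return int(np.count_nonzero(desc_a != desc_b))
```

Because each bit depends only on the ordering of two intensities, the descriptor is unchanged by a constant intensity offset, and comparing two descriptors reduces to counting differing bits.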

PEP: Parameter Ensembling by Perturbation

Oct 24, 2020

Abstract:Ensembling is now recognized as an effective approach for increasing the predictive performance and calibration of deep networks. We introduce a new approach, Parameter Ensembling by Perturbation (PEP), that constructs an ensemble of parameter values as random perturbations of the optimal parameter set from training by a Gaussian with a single variance parameter. The variance is chosen to maximize the log-likelihood of the ensemble average ($\mathbb{L}$) on the validation data set. Empirically, and perhaps surprisingly, $\mathbb{L}$ has a well-defined maximum as the variance grows from zero (which corresponds to the baseline model). Conveniently, the calibration level of predictions also tends to improve until the peak of $\mathbb{L}$ is reached. In most experiments, PEP provides a small improvement in performance, and, in some cases, a substantial improvement in empirical calibration. We show that this "PEP effect" (the gain in log-likelihood) is related to the mean curvature of the likelihood function and the empirical Fisher information. Experiments on ImageNet pre-trained networks including ResNet, DenseNet, and Inception showed improved calibration and likelihood. We further observed a mild improvement in classification accuracy on these networks. Experiments on classification benchmarks such as MNIST and CIFAR-10 showed improved calibration and likelihood, as well as the relationship between the PEP effect and overfitting; this demonstrates that PEP can be used to probe the level of overfitting that occurred during training. In general, no special training procedure or network architecture is needed, and in the case of pre-trained networks, no additional training is needed.
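
The procedure described in this abstract can be sketched on a toy linear softmax classifier: perturb the trained weights with Gaussian noise, average the predicted probabilities over the perturbed copies, and pick the noise level that maximizes validation log-likelihood. The linear model, the grid search over `sigmas`, and all function names are assumptions made for illustration, not the authors' implementation.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def pep_log_likelihood(W, X_val, y_val, sigma, n_members=10, seed=0):
    """Mean validation log-likelihood of the ensemble-averaged prediction.

    W: (n_features, n_classes) trained weights of a toy linear classifier.
    sigma: standard deviation of the Gaussian perturbation (0 = baseline).
    """
    rng = np.random.default_rng(seed)
    probs = np.zeros((X_val.shape[0], W.shape[1]))
    for _ in range(n_members):
        W_pert = W + sigma * rng.standard_normal(W.shape)
        probs += softmax(X_val @ W_pert)
    probs /= n_members
    picked = probs[np.arange(len(y_val)), y_val]
    return float(np.mean(np.log(picked + 1e-12)))

def select_sigma(W, X_val, y_val, sigmas):
    """Grid search: return the sigma with the highest validation score."""
    # The same seed is reused for every sigma, so only the scale varies.
    scores = [pep_log_likelihood(W, X_val, y_val, s) for s in sigmas]
    return sigmas[int(np.argmax(scores))], scores
```

Including `0.0` in `sigmas` makes the baseline model one of the candidates, so the selected ensemble never scores below it on the validation set.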

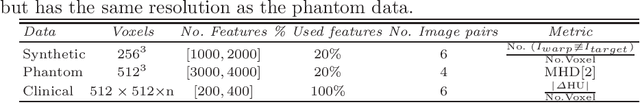

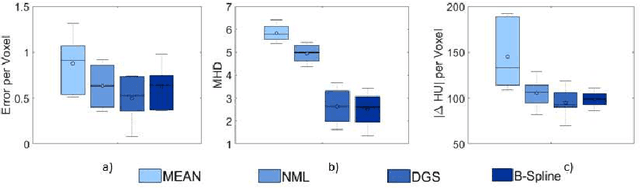

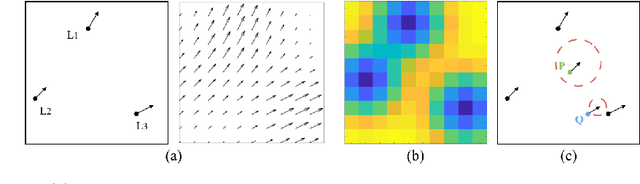

An Investigation of Feature-based Nonrigid Image Registration using Gaussian Process

Jan 12, 2020

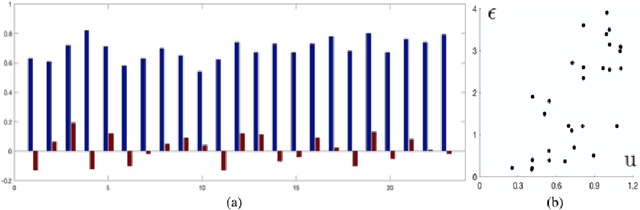

Abstract:For a wide range of clinical applications, such as adaptive treatment planning or intraoperative image update, feature-based deformable registration (FDR) approaches are widely employed because of their simplicity and low computational complexity. FDR algorithms estimate a dense displacement field by interpolating a sparse field, which is given by the established correspondence between selected features. In this paper, we consider the deformation field as a Gaussian Process (GP), and regard the selected features as prior information on the valid deformations. Using GP, we are able to estimate both the dense displacement field and a corresponding uncertainty map at once. Furthermore, we evaluated the performance of different hyperparameter settings for squared exponential kernels with synthetic, phantom, and clinical data. The quantitative comparison shows that GP-based interpolation performs on par with state-of-the-art B-spline interpolation. The greatest clinical benefit of GP-based interpolation is that it gives a reliable estimate of the mathematical uncertainty of the calculated dense displacement map.
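
A minimal sketch of the idea, assuming standard GP regression with a squared exponential kernel: sparse feature displacements are interpolated to query points, and the posterior variance serves as an uncertainty estimate. The hyperparameter defaults, the shared kernel across displacement components, and the function names are assumptions, not the authors' settings.

```python
import numpy as np

def sq_exp_kernel(A, B, length_scale=1.0, signal_var=1.0):
    """Squared exponential kernel between point sets A (N, D) and B (M, D)."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=-1)
    return signal_var * np.exp(-0.5 * d2 / length_scale**2)

def gp_interpolate(feat_pts, feat_disp, query_pts,
                   length_scale=1.0, signal_var=1.0, noise_var=1e-6):
    """GP posterior mean displacement and variance at the query points.

    feat_pts: (N, D) feature locations; feat_disp: (N, D) displacements.
    Each displacement component is modeled with the same kernel.
    """
    n = feat_pts.shape[0]
    K = sq_exp_kernel(feat_pts, feat_pts, length_scale, signal_var)
    K += noise_var * np.eye(n)
    Ks = sq_exp_kernel(query_pts, feat_pts, length_scale, signal_var)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, feat_disp))
    mean = Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = signal_var - (v**2).sum(axis=0)
    return mean, np.maximum(var, 0.0)  # clip tiny negatives from round-off
```

Near the features the variance is close to zero, and far from any feature it approaches `signal_var`, which is what makes the variance map usable as an uncertainty indicator.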

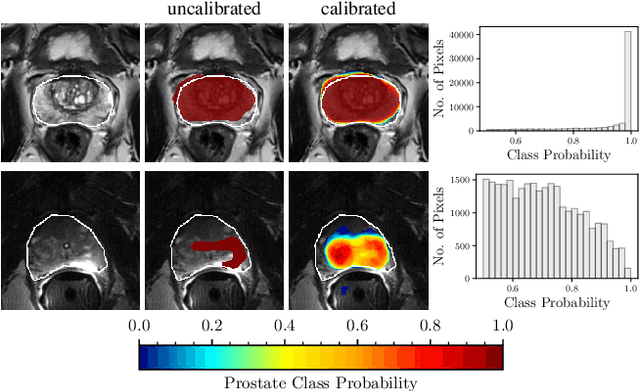

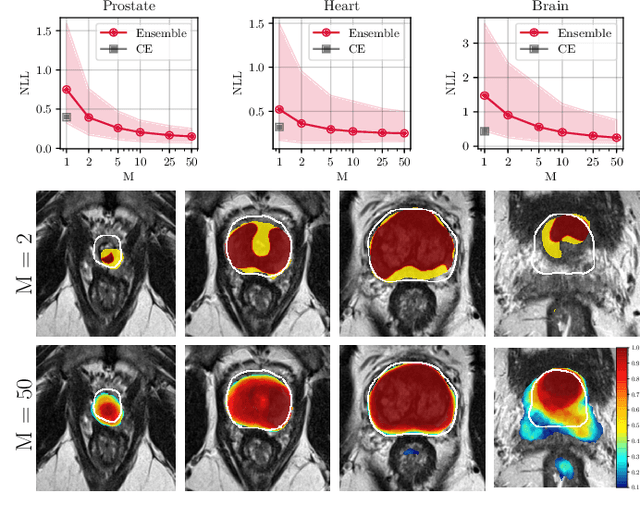

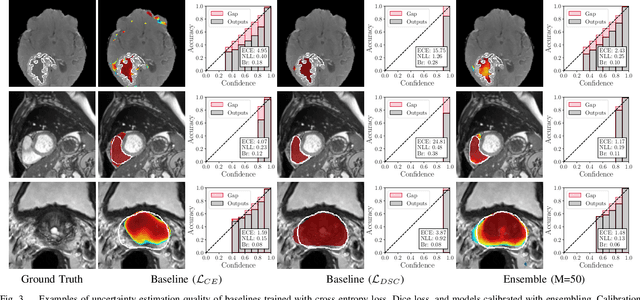

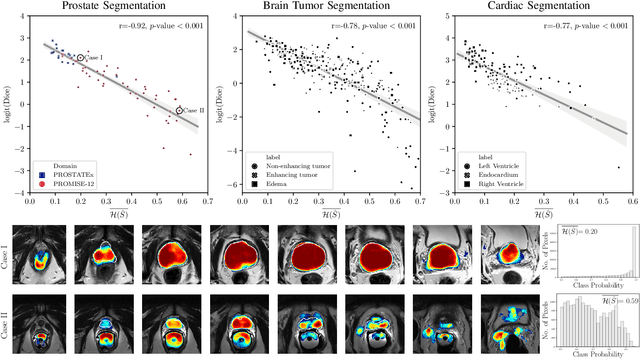

Confidence Calibration and Predictive Uncertainty Estimation for Deep Medical Image Segmentation

Nov 29, 2019

Abstract:Fully convolutional neural networks (FCNs), and in particular U-Nets, have achieved state-of-the-art results in semantic segmentation for numerous medical imaging applications. Moreover, batch normalization and Dice loss have been used successfully to stabilize and accelerate training. However, these networks are poorly calibrated, i.e., they tend to produce overconfident predictions in both correct and erroneous classifications, making them unreliable and hard to interpret. In this paper, we study predictive uncertainty estimation in FCNs for medical image segmentation. We make the following contributions: 1) We systematically compare cross entropy loss with Dice loss in terms of segmentation quality and uncertainty estimation of FCNs; 2) We propose model ensembling for confidence calibration of the FCNs trained with batch normalization and Dice loss; 3) We assess the ability of calibrated FCNs to predict segmentation quality of structures and detect out-of-distribution test examples. We conduct extensive experiments across three medical image segmentation applications of the brain, the heart, and the prostate to evaluate our contributions. The results of this study offer considerable insight into predictive uncertainty estimation and out-of-distribution detection in medical image segmentation and provide practical recipes for confidence calibration. Moreover, we consistently demonstrate that model ensembling improves confidence calibration.
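
To make "confidence calibration" concrete, the sketch below averages the per-pixel probabilities of several models and measures calibration with the expected calibration error (ECE). ECE is a commonly used metric chosen here as an assumption; the abstract does not say which calibration measures the authors report, and the function names are illustrative.

```python
import numpy as np

def ensemble_average(member_probs):
    """Average predicted probabilities over models (leading axis)."""
    return np.mean(np.asarray(member_probs, dtype=float), axis=0)

def expected_calibration_error(confidences, correct, n_bins=10):
    """ECE: bin-weighted gap between mean confidence and accuracy.

    confidences: predicted probability of the chosen class, in (0, 1].
    correct: 1 where the prediction was right, 0 otherwise.
    """
    conf = np.asarray(confidences, dtype=float)
    hit = np.asarray(correct, dtype=float)
    edges = np.linspace(0.0, 1.0, n_bins + 1)
    ece = 0.0
    for lo, hi in zip(edges[:-1], edges[1:]):
        in_bin = (conf > lo) & (conf <= hi)
        if in_bin.any():
            gap = abs(hit[in_bin].mean() - conf[in_bin].mean())
            ece += in_bin.mean() * gap  # weight by fraction of samples
    return float(ece)
```

An overconfident model shows up as bins where mean confidence exceeds accuracy, which raises the ECE.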

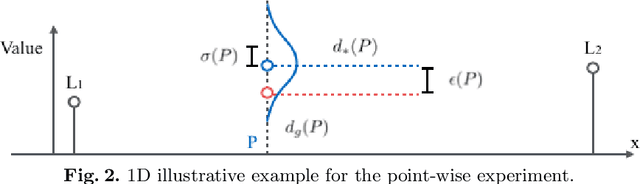

Pilot Study on Verifying the Monotonic Relationship between Error and Uncertainty in Deformable Registration for Neurosurgery

Aug 21, 2019

Abstract:In image-guided neurosurgery, deformable registration is currently not part of clinical routine. Although using it in practice is a goal for image-guided therapy, this goal is hampered because surgeons are wary of the less predictable deformable registration error. In preoperative-to-intraoperative registration, when surgeons notice a misaligned image pattern, they want to know whether it is a registration error or an actual deformation caused by tumor resection or retraction. Here, surgeons need a spatial distribution of error to help them make a better-informed decision, e.g., ignore locations with high error. However, such an error estimate is difficult to acquire. Alternatively, probabilistic image registration (PIR) methods give measures of registration uncertainty, which is a potential surrogate for assessing the quality of registration results. It is intuitive and widely believed that high uncertainty indicates a large error. Yet to the best of our knowledge, no such conclusion has been reported in the PIR literature. In this study, we look at one PIR method and give preliminary results showing that point-wise registration error and uncertainty are monotonically correlated.
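
One common way to quantify a monotonic relationship between two point-wise quantities is Spearman's rank correlation. The sketch below is an illustrative assumption about how such a check could be done; the abstract does not specify the statistic used in the study. This simple version ignores ties.

```python
import numpy as np

def spearman_rho(x, y):
    """Spearman rank correlation for 1D arrays without tied values."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # argsort of argsort converts values to 0-based ranks (no tie handling).
    rank_x = np.argsort(np.argsort(x)).astype(float)
    rank_y = np.argsort(np.argsort(y)).astype(float)
    return float(np.corrcoef(rank_x, rank_y)[0, 1])
```

A value near +1 for hypothetical per-point arrays of registration error and uncertainty would indicate that higher uncertainty consistently accompanies larger error, even if the relationship is not linear.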
