Yijie Li

BrainSegNet: A Novel Framework for Whole-Brain MRI Parcellation Enhanced by Large Models

Jan 14, 2026

Abstract:Whole-brain parcellation from MRI is a critical yet challenging task due to the complexity of subdividing the brain into numerous small, irregularly shaped regions. Traditionally, template-registration methods were used, but recent advances have shifted to deep learning for faster workflows. While large models like the Segment Anything Model (SAM) offer transferable feature representations, they are not tailored for the high precision required in brain parcellation. To address this, we propose BrainSegNet, a novel framework that adapts SAM for accurate whole-brain parcellation into 95 regions. We enhance SAM by integrating U-Net skip connections and specialized modules into its encoder and decoder, enabling fine-grained anatomical precision. Key components include a hybrid encoder combining U-Net skip connections with SAM's transformer blocks, a multi-scale attention decoder with pyramid pooling for varying-sized structures, and a boundary refinement module to sharpen edges. Experimental results on the Human Connectome Project (HCP) dataset demonstrate that BrainSegNet outperforms several state-of-the-art methods, achieving higher accuracy and robustness in complex, multi-label parcellation.

Cross-Population White Matter Atlas Creation for Concurrent Mapping of Brain Connections in Neonates and Adults with Diffusion MRI Tractography

Dec 23, 2025

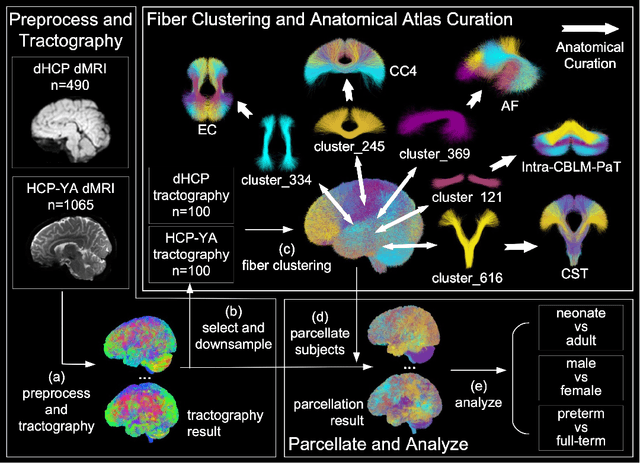

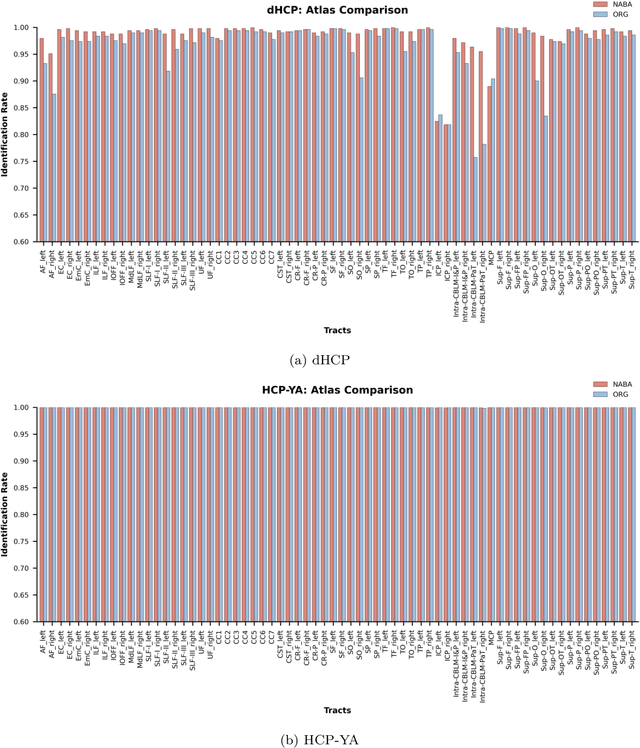

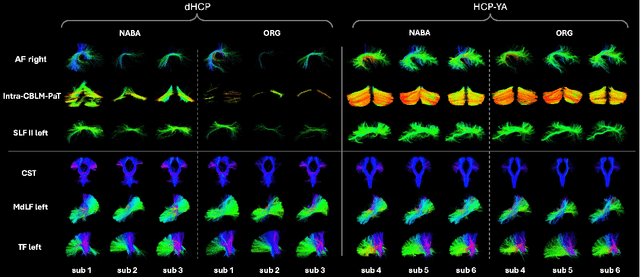

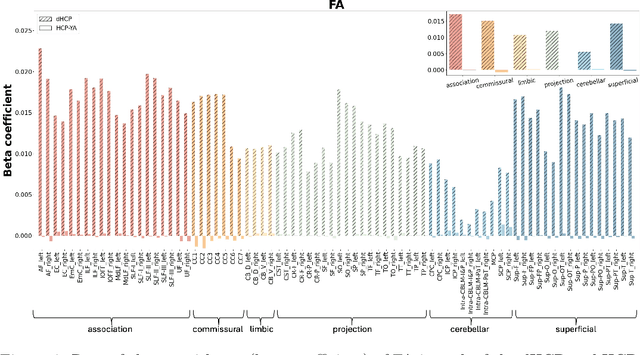

Abstract:Comparing white matter (WM) connections between adults and neonates using diffusion MRI (dMRI) can advance our understanding of typical brain development and potential biomarkers for neurological disorders. However, existing WM atlases are population-specific (adult or neonatal) and reside in separate spaces, preventing direct cross-population comparisons. A unified WM atlas spanning both neonates and adults is still lacking. In this study, we propose a neonatal/adult brain atlas (NABA), a WM tractography atlas built from dMRI data of both neonates and adults. NABA is constructed using a robust, data-driven fiber clustering pipeline, enabling group-wise WM atlasing across populations despite substantial anatomical variability. The atlas provides a standardized template for WM parcellation, allowing direct comparison of WM tracts between neonates and adults. Using NABA, we conduct four analyses: (1) evaluating the feasibility of joint WM mapping across populations, (2) characterizing WM development across neonatal ages relative to adults, (3) assessing sex-related differences in neonatal WM development, and (4) examining the effects of preterm birth. Our results show that NABA robustly identifies WM tracts in both populations. We observe rapid fractional anisotropy (FA) development in long-range association tracts, including the arcuate fasciculus and superior longitudinal fasciculus II, whereas intra-cerebellar tracts develop more slowly. Neonatal females exhibit faster overall FA development than males. Although preterm neonates show lower overall FA development rates, they demonstrate relatively higher FA growth in specific tracts, including the corticospinal tract, corona radiata-pontine pathway, and intracerebellar tracts. These findings demonstrate that NABA is a useful tool for investigating WM development across neonates and adults.
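The abstract above reports results in terms of fractional anisotropy (FA). As a reminder of what that quantity measures, here is a minimal sketch of the standard FA formula computed from the three eigenvalues of a diffusion tensor. The function name and the example eigenvalues are illustrative assumptions, not taken from the NABA pipeline.

```python
import math

def fractional_anisotropy(l1, l2, l3):
    """Standard FA from the three eigenvalues of a diffusion tensor.

    FA = sqrt(3/2) * ||lambda - mean|| / ||lambda||, ranging from 0
    (isotropic diffusion) to 1 (diffusion along a single axis).
    """
    mean = (l1 + l2 + l3) / 3.0
    num = (l1 - mean) ** 2 + (l2 - mean) ** 2 + (l3 - mean) ** 2
    den = l1 ** 2 + l2 ** 2 + l3 ** 2
    if den == 0.0:
        return 0.0  # no diffusion signal: treat as isotropic
    return math.sqrt(1.5 * num / den)

# Hypothetical eigenvalues (mm^2/s) for a strongly oriented voxel.
fa_example = fractional_anisotropy(1.7e-3, 0.3e-3, 0.3e-3)
```

Higher FA indicates more directionally coherent diffusion, which is why FA growth rates are commonly used as a proxy for white matter maturation.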

VI-MMRec: Similarity-Aware Training Cost-free Virtual User-Item Interactions for Multimodal Recommendation

Dec 09, 2025

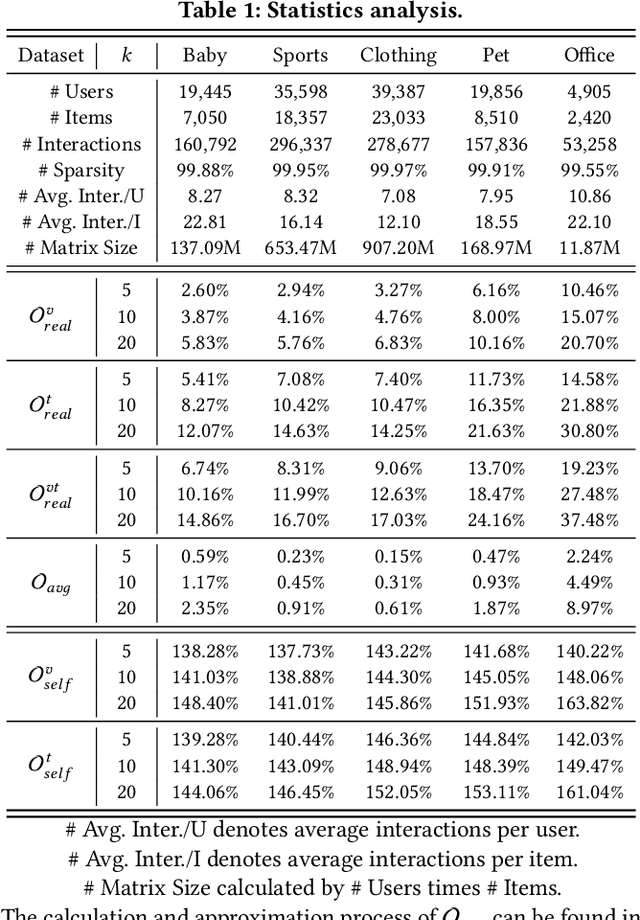

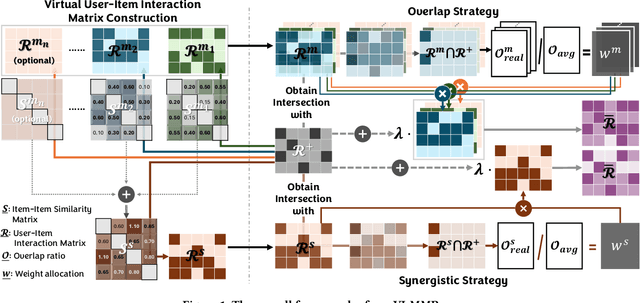

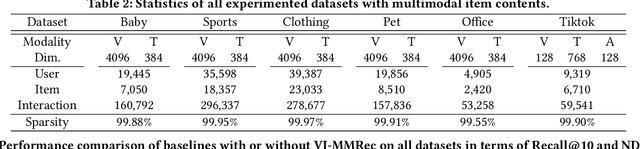

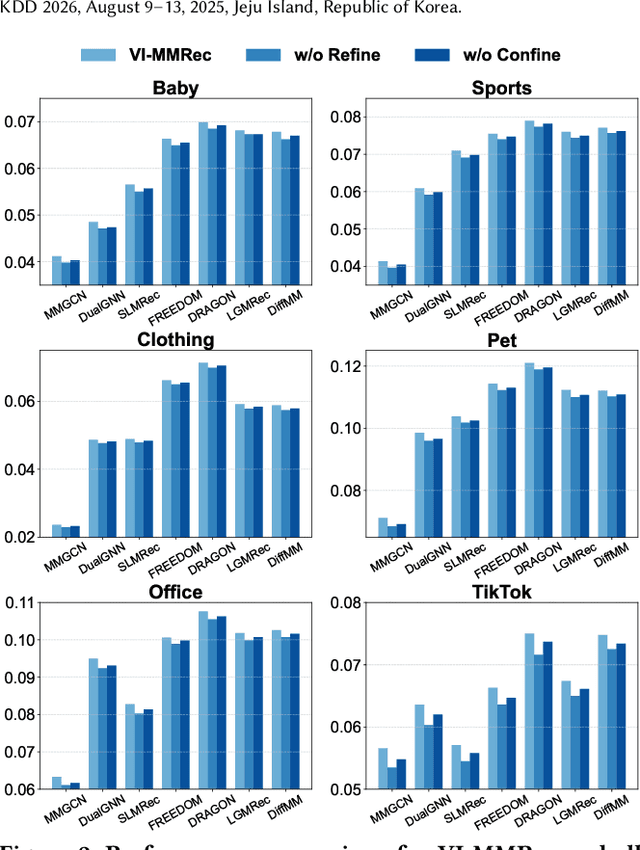

Abstract:Although existing multimodal recommendation models have shown promising performance, their effectiveness continues to be limited by the pervasive data sparsity problem. This problem arises because users typically interact with only a small subset of available items, leading existing models to arbitrarily treat unobserved items as negative samples. To this end, we propose VI-MMRec, a model-agnostic and training cost-free framework that enriches sparse user-item interactions via similarity-aware virtual user-item interactions. These virtual interactions are constructed based on modality-specific feature similarities of user-interacted items. Specifically, VI-MMRec introduces two different strategies: (1) Overlay, which independently aggregates modality-specific similarities to preserve modality-specific user preferences, and (2) Synergistic, which holistically fuses cross-modal similarities to capture complementary user preferences. To ensure high-quality augmentation, we design a statistically informed weight allocation mechanism that adaptively assigns weights to virtual user-item interactions based on dataset-specific modality relevance. As a plug-and-play framework, VI-MMRec seamlessly integrates with existing models to enhance their performance without modifying their core architecture. Its flexibility allows it to be easily incorporated into various existing models, maximizing performance with minimal implementation effort. Moreover, VI-MMRec introduces no additional overhead during training, making it significantly advantageous for practical deployment. Comprehensive experiments conducted on six real-world datasets using seven state-of-the-art multimodal recommendation models validate the effectiveness of our VI-MMRec.
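To make the idea of similarity-aware virtual interactions concrete, the following is a minimal single-modality sketch, not the VI-MMRec implementation. It assumes each item has one feature vector and scores every unobserved item by its mean cosine similarity to the items a user has interacted with. All names (`virtual_interactions`, `top_k`, the toy data) are hypothetical.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def virtual_interactions(user_items, item_features, top_k=1):
    """Propose virtual user-item pairs for one modality.

    For each user, every unobserved item is scored by its mean similarity
    to the user's interacted items; the top_k candidates are returned as
    (item, score) pairs. The score could serve as an interaction weight.
    """
    result = {}
    for user, seen in user_items.items():
        if not seen:
            result[user] = []
            continue
        scores = {}
        for cand, feat in item_features.items():
            if cand in seen:
                continue
            sims = [cosine(feat, item_features[i]) for i in seen]
            scores[cand] = sum(sims) / len(sims)
        ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
        result[user] = ranked[:top_k]
    return result

# Toy data: item "b" is visually close to "a", item "c" is not.
features = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0]}
virtual = virtual_interactions({"u1": {"a"}}, features, top_k=1)
```

Because the pairs are computed once from fixed features, this kind of augmentation happens before training, which is consistent with the abstract's claim of adding no training-time overhead.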

Learning and Editing Universal Graph Prompt Tuning via Reinforcement Learning

Dec 09, 2025

Abstract:Early graph prompt tuning approaches relied on task-specific designs for Graph Neural Networks (GNNs), limiting their adaptability across diverse pre-training strategies. In contrast, another promising line of research has investigated universal graph prompt tuning, which operates directly in the input graph's feature space and rests on a theoretical guarantee that it can achieve an effect equivalent to any prompting function, eliminating dependence on specific pre-training strategies. Recent works propose selective node-based graph prompt tuning to pursue more ideal prompts. However, we argue that selective node-based graph prompt tuning inevitably compromises the theoretical foundation of universal graph prompt tuning. In this paper, we strengthen the theoretical foundation of universal graph prompt tuning by introducing stricter constraints, demonstrating that adding prompts to all nodes is a necessary condition for achieving the universality of graph prompts. To this end, we propose a novel model and paradigm, Learning and Editing Universal GrAph Prompt Tuning (LEAP), which preserves the theoretical foundation of universal graph prompt tuning while pursuing more ideal prompts. Specifically, we first build the basic universal graph prompts to preserve the theoretical foundation and then employ actor-critic reinforcement learning to select nodes and edit prompts. Extensive experiments on graph- and node-level tasks across various pre-training strategies in both full-shot and few-shot scenarios show that LEAP consistently outperforms fine-tuning and other prompt-based approaches.
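The two-stage idea described in the abstract (prompts on all nodes first, then edits on selected nodes) can be illustrated with a small sketch. This is an assumption-laden toy, not the LEAP model: prompts are plain vectors added to node features, and the node selection is hard-coded where the paper uses an actor-critic policy. The function names are hypothetical.

```python
def add_universal_prompt(features, prompt):
    """Stage 1: add the same prompt vector to every node's features."""
    return [[x + p for x, p in zip(row, prompt)] for row in features]

def edit_prompts(prompted, selected_nodes, edit):
    """Stage 2: add an extra edit vector to the selected nodes only.

    In this sketch the selection is given; a learned policy would
    choose the nodes and the edit values.
    """
    chosen = set(selected_nodes)
    return [
        [x + e for x, e in zip(row, edit)] if idx in chosen else list(row)
        for idx, row in enumerate(prompted)
    ]

# Three nodes with two features each.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
prompted = add_universal_prompt(features, [0.5, -0.5])
edited = edit_prompts(prompted, selected_nodes=[2], edit=[0.25, 0.25])
```

Every node still carries the shared prompt after editing, which mirrors the abstract's point that prompting all nodes is kept as the base and node-level edits are layered on top.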

DDTracking: A Deep Generative Framework for Diffusion MRI Tractography with Streamline Local-Global Spatiotemporal Modeling

Aug 06, 2025

Abstract:This paper presents DDTracking, a novel deep generative framework for diffusion MRI tractography that formulates streamline propagation as a conditional denoising diffusion process. In DDTracking, we introduce a dual-pathway encoding network that jointly models local spatial encoding (capturing fine-scale structural details at each streamline point) and global temporal dependencies (ensuring long-range consistency across the entire streamline). Furthermore, we design a conditional diffusion model module, which leverages the learned local and global embeddings to predict streamline propagation orientations for tractography in an end-to-end trainable manner. We conduct a comprehensive evaluation across diverse, independently acquired dMRI datasets, including both synthetic and clinical data. Experiments on two well-established benchmarks with ground truth (ISMRM Challenge and TractoInferno) demonstrate that DDTracking largely outperforms current state-of-the-art tractography methods. Furthermore, our results highlight DDTracking's strong generalizability across heterogeneous datasets, spanning varying health conditions, age groups, imaging protocols, and scanner types. Collectively, DDTracking offers anatomically plausible and robust tractography, presenting a scalable, adaptable, and end-to-end learnable solution for broad dMRI applications. Code is available at: https://github.com/yishengpoxiao/DDtracking.git

mmBack: Clock-free Multi-Sensor Backscatter with Synchronous Acquisition and Multiplexing

Jul 02, 2025

Abstract:Backscatter tags provide a low-power solution for sensor applications, yet many real-world scenarios require multiple sensors, often of different types, for complex sensing tasks. However, existing designs support only a single sensor per tag, increasing spatial overhead. State-of-the-art approaches to multiplexing multiple sensor streams on a single tag rely on onboard clocks or multiple modulation chains, which add cost, enlarge form factor, and remain prone to timing drift that disrupts synchronization across sensors. We present mmBack, a low-power, clock-free backscatter tag that enables synchronous multi-sensor data acquisition and multiplexing over a single modulation chain. mmBack synchronizes sensor inputs in parallel using a shared reference signal extracted from ambient RF excitation, eliminating the need for an onboard timing source. To efficiently multiplex sensor data, mmBack uses a voltage-division scheme that encodes multiple sensor inputs as backscatter frequency shifts through a single oscillator and RF switch. At the receiver, mmBack uses a frequency tracking algorithm and a finite-state machine for accurate demultiplexing. mmBack's ASIC design consumes 25.56 µW, while its prototype supports 5 concurrent sensor streams with bandwidths of up to 5 kHz and 3 concurrent sensor streams with bandwidths of up to 18 kHz. Evaluation shows that mmBack achieves an average SNR surpassing 15 dB in signal reconstruction.

MDVT: Enhancing Multimodal Recommendation with Model-Agnostic Multimodal-Driven Virtual Triplets

May 22, 2025

Abstract:The data sparsity problem significantly hinders the performance of recommender systems, as traditional models rely on limited historical interactions to learn user preferences and item properties. While incorporating multimodal information can explicitly represent these preferences and properties, existing works often use it only as side information, failing to fully leverage its potential. In this paper, we propose MDVT, a model-agnostic approach that constructs multimodal-driven virtual triplets to provide valuable supervision signals, effectively mitigating the data sparsity problem in multimodal recommendation systems. To ensure high-quality virtual triplets, we introduce three tailored warm-up threshold strategies: static, dynamic, and hybrid. The static warm-up threshold strategy exhaustively searches for the optimal number of warm-up epochs but is time-consuming and computationally intensive. The dynamic warm-up threshold strategy adjusts the warm-up period based on loss trends, improving efficiency but potentially missing optimal performance. The hybrid strategy combines both, using the dynamic strategy to find the approximate optimal number of warm-up epochs and then refining it with the static strategy in a narrow hyper-parameter space. Once the warm-up threshold is satisfied, the virtual triplets are used for joint model optimization by our enhanced pair-wise loss function without causing significant gradient skew. Extensive experiments on multiple real-world datasets demonstrate that integrating MDVT into advanced multimodal recommendation models effectively alleviates the data sparsity problem and improves recommendation performance, particularly in sparse data scenarios.
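The dynamic warm-up strategy is described only as adjusting the warm-up period "based on loss trends". One plausible reading, sketched below purely as an assumption, is to end warm-up once the average relative loss improvement over a recent window falls below a tolerance. The function name, `window`, and `tol` are hypothetical and are not MDVT's actual criterion.

```python
def warmup_finished(losses, window=3, tol=0.01):
    """Return True once the loss curve has flattened.

    Looks at the last `window` epoch-to-epoch transitions and checks
    whether the mean relative loss drop is below `tol`.
    """
    if len(losses) <= window:
        return False  # not enough history to judge the trend
    recent = losses[-(window + 1):]
    drops = [
        (recent[i] - recent[i + 1]) / abs(recent[i])
        for i in range(window)
        if recent[i] != 0
    ]
    return bool(drops) and sum(drops) / len(drops) < tol

# Loss still halving every epoch: keep warming up.
still_warming = warmup_finished([1.0, 0.5, 0.25, 0.125])
# Loss has plateaued near 0.5: virtual triplets could be switched on.
plateaued = warmup_finished([1.0, 0.5, 0.5, 0.499, 0.499])
```

A hybrid variant in the spirit of the abstract would take the epoch at which this check first fires and then search a few epochs on either side of it.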

Segregation and Context Aggregation Network for Real-time Cloud Segmentation

Apr 19, 2025

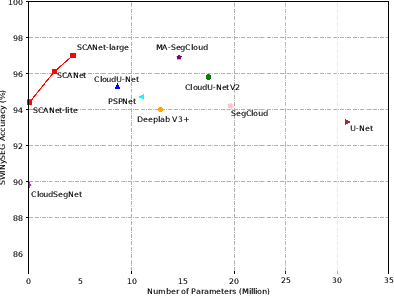

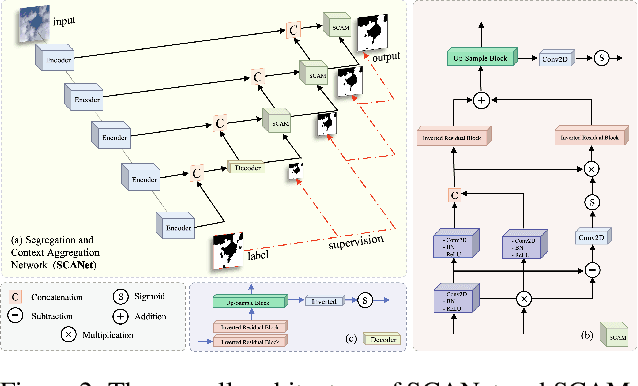

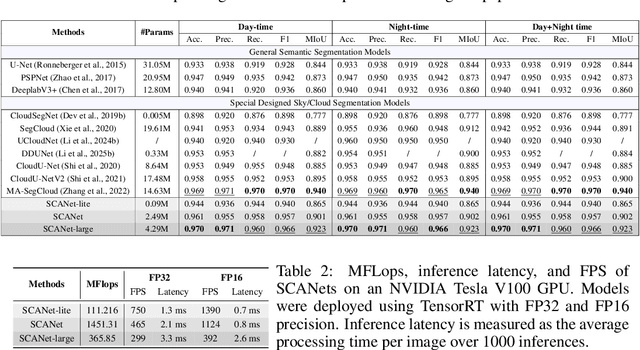

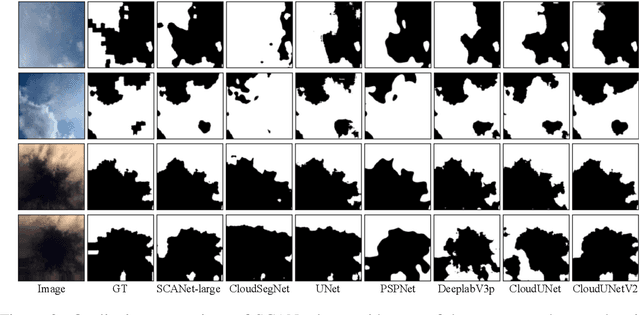

Abstract:Cloud segmentation from intensity images is a pivotal task in atmospheric science and computer vision, aiding weather forecasting and climate analysis. Ground-based sky/cloud segmentation extracts clouds from images for further feature analysis. Existing methods struggle to balance segmentation accuracy and computational efficiency, limiting real-world deployment on edge devices. We therefore introduce SCANet, a novel lightweight cloud segmentation model featuring a Segregation and Context Aggregation Module (SCAM), which refines rough segmentation maps into weighted sky and cloud features that are processed separately. SCANet achieves state-of-the-art performance while drastically reducing computational complexity. SCANet-large (4.29M parameters) achieves accuracy comparable to state-of-the-art methods with 70.9% fewer parameters. Meanwhile, SCANet-lite (90K parameters) delivers 1390 fps in FP16, surpassing real-time standards. Additionally, we propose an efficient pre-training strategy that enhances performance even without ImageNet pre-training.
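The figures quoted in the abstract can be put in perspective with some simple arithmetic. The sketch below only derives quantities from the stated numbers (4.29M, 90K, 70.9%, 1390 fps). The implied baseline size assumes the 70.9% reduction is measured against a single reference model, which the abstract does not specify.

```python
def frame_time_ms(fps):
    """Per-frame latency budget in milliseconds for a given throughput."""
    return 1000.0 / fps

def implied_baseline_params(params_m, reduction):
    """Size of a reference model, assuming `params_m` is `reduction` smaller."""
    return params_m / (1.0 - reduction)

lite_latency_ms = frame_time_ms(1390)                  # about 0.72 ms per frame
size_ratio = 4.29e6 / 90e3                             # large is about 47.7x lite
baseline_m = implied_baseline_params(4.29, 0.709)      # about 14.7M (assumed single baseline)
```

At roughly 0.72 ms per frame, SCANet-lite sits well under the 33 ms budget of a 30 fps real-time target.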

A Novel Streamline-based diffusion MRI Tractography Registration Method with Probabilistic Keypoint Detection

Mar 04, 2025

Abstract:Registration of diffusion MRI tractography is an essential step for analyzing group similarities and variations in the brain's white matter (WM). Streamline-based registration approaches can leverage the 3D geometric information of fiber pathways to enable spatial alignment after registration. Existing methods usually rely on the optimization of the spatial distances to identify the optimal transformation. However, such methods overlook point connectivity patterns within the streamline itself, limiting their ability to identify anatomical correspondences across tractography datasets. In this work, we propose a novel unsupervised approach using deep learning to perform streamline-based dMRI tractography registration. The overall idea is to identify corresponding keypoint pairs across subjects for spatial alignment of tractography datasets. We model tractography as point clouds to leverage the graph connectivity along streamlines. We propose a novel keypoint detection method for streamlines, framed as a probabilistic classification task to identify anatomically consistent correspondences across unstructured streamline sets. In the experiments, we compare against several existing methods and show highly effective and efficient tractography registration performance.

RAINER: A Robust Ensemble Learning Grid Search-Tuned Framework for Rainfall Patterns Prediction

Jan 28, 2025

Abstract:Rainfall prediction remains a persistent challenge due to the highly nonlinear and complex nature of meteorological data. Existing approaches lack systematic utilization of grid search for optimal hyperparameter tuning, relying instead on heuristic or manual selection, which frequently leads to sub-optimal results. Additionally, these methods rarely incorporate newly constructed meteorological features such as differences between temperature and humidity to capture critical weather dynamics. Furthermore, there is a lack of systematic evaluation of ensemble learning techniques and limited exploration of diverse advanced models introduced in the past one or two years. To address these limitations, we propose a robust ensemble learning grid search-tuned framework (RAINER) for rainfall prediction. RAINER incorporates a comprehensive feature engineering pipeline, including outlier removal, imputation of missing values, feature reconstruction, and dimensionality reduction via Principal Component Analysis (PCA). The framework integrates novel meteorological features to capture dynamic weather patterns and systematically evaluates non-learning mathematical-based methods and a variety of machine learning models, from weak classifiers to advanced neural networks such as Kolmogorov-Arnold Networks (KAN). By leveraging grid search for hyperparameter tuning and ensemble voting techniques, RAINER achieves promising results on real-world datasets.
