Jinjiang You

Segregation and Context Aggregation Network for Real-time Cloud Segmentation

Apr 19, 2025

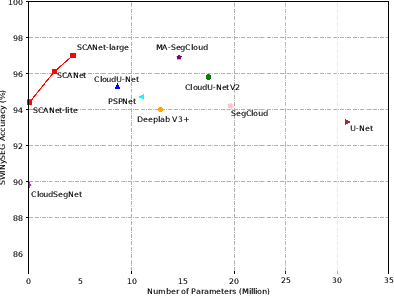

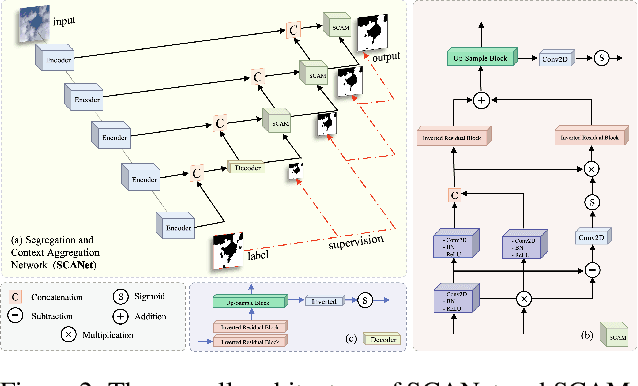

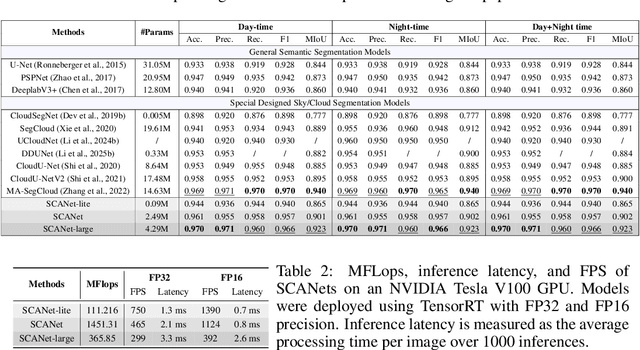

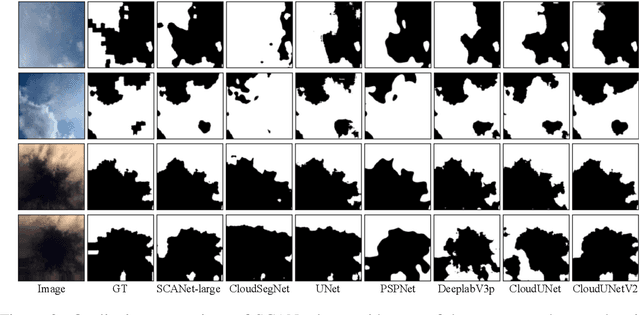

Abstract: Cloud segmentation from intensity images is a pivotal task in atmospheric science and computer vision, aiding weather forecasting and climate analysis. Ground-based sky/cloud segmentation extracts clouds from images for further feature analysis. Existing methods struggle to balance segmentation accuracy and computational efficiency, limiting real-world deployment on edge devices. We introduce SCANet, a novel lightweight cloud segmentation model featuring a Segregation and Context Aggregation Module (SCAM), which refines rough segmentation maps into weighted sky and cloud features that are processed separately. SCANet achieves state-of-the-art performance while drastically reducing computational complexity. SCANet-large (4.29M parameters) achieves accuracy comparable to state-of-the-art methods with 70.9% fewer parameters. Meanwhile, SCANet-lite (90K parameters) delivers 1390 fps in FP16, surpassing real-time standards. Additionally, we propose an efficient pre-training strategy that enhances performance even without ImageNet pre-training.
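The abstract does not say how SCAM weights the sky and cloud features, so the following is only a minimal sketch of one plausible reading: a rough cloud-probability map and its complement are used as elementwise weights on a shared feature map. The function name `segregate`, the single-channel nested-list layout, and the toy values are all assumptions made for illustration, not the authors' implementation.

```python
def segregate(features, cloud_prob):
    """Split one feature map into cloud-weighted and sky-weighted copies.

    features:   H x W nested list of floats (hypothetical single-channel map)
    cloud_prob: H x W nested list of rough cloud probabilities in [0, 1]
    """
    # Cloud branch: features scaled by the rough cloud probability.
    cloud_branch = [
        [f * p for f, p in zip(f_row, p_row)]
        for f_row, p_row in zip(features, cloud_prob)
    ]
    # Sky branch: features scaled by the complementary probability (assumed).
    sky_branch = [
        [f * (1.0 - p) for f, p in zip(f_row, p_row)]
        for f_row, p_row in zip(features, cloud_prob)
    ]
    return cloud_branch, sky_branch


# Toy 2 x 2 example with made-up values.
features = [[2.0, 4.0], [6.0, 8.0]]
cloud_prob = [[1.0, 0.5], [0.25, 0.0]]
cloud, sky = segregate(features, cloud_prob)
```

Under this assumption the two branches sum back to the original feature map, so no information is dropped before each branch is processed separately.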

TrafficKAN-GCN: Graph Convolutional-based Kolmogorov-Arnold Network for Traffic Flow Optimization

Mar 05, 2025

Abstract: Urban traffic optimization is critical for improving transportation efficiency and alleviating congestion, particularly in large-scale dynamic networks. Traditional methods, such as Dijkstra's and Floyd's algorithms, provide effective solutions in static settings, but they struggle with the spatial-temporal complexity of real-world traffic flows. In this work, we propose TrafficKAN-GCN, a hybrid deep learning framework combining Kolmogorov-Arnold Networks (KAN) with Graph Convolutional Networks (GCN), designed to enhance urban traffic flow optimization. By integrating KAN's adaptive nonlinear function approximation with GCN's spatial graph learning capabilities, TrafficKAN-GCN captures both complex traffic patterns and topological dependencies. We evaluate the proposed framework using real-world traffic data from the Baltimore Metropolitan area. Compared with baseline models such as MLP-GCN, standard GCN, and Transformer-based approaches, TrafficKAN-GCN achieves competitive prediction accuracy while demonstrating improved robustness in handling noisy and irregular traffic data. Our experiments further highlight the framework's ability to redistribute traffic flow, mitigate congestion, and adapt to disruptive events, such as the Francis Scott Key Bridge collapse. This study contributes to the growing body of work on hybrid graph learning for intelligent transportation systems, highlighting the potential of combining KAN and GCN for real-time traffic optimization. Future work will focus on reducing computational overhead and integrating Transformer-based temporal modeling for enhanced long-term traffic prediction. The proposed TrafficKAN-GCN framework offers a promising direction for data-driven urban mobility management, balancing predictive accuracy, robustness, and computational efficiency.
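To make the "spatial graph learning" part concrete, here is a minimal sketch of the neighborhood-aggregation step used by a standard graph convolution layer, with self-loops and symmetric degree normalization. It deliberately omits the learnable transformation that would follow (an MLP in MLP-GCN, or a KAN in the proposed model), and the three-intersection road graph and flow values are hypothetical. It is not the TrafficKAN-GCN implementation.

```python
import math


def gcn_propagate(adj, feats):
    """One GCN aggregation step: D^{-1/2} (A + I) D^{-1/2} X.

    adj:   n x n symmetric adjacency matrix (nested lists of 0/1)
    feats: n x d node feature matrix (nested lists of floats)
    """
    n = len(adj)
    # Add self-loops so each node keeps part of its own signal.
    a_hat = [
        [adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
        for i in range(n)
    ]
    # Degree of each node in the self-looped graph.
    inv_sqrt_deg = [1.0 / math.sqrt(sum(row)) for row in a_hat]

    out = []
    for i in range(n):
        row = [0.0] * len(feats[0])
        for j in range(n):
            weight = a_hat[i][j] * inv_sqrt_deg[i] * inv_sqrt_deg[j]
            if weight == 0.0:
                continue  # nodes i and j are not connected
            for k, x in enumerate(feats[j]):
                row[k] += weight * x
        out.append(row)
    return out


# Hypothetical road graph: three intersections in a line, 0 - 1 - 2,
# each with a single feature (e.g. observed flow).
adj = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
flows = [[10.0], [20.0], [30.0]]
smoothed = gcn_propagate(adj, flows)
```

Each output row mixes a node's own flow with that of its direct neighbors, which is how topological dependencies enter the model before any nonlinear function is applied.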

RAINER: A Robust Ensemble Learning Grid Search-Tuned Framework for Rainfall Patterns Prediction

Jan 28, 2025

Abstract: Rainfall prediction remains a persistent challenge due to the highly nonlinear and complex nature of meteorological data. Existing approaches lack systematic utilization of grid search for optimal hyperparameter tuning, relying instead on heuristic or manual selection, which frequently leads to sub-optimal results. Additionally, these methods rarely incorporate newly constructed meteorological features, such as differences between temperature and humidity, to capture critical weather dynamics. Furthermore, there is a lack of systematic evaluation of ensemble learning techniques and limited exploration of diverse advanced models introduced in the past one to two years. To address these limitations, we propose a robust ensemble learning grid search-tuned framework (RAINER) for rainfall prediction. RAINER incorporates a comprehensive feature engineering pipeline, including outlier removal, imputation of missing values, feature reconstruction, and dimensionality reduction via Principal Component Analysis (PCA). The framework integrates novel meteorological features to capture dynamic weather patterns and systematically evaluates non-learning mathematical-based methods and a variety of machine learning models, from weak classifiers to advanced neural networks such as Kolmogorov-Arnold Networks (KAN). By leveraging grid search for hyperparameter tuning and ensemble voting techniques, RAINER achieves promising results on real-world datasets.
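The three ingredients named in the abstract (a constructed temperature/humidity difference feature, grid search, and ensemble voting) can be sketched in a few lines of plain Python. Everything below is illustrative: the toy records, the threshold rule standing in for a real classifier, and the names `derived_feature`, `grid_search`, and `majority_vote` are assumptions, not part of RAINER.

```python
from collections import Counter
from itertools import product

# Hypothetical toy records: (temperature in C, relative humidity in %, rained?)
DATA = [
    (30.0, 40.0, 0),
    (28.0, 45.0, 0),
    (22.0, 85.0, 1),
    (20.0, 90.0, 1),
    (25.0, 70.0, 1),
    (27.0, 50.0, 0),
]


def derived_feature(temp, humidity):
    """Constructed feature: humidity minus temperature (sign is an assumption)."""
    return humidity - temp


def rule(threshold, temp, humidity):
    """Stand-in classifier: predict rain when the derived feature is large."""
    return 1 if derived_feature(temp, humidity) > threshold else 0


def accuracy(predict):
    hits = sum(predict(t, h) == y for t, h, y in DATA)
    return hits / len(DATA)


def grid_search(grid):
    """Exhaustively score every parameter combination; keep the first best."""
    best_params, best_score = None, -1.0
    keys = sorted(grid)
    for values in product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = accuracy(lambda t, h: rule(params["threshold"], t, h))
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def majority_vote(predictions):
    """Hard voting: return the most common label among the voters."""
    return Counter(predictions).most_common(1)[0][0]


best, score = grid_search({"threshold": [0.0, 30.0, 60.0]})

# Ensemble of three rules voting on one new (hypothetical) observation.
votes = [rule(th, 25.0, 70.0) for th in (0.0, 30.0, 60.0)]
ensemble_label = majority_vote(votes)
```

A real pipeline would score candidates with cross-validation on held-out data rather than on the training records, and would vote across genuinely different model families.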

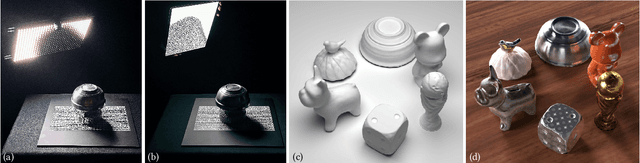

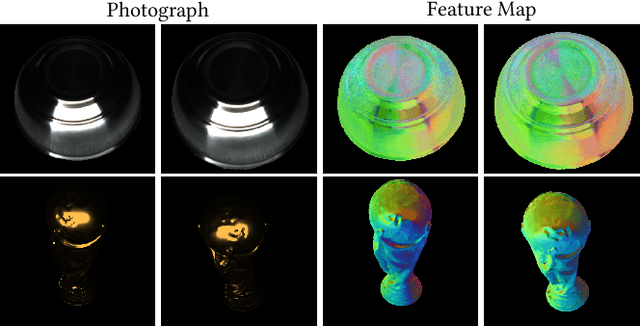

Learning Photometric Feature Transform for Free-form Object Scan

Aug 07, 2023

Abstract: We propose a novel framework to automatically learn to aggregate and transform photometric measurements from multiple unstructured views into spatially distinctive and view-invariant low-level features, which are fed to a multi-view stereo method to enhance 3D reconstruction. The illumination conditions during acquisition and the feature transform are jointly trained on a large amount of synthetic data. We further build a system to reconstruct the geometry and anisotropic reflectance of a variety of challenging objects from hand-held scans. The effectiveness of the system is demonstrated with a lightweight prototype, consisting of a camera and an array of LEDs, as well as an off-the-shelf tablet. Our results are validated against reconstructions from a professional 3D scanner and photographs, and compare favorably with state-of-the-art techniques.