Qifan Zhang

Improving LLMs' Generalized Reasoning Abilities by Graph Problems

Jul 23, 2025

Abstract:Large Language Models (LLMs) have made remarkable strides in reasoning tasks, yet their performance often falters on novel and complex problems. Domain-specific continued pretraining (CPT) methods, such as those tailored for mathematical reasoning, have shown promise but lack transferability to broader reasoning tasks. In this work, we pioneer the use of Graph Problem Reasoning (GPR) to enhance the general reasoning capabilities of LLMs. GPR tasks, spanning pathfinding, network analysis, numerical computation, and topological reasoning, require sophisticated logical and relational reasoning, making them ideal for teaching diverse reasoning patterns. To achieve this, we introduce GraphPile, the first large-scale corpus specifically designed for CPT using GPR data. Spanning 10.9 billion tokens across 23 graph tasks, the dataset includes chain-of-thought, program-of-thought, trace of execution, and real-world graph data. Using GraphPile, we train GraphMind on popular base models Llama 3 and 3.1, as well as Gemma 2, achieving up to 4.9 percent higher accuracy in mathematical reasoning and up to 21.2 percent improvement in non-mathematical reasoning tasks such as logical and commonsense reasoning. By being the first to harness GPR for enhancing reasoning patterns and introducing the first dataset of its kind, our work bridges the gap between domain-specific pretraining and universal reasoning capabilities, advancing the adaptability and robustness of LLMs.

R$^2$: A LLM Based Novel-to-Screenplay Generation Framework with Causal Plot Graphs

Mar 19, 2025

Abstract:Automatically adapting novels into screenplays is important for the TV, film, or opera industries to promote products at low cost. The strong performance of large language models (LLMs) in long-text generation motivates us to propose an LLM-based framework, Reader-Rewriter (R$^2$), for this task. However, there are two fundamental challenges. First, LLM hallucinations may cause inconsistent plot extraction and screenplay generation. Second, the causality-embedded plot lines must be effectively extracted for coherent rewriting. Therefore, two corresponding tactics are proposed: 1) a hallucination-aware refinement method (HAR) to iteratively discover and eliminate the effects of hallucinations; and 2) a causal plot-graph construction method (CPC) based on a greedy cycle-breaking algorithm to efficiently construct plot lines with event causalities. Building on these techniques, R$^2$ uses two modules to mimic the human screenplay rewriting process: the Reader module adopts a sliding window and CPC to build the causal plot graphs, while the Rewriter module first generates the scene outlines based on the graphs and then the screenplays. HAR is integrated into both modules for accurate LLM inference. Experimental results demonstrate the superiority of R$^2$, which substantially outperforms three existing approaches (51.3%, 22.6%, and 57.1% absolute increases) in pairwise comparison at the overall win rate for GPT-4o.
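The abstract does not spell out how the greedy cycle-breaking step in CPC works, so the following is only a minimal sketch of one plausible greedy scheme, not the authors' algorithm. It assumes each candidate causal link carries a hypothetical strength score, adds links from strongest to weakest, and skips any link that would close a cycle. The function names, the `strength` field, and the toy events are all illustrative assumptions.

```python
from collections import defaultdict


def reachable(adj, src, dst):
    """Return True if dst can be reached from src by following edges in adj."""
    stack, seen = [src], {src}
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        for nxt in adj[node]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return False


def break_cycles_greedily(edges):
    """Keep the strongest causal links that still form an acyclic graph.

    edges: list of (cause, effect, strength) tuples (strength is an assumed score).
    Returns the kept edges in the order they were accepted.
    """
    adj = defaultdict(set)
    kept = []
    for cause, effect, strength in sorted(edges, key=lambda e: -e[2]):
        # Adding cause -> effect closes a cycle exactly when cause is
        # already reachable from effect (or the edge is a self-loop).
        if cause == effect or reachable(adj, effect, cause):
            continue
        adj[cause].add(effect)
        kept.append((cause, effect, strength))
    return kept


# Toy plot events; the weak back-edge would make the plot graph cyclic.
plot_edges = [
    ("storm", "shipwreck", 0.9),
    ("shipwreck", "rescue", 0.8),
    ("rescue", "storm", 0.2),
]
dag_edges = break_cycles_greedily(plot_edges)
```

Processing the strongest links first means that, whenever a cycle must be broken, the link dropped is the weakest one still pending, which is one simple way to preserve the most confident causal relations.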

GCoder: Improving Large Language Model for Generalized Graph Problem Solving

Oct 24, 2024

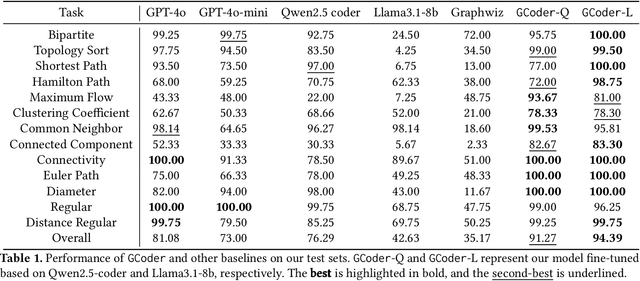

Abstract:Large Language Models (LLMs) have demonstrated strong reasoning abilities, making them suitable for complex tasks such as graph computation. The traditional reasoning-steps paradigm for graph problems is hindered by unverifiable steps, limited long-term reasoning, and poor generalization to graph variations. To overcome these limitations, we introduce GCoder, a code-based LLM designed to enhance problem-solving in generalized graph computation problems. Our method involves constructing an extensive training dataset, GraphWild, featuring diverse graph formats and algorithms. We employ a multi-stage training process, including Supervised Fine-Tuning (SFT) and Reinforcement Learning from Compiler Feedback (RLCF), to refine model capabilities. For unseen tasks, a hybrid retrieval technique is used to augment performance. Experiments demonstrate that GCoder outperforms GPT-4o, with an average accuracy improvement of 16.42% across various graph computational problems. Furthermore, GCoder efficiently manages large-scale graphs with millions of nodes and diverse input formats, overcoming the limitations of previous models focused on the reasoning-steps paradigm. This advancement paves the way for more intuitive and effective graph problem-solving using LLMs. Code and data are available at https://github.com/Bklight999/WWW25-GCoder/tree/master.

Continual Distillation Learning

Jul 18, 2024

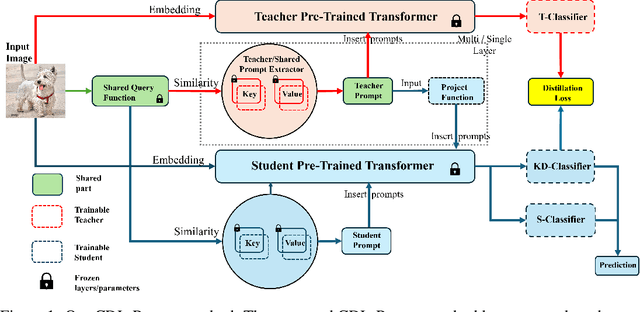

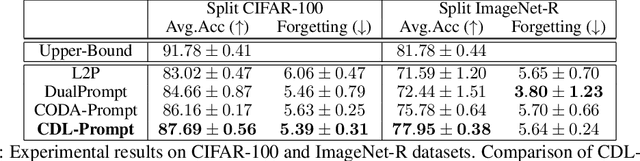

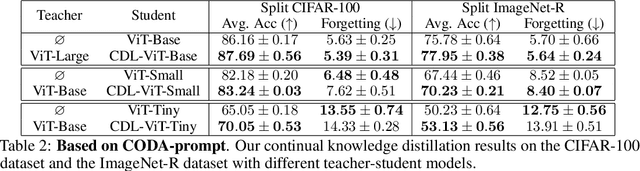

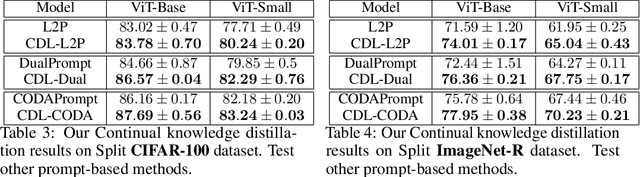

Abstract:We study the problem of Continual Distillation Learning (CDL) that considers Knowledge Distillation (KD) in the Continual Learning (CL) setup. A teacher model and a student model need to learn a sequence of tasks, and the knowledge of the teacher model will be distilled to the student to improve the student model. We introduce a novel method named CDL-Prompt that utilizes prompt-based continual learning models to build the teacher-student model. We investigate how to utilize the prompts of the teacher model in the student model for knowledge distillation, and propose an attention-based prompt mapping scheme to use the teacher prompts for the student. We demonstrate that our method can be applied to different prompt-based continual learning models such as L2P, DualPrompt and CODA-Prompt to improve their performance using powerful teacher models. Although recent CL methods focus on prompt learning, we show that our method can be utilized to build efficient CL models using prompt-based knowledge distillation.

GraphArena: Benchmarking Large Language Models on Graph Computational Problems

Jun 29, 2024

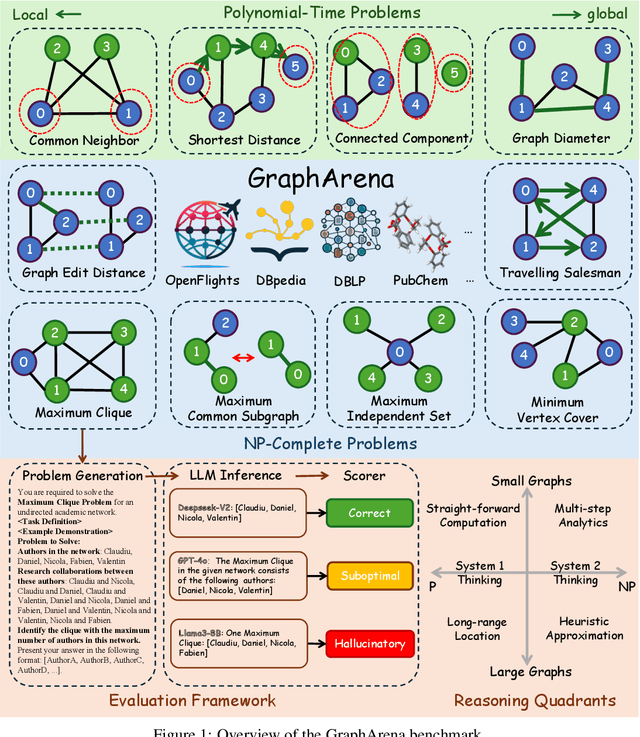

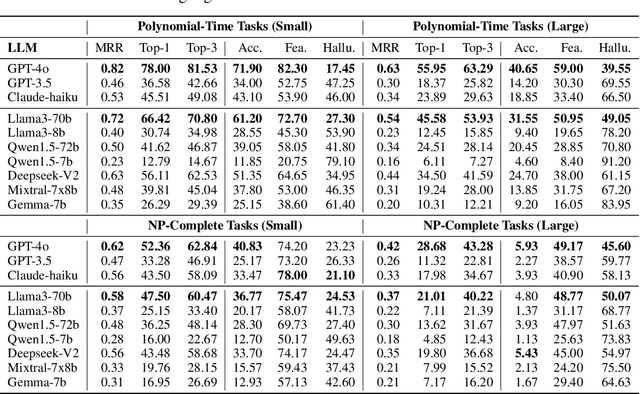

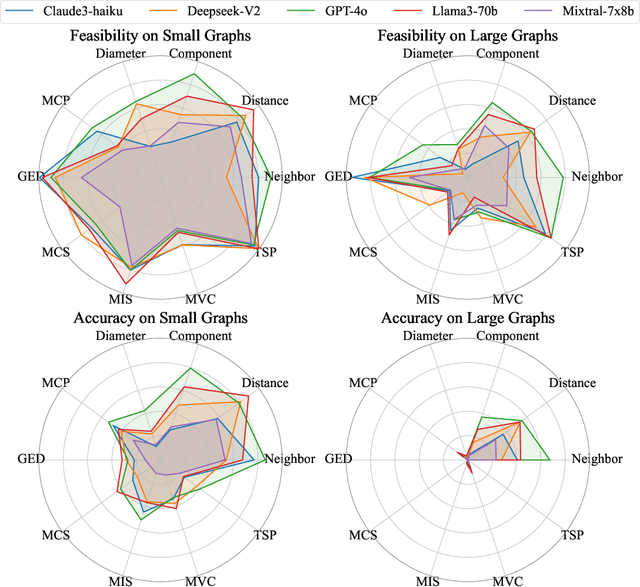

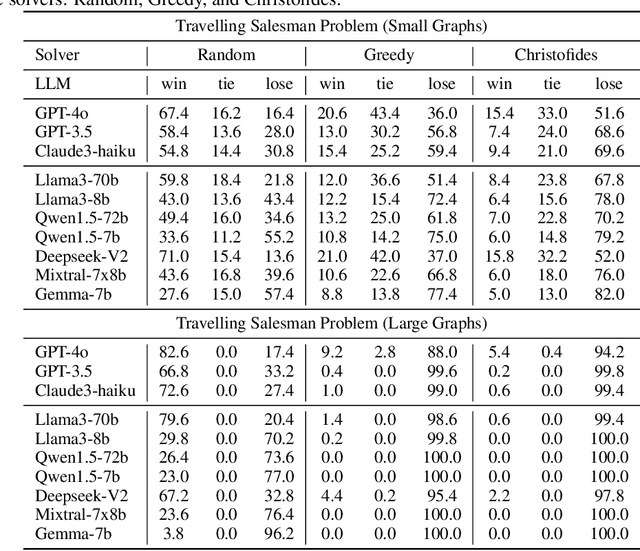

Abstract:The "arms race" of Large Language Models (LLMs) demands novel, challenging, and diverse benchmarks to faithfully examine their progress. We introduce GraphArena, a benchmarking tool designed to evaluate LLMs on graph computational problems using million-scale real-world graphs from diverse scenarios such as knowledge graphs, social networks, and molecular structures. GraphArena offers a suite of 10 computational tasks, encompassing four polynomial-time (e.g., Shortest Distance) and six NP-complete challenges (e.g., Travelling Salesman Problem). It features a rigorous evaluation framework that classifies LLM outputs as correct, suboptimal (feasible but not optimal), or hallucinatory (properly formatted but infeasible). Evaluation of 10 leading LLMs, including GPT-4o and LLaMA3-70B-Instruct, reveals that even top-performing models struggle with larger, more complex graph problems and exhibit hallucination issues. Despite the application of strategies such as chain-of-thought prompting, these issues remain unresolved. GraphArena contributes a valuable supplement to the existing LLM benchmarks and is open-sourced at https://github.com/squareRoot3/GraphArena.
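The three-way classification described above (correct, suboptimal, hallucinatory) can be illustrated on a shortest-path task. This is a minimal sketch under stated assumptions, not GraphArena's actual evaluation code: the graph is unweighted and stored as a hypothetical adjacency dictionary, the model's answer is assumed to be already parsed into a list of nodes, and the function names are illustrative.

```python
from collections import deque


def shortest_distance(adj, src, dst):
    """Breadth-first search; returns the hop count from src to dst, or None."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            return dist[node]
        for nxt in adj.get(node, ()):
            if nxt not in dist:
                dist[nxt] = dist[node] + 1
                queue.append(nxt)
    return None


def classify_path(adj, src, dst, path):
    """Label a proposed path as 'correct', 'suboptimal', or 'hallucinatory'."""
    # Feasible: starts at src, ends at dst, and every step uses a real edge.
    feasible = (
        len(path) >= 1
        and path[0] == src
        and path[-1] == dst
        and all(b in adj.get(a, ()) for a, b in zip(path, path[1:]))
    )
    if not feasible:
        return "hallucinatory"
    optimum = shortest_distance(adj, src, dst)
    return "correct" if len(path) - 1 == optimum else "suboptimal"


# Toy undirected graph with edges A-B, B-C, A-C, C-D.
graph = {
    "A": {"B", "C"},
    "B": {"A", "C"},
    "C": {"A", "B", "D"},
    "D": {"C"},
}
labels = [
    classify_path(graph, "A", "D", ["A", "C", "D"]),       # optimal route
    classify_path(graph, "A", "D", ["A", "B", "C", "D"]),  # valid but longer
    classify_path(graph, "A", "D", ["A", "D"]),            # uses a missing edge
]
```

Separating feasibility from optimality is what lets an evaluator distinguish a model that found a valid but longer route from one that invented an edge.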

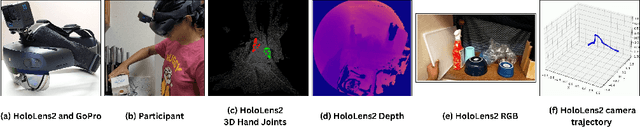

HO-Cap: A Capture System and Dataset for 3D Reconstruction and Pose Tracking of Hand-Object Interaction

Jun 10, 2024

Abstract:We introduce a data capture system and a new dataset named HO-Cap that can be used to study 3D reconstruction and pose tracking of hands and objects in videos. The capture system uses multiple RGB-D cameras and a HoloLens headset for data collection, avoiding the use of expensive 3D scanners or mocap systems. We propose a semi-automatic method to obtain annotations of shape and pose of hands and objects in the collected videos, which significantly reduces the required annotation time compared to manual labeling. With this system, we captured a video dataset of humans using objects to perform different tasks, as well as simple pick-and-place and handover of an object from one hand to the other, which can be used as human demonstrations for embodied AI and robot manipulation research. Our data capture setup and annotation framework can be used by the community to reconstruct 3D shapes of objects and human hands and track their poses in videos.

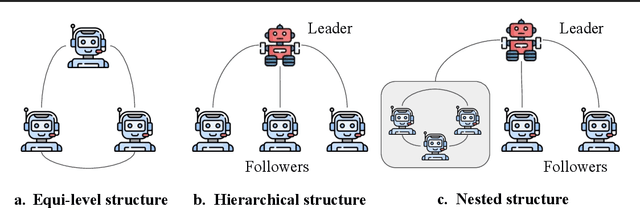

LLM Multi-Agent Systems: Challenges and Open Problems

Feb 05, 2024

Abstract:This paper explores existing works of multi-agent systems and identifies challenges that remain inadequately addressed. By leveraging the diverse capabilities and roles of individual agents within a multi-agent system, these systems can tackle complex tasks through collaboration. We discuss optimizing task allocation, fostering robust reasoning through iterative debates, managing complex and layered context information, and enhancing memory management to support the intricate interactions within multi-agent systems. We also explore the potential application of multi-agent systems in blockchain systems to shed light on their future development and application in real-world distributed systems.

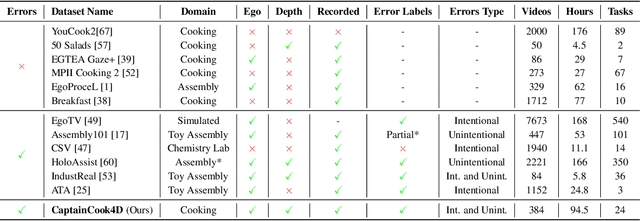

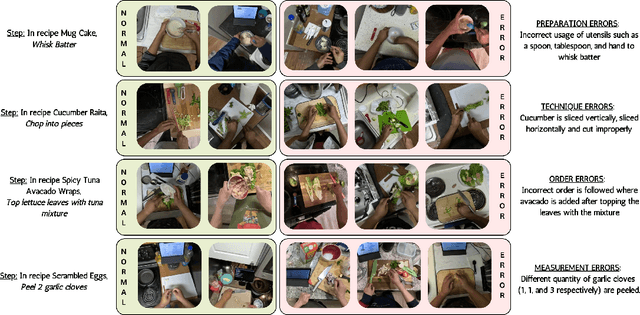

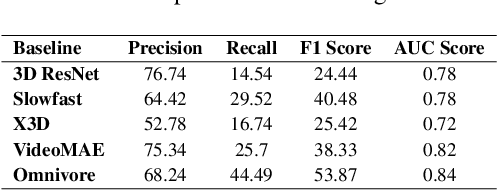

CaptainCook4D: A dataset for understanding errors in procedural activities

Dec 22, 2023

Abstract:Following step-by-step procedures is an essential component of various activities carried out by individuals in their daily lives. These procedures serve as a guiding framework that helps to achieve goals efficiently, whether it is assembling furniture or preparing a recipe. However, the complexity and duration of procedural activities inherently increase the likelihood of making errors. Understanding such procedural activities from a sequence of frames is a challenging task that demands an accurate interpretation of visual information and the ability to reason about the structure of the activity. To this end, we collect a new egocentric 4D dataset, CaptainCook4D, comprising 384 recordings (94.5 hours) of people performing recipes in real kitchen environments. This dataset consists of two distinct types of activity: one in which participants adhere to the provided recipe instructions and another in which they deviate and induce errors. We provide 5.3K step annotations and 10K fine-grained action annotations and benchmark the dataset for the following tasks: supervised error recognition, multistep localization, and procedure learning.

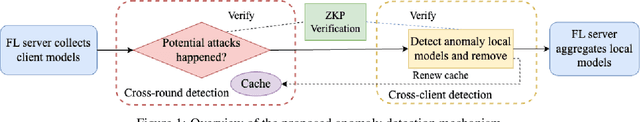

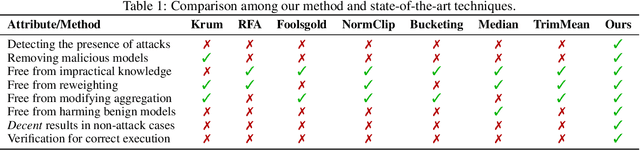

Kick Bad Guys Out! Zero-Knowledge-Proof-Based Anomaly Detection in Federated Learning

Oct 06, 2023

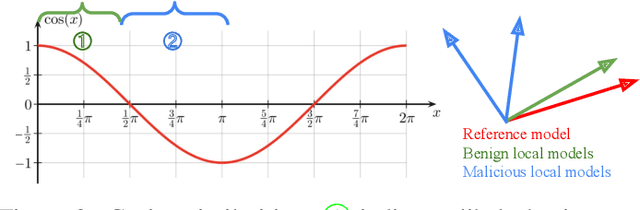

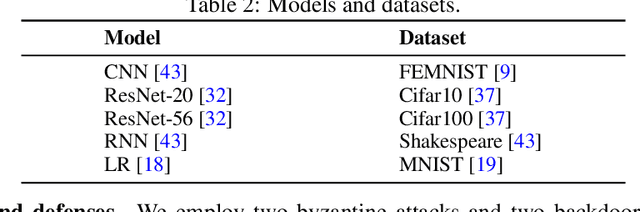

Abstract:Federated learning (FL) systems are vulnerable to malicious clients that submit poisoned local models to achieve their adversarial goals, such as preventing the convergence of the global model or inducing the global model to misclassify some data. Many existing defense mechanisms are impractical in real-world FL systems, as they require prior knowledge of the number of malicious clients or rely on re-weighting or modifying submissions. This is because adversaries typically do not announce their intentions before attacking, and re-weighting might change aggregation results even in the absence of attacks. To address these challenges in real FL systems, this paper introduces a cutting-edge anomaly detection approach with the following features: i) Detecting the occurrence of attacks and performing defense operations only when attacks happen; ii) Upon the occurrence of an attack, further detecting the malicious client models and eliminating them without harming the benign ones; iii) Ensuring honest execution of defense mechanisms at the server by leveraging a zero-knowledge proof mechanism. We validate the superior performance of the proposed approach with extensive experiments.

Automatic Assessment of Divergent Thinking in Chinese Language with TransDis: A Transformer-Based Language Model Approach

Jun 26, 2023

Abstract:Language models have been increasingly popular for automatic creativity assessment, generating semantic distances to objectively measure the quality of creative ideas. However, there is currently a lack of an automatic assessment system for evaluating creative ideas in the Chinese language. To address this gap, we developed TransDis, a scoring system using transformer-based language models, capable of providing valid originality (quality) and flexibility (variety) scores for Alternative Uses Task (AUT) responses in Chinese. Study 1 demonstrated that the latent model-rated originality factor, composed of three transformer-based models, strongly predicted human originality ratings, and the model-rated flexibility strongly correlated with human flexibility ratings as well. Criterion validity analyses indicated that model-rated originality and flexibility positively correlated with other creativity measures, demonstrating similar validity to human ratings. Studies 2 and 3 showed that TransDis effectively distinguished participants instructed to provide creative vs. common uses (Study 2) and participants instructed to generate ideas in a flexible vs. persistent way (Study 3). Our findings suggest that TransDis can be a reliable and low-cost tool for measuring idea originality and flexibility in the Chinese language, potentially paving the way for automatic creativity assessment in other languages. We offer an open platform to compute originality and flexibility for AUT responses in Chinese and over 50 other languages (https://osf.io/59jv2/).
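The idea of scoring creativity through semantic distance can be sketched as follows. This is an illustrative assumption about how such scores may be computed, not the TransDis implementation: it treats originality as the cosine distance between the embedding of the AUT object and the embedding of a response, and flexibility as the mean distance between consecutive responses. The two-dimensional vectors stand in for transformer embeddings and are made up for the example.

```python
import math


def cosine_distance(u, v):
    """1 minus the cosine similarity of two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / norm


def originality(object_vec, response_vec):
    """Assumed originality score: distance between the object and one response."""
    return cosine_distance(object_vec, response_vec)


def flexibility(response_vecs):
    """Assumed flexibility score: mean distance between consecutive responses."""
    steps = [cosine_distance(a, b) for a, b in zip(response_vecs, response_vecs[1:])]
    return sum(steps) / len(steps) if steps else 0.0


# Toy vectors standing in for embeddings of "brick" and two proposed uses.
brick = [1.0, 0.0]
common_use = [0.8, 0.6]    # semantically close to the object
creative_use = [0.0, 1.0]  # semantically far from the object

common_score = originality(brick, common_use)
creative_score = originality(brick, creative_use)
flex_score = flexibility([common_use, creative_use])
```

Under these assumptions, a response that is semantically farther from the object receives a higher originality score, and a participant who jumps between unrelated ideas receives a higher flexibility score.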
