Zhengyi Liu

R$^2$: A LLM Based Novel-to-Screenplay Generation Framework with Causal Plot Graphs

Mar 19, 2025

Abstract: Automatically adapting novels into screenplays is important for the TV, film, or opera industries, which can use it to promote products at low cost. The strong performance of large language models (LLMs) in long-text generation motivates an LLM-based framework, Reader-Rewriter (R$^2$), for this task. However, there are two fundamental challenges. First, LLM hallucinations may cause inconsistent plot extraction and screenplay generation. Second, the causality-embedded plot lines must be extracted effectively for coherent rewriting. Two corresponding tactics are therefore proposed: 1) a hallucination-aware refinement method (HAR) that iteratively discovers and eliminates the effects of hallucinations; and 2) a causal plot-graph construction method (CPC), based on a greedy cycle-breaking algorithm, that efficiently constructs plot lines with event causalities. Using these techniques, R$^2$ employs two modules to mimic the human screenplay rewriting process: the Reader module adopts a sliding window and CPC to build the causal plot graphs, while the Rewriter module first generates scene outlines from the graphs and then the screenplays. HAR is integrated into both modules for accurate LLM inference. Experimental results demonstrate the superiority of R$^2$, which substantially outperforms three existing approaches (51.3%, 22.6%, and 57.1% absolute increases) in overall win rate under pairwise comparison for GPT-4o.
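The abstract does not spell out the greedy cycle-breaking step, so the following is only a minimal sketch of one common reading: visit candidate causal edges from strongest to weakest and keep an edge only if it does not close a cycle. The function names, the `(cause, effect, strength)` tuple layout, and the strength scores are assumptions made for illustration, not the authors' implementation.

```python
from collections import defaultdict


def has_path(adj, src, dst):
    """Return True if dst is reachable from src by following kept edges."""
    stack, seen = [src], set()
    while stack:
        node = stack.pop()
        if node == dst:
            return True
        if node in seen:
            continue
        seen.add(node)
        stack.extend(adj[node])
    return False


def build_acyclic_plot_graph(edges):
    """Greedily keep the strongest causal edges that do not close a cycle.

    edges: iterable of (cause, effect, strength) tuples (hypothetical format).
    Returns the kept edges, ordered from strongest to weakest.
    """
    adj = defaultdict(list)
    kept = []
    for cause, effect, strength in sorted(edges, key=lambda e: -e[2]):
        # Adding cause -> effect closes a cycle iff effect already reaches cause.
        if cause == effect or has_path(adj, effect, cause):
            continue
        adj[cause].append(effect)
        kept.append((cause, effect, strength))
    return kept


# Toy events with made-up causal strengths.
candidate_edges = [
    ("A", "B", 0.9),
    ("B", "C", 0.8),
    ("C", "A", 0.3),  # weakest edge, would close the cycle A -> B -> C -> A
    ("A", "C", 0.5),
]
plot_graph = build_acyclic_plot_graph(candidate_edges)
```

In this toy input the weakest edge `C -> A` is the one dropped, so the remaining graph is a directed acyclic graph whose topological order could serve as a plot line.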

LFSamba: Marry SAM with Mamba for Light Field Salient Object Detection

Nov 11, 2024

Abstract: A light field camera can reconstruct 3D scenes using captured multi-focus images that contain rich spatial geometric information, enhancing applications in stereoscopic photography, virtual reality, and robotic vision. In this work, a state-of-the-art salient object detection model for multi-focus light field images, called LFSamba, is introduced to emphasize four main insights: (a) Efficient feature extraction, where SAM is used to extract modality-aware discriminative features; (b) Inter-slice relation modeling, leveraging Mamba to capture long-range dependencies across multiple focal slices, thus extracting implicit depth cues; (c) Inter-modal relation modeling, utilizing Mamba to integrate all-focus and multi-focus images, enabling mutual enhancement; (d) Weakly supervised learning capability, developing a scribble annotation dataset from an existing pixel-level mask dataset, establishing the first scribble-supervised baseline for light field salient object detection. https://github.com/liuzywen/LFScribble

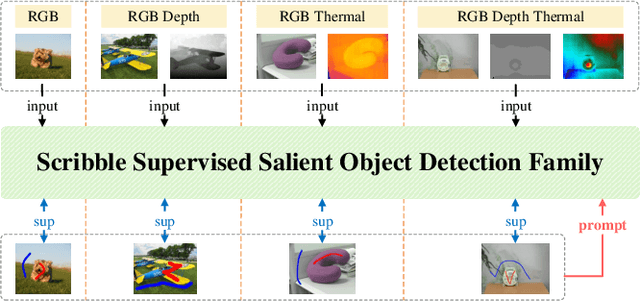

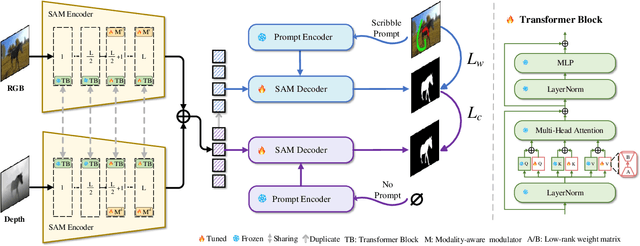

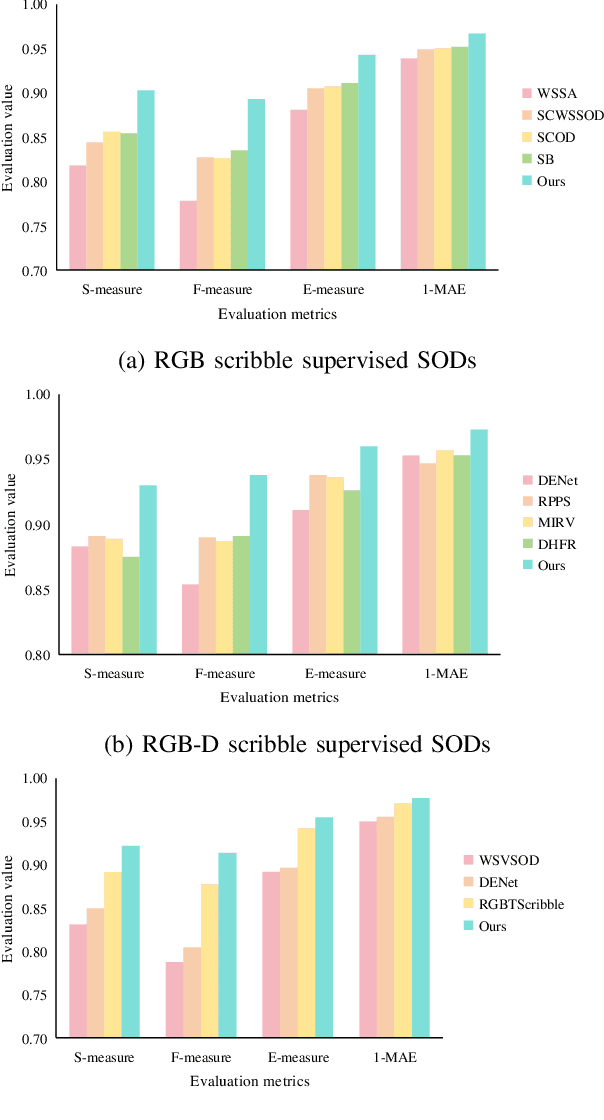

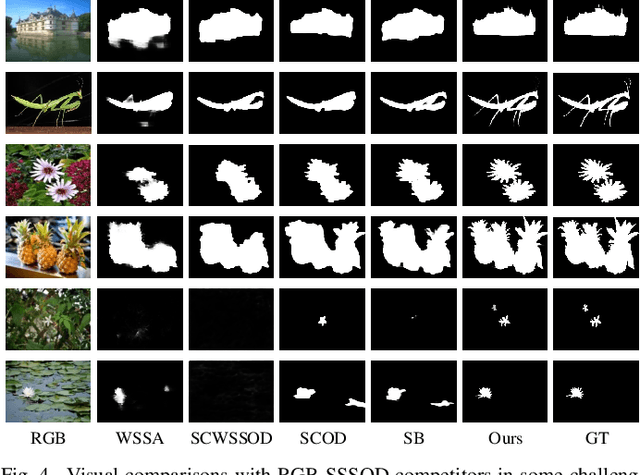

SSFam: Scribble Supervised Salient Object Detection Family

Sep 07, 2024

Abstract: Scribble supervised salient object detection (SSSOD) learns to segment attractive objects from their surroundings under the supervision of sparse scribble labels. For better segmentation, depth and thermal infrared modalities supplement RGB images in complex scenes. Existing methods design separate feature extraction and multi-modal fusion strategies for RGB, RGB-Depth, RGB-Thermal, and Visual-Depth-Thermal image input respectively, leading to a flood of similar models. As the recently proposed Segment Anything Model (SAM) possesses extraordinary segmentation and prompt interactive capability, we propose an SSSOD family based on SAM, named SSFam, for inputs that combine different modalities. Firstly, different modal-aware modulators are designed to attain modal-specific knowledge, which cooperates with modal-agnostic information extracted from the frozen SAM encoder for a better feature ensemble. Secondly, a siamese decoder is tailored to bridge the gap between training with scribble prompts and testing with no prompt, for stronger decoding ability. Our model achieves remarkable performance across combinations of different modalities, sets a new best among scribble supervised methods, and comes close to fully supervised methods. https://github.com/liuzywen/SSFam

Local Consensus Enhanced Siamese Network with Reciprocal Loss for Two-view Correspondence Learning

Aug 06, 2023

Abstract: Recent studies of two-view correspondence learning usually establish an end-to-end network to jointly predict correspondence reliability and relative pose. We improve such a framework from two aspects. First, we propose a Local Feature Consensus (LFC) plugin block to augment the features of existing models. Given a correspondence feature, the block augments its neighboring features with mutual neighborhood consensus and aggregates them to produce an enhanced feature. As inliers obey a uniform cross-view transformation and share more consistent learned features than outliers, feature consensus strengthens inlier correlation and suppresses outlier distraction, which makes output features more discriminative for classifying inliers/outliers. Second, existing approaches supervise network training with the ground truth correspondences and essential matrix projecting one image to the other for an input image pair, without considering the information from the reverse mapping. We extend existing models to a Siamese network with a reciprocal loss that exploits the supervision of mutual projection, which considerably promotes the matching performance without introducing additional model parameters. Building upon MSA-Net, we implement the two proposals and experimentally achieve state-of-the-art performance on benchmark datasets.

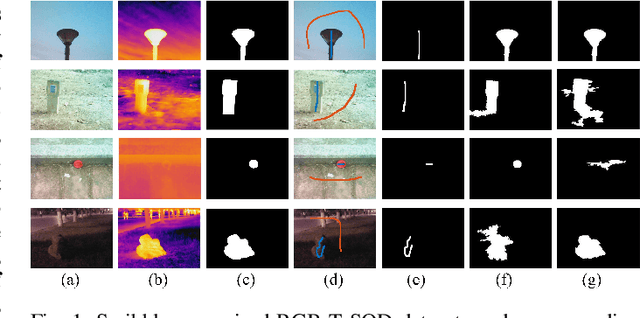

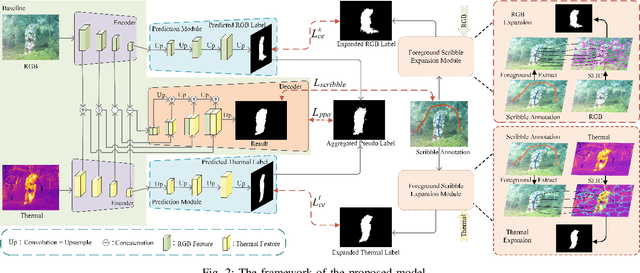

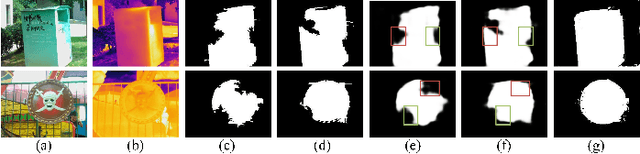

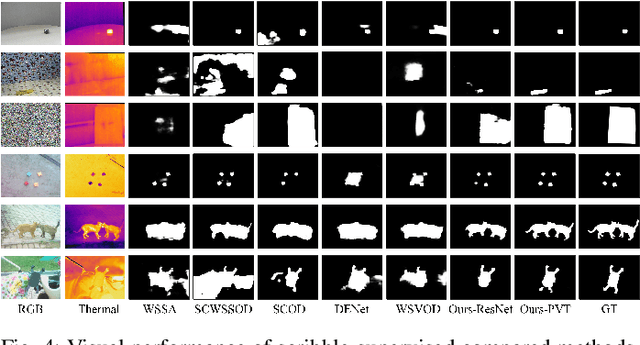

Scribble-Supervised RGB-T Salient Object Detection

Mar 17, 2023

Abstract: Salient object detection segments attractive objects in scenes. RGB and thermal modalities provide complementary information, and scribble annotations save large amounts of human labor. Based on these facts, we propose a scribble-supervised RGB-T salient object detection model. A four-step solution (expansion, prediction, aggregation, and supervision) addresses the label sparsity of scribble-supervised methods. To expand scribble annotations, we collect the superpixels that foreground scribbles pass through in RGB and thermal images, respectively. The expanded multi-modal labels provide the coarse object boundary. To further polish the expanded labels, we propose a prediction module to alleviate the sharpness of the boundary. To exploit the complementary roles of the two modalities, we combine the two into aggregated pseudo labels. Supervised by scribble annotations and pseudo labels, our model achieves state-of-the-art performance on the relabeled RGBT-S dataset. Furthermore, the model is applied to RGB-D and video scribble-supervised applications, achieving consistently excellent performance.
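The expansion step described above (collecting the superpixels that foreground scribbles pass through) can be sketched in a few lines. This is an illustrative sketch only: the nested-list image representation, the function name `expand_scribbles`, and the toy superpixel maps are assumptions, and the abstract does not say which superpixel algorithm the authors use or how the two modalities are later aggregated.

```python
def expand_scribbles(superpixels, scribble):
    """Mark every superpixel touched by a foreground scribble as foreground.

    superpixels: 2D list of superpixel ids, one id per pixel.
    scribble: 2D list of booleans, True on foreground scribble pixels.
    Returns a 2D list of booleans with the expanded foreground label.
    """
    # Ids of all superpixels that contain at least one scribble pixel.
    touched = {
        sp
        for sp_row, hit_row in zip(superpixels, scribble)
        for sp, hit in zip(sp_row, hit_row)
        if hit
    }
    return [[sp in touched for sp in row] for row in superpixels]


# Toy 2x3 image: the same scribble expanded on two hypothetical
# superpixel maps, one per modality.
scribble = [
    [True, False, False],
    [False, False, False],
]
rgb_superpixels = [
    [0, 0, 1],
    [2, 2, 1],
]
thermal_superpixels = [
    [0, 1, 1],
    [0, 1, 1],
]
expanded_rgb = expand_scribbles(rgb_superpixels, scribble)
expanded_thermal = expand_scribbles(thermal_superpixels, scribble)
```

Because the superpixel boundaries differ between RGB and thermal images, the same scribble expands to different regions in each modality, which is why the abstract treats the two expanded labels as complementary.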

RGB-T Multi-Modal Crowd Counting Based on Transformer

Jan 08, 2023

Abstract: Crowd counting aims to estimate the number of persons in a scene. Most state-of-the-art crowd counting methods based on color images do not work well in poor illumination, where objects become invisible. With the widespread use of infrared cameras, crowd counting based on color and thermal images has been studied. Existing methods perform multi-modal fusion without a count-objective constraint. To better exploit multi-modal information, we use count-guided multi-modal fusion and modal-guided count enhancement to achieve impressive performance. The proposed count-guided multi-modal fusion module utilizes a multi-scale token transformer to let the two modalities interact under the guidance of count information and to perceive different scales from the token perspective. The proposed modal-guided count enhancement module employs a multi-scale deformable transformer decoder structure to enhance one modality's feature and the count information with the other modality. Experiments on the public RGBT-CC dataset show that our method sets new state-of-the-art results. https://github.com/liuzywen/RGBTCC

HRTransNet: HRFormer-Driven Two-Modality Salient Object Detection

Jan 08, 2023

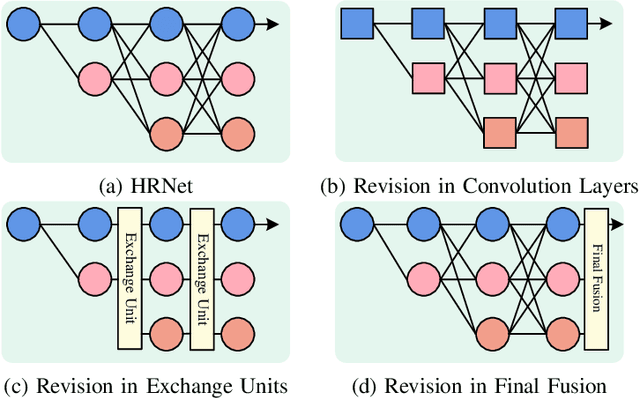

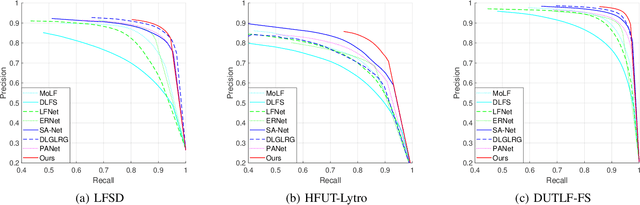

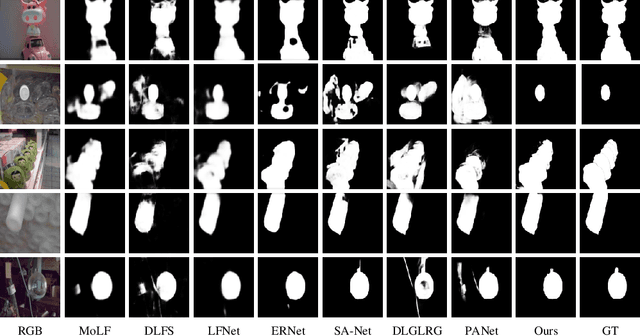

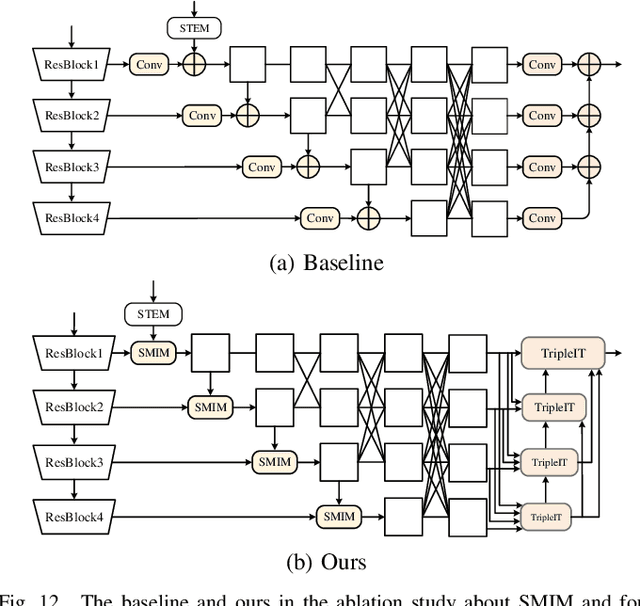

Abstract: The High-Resolution Transformer (HRFormer) can maintain high-resolution representation and share global receptive fields. It is well suited to salient object detection (SOD), in which the input and output have the same resolution. However, two critical problems need to be solved for two-modality SOD. One problem is two-modality fusion. The other is the fusion of HRFormer's outputs. To address the first problem, a supplementary modality is injected into the primary modality by using global optimization and an attention mechanism to select and purify the modality at the input level. To solve the second problem, a dual-direction short connection fusion module is used to optimize the output features of HRFormer, thereby enhancing the detailed representation of objects at the output level. The proposed model, named HRTransNet, first introduces an auxiliary stream for feature extraction of the supplementary modality. Then, features are injected into the primary modality at the beginning of each multi-resolution branch. Next, HRFormer is applied to perform forward propagation. Finally, all the output features with different resolutions are aggregated by intra-feature and inter-feature interactive transformers. The proposed model yields impressive improvements on two-modality SOD tasks, e.g., RGB-D, RGB-T, and light field SOD. https://github.com/liuzywen/HRTransNet

Boosting Camouflaged Object Detection with Dual-Task Interactive Transformer

May 21, 2022

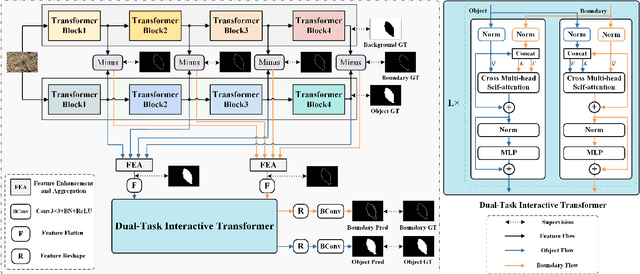

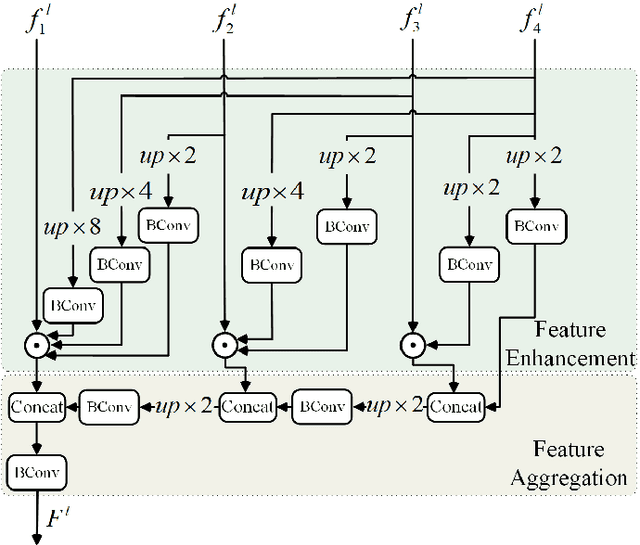

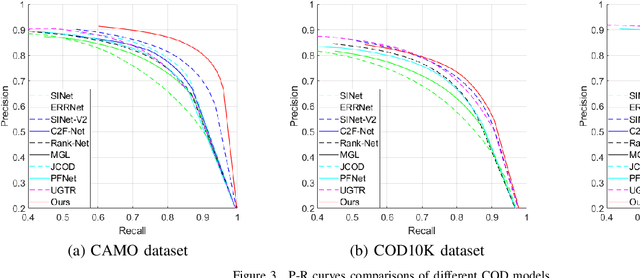

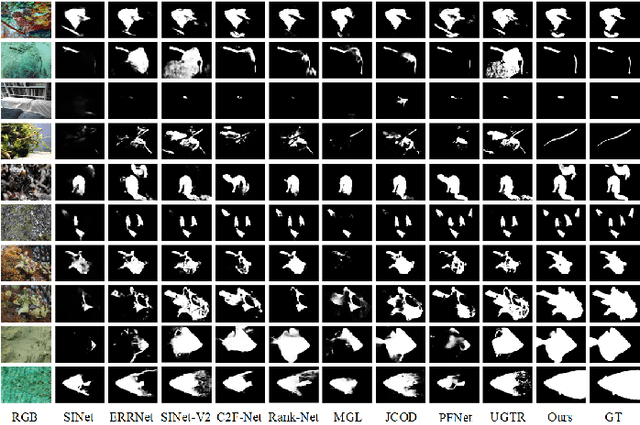

Abstract: Camouflaged object detection aims to discover concealed objects hidden in their surroundings. Existing methods follow the bio-inspired framework, which first locates the object and then refines the boundary. We argue that the discovery of camouflaged objects depends on a recurrent search for the object and the boundary. This recurrent processing tires humans out, but it is exactly where a transformer with global search ability excels. Therefore, a dual-task interactive transformer is proposed to detect both the accurate position of the camouflaged object and its detailed boundary. The boundary feature is used as the Query to improve camouflaged object detection, and meanwhile the object feature is used as the Query to improve boundary detection. The camouflaged object detection and the boundary detection interact fully through multi-head self-attention. Besides, to obtain the initial object feature and boundary feature, transformer-based backbones are adopted to extract the foreground and background. The foreground is simply the object, while foreground minus background is considered as the boundary. Here, the boundary feature can be obtained from the blurry boundary region of the foreground and background. Supervised by the object, the background, and the boundary ground truth, the proposed model achieves state-of-the-art performance on public datasets. https://github.com/liuzywen/COD

* Accepted by ICPR2022
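The dual-task interaction in the abstract above, where each task's feature serves as the Query against the other task's feature, can be illustrated with plain scaled dot-product attention. This is a simplified single-head sketch under stated assumptions: learned projection matrices, multiple heads, and residual connections are omitted, and the token values and the names `cross_attention`, `object_tokens`, and `boundary_tokens` are invented for illustration rather than taken from the authors' code.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query token mixes the value tokens."""
    scale = math.sqrt(len(keys[0]))
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        mixed = [
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ]
        out.append(mixed)
    return out


# Toy token features (made-up numbers): two object tokens, one boundary token.
object_tokens = [[1.0, 0.0], [0.0, 1.0]]
boundary_tokens = [[0.5, 0.5]]

# Boundary feature as Query over the object feature, and the reverse direction.
object_branch = cross_attention(boundary_tokens, object_tokens, object_tokens)
boundary_branch = cross_attention(object_tokens, boundary_tokens, boundary_tokens)
```

Running the attention in both directions is what makes the two tasks interact: each branch's output is a weighted mixture of the other task's tokens, selected by its own queries.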

SwinNet: Swin Transformer drives edge-aware RGB-D and RGB-T salient object detection

Apr 12, 2022

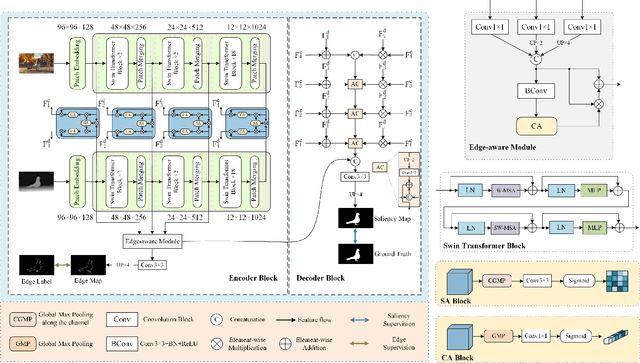

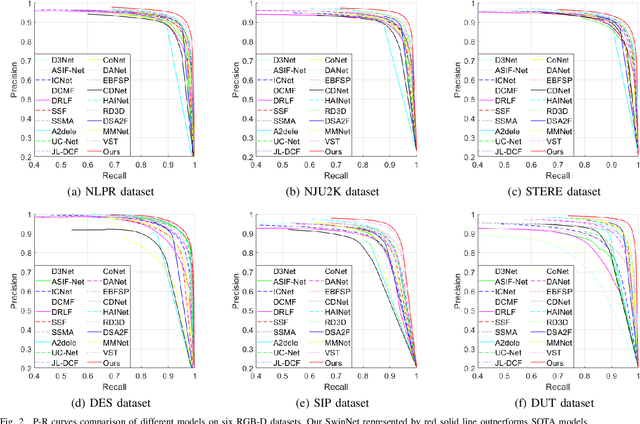

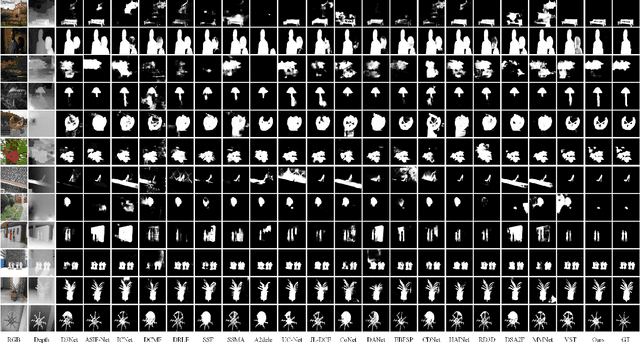

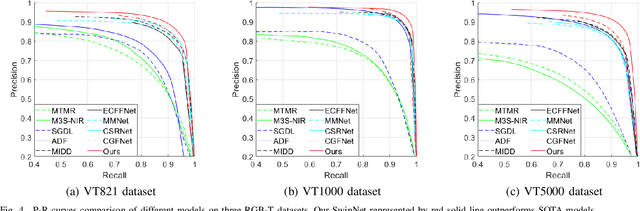

Abstract: Convolutional neural networks (CNNs) are good at extracting contextual features within certain receptive fields, while transformers can model global long-range dependency features. By combining the advantages of transformers and CNNs, Swin Transformer shows strong feature representation ability. Based on it, we propose a cross-modality fusion model, SwinNet, for RGB-D and RGB-T salient object detection. It is driven by Swin Transformer to extract hierarchical features, boosted by an attention mechanism to bridge the gap between the two modalities, and guided by edge information to sharpen the contour of the salient object. Specifically, a two-stream Swin Transformer encoder first extracts multi-modality features, and then a spatial alignment and channel re-calibration module is presented to optimize intra-level cross-modality features. To clarify the fuzzy boundary, an edge-guided decoder achieves inter-level cross-modality fusion under the guidance of edge features. The proposed model outperforms the state-of-the-art models on RGB-D and RGB-T datasets, showing that it provides more insight into the cross-modality complementarity task.

* Published online in TCSVT

TriTransNet: RGB-D Salient Object Detection with a Triplet Transformer Embedding Network

Aug 09, 2021

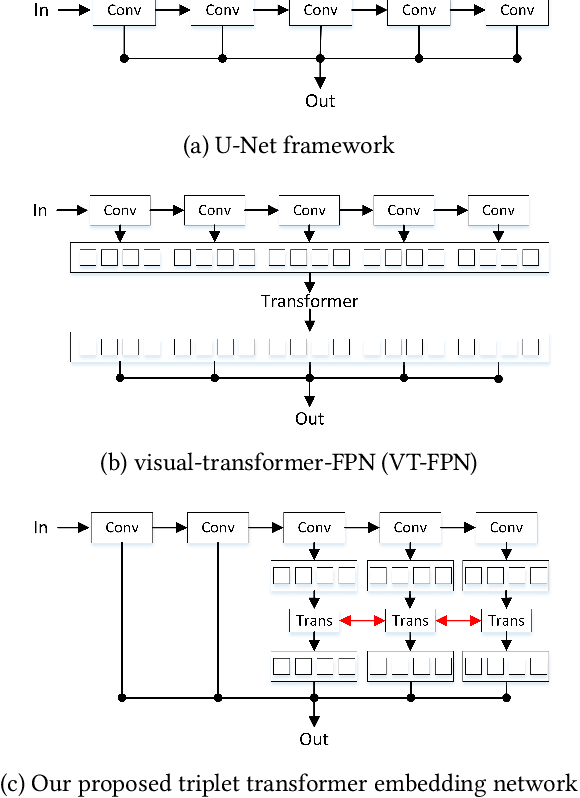

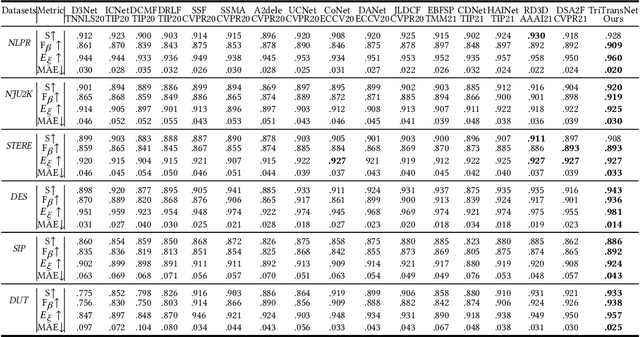

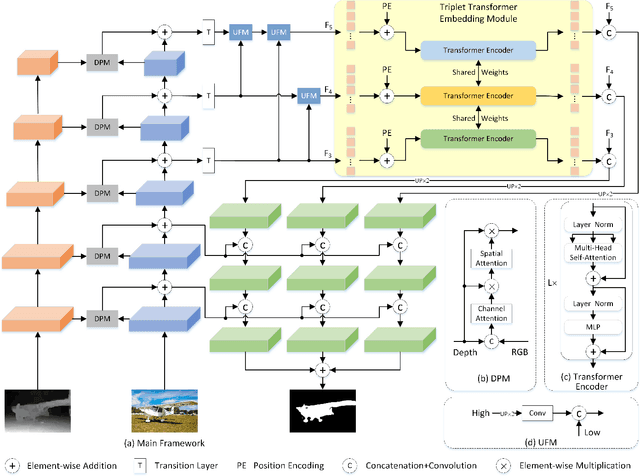

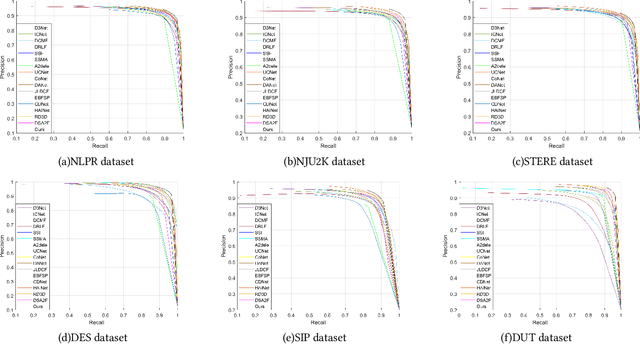

Abstract: Salient object detection is a pixel-level dense prediction task that highlights the prominent object in a scene. The U-Net framework has recently been widely used, and its successive convolution and pooling operations generate multi-level features that complement each other. Because high-level features contribute more to performance, we propose a triplet transformer embedding module to enhance them by learning long-range dependencies across layers. It is the first to use three transformer encoders with shared weights to enhance multi-level features. By further designing a scale adjustment module to process the input, devising a three-stream decoder to process the output, and attaching depth features to color features for multi-modal fusion, the proposed triplet transformer embedding network (TriTransNet) achieves state-of-the-art performance in RGB-D salient object detection and pushes the performance to a new level. Experimental results demonstrate the effectiveness of the proposed modules and the competitiveness of TriTransNet.
