Lilong Chai

Efficient Auto-Labeling of Large-Scale Poultry Datasets (ALPD) Using Semi-Supervised Models, Active Learning, and Prompt-then-Detect Approach

Jan 18, 2025

Abstract: The rapid growth of AI in poultry farming has highlighted the challenge of efficiently labeling large, diverse datasets. Manual annotation is time-consuming, making it impractical for modern systems that continuously generate data. This study explores semi-supervised auto-labeling methods, integrating active learning and a prompt-then-detect paradigm, to develop an efficient framework for auto-labeling large poultry datasets, aimed at advancing AI-driven behavior and health monitoring. Video data were collected from broilers and laying hens housed at the University of Arkansas and the University of Georgia. The collected videos were converted into images, pre-processed, augmented, and labeled. Various machine learning models, including zero-shot models like Grounding DINO, YOLO-World, and CLIP, and supervised models like YOLO and Faster-RCNN, were utilized for broiler, hen, and behavior detection. The results showed that YOLOv8s-World and YOLOv9s performed better on the compared performance metrics for broiler and hen detection under supervised learning, while among the semi-supervised models, YOLOv8s-ALPD achieved the highest precision (96.1%) and recall (99.0%) with an RMSE of 1.9. The hybrid YOLO-World model, incorporating the optimal YOLOv8s backbone, demonstrated the highest overall performance. It achieved a precision of 99.2%, recall of 99.4%, and an F1 score of 98.7% for breed detection, alongside a precision of 88.4%, recall of 83.1%, and an F1 score of 84.5% for individual behavior detection. Additionally, semi-supervised models showed significant improvements in behavior detection, achieving up to a 31% improvement in precision and 16% in F1 score. The semi-supervised models with minimal active learning reduced annotation time by over 80% compared to full manual labeling. Moreover, integrating zero-shot models enhanced detection and behavior identification.
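The abstract does not spell out how auto-labeling and active learning are combined, so the following is only a minimal sketch of one common pattern, not the authors' implementation. It assumes a detector has already produced per-image detections with confidence scores. Images whose detections all clear a threshold are kept as pseudo-labels, and the rest are queued for human review, least confident first. The names `Detection` and `route_images` and the 0.8 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # detector score in [0, 1]


def route_images(predictions, accept_threshold=0.8):
    """Split images into pseudo-labeled and human-review sets.

    predictions: dict mapping image id -> list of Detection.
    accept_threshold: hypothetical cut-off, not a value from the paper.
    """
    auto_labeled = {}
    review_queue = []
    for image_id, detections in predictions.items():
        confident = detections and all(
            d.confidence >= accept_threshold for d in detections
        )
        if confident:
            # Every detection is confident: accept as pseudo-labels.
            auto_labeled[image_id] = detections
        else:
            # Empty or uncertain images go to a human annotator.
            lowest = min((d.confidence for d in detections), default=0.0)
            review_queue.append((lowest, image_id))
    # Least confident images are reviewed first.
    review_queue.sort()
    return auto_labeled, [image_id for _, image_id in review_queue]


predictions = {
    "frame_001": [Detection("hen", 0.95), Detection("hen", 0.91)],
    "frame_002": [Detection("broiler", 0.97), Detection("broiler", 0.42)],
    "frame_003": [],
}
auto_labeled, to_review = route_images(predictions)
print(sorted(auto_labeled))
print(to_review)
```

In a loop of this kind, the reviewed images would be added to the labeled set and the detector retrained before the next round, so the human effort is spent only on the frames the model is least sure about.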

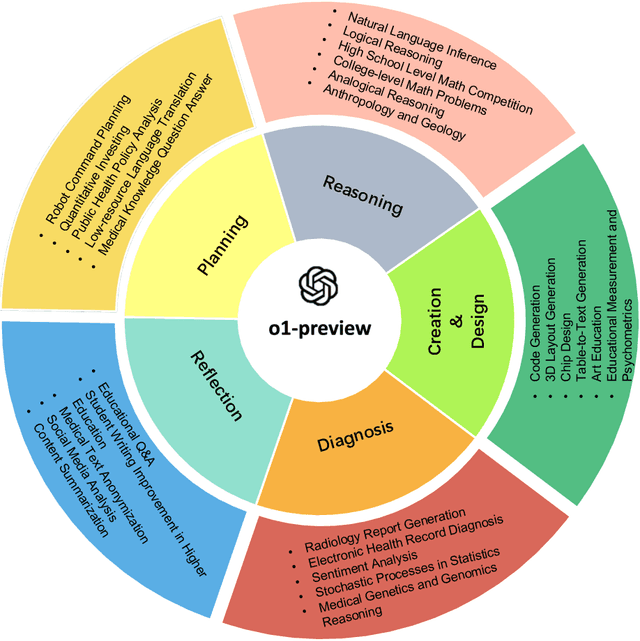

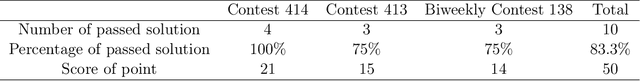

Evaluation of OpenAI o1: Opportunities and Challenges of AGI

Sep 27, 2024

Abstract: This comprehensive study evaluates the performance of OpenAI's o1-preview large language model across a diverse array of complex reasoning tasks, spanning multiple domains, including computer science, mathematics, natural sciences, medicine, linguistics, and social sciences. Through rigorous testing, o1-preview demonstrated remarkable capabilities, often achieving human-level or superior performance in areas ranging from coding challenges to scientific reasoning and from language processing to creative problem-solving. Key findings include:

- 83.3% success rate in solving complex competitive programming problems, surpassing many human experts.
- Superior ability in generating coherent and accurate radiology reports, outperforming other evaluated models.
- 100% accuracy in high school-level mathematical reasoning tasks, providing detailed step-by-step solutions.
- Advanced natural language inference capabilities across general and specialized domains like medicine.
- Impressive performance in chip design tasks, outperforming specialized models in areas such as EDA script generation and bug analysis.
- Remarkable proficiency in anthropology and geology, demonstrating deep understanding and reasoning in these specialized fields.
- Strong capabilities in quantitative investing. o1 has comprehensive financial knowledge and statistical modeling skills.
- Effective performance in social media analysis, including sentiment analysis and emotion recognition.

The model excelled particularly in tasks requiring intricate reasoning and knowledge integration across various fields. While some limitations were observed, including occasional errors on simpler problems and challenges with certain highly specialized concepts, the overall results indicate significant progress towards artificial general intelligence.

On the Promises and Challenges of Multimodal Foundation Models for Geographical, Environmental, Agricultural, and Urban Planning Applications

Dec 23, 2023

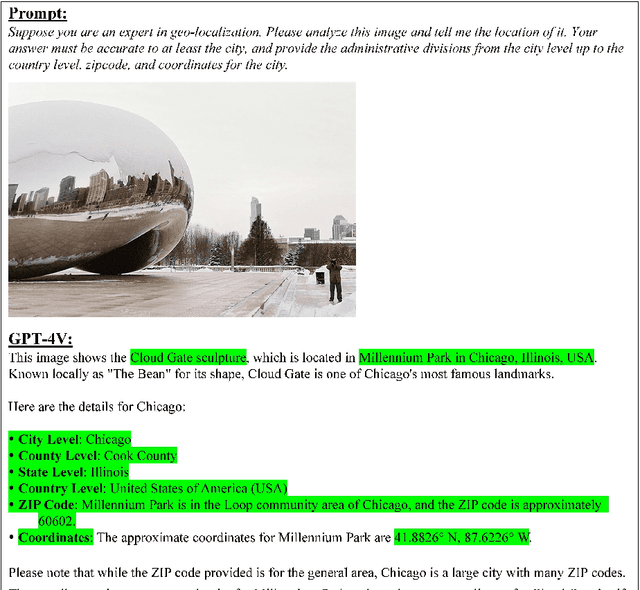

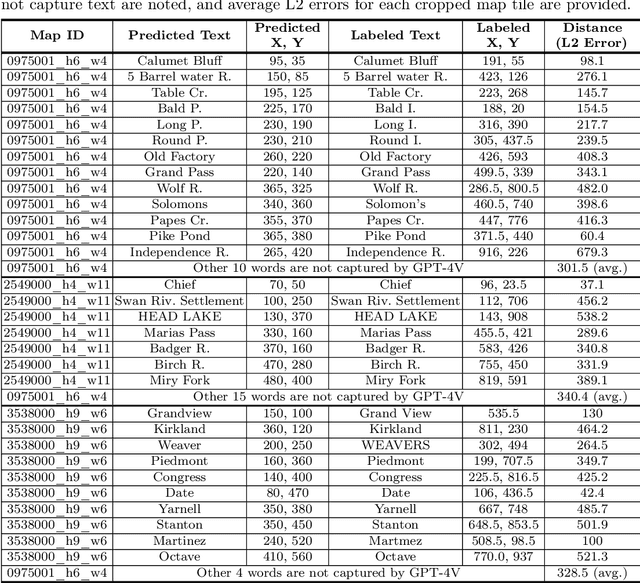

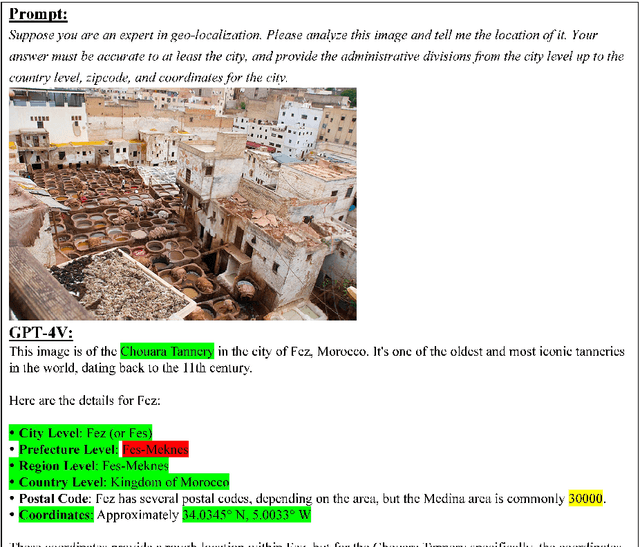

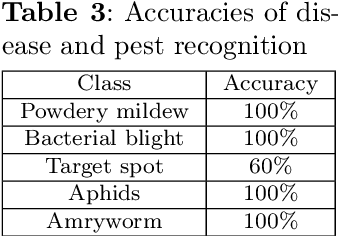

Abstract: The advent of large language models (LLMs) has heightened interest in their potential for multimodal applications that integrate language and vision. This paper explores the capabilities of GPT-4V in the realms of geography, environmental science, agriculture, and urban planning by evaluating its performance across a variety of tasks. Data sources comprise satellite imagery, aerial photos, ground-level images, field images, and public datasets. The model is evaluated on a series of tasks including geo-localization, textual data extraction from maps, remote sensing image classification, visual question answering, crop type identification, disease/pest/weed recognition, chicken behavior analysis, agricultural object counting, urban planning knowledge question answering, and plan generation. The results indicate the potential of GPT-4V in geo-localization, land cover classification, visual question answering, and basic image understanding. However, there are limitations in several tasks requiring fine-grained recognition and precise counting. While zero-shot learning shows promise, performance varies across problem domains and image complexities. The work provides novel insights into GPT-4V's capabilities and limitations for real-world geospatial, environmental, agricultural, and urban planning challenges. Further research should focus on augmenting the model's knowledge and reasoning for specialized domains through expanded training. Overall, the analysis demonstrates foundational multimodal intelligence, highlighting the potential of multimodal foundation models (FMs) to advance interdisciplinary applications at the nexus of computer vision and language.

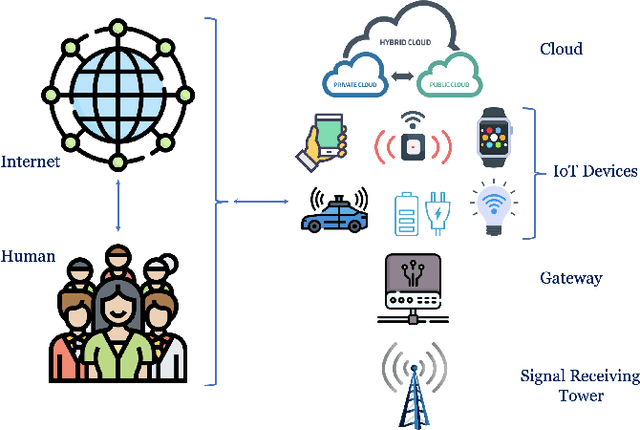

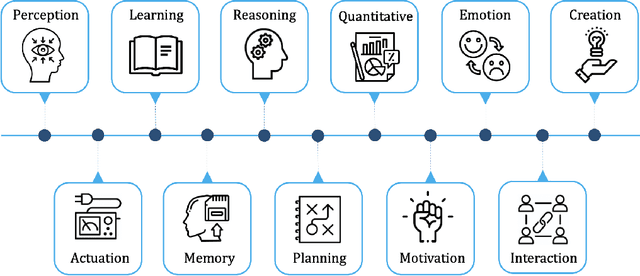

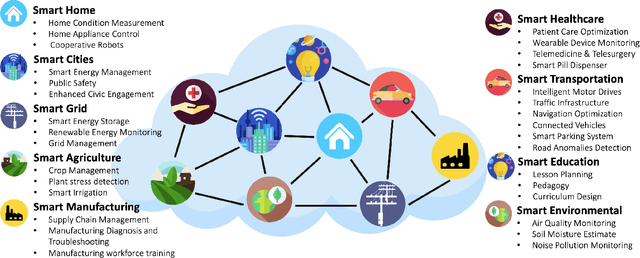

Towards Artificial General Intelligence (AGI) in the Internet of Things (IoT): Opportunities and Challenges

Sep 14, 2023

Abstract: Artificial General Intelligence (AGI), possessing the capacity to comprehend, learn, and execute tasks with human cognitive abilities, engenders significant anticipation and intrigue across scientific, commercial, and societal arenas. This fascination extends particularly to the Internet of Things (IoT), a landscape characterized by the interconnection of countless devices, sensors, and systems, collectively gathering and sharing data to enable intelligent decision-making and automation. This research embarks on an exploration of the opportunities and challenges towards achieving AGI in the context of the IoT. Specifically, it starts by outlining the fundamental principles of IoT and the critical role of Artificial Intelligence (AI) in IoT systems. Subsequently, it delves into AGI fundamentals, culminating in the formulation of a conceptual framework for AGI's seamless integration within IoT. The application spectrum for AGI-infused IoT is broad, encompassing domains ranging from smart grids, residential environments, manufacturing, and transportation to environmental monitoring, agriculture, healthcare, and education. However, adapting AGI to resource-constrained IoT settings necessitates dedicated research efforts. Furthermore, the paper addresses constraints imposed by limited computing resources, intricacies associated with large-scale IoT communication, as well as the critical concerns pertaining to security and privacy.

SAM for Poultry Science

May 17, 2023

Abstract: In recent years, the agricultural industry has witnessed significant advancements in artificial intelligence (AI), particularly with the development of large-scale foundational models. Among these foundation models, the Segment Anything Model (SAM), introduced by Meta AI Research, stands out as a groundbreaking solution for object segmentation tasks. While SAM has shown success in various agricultural applications, its potential in the poultry industry, specifically in the context of cage-free hens, remains relatively unexplored. This study aims to assess the zero-shot segmentation performance of SAM on representative chicken segmentation tasks, including part-based segmentation and the use of infrared thermal images, and to explore chicken-tracking tasks by using SAM as a segmentation tool. The results demonstrate SAM's superior performance compared to SegFormer and SETR in both whole and part-based chicken segmentation. SAM-based object tracking also provides valuable data on the behavior and movement patterns of broiler birds. The findings of this study contribute to a better understanding of SAM's potential in poultry science and lay the foundation for future advancements in chicken segmentation and tracking.

AGI for Agriculture

Apr 12, 2023

Abstract: Artificial General Intelligence (AGI) is poised to revolutionize a variety of sectors, including healthcare, finance, transportation, and education. Within healthcare, AGI is being utilized to analyze clinical medical notes, recognize patterns in patient data, and aid in patient management. Agriculture is another critical sector that impacts the lives of individuals worldwide. It serves as a foundation for providing food, fiber, and fuel, yet faces several challenges, such as climate change, soil degradation, water scarcity, and food security. AGI has the potential to tackle these issues by enhancing crop yields, reducing waste, and promoting sustainable farming practices. It can also help farmers make informed decisions by leveraging real-time data, leading to more efficient and effective farm management. This paper delves into the potential future applications of AGI in agriculture, such as agriculture image processing, natural language processing (NLP), robotics, knowledge graphs, and infrastructure, and their impact on precision livestock and precision crops. By leveraging the power of AGI, these emerging technologies can provide farmers with actionable insights, allowing for optimized decision-making and increased productivity. The transformative potential of AGI in agriculture is vast, and this paper aims to highlight its potential to revolutionize the industry.
