Jielu Zhang

TorchSpatial: A Location Encoding Framework and Benchmark for Spatial Representation Learning

Jun 21, 2024

Abstract:Spatial representation learning (SRL) aims at learning general-purpose neural network representations from various types of spatial data (e.g., points, polylines, polygons, networks, images, etc.) in their native formats. Learning good spatial representations is a fundamental problem for various downstream applications such as species distribution modeling, weather forecasting, trajectory generation, geographic question answering, etc. Even though SRL has become the foundation of almost all geospatial artificial intelligence (GeoAI) research, we have not yet seen significant efforts to develop an extensive deep learning framework and benchmark to support SRL model development and evaluation. To fill this gap, we propose TorchSpatial, a learning framework and benchmark for location (point) encoding, which is one of the most fundamental data types of spatial representation learning. TorchSpatial contains three key components: 1) a unified location encoding framework that consolidates 15 commonly recognized location encoders, ensuring scalability and reproducibility of the implementations; 2) the LocBench benchmark tasks encompassing 7 geo-aware image classification and 4 geo-aware image regression datasets; 3) a comprehensive suite of evaluation metrics to quantify geo-aware models' overall performance as well as their geographic bias, with a novel Geo-Bias Score metric. Finally, we provide a detailed analysis and insights into the model performance and geographic bias of different location encoders. We believe TorchSpatial will foster future advancement of spatial representation learning and spatial fairness in GeoAI research. The TorchSpatial model framework, LocBench, and Geo-Bias Score evaluation framework are available at https://github.com/seai-lab/TorchSpatial.

Img2Loc: Revisiting Image Geolocalization using Multi-modality Foundation Models and Image-based Retrieval-Augmented Generation

Mar 28, 2024

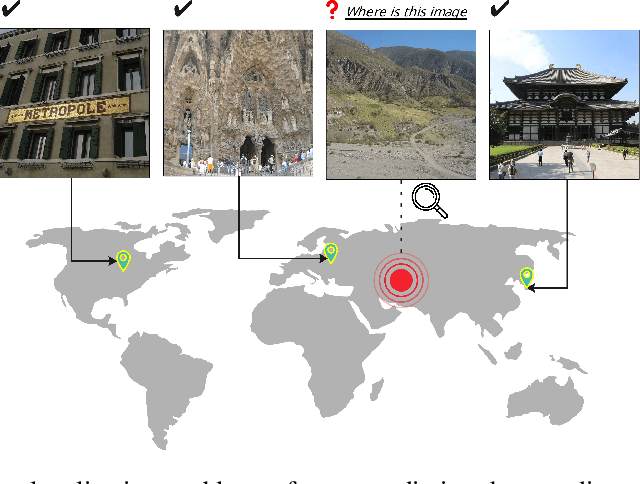

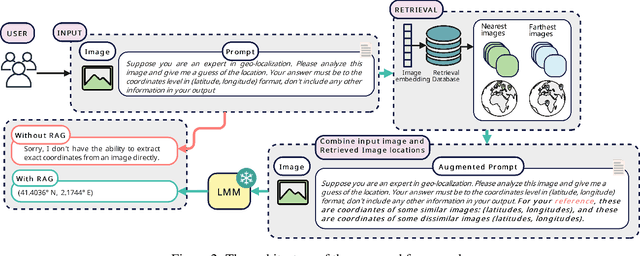

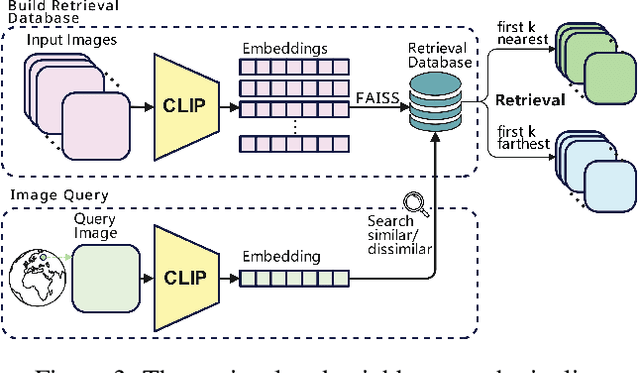

Abstract:Geolocating precise locations from images presents a challenging problem in computer vision and information retrieval. Traditional methods typically employ either classification, which divides the Earth's surface into grid cells and classifies images accordingly, or retrieval, which identifies locations by matching images with a database of image-location pairs. However, classification-based approaches are limited by the cell size and cannot yield precise predictions, while retrieval-based systems usually suffer from poor search quality and inadequate coverage of the global landscape at varied scales and aggregation levels. To overcome these drawbacks, we present Img2Loc, a novel system that redefines image geolocalization as a text generation task. This is achieved using cutting-edge large multi-modality models (LMMs) like GPT4V or LLaVA with retrieval-augmented generation. Img2Loc first employs CLIP-based representations to generate an image-based coordinate query database. It then uniquely combines query results with the images themselves, forming elaborate prompts customized for LMMs. When tested on benchmark datasets such as Im2GPS3k and YFCC4k, Img2Loc not only surpasses the performance of previous state-of-the-art models but does so without any model training.

On the Promises and Challenges of Multimodal Foundation Models for Geographical, Environmental, Agricultural, and Urban Planning Applications

Dec 23, 2023

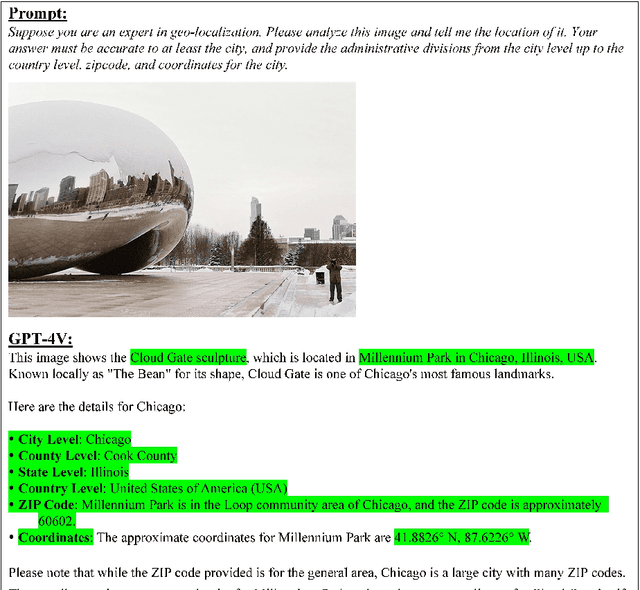

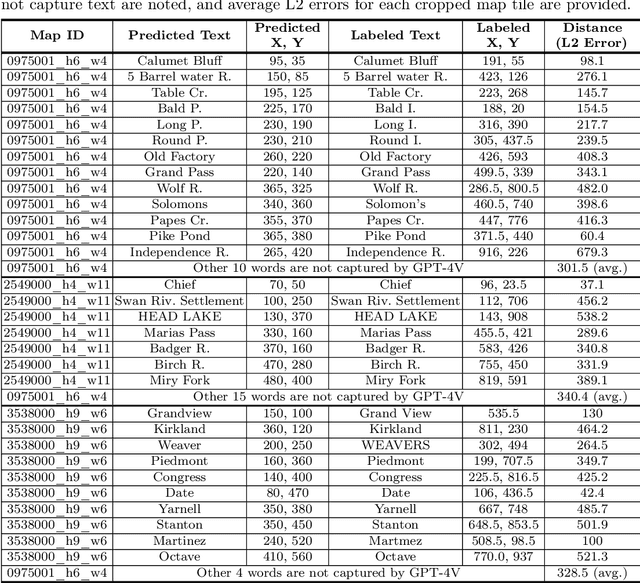

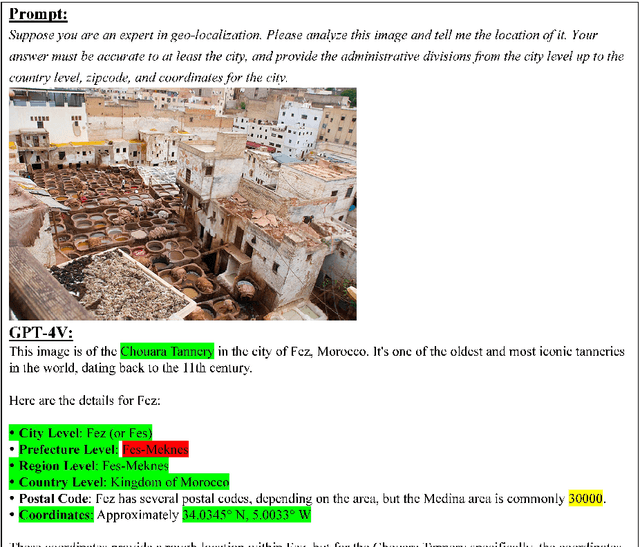

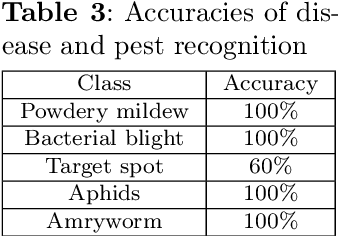

Abstract:The advent of large language models (LLMs) has heightened interest in their potential for multimodal applications that integrate language and vision. This paper explores the capabilities of GPT-4V in the realms of geography, environmental science, agriculture, and urban planning by evaluating its performance across a variety of tasks. Data sources comprise satellite imagery, aerial photos, ground-level images, field images, and public datasets. The model is evaluated on a series of tasks including geo-localization, textual data extraction from maps, remote sensing image classification, visual question answering, crop type identification, disease/pest/weed recognition, chicken behavior analysis, agricultural object counting, urban planning knowledge question answering, and plan generation. The results indicate the potential of GPT-4V in geo-localization, land cover classification, visual question answering, and basic image understanding. However, there are limitations in several tasks requiring fine-grained recognition and precise counting. While zero-shot learning shows promise, performance varies across problem domains and image complexities. The work provides novel insights into GPT-4V's capabilities and limitations for real-world geospatial, environmental, agricultural, and urban planning challenges. Further research should focus on augmenting the model's knowledge and reasoning for specialized domains through expanded training. Overall, the analysis demonstrates foundational multimodal intelligence, highlighting the potential of multimodal foundation models (FMs) to advance interdisciplinary applications at the nexus of computer vision and language.

XAI meets Biology: A Comprehensive Review of Explainable AI in Bioinformatics Applications

Dec 11, 2023

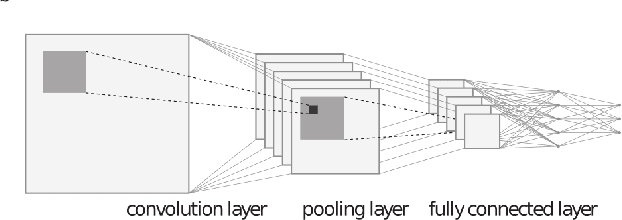

Abstract:Artificial intelligence (AI), particularly machine learning and deep learning models, has significantly impacted bioinformatics research by offering powerful tools for analyzing complex biological data. However, the lack of interpretability and transparency of these models presents challenges in leveraging these models for deeper biological insights and for generating testable hypotheses. Explainable AI (XAI) has emerged as a promising solution to enhance the transparency and interpretability of AI models in bioinformatics. This review provides a comprehensive analysis of various XAI techniques and their applications across various bioinformatics domains including DNA, RNA, and protein sequence analysis, structural analysis, gene expression and genome analysis, and bioimaging analysis. We introduce the most pertinent machine learning and XAI methods, then discuss their diverse applications and address the current limitations of available XAI tools. By offering insights into XAI's potential and challenges, this review aims to facilitate its practical implementation in bioinformatics research and help researchers navigate the landscape of XAI tools.

BadSAM: Exploring Security Vulnerabilities of SAM via Backdoor Attacks

May 05, 2023

Abstract:Recently, the Segment Anything Model (SAM) has gained significant attention as an image segmentation foundation model due to its strong performance on various downstream tasks. However, it has been found that SAM does not always perform satisfactorily when faced with challenging downstream tasks. This has led downstream users to demand a customized SAM model that can be adapted to these downstream tasks. In this paper, we present BadSAM, the first backdoor attack on the image segmentation foundation model. Our preliminary experiments on the CAMO dataset demonstrate the effectiveness of BadSAM.

Text2Seg: Remote Sensing Image Semantic Segmentation via Text-Guided Visual Foundation Models

Apr 20, 2023

Abstract:Recent advancements in foundation models (FMs), such as GPT-4 and LLaMA, have attracted significant attention due to their exceptional performance in zero-shot learning scenarios. Similarly, in the field of visual learning, models like Grounding DINO and the Segment Anything Model (SAM) have exhibited remarkable progress in open-set detection and instance segmentation tasks. It is undeniable that these FMs will profoundly impact a wide range of real-world visual learning tasks, ushering in a new paradigm shift for developing such models. In this study, we concentrate on the remote sensing domain, where the images are notably dissimilar from those in conventional scenarios. We developed a pipeline that leverages multiple FMs to facilitate remote sensing image semantic segmentation tasks guided by text prompt, which we denote as Text2Seg. The pipeline is benchmarked on several widely-used remote sensing datasets, and we present preliminary results to demonstrate its effectiveness. Through this work, we aim to provide insights into maximizing the applicability of visual FMs in specific contexts with minimal model tuning. The code is available at https://github.com/Douglas2Code/Text2Seg.
