Hongxu Ma

MS-DETR: Towards Effective Video Moment Retrieval and Highlight Detection by Joint Motion-Semantic Learning

Jul 16, 2025

Abstract:Video Moment Retrieval (MR) and Highlight Detection (HD) aim to pinpoint specific moments and assess clip-wise relevance based on the text query. While DETR-based joint frameworks have made significant strides, there remains untapped potential in harnessing the intricate relationships between temporal motion and spatial semantics within video content. In this paper, we propose the Motion-Semantics DETR (MS-DETR), a framework that captures rich motion-semantics features through unified learning for MR/HD tasks. The encoder first explicitly models disentangled intra-modal correlations within motion and semantics dimensions, guided by the given text queries. Subsequently, the decoder utilizes the task-wise correlation across temporal motion and spatial semantics dimensions to enable precise query-guided localization for MR and refined highlight boundary delineation for HD. Furthermore, we observe the inherent sparsity dilemma within the motion and semantics dimensions of MR/HD datasets. To address this issue, we enrich the corpus from both dimensions by generation strategies and propose contrastive denoising learning to ensure the above components learn robustly and effectively. Extensive experiments on four MR/HD benchmarks demonstrate that our method outperforms existing state-of-the-art models by a margin. Our code is available at https://github.com/snailma0229/MS-DETR.git.

Generative Regression Based Watch Time Prediction for Video Recommendation: Model and Performance

Dec 28, 2024

Abstract:Watch time prediction (WTP) has emerged as a pivotal task in short video recommendation systems, designed to encapsulate user interests. Predicting users' watch times on videos often encounters challenges, including wide value ranges and imbalanced data distributions, which can lead to significant bias when directly regressing watch time. Recent studies have tried to tackle these issues by converting the continuous watch time estimation into an ordinal classification task. While these methods are somewhat effective, they exhibit notable limitations. Inspired by language modeling, we propose a novel Generative Regression (GR) paradigm for WTP based on sequence generation. This approach employs structural discretization to enable the lossless reconstruction of original values while maintaining prediction fidelity. By formulating the prediction problem as a numerical-to-sequence mapping, and with meticulously designed vocabulary and label encodings, each watch time is transformed into a sequence of tokens. To expedite model training, we introduce curriculum learning with an embedding mixup strategy, which can mitigate the training-and-inference inconsistency associated with teacher forcing. We evaluate our method against state-of-the-art approaches on four public datasets and one industrial dataset. We also perform online A/B testing on Kuaishou, a leading video app with about 400 million DAUs, to demonstrate the real-world efficacy of our method. The results conclusively show that GR outperforms existing techniques significantly. Furthermore, we successfully apply GR to another regression task in recommendation systems, i.e., Lifetime Value (LTV) prediction, which highlights its potential as a novel and effective solution to general regression challenges.
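To make the numerical-to-sequence idea concrete, here is a minimal sketch of a lossless mapping between a watch time and a fixed-length token sequence. It is an illustration only, not the paper's scheme: the abstract does not specify the vocabulary or label encoding, so the base-10 digit vocabulary, the sequence length, the millisecond unit, and the function names `encode_watch_time` and `decode_watch_time` are all assumptions.

```python
from typing import List

# Hypothetical choices, not taken from the paper.
BASE = 10        # vocabulary size: digit tokens 0..9
NUM_TOKENS = 6   # fixed sequence length, covers 0..999999 ms


def encode_watch_time(watch_time_ms: int) -> List[int]:
    """Map an integer watch time (ms) to digit tokens, most significant first."""
    if not 0 <= watch_time_ms < BASE ** NUM_TOKENS:
        raise ValueError("watch time outside the representable range")
    tokens = []
    value = watch_time_ms
    for _ in range(NUM_TOKENS):
        tokens.append(value % BASE)  # peel off the least significant digit
        value //= BASE
    return tokens[::-1]


def decode_watch_time(tokens: List[int]) -> int:
    """Invert encode_watch_time exactly, so no information is lost."""
    value = 0
    for token in tokens:
        value = value * BASE + token
    return value


tokens = encode_watch_time(12345)
print(tokens)                     # [0, 1, 2, 3, 4, 5]
print(decode_watch_time(tokens))  # 12345
```

In a setup like this, a sequence model would predict the tokens one at a time, and decoding recovers the original value exactly, which is the property the abstract describes as lossless reconstruction.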

TorchSpatial: A Location Encoding Framework and Benchmark for Spatial Representation Learning

Jun 21, 2024

Abstract:Spatial representation learning (SRL) aims at learning general-purpose neural network representations from various types of spatial data (e.g., points, polylines, polygons, networks, images, etc.) in their native formats. Learning good spatial representations is a fundamental problem for various downstream applications such as species distribution modeling, weather forecasting, trajectory generation, geographic question answering, etc. Even though SRL has become the foundation of almost all geospatial artificial intelligence (GeoAI) research, we have not yet seen significant efforts to develop an extensive deep learning framework and benchmark to support SRL model development and evaluation. To fill this gap, we propose TorchSpatial, a learning framework and benchmark for location (point) encoding, which is one of the most fundamental data types of spatial representation learning. TorchSpatial contains three key components: 1) a unified location encoding framework that consolidates 15 commonly recognized location encoders, ensuring scalability and reproducibility of the implementations; 2) the LocBench benchmark tasks encompassing 7 geo-aware image classification and 4 geo-aware image regression datasets; 3) a comprehensive suite of evaluation metrics to quantify geo-aware models' overall performance as well as their geographic bias, with a novel Geo-Bias Score metric. Finally, we provide a detailed analysis and insights into the model performance and geographic bias of different location encoders. We believe TorchSpatial will foster future advancement of spatial representation learning and spatial fairness in GeoAI research. The TorchSpatial model framework, LocBench, and Geo-Bias Score evaluation framework are available at https://github.com/seai-lab/TorchSpatial.
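For readers unfamiliar with location encoding, the sketch below shows one generic way a point can be turned into a feature vector: multi-scale sine and cosine features of longitude and latitude. It does not use the TorchSpatial API and is not claimed to match any of its 15 encoders; the function name `sinusoidal_location_encoding`, the `num_scales` parameter, and the power-of-two frequencies are assumptions made for illustration.

```python
import math
from typing import List


def sinusoidal_location_encoding(lon_deg: float, lat_deg: float,
                                 num_scales: int = 4) -> List[float]:
    """Encode a (longitude, latitude) point as 4 * num_scales features.

    Hypothetical illustration: each scale k uses frequency 2**k and emits
    sin and cos of the scaled longitude and latitude (in radians).
    """
    lon = math.radians(lon_deg)
    lat = math.radians(lat_deg)
    features: List[float] = []
    for k in range(num_scales):
        freq = 2.0 ** k
        for angle in (lon, lat):
            features.append(math.sin(freq * angle))
            features.append(math.cos(freq * angle))
    return features


vec = sinusoidal_location_encoding(-122.4, 37.8)
print(len(vec))  # 16
```

A vector like this would typically be fed to a small neural network and combined with image features in a geo-aware classifier or regressor; low frequencies capture coarse position and high frequencies capture fine spatial detail.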

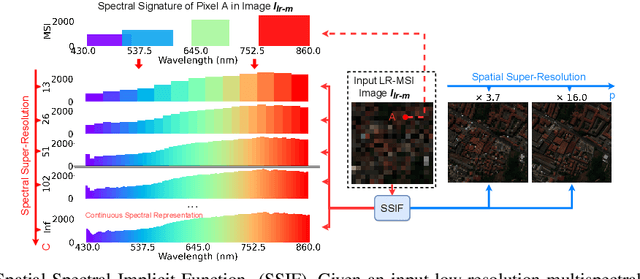

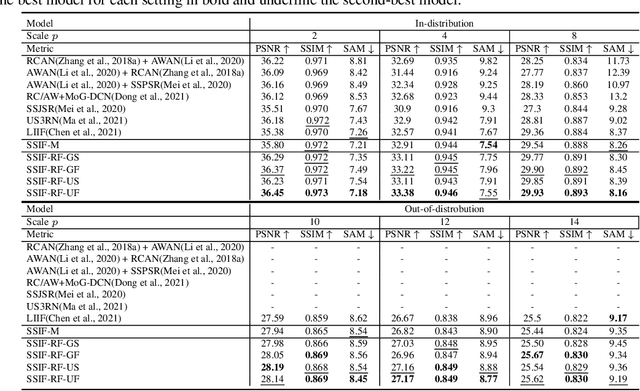

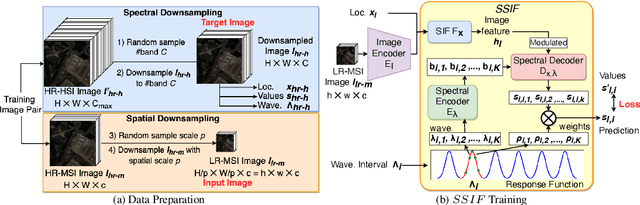

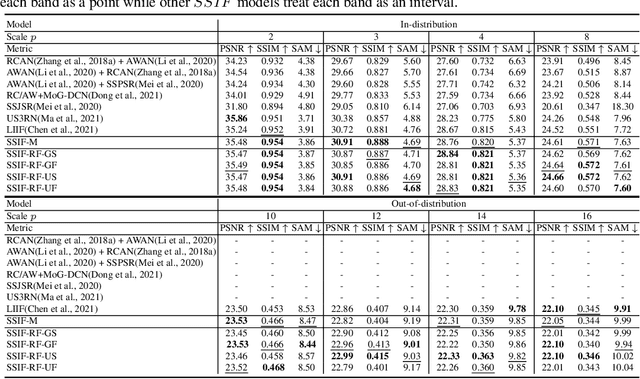

SSIF: Learning Continuous Image Representation for Spatial-Spectral Super-Resolution

Sep 30, 2023

Abstract:Existing digital sensors capture images at fixed spatial and spectral resolutions (e.g., RGB, multispectral, and hyperspectral images), and each combination requires bespoke machine learning models. Neural Implicit Functions partially overcome the spatial resolution challenge by representing an image in a resolution-independent way. However, they still operate at fixed, pre-defined spectral resolutions. To address this challenge, we propose Spatial-Spectral Implicit Function (SSIF), a neural implicit model that represents an image as a function of both continuous pixel coordinates in the spatial domain and continuous wavelengths in the spectral domain. We empirically demonstrate the effectiveness of SSIF on two challenging spatio-spectral super-resolution benchmarks. We observe that SSIF consistently outperforms state-of-the-art baselines even when the baselines are allowed to train separate models at each spectral resolution. We show that SSIF generalizes well to both unseen spatial resolutions and spectral resolutions. Moreover, SSIF can generate high-resolution images that improve the performance of downstream tasks (e.g., land use classification) by 1.7%-7%.

Learning fast and agile quadrupedal locomotion over complex terrain

Jul 02, 2022

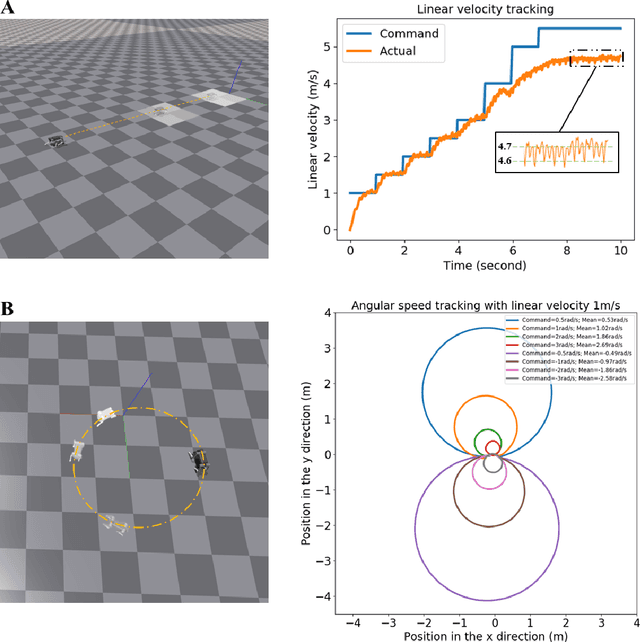

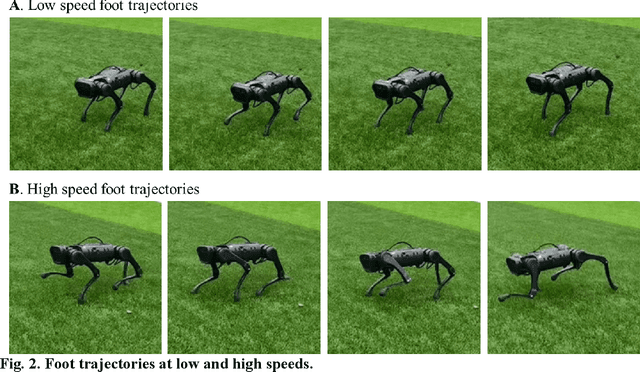

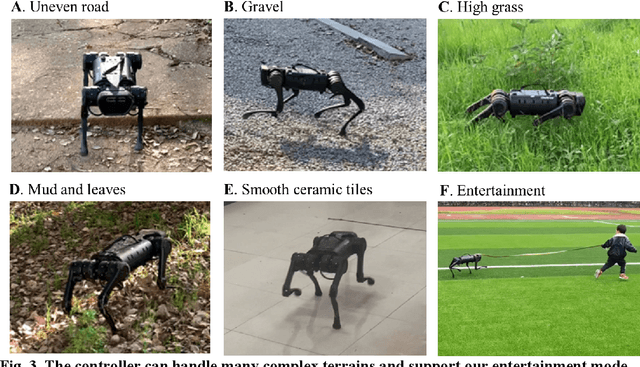

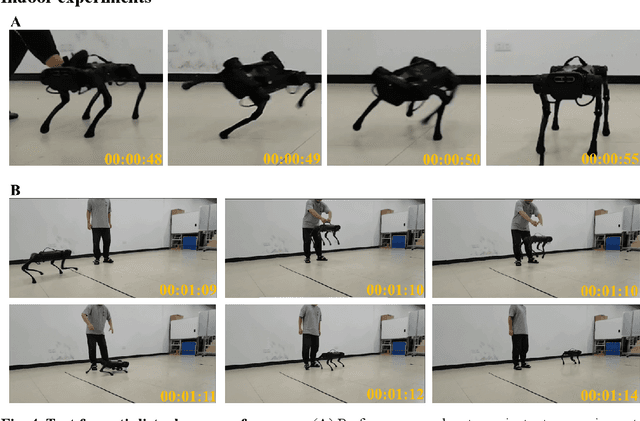

Abstract:In this paper, we propose a robust controller that achieves natural, stable, and fast locomotion on a real blind quadruped robot. With only proprioceptive information, the quadruped robot can move at a maximum speed of 10 times its body length, and has the ability to pass through various complex terrains. The controller is trained in the simulation environment by model-free reinforcement learning. The proposed loose neighborhood control architecture not only guarantees the learning rate, but also obtains an action network that is easy to transfer to a real quadruped robot. We find that data symmetry is lost during training, which leads to unbalanced performance of the learned controller on the left-right symmetric quadruped robot structure, and we propose a mirror-world neural network to solve this problem. The learned controller composed of the mirror-world network gives the robot excellent anti-disturbance ability. No specific human knowledge, such as a foot trajectory generator, is used in the training architecture. The learned controller can coordinate the robot's gait frequency and locomotion speed, and its locomotion pattern is more natural and reasonable than that of an artificially designed controller. Our controller also generalizes well, reaching locomotion speeds it has never learned and traversing terrains it has never seen before.
