Fei Dou

Achieving Fine-grained Cross-modal Understanding through Brain-inspired Hierarchical Representation Learning

Jan 04, 2026

Abstract:Understanding neural responses to visual stimuli remains challenging due to the inherent complexity of brain representations and the modality gap between neural data and visual inputs. Existing methods, mainly based on reducing neural decoding to generation tasks or simple correlations, fail to reflect the hierarchical and temporal processes of visual processing in the brain. To address these limitations, we present NeuroAlign, a novel framework for fine-grained fMRI-video alignment inspired by the hierarchical organization of the human visual system. Our framework implements a two-stage mechanism that mirrors biological visual pathways: global semantic understanding through Neural-Temporal Contrastive Learning (NTCL) and fine-grained pattern matching through enhanced vector quantization. NTCL explicitly models temporal dynamics through bidirectional prediction between modalities, while our DynaSyncMM-EMA approach enables dynamic multi-modal fusion with adaptive weighting. Experiments demonstrate that NeuroAlign significantly outperforms existing methods in cross-modal retrieval tasks, establishing a new paradigm for understanding visual cognitive mechanisms.

MARAuder's Map: Motion-Aware Real-time Activity Recognition with Layout-Based Trajectories

Nov 08, 2025

Abstract:Ambient sensor-based human activity recognition (HAR) in smart homes remains challenging due to the need for real-time inference, spatially grounded reasoning, and context-aware temporal modeling. Existing approaches often rely on pre-segmented, within-activity data and overlook the physical layout of the environment, limiting their robustness in continuous, real-world deployments. In this paper, we propose MARAuder's Map, a novel framework for real-time activity recognition from raw, unsegmented sensor streams. Our method projects sensor activations onto the physical floorplan to generate trajectory-aware, image-like sequences that capture the spatial flow of human movement. These representations are processed by a hybrid deep learning model that jointly captures spatial structure and temporal dependencies. To enhance temporal awareness, we introduce a learnable time embedding module that encodes contextual cues such as hour-of-day and day-of-week. Additionally, an attention-based encoder selectively focuses on informative segments within each observation window, enabling accurate recognition even under cross-activity transitions and temporal ambiguity. Extensive experiments on multiple real-world smart home datasets demonstrate that our method outperforms strong baselines, offering a practical solution for real-time HAR in ambient sensor environments.

ADLGen: Synthesizing Symbolic, Event-Triggered Sensor Sequences for Human Activity Modeling

May 23, 2025

Abstract:Real-world collection of Activities of Daily Living (ADL) data is challenging due to privacy concerns, costly deployment and labeling, and the inherent sparsity and imbalance of human behavior. We present ADLGen, a generative framework specifically designed to synthesize realistic, event-triggered, and symbolic sensor sequences for ambient assistive environments. ADLGen integrates a decoder-only Transformer with sign-based symbolic temporal encoding, and a context- and layout-aware sampling mechanism to guide generation toward semantically rich and physically plausible sensor event sequences. To enhance semantic fidelity and correct structural inconsistencies, we further incorporate a large language model into an automatic generate-evaluate-refine loop, which verifies logical, behavioral, and temporal coherence and generates correction rules without manual intervention or environment-specific tuning. Through comprehensive experiments with novel evaluation metrics, ADLGen is shown to outperform baseline generators in statistical fidelity, semantic richness, and downstream activity recognition, offering a scalable and privacy-preserving solution for ADL data synthesis.

HELENE: Hessian Layer-wise Clipping and Gradient Annealing for Accelerating Fine-tuning LLM with Zeroth-order Optimization

Nov 16, 2024

Abstract:Fine-tuning large language models (LLMs) poses significant memory challenges, as the back-propagation process demands extensive resources, especially with growing model sizes. Recent work, MeZO, addresses this issue using a zeroth-order (ZO) optimization method, which reduces memory consumption by matching the usage to the inference phase. However, MeZO experiences slow convergence due to varying curvatures across model parameters. To overcome this limitation, we introduce HELENE, a novel scalable and memory-efficient optimizer that integrates annealed A-GNB gradients with a diagonal Hessian estimation and layer-wise clipping, serving as a second-order pre-conditioner. This combination allows for faster and more stable convergence. Our theoretical analysis demonstrates that HELENE improves convergence rates, particularly for models with heterogeneous layer dimensions, by reducing the dependency on the total parameter space dimension. Instead, the method scales with the largest layer dimension, making it highly suitable for modern LLM architectures. Experimental results on RoBERTa-large and OPT-1.3B across multiple tasks show that HELENE achieves up to a 20x speedup compared to MeZO, with average accuracy improvements of 1.5%. Furthermore, HELENE remains compatible with both full parameter tuning and parameter-efficient fine-tuning (PEFT), outperforming several state-of-the-art optimizers. The code will be released after review.
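
For readers unfamiliar with zeroth-order optimization, the following is a minimal sketch of the generic two-point gradient estimate that ZO methods of this kind build on. It is not HELENE and not the MeZO implementation: the annealed A-GNB gradients, diagonal Hessian estimation, and layer-wise clipping from the abstract are all omitted, and the toy loss, the function names `zo_gradient` and `zo_sgd`, and the step sizes are illustrative assumptions. The estimate needs only two loss evaluations and no back-propagation; the toy loss has a 10x curvature gap between its two coordinates, the kind of heterogeneity the abstract names as a cause of slow convergence.

```python
import random


def loss(theta):
    # Hypothetical toy objective: a quadratic whose two coordinates
    # have very different curvature (1 vs. 10).
    return 0.5 * (1.0 * theta[0] ** 2 + 10.0 * theta[1] ** 2)


def zo_gradient(loss_fn, theta, eps, rng):
    # Two-point zeroth-order estimate along one random Gaussian direction z:
    #   g = (L(theta + eps*z) - L(theta - eps*z)) / (2*eps) * z
    # Only forward evaluations of the loss are needed.
    z = [rng.gauss(0.0, 1.0) for _ in theta]
    plus = [t + eps * zi for t, zi in zip(theta, z)]
    minus = [t - eps * zi for t, zi in zip(theta, z)]
    scale = (loss_fn(plus) - loss_fn(minus)) / (2.0 * eps)
    return [scale * zi for zi in z]


def zo_sgd(loss_fn, theta, lr=0.01, eps=1e-3, steps=2000, seed=0):
    # Plain SGD driven by the zeroth-order estimate (no preconditioning).
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        g = zo_gradient(loss_fn, theta, eps, rng)
        theta = [t - lr * gi for t, gi in zip(theta, g)]
    return theta


if __name__ == "__main__":
    start = [1.0, 1.0]
    end = zo_sgd(loss, start)
    print("initial loss:", loss(start))
    print("final loss:  ", loss(end))
```

In this sketch the single learning rate has to stay small enough for the stiff coordinate, which slows progress on the flat one; a curvature-aware preconditioner such as the one the abstract describes targets that mismatch.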

Towards Artificial General Intelligence (AGI) in the Internet of Things (IoT): Opportunities and Challenges

Sep 14, 2023

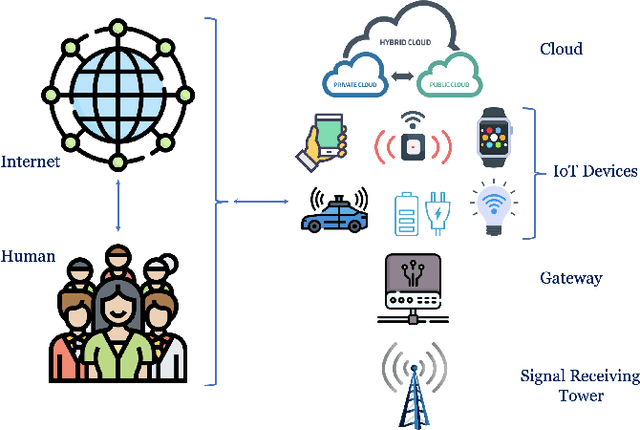

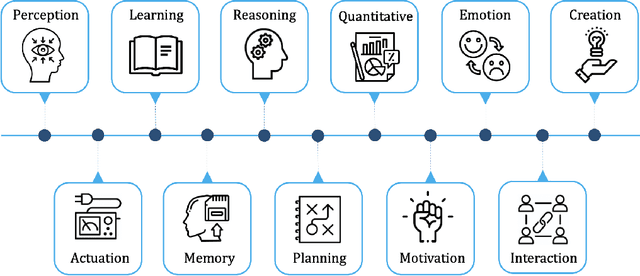

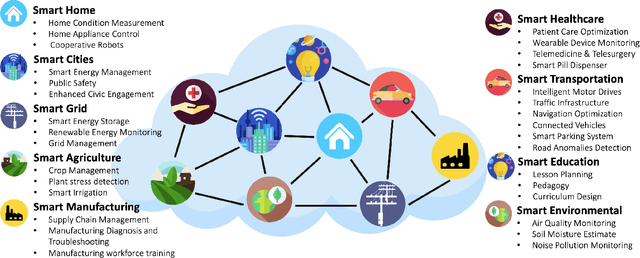

Abstract:Artificial General Intelligence (AGI), possessing the capacity to comprehend, learn, and execute tasks with human cognitive abilities, engenders significant anticipation and intrigue across scientific, commercial, and societal arenas. This fascination extends particularly to the Internet of Things (IoT), a landscape characterized by the interconnection of countless devices, sensors, and systems, collectively gathering and sharing data to enable intelligent decision-making and automation. This research embarks on an exploration of the opportunities and challenges towards achieving AGI in the context of the IoT. Specifically, it starts by outlining the fundamental principles of IoT and the critical role of Artificial Intelligence (AI) in IoT systems. Subsequently, it delves into AGI fundamentals, culminating in the formulation of a conceptual framework for AGI's seamless integration within IoT. The application spectrum for AGI-infused IoT is broad, encompassing domains ranging from smart grids, residential environments, manufacturing, and transportation to environmental monitoring, agriculture, healthcare, and education. However, adapting AGI to resource-constrained IoT settings necessitates dedicated research efforts. Furthermore, the paper addresses constraints imposed by limited computing resources, intricacies associated with large-scale IoT communication, as well as the critical concerns pertaining to security and privacy.

Mobilizing Personalized Federated Learning via Random Walk Stochastic ADMM

Apr 25, 2023

Abstract:In this research, we investigate the barriers associated with implementing Federated Learning (FL) in real-world scenarios, where a consistent connection between the central server and all clients cannot be maintained, and data distribution is heterogeneous. To address these challenges, we focus on mobilizing the federated setting, where the server moves between groups of adjacent clients to learn local models. Specifically, we propose a new algorithm, Random Walk Stochastic Alternating Direction Method of Multipliers (RWSADMM), capable of adapting to dynamic and ad-hoc network conditions as long as a sufficient number of connected clients are available for model training. In RWSADMM, the server walks randomly toward a group of clients. It formulates local proximity among adjacent clients based on hard inequality constraints instead of consensus updates to address data heterogeneity. Our proposed method is convergent, reduces communication costs, and enhances scalability by reducing the number of clients the central server needs to communicate with.
