John Leonard

3DGS-CD: 3D Gaussian Splatting-based Change Detection for Physical Object Rearrangement

Nov 06, 2024

Abstract:We present 3DGS-CD, the first 3D Gaussian Splatting (3DGS)-based method for detecting physical object rearrangements in 3D scenes. Our approach estimates 3D object-level changes by comparing two sets of unaligned images taken at different times. Leveraging 3DGS's novel view rendering and EfficientSAM's zero-shot segmentation capabilities, we detect 2D object-level changes, which are then associated and fused across views to estimate 3D changes. Our method can detect changes in cluttered environments using sparse post-change images within as little as 18s, using as few as a single new image. It does not rely on depth input, user instructions, object classes, or object models: an object is recognized simply if it has been rearranged. Our approach is evaluated on both public and self-collected real-world datasets, achieving up to 14% higher accuracy and three orders of magnitude faster performance compared to the state-of-the-art radiance-field-based change detection method. This significant performance boost enables a broad range of downstream applications, where we highlight three key use cases: object reconstruction, robot workspace reset, and 3DGS model update. Our code and data will be made available at https://github.com/520xyxyzq/3DGS-CD.

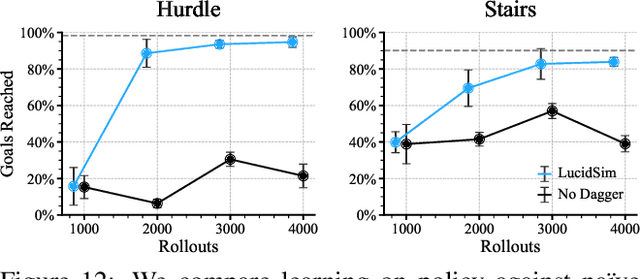

Learning Visual Parkour from Generated Images

Oct 31, 2024

Abstract:Fast and accurate physics simulation is an essential component of robot learning, where robots can explore failure scenarios that are difficult to produce in the real world and learn from unlimited on-policy data. Yet, it remains challenging to incorporate RGB-color perception into the sim-to-real pipeline that matches the real world in its richness and realism. In this work, we train a robot dog in simulation for visual parkour. We propose a way to use generative models to synthesize diverse and physically accurate image sequences of the scene from the robot's ego-centric perspective. We present demonstrations of zero-shot transfer to the RGB-only observations of the real world on a robot equipped with a low-cost, off-the-shelf color camera. Website: https://lucidsim.github.io

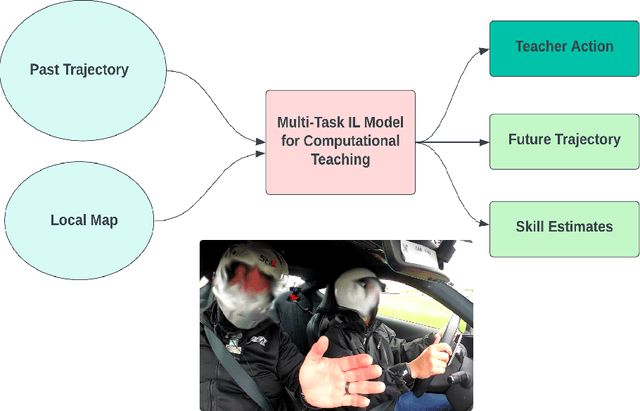

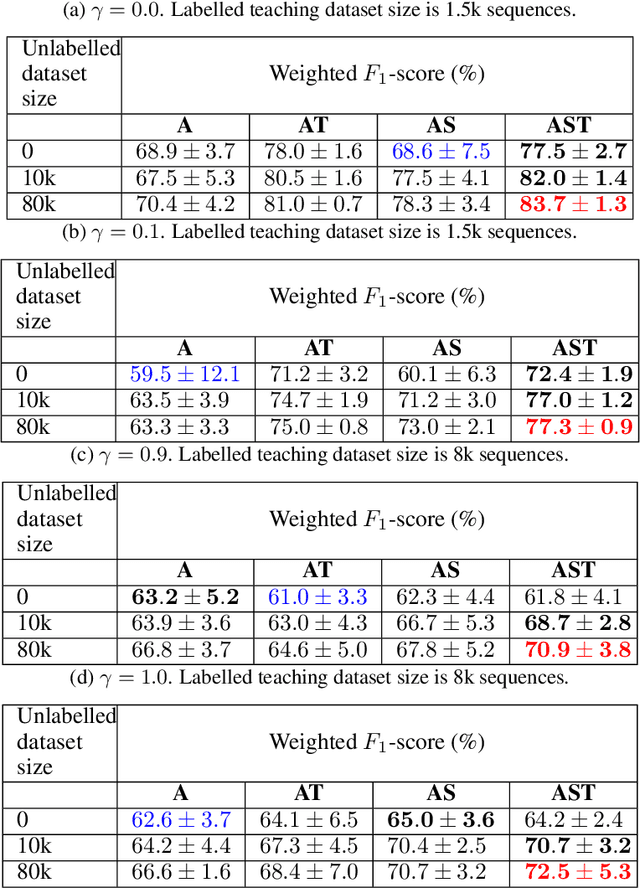

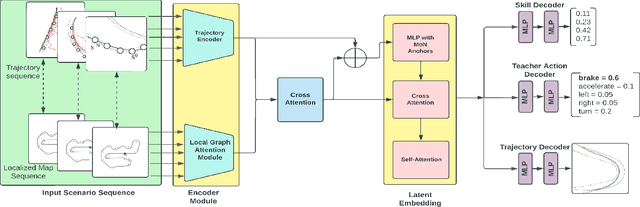

Computational Teaching for Driving via Multi-Task Imitation Learning

Oct 02, 2024

Abstract:Learning motor skills for sports or performance driving is often done with professional instruction from expert human teachers, whose availability is limited. Our goal is to enable automated teaching via a learned model that interacts with the student similar to a human teacher. However, training such automated teaching systems is limited by the availability of high-quality annotated datasets of expert teacher and student interactions that are difficult to collect at scale. To address this data scarcity problem, we propose an approach for training a coaching system for complex motor tasks such as high performance driving via a Multi-Task Imitation Learning (MTIL) paradigm. MTIL allows our model to learn robust representations by utilizing self-supervised training signals from more readily available non-interactive datasets of humans performing the task of interest. We validate our approach with (1) a semi-synthetic dataset created from real human driving trajectories, (2) a professional track driving instruction dataset, (3) a track-racing driving simulator human-subject study, and (4) a system demonstration on an instrumented car at a race track. Our experiments show that the right set of auxiliary machine learning tasks improves performance in predicting teaching instructions. Moreover, in the human subjects study, students exposed to the instructions from our teaching system improve their ability to stay within track limits, and show favorable perception of the model's interaction with them, in terms of usefulness and satisfaction.

MAC: Maximizing Algebraic Connectivity for Graph Sparsification

Mar 28, 2024

Abstract:Simultaneous localization and mapping (SLAM) is a critical capability in autonomous navigation, but memory and computational limits make long-term application of common SLAM techniques impractical; a robot must be able to determine what information should be retained and what can safely be forgotten. In graph-based SLAM, the number of edges (measurements) in a pose graph determines both the memory requirements of storing a robot's observations and the computational expense of algorithms deployed for performing state estimation using those observations, both of which can grow unbounded during long-term navigation. Motivated by these challenges, we propose a new general purpose approach to sparsify graphs in a manner that maximizes algebraic connectivity, a key spectral property of graphs which has been shown to control the estimation error of pose graph SLAM solutions. Our algorithm, MAC (for maximizing algebraic connectivity), is simple and computationally inexpensive, and admits formal post hoc performance guarantees on the quality of the solution that it provides. In application to the problem of pose-graph SLAM, we show on several benchmark datasets that our approach quickly produces high-quality sparsification results which retain the connectivity of the graph and, in turn, the quality of corresponding SLAM solutions.
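To make the quantity in the MAC abstract concrete: the algebraic connectivity of a graph is the second-smallest eigenvalue of its Laplacian. The sketch below computes it with NumPy and picks the best edge subset by brute-force enumeration on a toy graph. The function names, the unit edge weights, and the exhaustive search are assumptions made for illustration; this is not the MAC algorithm itself, which the abstract describes only as simple and computationally inexpensive.

```python
from itertools import combinations

import numpy as np


def graph_laplacian(num_nodes, edges):
    """Unweighted Laplacian L = D - A of an undirected edge list."""
    lap = np.zeros((num_nodes, num_nodes))
    for i, j in edges:
        lap[i, i] += 1.0
        lap[j, j] += 1.0
        lap[i, j] -= 1.0
        lap[j, i] -= 1.0
    return lap


def algebraic_connectivity(num_nodes, edges):
    """Second-smallest Laplacian eigenvalue (eigvalsh sorts ascending)."""
    eigenvalues = np.linalg.eigvalsh(graph_laplacian(num_nodes, edges))
    return float(eigenvalues[1])


def best_sparsification(num_nodes, edges, budget):
    """Brute force (illustration only): keep the `budget` edges that
    maximize algebraic connectivity. Exponential in the number of edges."""
    return max(
        combinations(edges, budget),
        key=lambda kept: algebraic_connectivity(num_nodes, kept),
    )


# Toy pose graph: a 4-cycle plus one chord; keep 4 of the 5 edges.
edges = [(0, 1), (1, 2), (2, 3), (3, 0), (0, 2)]
kept = best_sparsification(4, edges, budget=4)
print(kept, algebraic_connectivity(4, kept))
```

On this toy graph the search drops the chord and keeps the 4-cycle, since removing any cycle edge instead leaves a node attached by a single edge, which lowers the connectivity.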

GAME-UP: Game-Aware Mode Enumeration and Understanding for Trajectory Prediction

May 28, 2023

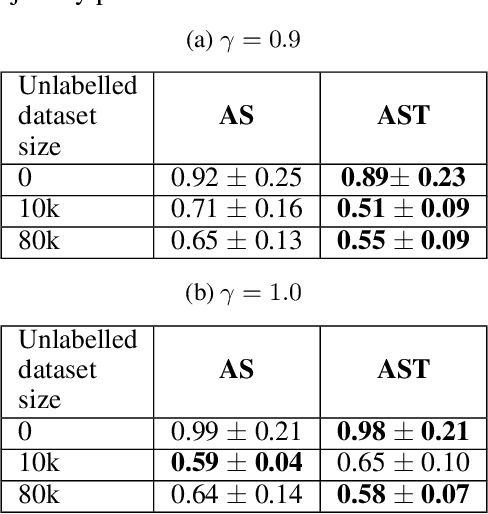

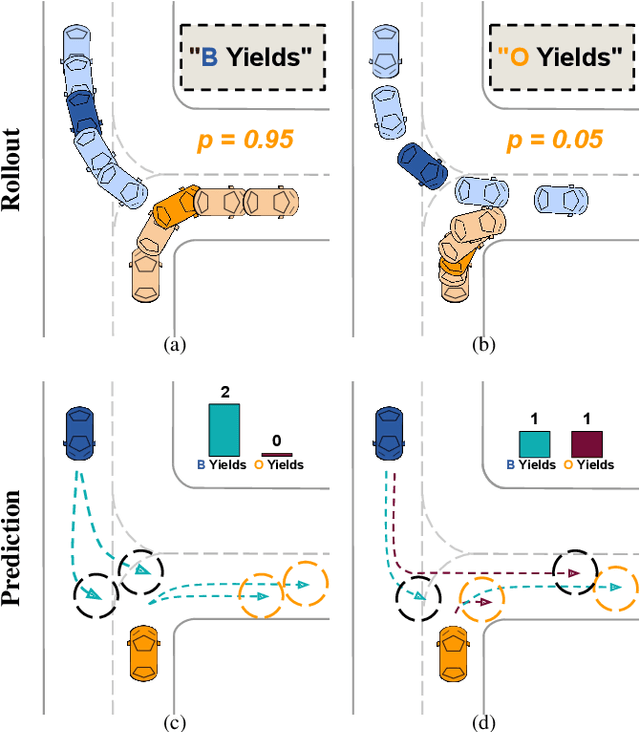

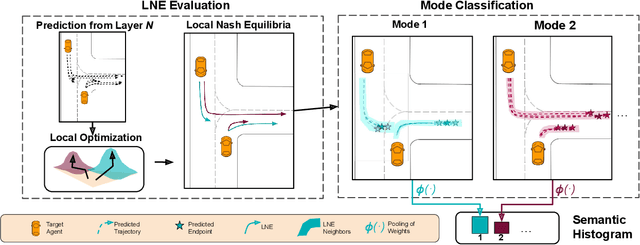

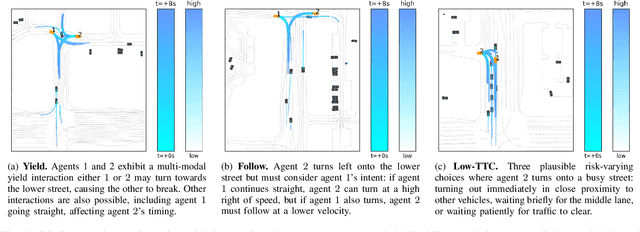

Abstract:Interactions between road agents present a significant challenge in trajectory prediction, especially in cases involving multiple agents. Because existing diversity-aware predictors do not account for the interactive nature of multi-agent predictions, they may miss these important interaction outcomes. In this paper, we propose GAME-UP, a framework for trajectory prediction that leverages game-theoretic inverse reinforcement learning to improve coverage of multi-modal predictions. We use a training-time game-theoretic numerical analysis as an auxiliary loss resulting in improved coverage and accuracy without presuming a taxonomy of actions for the agents. We demonstrate our approach on the interactive subset of Waymo Open Motion Dataset, including three subsets involving scenarios with high interaction complexity. Experiment results show that our predictor produces accurate predictions while covering twice as many possible interactions versus a baseline model.

SLAM-Supported Self-Training for 6D Object Pose Estimation

Mar 29, 2022

Abstract:Recent progress in learning-based object pose estimation paves the way for developing richer object-level world representations. However, the estimators, often trained with out-of-domain data, can suffer performance degradation as deployed in novel environments. To address the problem, we present a SLAM-supported self-training procedure to autonomously improve robot object pose estimation ability during navigation. Combining the network predictions with robot odometry, we can build a consistent object-level environment map via pose graph optimization (PGO). Exploiting the state estimates from PGO, we pseudo-label robot-collected RGB images to fine-tune the pose estimators. Unfortunately, it is difficult to quantify the uncertainty of the estimator predictions. The unmodeled data uncertainty used for PGO can result in low-quality object pose estimates. An automatic covariance tuning method is developed for robust PGO by allowing the measurement uncertainty models to change as part of the optimization process. The formulation permits a straightforward alternating minimization procedure that re-scales covariances analytically and component-wise, enabling more flexible noise modeling for learning-based measurements. We test our method with the deep object pose estimator (DOPE) on the YCB video dataset and in real-world robot experiments. The method can achieve significant performance gain in pose estimation, and in return facilitates the success of object SLAM.

MAAD: A Model and Dataset for "Attended Awareness" in Driving

Oct 16, 2021

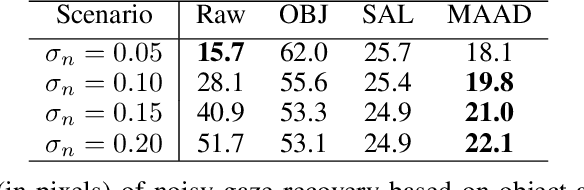

Abstract:We propose a computational model to estimate a person's attended awareness of their environment. We define attended awareness to be those parts of a potentially dynamic scene which a person has attended to in recent history and which they are still likely to be physically aware of. Our model takes as input scene information in the form of a video and noisy gaze estimates, and outputs visual saliency, a refined gaze estimate, and an estimate of the person's attended awareness. In order to test our model, we capture a new dataset with a high-precision gaze tracker including 24.5 hours of gaze sequences from 23 subjects attending to videos of driving scenes. The dataset also contains third-party annotations of the subjects' attended awareness based on observations of their scan path. Our results show that our model is able to reasonably estimate attended awareness in a controlled setting, and in the future could potentially be extended to real egocentric driving data to help enable more effective ahead-of-time warnings in safety systems and thereby augment driver performance. We also demonstrate our model's effectiveness on the tasks of saliency, gaze calibration, and denoising, using both our dataset and an existing saliency dataset. We make our model and dataset available at https://github.com/ToyotaResearchInstitute/att-aware/.

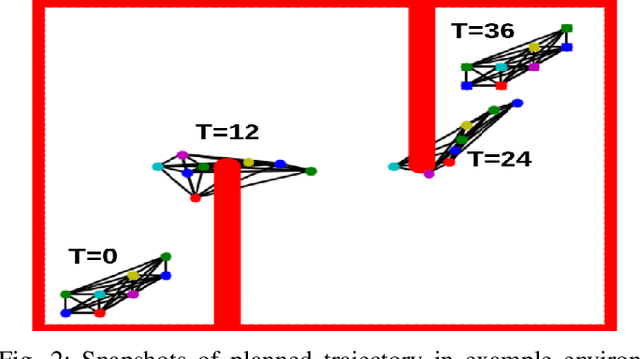

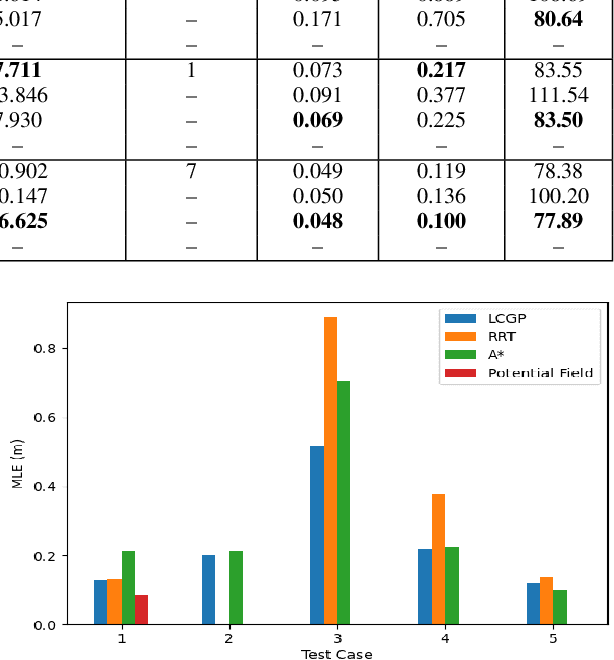

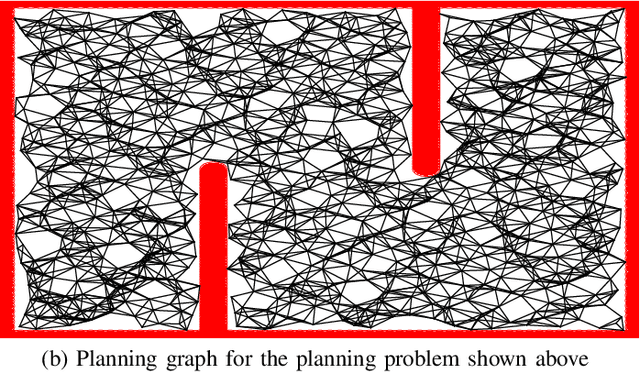

Prioritized Planning for Cooperative Range-Only Localization in Multi-Robot Networks

Sep 10, 2021

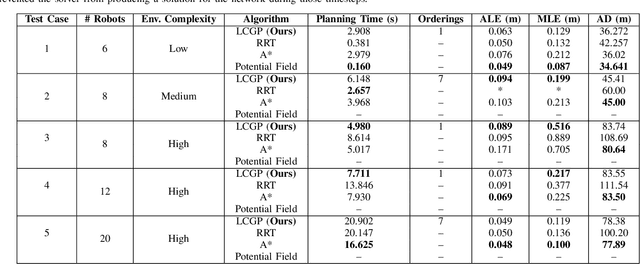

Abstract:We present a novel path-planning algorithm to reduce localization error for a network of robots cooperatively localizing via inter-robot range measurements. The quality of localization with range measurements depends on the configuration of the network, and poor configurations can cause substantial localization errors. To reduce the effect of network configuration on localization error for moving networks we consider various optimality measures of the Fisher information matrix (FIM), which have well-studied relationships with the localization error. In particular, we pose a trajectory planning problem with constraints on the FIM optimality measures. By constraining these optimality measures we can control the statistical properties of the localization error. To efficiently generate trajectories which satisfy these FIM constraints we present a prioritized planner which leverages graph-based planning and unique properties of the range-only FIM. We show results in simulated experiments that demonstrate the trajectories generated by our algorithm reduce worst-case localization error by up to 42% in comparison to existing planning approaches and can scalably plan distance-efficient trajectories in complicated environments for large numbers of robots.
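As a rough illustration of why network configuration matters for range-only localization, the sketch below builds the Fisher information matrix for a simplified case: one robot with unknown 2D position ranging to anchors at known positions, with independent Gaussian range noise. This single-target setup, the function names, and the choice of D- and E-optimality as the reported measures are assumptions for illustration; the paper's multi-robot formulation and planner are not reproduced here.

```python
import numpy as np


def range_only_fim(target, anchors, sigma=1.0):
    """FIM for the position of `target` given range measurements to known
    `anchors` with i.i.d. Gaussian noise of standard deviation `sigma`:
    sum over anchors of u u^T / sigma^2, where u is the unit bearing vector."""
    target = np.asarray(target, dtype=float)
    fim = np.zeros((target.size, target.size))
    for anchor in np.asarray(anchors, dtype=float):
        diff = target - anchor
        unit = diff / np.linalg.norm(diff)
        fim += np.outer(unit, unit) / sigma**2
    return fim


def optimality_measures(fim):
    """D-optimality (determinant) and E-optimality (smallest eigenvalue)."""
    eigenvalues = np.linalg.eigvalsh(fim)
    return {"D": float(np.prod(eigenvalues)), "E": float(eigenvalues[0])}


# Anchors at right angles around the target: well-conditioned geometry.
good = optimality_measures(range_only_fim([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))
# Anchors collinear with the target: no information across the line.
bad = optimality_measures(range_only_fim([0.0, 0.0], [[1.0, 0.0], [2.0, 0.0]]))
print(good, bad)
```

In the collinear case both measures collapse to zero, which is the kind of poor configuration that a constraint on an FIM optimality measure would rule out.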

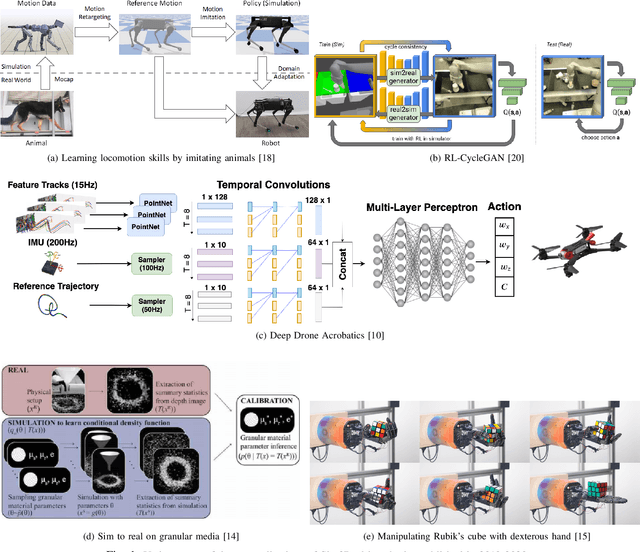

Perspectives on Sim2Real Transfer for Robotics: A Summary of the R:SS 2020 Workshop

Dec 07, 2020

Abstract:This report presents the debates, posters, and discussions of the Sim2Real workshop held in conjunction with the 2020 edition of the "Robotics: Science and Systems" conference. Twelve leaders of the field took competing debate positions on the definition, viability, and importance of transferring skills from simulation to the real world in the context of robotics problems. The debaters also joined a large panel discussion, answering audience questions and outlining the future of Sim2Real in robotics. Furthermore, we invited extended abstracts to this workshop which are summarized in this report. Based on the workshop, this report concludes with directions for practitioners exploiting this technology and for researchers further exploring open problems in this area.

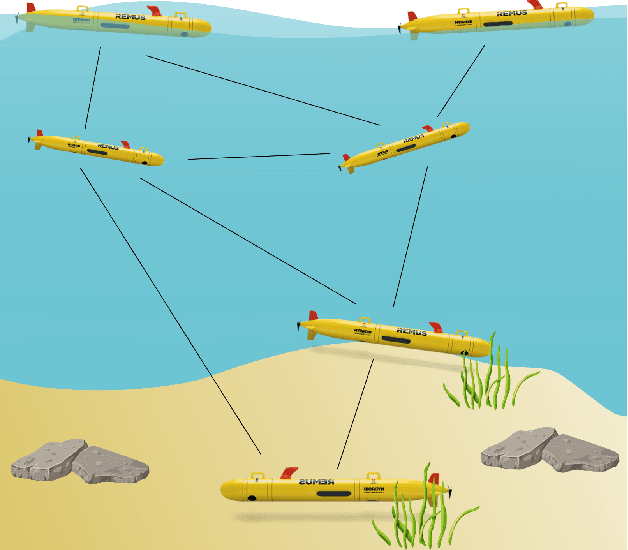

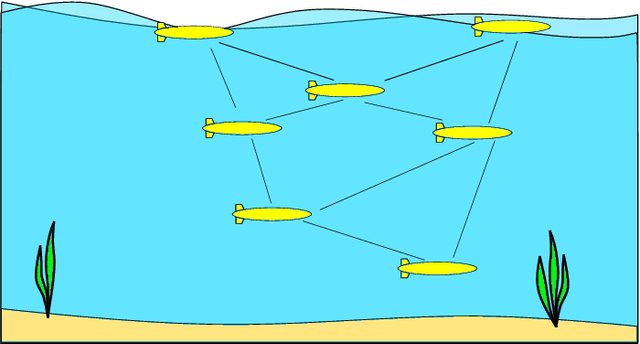

Network Localization Based Planning for Autonomous Underwater Vehicles with Inter-Vehicle Ranging

Oct 06, 2020

Abstract:Localization between a swarm of AUVs can be entirely estimated through the use of range measurements between neighboring AUVs via a class of techniques commonly referred to as sensor network localization. However, the localization quality depends on network topology, with degenerate topologies, referred to as low-rigidity configurations, leading to ambiguous or highly uncertain localization results. This paper presents tools for rigidity-based analysis, planning, and control of a multi-AUV network which account for sensor noise and limited sensing range. We evaluate our long-term planning framework in several two-dimensional simulated environments and show we are able to generate paths in feasible time and guarantee a minimum network rigidity over the full course of the paths.
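The notion of a low-rigidity configuration can be illustrated with the classical rigidity matrix of a range-measurement graph. The sketch below is a minimal example assuming noise-free 2D positions and a simple rank test for infinitesimal rigidity; the function names are hypothetical, and the paper's own tools, which account for sensor noise and limited sensing range, are not reproduced here.

```python
import numpy as np


def rigidity_matrix(points, edges):
    """One row per range edge (i, j): (p_i - p_j) in the columns of node i
    and -(p_i - p_j) in the columns of node j, zeros elsewhere."""
    pts = np.asarray(points, dtype=float)
    num_nodes, dim = pts.shape
    matrix = np.zeros((len(edges), num_nodes * dim))
    for row, (i, j) in enumerate(edges):
        diff = pts[i] - pts[j]
        matrix[row, i * dim:(i + 1) * dim] = diff
        matrix[row, j * dim:(j + 1) * dim] = -diff
    return matrix


def is_infinitesimally_rigid_2d(points, edges):
    """In 2D, a framework on n >= 2 nodes is infinitesimally rigid exactly
    when the rigidity matrix has rank 2n - 3."""
    rank = np.linalg.matrix_rank(rigidity_matrix(points, edges))
    return bool(rank == 2 * len(points) - 3)


# Four vehicles at the corners of a unit square.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
sides = [(0, 1), (1, 2), (2, 3), (3, 0)]
print(is_infinitesimally_rigid_2d(square, sides))             # sides only
print(is_infinitesimally_rigid_2d(square, sides + [(0, 2)]))  # plus a diagonal
```

With only the four side ranges the square can shear without changing any measured distance, so the positions are ambiguous; adding one diagonal range removes that freedom.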
