Jilin Hu

ST-EVO: Towards Generative Spatio-Temporal Evolution of Multi-Agent Communication Topologies

Feb 16, 2026

Abstract: LLM-powered Multi-Agent Systems (MAS) have emerged as an effective approach towards collaborative intelligence and have attracted wide research interest. Among them, "self-evolving" MAS, treated as a more flexible and powerful technical route, can construct task-adaptive workflows or communication topologies instead of relying on a predefined static structure template. Current self-evolving MAS mainly follow either a Spatial Evolving or a Temporal Evolving paradigm, each of which considers only a single dimension of evolution and does not fully incentivize LLMs' collaborative capability. In this work, we start from a novel Spatio-Temporal perspective by proposing ST-EVO, which supports dialogue-wise communication scheduling with a compact yet powerful flow-matching based Scheduler. To achieve precise Spatio-Temporal scheduling, ST-EVO can also perceive the uncertainty of MAS and possesses a self-feedback ability to learn from accumulated experience. Extensive experiments on nine benchmarks demonstrate the state-of-the-art performance of ST-EVO, achieving an accuracy improvement of about 5% to 25%.

MEMTS: Internalizing Domain Knowledge via Parameterized Memory for Retrieval-Free Domain Adaptation of Time Series Foundation Models

Feb 14, 2026

Abstract: While Time Series Foundation Models (TSFMs) have demonstrated exceptional performance in generalized forecasting, their performance often degrades significantly when deployed in real-world vertical domains characterized by temporal distribution shifts and domain-specific periodic structures. Current solutions are primarily constrained by two paradigms: Domain-Adaptive Pretraining (DAPT), which improves short-term domain fitting but frequently disrupts previously learned global temporal patterns due to catastrophic forgetting; and Retrieval-Augmented Generation (RAG), which incorporates external knowledge but introduces substantial retrieval overhead. This creates a severe scalability bottleneck that fails to meet the high-efficiency requirements of real-time stream processing. To break this impasse, we propose Memory for Time Series (MEMTS), a lightweight and plug-and-play method for retrieval-free domain adaptation in time series forecasting. The key component of MEMTS is a Knowledge Persistence Module (KPM), which internalizes domain-specific temporal dynamics, such as recurring seasonal patterns and trends, into a compact set of learnable latent prototypes. In doing so, it transforms fragmented historical observations into continuous, parameterized knowledge representations. This paradigm shift enables MEMTS to achieve accurate domain adaptation with constant-time inference and near-zero latency, while effectively mitigating catastrophic forgetting of general temporal patterns, all without requiring any architectural modifications to the frozen TSFM backbone. Extensive experiments on multiple datasets demonstrate the SOTA performance of MEMTS.

SEER: Transformer-based Robust Time Series Forecasting via Automated Patch Enhancement and Replacement

Jan 31, 2026

Abstract: Time series forecasting is important in many fields that require accurate predictions for decision-making. Patching techniques, commonly used and effective in time series modeling, help capture temporal dependencies by dividing the data into patches. However, existing patch-based methods fail to dynamically select patches and typically use all patches during the prediction process. In real-world time series, data collection often introduces quality issues, such as missing values, distribution shifts, anomalies and white noise, which may cause some patches to contain low-quality information and negatively impact the prediction results. To address this issue, this study proposes a robust time series forecasting framework called SEER. Firstly, we propose an Augmented Embedding Module, which improves patch-wise representations using a Mixture-of-Experts (MoE) architecture and obtains series-wise token representations through a channel-adaptive perception mechanism. Secondly, we introduce a Learnable Patch Replacement Module, which enhances forecasting robustness and model accuracy through a two-stage process: 1) a dynamic filtering mechanism eliminates negative patch-wise tokens; 2) a replaced attention module substitutes the identified low-quality patches with a global series-wise token, further refining their representations through a causal attention mechanism. Comprehensive experimental results demonstrate the SOTA performance of SEER.
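
The filter-then-replace idea can be illustrated with a minimal NumPy sketch. It is not the SEER implementation: the quality score (share of non-missing values), the threshold, and the "global token" (mean of the kept patches) are stand-ins assumed here for the learned filtering mechanism and the series-wise token, and the function names are hypothetical.

```python
import numpy as np

def make_patches(series, patch_len):
    """Split a 1-D series into non-overlapping patches, dropping any remainder."""
    n = len(series) // patch_len
    return np.asarray(series[: n * patch_len], dtype=float).reshape(n, patch_len)

def replace_low_quality(patches, scores, threshold):
    """Replace patches scoring below `threshold` with a global token.

    Assumption: the global token is the mean of the kept patches; in the
    paper it is a learned series-wise representation.
    """
    keep = scores >= threshold
    if not keep.any():
        return patches.copy(), keep
    global_token = patches[keep].mean(axis=0)
    return np.where(keep[:, None], patches, global_token), keep

# Toy series with one missing value inside the second patch.
series = np.arange(12.0)
series[5] = np.nan
patches = make_patches(series, patch_len=4)
scores = 1.0 - np.isnan(patches).mean(axis=1)  # hypothetical quality score
cleaned, keep = replace_low_quality(patches, scores, threshold=0.9)
```

Only the patch containing the missing value falls below the threshold, so it alone is overwritten while the other patches pass through unchanged.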

Bridging Time and Frequency: A Joint Modeling Framework for Irregular Multivariate Time Series Forecasting

Jan 31, 2026

Abstract: Irregular multivariate time series forecasting (IMTSF) is challenging due to non-uniform sampling and variable asynchronicity. These irregularities violate the equidistant assumptions of standard models, hindering local temporal modeling and rendering classical frequency-domain methods ineffective for capturing global periodic structures. To address this challenge, we propose TFMixer, a joint time-frequency modeling framework for IMTS forecasting. Specifically, TFMixer incorporates a Global Frequency Module that employs a learnable Non-Uniform Discrete Fourier Transform (NUDFT) to directly extract spectral representations from irregular timestamps. In parallel, the Local Time Module introduces a query-based patch mixing mechanism to adaptively aggregate informative temporal patches and alleviate information density imbalance. Finally, TFMixer fuses the time-domain and frequency-domain representations to generate forecasts and further leverages inverse NUDFT for explicit seasonal extrapolation. Extensive experiments on real-world datasets demonstrate the state-of-the-art performance of TFMixer.
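
To make the NUDFT step concrete, here is a minimal sketch of a plain (non-learnable) non-uniform DFT evaluated directly at irregular timestamps. The fixed frequency grid and the function name `nudft` are assumptions for illustration; TFMixer's learnable variant is not reproduced here.

```python
import numpy as np

def nudft(times, values, freqs):
    """Non-uniform DFT: X[k] = sum_n x[n] * exp(-2j * pi * f_k * t_n)."""
    times = np.asarray(times, dtype=float)
    values = np.asarray(values, dtype=float)
    freqs = np.asarray(freqs, dtype=float)
    basis = np.exp(-2j * np.pi * np.outer(freqs, times))  # shape (K, N)
    return basis @ values

# Irregularly sampled 0.5 Hz sine over ten seconds.
rng = np.random.default_rng(0)
t = np.sort(rng.uniform(0.0, 10.0, size=200))
x = np.sin(2 * np.pi * 0.5 * t)

freqs = np.linspace(0.05, 2.0, 40)  # assumed candidate frequency grid
spectrum = nudft(t, x, freqs)
dominant = freqs[np.argmax(np.abs(spectrum))]
```

On an evenly spaced grid with frequencies `k / N`, this reduces to the ordinary DFT, which is a convenient sanity check. The direct evaluation costs O(K·N), so it suits short windows rather than long sequences.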

TimeART: Towards Agentic Time Series Reasoning via Tool-Augmentation

Jan 20, 2026

Abstract: Time series data widely exist in real-world cyber-physical systems. Though analyzing and interpreting them is highly valuable, e.g., for disaster prediction and financial risk control, current workflows mainly rely on human data scientists, which incurs significant labor costs and lacks automation. To tackle this, we introduce TimeART, a framework fusing the analytical capability of strong out-of-the-box tools and the reasoning capability of Large Language Models (LLMs), which serves as a fully agentic data scientist for Time Series Question Answering (TSQA). To teach the LLM-based Time Series Reasoning Models (TSRMs) strategic tool-use, we also collect a 100k expert trajectory corpus called TimeToolBench. To enhance TSRMs' generalization capability, we then devise a four-stage training strategy, which boosts TSRMs through learning from their own early experiences and self-reflections. Experimentally, we train an 8B TSRM on TimeToolBench and equip it with the TimeART framework, and it achieves consistent state-of-the-art performance on multiple TSQA tasks, which pioneers a novel approach towards agentic time series reasoning.

TimeMar: Multi-Scale Autoregressive Modeling for Unconditional Time Series Generation

Jan 16, 2026

Abstract: Generative modeling offers a promising solution to data scarcity and privacy challenges in time series analysis. However, the structural complexity of time series, characterized by multi-scale temporal patterns and heterogeneous components, remains insufficiently addressed. In this work, we propose a structure-disentangled multiscale generation framework for time series. Our approach encodes sequences into discrete tokens at multiple temporal resolutions and performs autoregressive generation in a coarse-to-fine manner, thereby preserving hierarchical dependencies. To tackle structural heterogeneity, we introduce a dual-path VQ-VAE that disentangles trend and seasonal components, enabling the learning of semantically consistent latent representations. Additionally, we present a guidance-based reconstruction strategy, where coarse seasonal signals are utilized as priors to guide the reconstruction of fine-grained seasonal patterns. Experiments on six datasets show that our approach produces higher-quality time series than existing methods. Notably, our model achieves strong performance with a significantly reduced parameter count and exhibits superior capability in generating high-quality long-term sequences. Our implementation is available at https://anonymous.4open.science/r/TimeMAR-BC5B.
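
As a rough illustration of encoding a sequence into discrete tokens at several temporal resolutions, the sketch below average-pools a series at decreasing factors and maps each chunk to its nearest codebook row. The random codebook, the pooling factors, and the function names are assumptions; the learned encoder and the dual trend/seasonal paths of the paper are omitted.

```python
import numpy as np

def quantize(vectors, codebook):
    """Nearest-neighbour lookup: index of the closest codebook row per vector."""
    dists = ((vectors[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return dists.argmin(axis=1)

def multiscale_tokens(series, codebook, factors=(4, 2, 1)):
    """Tokenise a 1-D series from coarse to fine resolution.

    Each scale average-pools by `factor`, then groups the pooled values into
    vectors of the codebook's width and quantises them.
    """
    series = np.asarray(series, dtype=float)
    dim = codebook.shape[1]
    tokens = []
    for factor in factors:
        usable = len(series) // (factor * dim) * factor * dim
        pooled = series[:usable].reshape(-1, factor).mean(axis=1)
        tokens.append(quantize(pooled.reshape(-1, dim), codebook))
    return tokens

rng = np.random.default_rng(0)
codebook = rng.normal(size=(8, 2))  # stand-in for a learned VQ codebook
series = np.sin(np.linspace(0.0, 4 * np.pi, 16))
tokens = multiscale_tokens(series, codebook)  # coarse, medium, fine
```

The coarse scale yields the shortest token sequence, so an autoregressive model conditioned on it would only need to fill in finer detail at each later scale.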

DiffMM: Efficient Method for Accurate Noisy and Sparse Trajectory Map Matching via One Step Diffusion

Jan 13, 2026

Abstract: Map matching for sparse trajectories is a fundamental problem for many trajectory-based applications, e.g., traffic scheduling and traffic flow analysis. Existing methods for map matching are generally based on Hidden Markov Models (HMMs) or encoder-decoder frameworks. However, these methods continue to face significant challenges when handling noisy or sparsely sampled GPS trajectories. To address these limitations, we propose DiffMM, an encoder-diffusion-based map matching framework that produces effective yet efficient matching results through a one-step diffusion process. We first introduce a road segment-aware trajectory encoder that jointly embeds the input trajectory and its surrounding candidate road segments into a shared latent space through an attention mechanism. Next, we propose a one-step diffusion method to realize map matching through a shortcut model by leveraging the joint embedding of the trajectory and candidate road segments as conditioning context. We conduct extensive experiments on large-scale trajectory datasets, demonstrating that our approach consistently outperforms state-of-the-art map matching methods in terms of both accuracy and efficiency, particularly for sparse trajectories and complex road network topologies.

Spatial-Temporal Feedback Diffusion Guidance for Controlled Traffic Imputation

Jan 08, 2026

Abstract: Imputing missing values in spatial-temporal traffic data is essential for intelligent transportation systems. Among advanced imputation methods, score-based diffusion models have demonstrated competitive performance. These models generate data by reversing a noising process, using observed values as conditional guidance. However, existing diffusion models typically apply a uniform guidance scale across both spatial and temporal dimensions, which is inadequate for nodes with high missing data rates. Sparse observations provide insufficient conditional guidance, causing the generative process to drift toward the learned prior distribution rather than closely following the conditional observations, resulting in suboptimal imputation performance. To address this, we propose FENCE, a spatial-temporal feedback diffusion guidance method designed to adaptively control guidance scales during imputation. First, FENCE introduces a dynamic feedback mechanism that adjusts the guidance scale based on posterior likelihood approximations. The guidance scale is increased when generated values diverge from observations and reduced when alignment improves, preventing overcorrection. Second, because alignment to observations varies across nodes and denoising steps, a global guidance scale for all nodes is suboptimal. FENCE computes guidance scales at the cluster level by grouping nodes based on their attention scores, leveraging spatial-temporal correlations to provide more accurate guidance. Experimental results on real-world traffic datasets show that FENCE significantly enhances imputation accuracy.
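
The feedback rule described above (raise the guidance scale when generated values drift from observations, lower it when alignment improves) can be sketched per cluster as follows. This is an illustrative controller only: the mean-absolute-gap signal, the exponential update, and the `gain`, `lo`, and `hi` parameters are assumptions, not the posterior likelihood approximation used by FENCE.

```python
import numpy as np

def masked_error(generated, observed, mask):
    """Mean absolute gap on observed entries only, one value per cluster row."""
    count = np.maximum(mask.sum(axis=1), 1)
    return (np.abs(generated - observed) * mask).sum(axis=1) / count

def update_scale(scale, prev_err, curr_err, gain=0.5, lo=0.1, hi=10.0):
    """Raise the scale where the gap grew and lower it where the gap shrank."""
    rel_change = (curr_err - prev_err) / (prev_err + 1e-8)
    return np.clip(scale * np.exp(gain * rel_change), lo, hi)

# Two clusters: the first drifts away from its observations, the second aligns.
scale = np.ones(2)
prev_err = np.array([1.0, 1.0])
curr_err = np.array([2.0, 0.5])
scale = update_scale(scale, prev_err, curr_err)
```

Clipping keeps the scale bounded, which is one simple way to avoid the overcorrection the abstract mentions; keeping one scale per cluster mirrors the cluster-level guidance described there.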

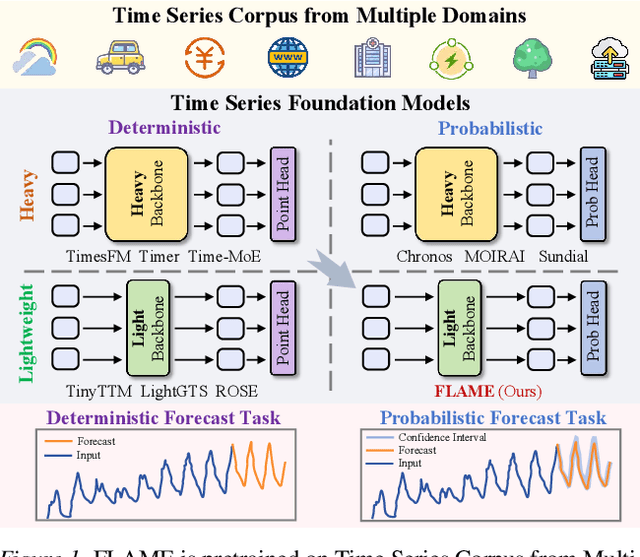

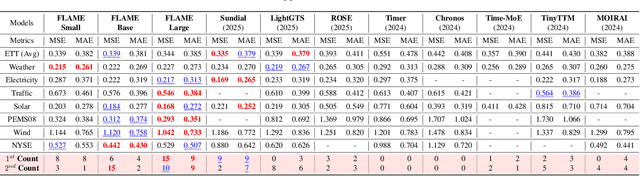

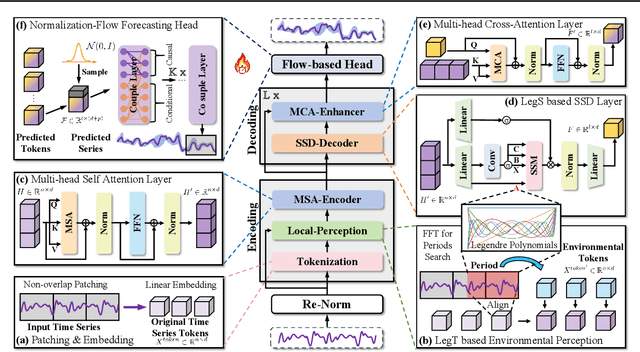

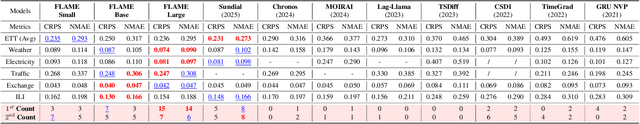

FLAME: Flow Enhanced Legendre Memory Models for General Time Series Forecasting

Dec 16, 2025

Abstract: In this work, we introduce FLAME, a family of extremely lightweight and capable Time Series Foundation Models, which support both deterministic and probabilistic forecasting via generative probabilistic modeling, thus ensuring both efficiency and robustness. FLAME utilizes the Legendre Memory for strong generalization capabilities. By adapting variants of Legendre Memory, i.e., translated Legendre (LegT) and scaled Legendre (LegS), in the Encoding and Decoding phases, FLAME can effectively capture the inherent inductive bias within data and make efficient long-range inferences. To enhance the accuracy of probabilistic forecasting while remaining efficient, FLAME adopts a Normalizing Flow based forecasting head, which can model arbitrarily intricate distributions over the forecasting horizon in a generative manner. Comprehensive experiments on well-recognized benchmarks, including TSFM-Bench and ProbTS, demonstrate the consistent state-of-the-art zero-shot performance of FLAME on both deterministic and probabilistic forecasting tasks.
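
The intuition behind a Legendre memory is that a window of history can be summarized by a handful of Legendre polynomial coefficients. The sketch below shows only that projection, using a least-squares fit over a fixed window; it is not the LegT or LegS recurrence and not FLAME's encoder, and the function names and the order of 16 are assumptions.

```python
import numpy as np
from numpy.polynomial import legendre

def compress_window(window, order):
    """Least-squares projection of a window onto the first `order` Legendre polynomials."""
    grid = np.linspace(-1.0, 1.0, len(window))
    return legendre.legfit(grid, window, order - 1)

def reconstruct_window(coeffs, length):
    """Evaluate the Legendre series back on a grid of `length` points."""
    grid = np.linspace(-1.0, 1.0, length)
    return legendre.legval(grid, coeffs)

# A 256-step window with a linear trend plus two full sine cycles.
t = np.linspace(0.0, 1.0, 256)
signal = 0.5 * t + np.sin(2 * np.pi * 2 * t)

coeffs = compress_window(signal, order=16)  # 256 values -> 16 coefficients
approx = reconstruct_window(coeffs, len(signal))
max_err = np.max(np.abs(signal - approx))
```

A smooth window like this one is recovered closely from far fewer numbers than it contains, which is the property that makes a fixed-size polynomial state attractive for long-range inference.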

SculptDrug: A Spatial Condition-Aware Bayesian Flow Model for Structure-based Drug Design

Nov 16, 2025

Abstract: Structure-based drug design (SBDD) has emerged as a popular approach in drug discovery, leveraging three-dimensional protein structures to generate drug ligands. However, existing generative models encounter several key challenges: (1) incorporating boundary condition constraints, (2) integrating hierarchical structural conditions, and (3) ensuring spatial modeling fidelity. To address these limitations, we propose SculptDrug, a spatial condition-aware generative model based on Bayesian flow networks (BFNs). First, SculptDrug follows a BFN-based framework and employs a progressive denoising strategy to ensure spatial modeling fidelity, iteratively refining atom positions while enhancing local interactions for precise spatial alignment. Second, we introduce a Boundary Awareness Block that incorporates protein surface constraints into the generative process to ensure that generated ligands are geometrically compatible with the target protein. Third, we design a Hierarchical Encoder that captures global structural context while preserving fine-grained molecular interactions, ensuring overall consistency and accurate ligand-protein conformations. We evaluate SculptDrug on the CrossDocked dataset, and experimental results demonstrate that SculptDrug outperforms state-of-the-art baselines, highlighting the effectiveness of spatial condition-aware modeling.
