Gao Cong

STRATA-TS: Selective Knowledge Transfer for Urban Time Series Forecasting with Retrieval-Guided Reasoning

Aug 26, 2025

Abstract:Urban forecasting models often face a severe data imbalance problem: only a few cities have dense, long-span records, while many others expose short or incomplete histories. Direct transfer from data-rich to data-scarce cities is unreliable because only a limited subset of source patterns truly benefits the target domain, whereas indiscriminate transfer risks introducing noise and negative transfer. We present STRATA-TS (Selective TRAnsfer via TArget-aware retrieval for Time Series), a framework that combines domain-adapted retrieval with reasoning-capable large models to improve forecasting in scarce data regimes. STRATA-TS employs a patch-based temporal encoder to identify source subsequences that are semantically and dynamically aligned with the target query. These retrieved exemplars are then injected into a retrieval-guided reasoning stage, where an LLM performs structured inference over target inputs and retrieved support. To enable efficient deployment, we distill the reasoning process into a compact open model via supervised fine-tuning. Extensive experiments on three parking availability datasets across Singapore, Nottingham, and Glasgow demonstrate that STRATA-TS consistently outperforms strong forecasting and transfer baselines, while providing interpretable knowledge transfer pathways.
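The retrieval step described in this abstract can be pictured with a small sketch. It is not the STRATA-TS encoder: the learned patch-based temporal encoder is replaced here by plain z-normalized patches compared with cosine similarity, and all function names (`make_patches`, `z_normalize`, `retrieve_top_k`) and the toy data are assumptions made for illustration only.

```python
import math


def make_patches(series, patch_len, stride=1):
    """Slice a series into fixed-length, possibly overlapping patches."""
    return [series[i:i + patch_len]
            for i in range(0, len(series) - patch_len + 1, stride)]


def z_normalize(patch):
    """Remove level and scale so patches are compared by shape only."""
    mean = sum(patch) / len(patch)
    std = math.sqrt(sum((x - mean) ** 2 for x in patch) / len(patch))
    if std == 0.0:
        return [0.0] * len(patch)
    return [(x - mean) / std for x in patch]


def cosine(a, b):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_top_k(query, source, stride=1, k=2):
    """Return the k (score, start_offset) pairs most similar to the query."""
    q = z_normalize(query)
    scored = []
    for idx, patch in enumerate(make_patches(source, len(query), stride)):
        scored.append((cosine(q, z_normalize(patch)), idx * stride))
    # Highest similarity first; ties broken by earliest offset.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return scored[:k]


# Toy "data-rich" source series with two peaks of different magnitude.
source = [10, 12, 15, 12, 10, 30, 30, 30, 31, 20, 24, 30, 24, 20]
# Short target query with the same peak shape at a much smaller scale.
query = [1.0, 2.0, 3.5, 2.0, 1.0]

hits = retrieve_top_k(query, source, k=2)
for score, start in hits:
    print(f"offset={start} similarity={score:.3f}")
```

Because each patch is normalized before comparison, the two peaked source segments are retrieved even though their magnitudes differ from the query, which is the kind of shape-level alignment a retrieval stage needs before any reasoning over the exemplars.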

Mixture-of-Experts for Personalized and Semantic-Aware Next Location Prediction

May 30, 2025

Abstract:Next location prediction plays a critical role in understanding human mobility patterns. However, existing approaches face two core limitations: (1) they fall short in capturing the complex, multi-functional semantics of real-world locations; and (2) they lack the capacity to model heterogeneous behavioral dynamics across diverse user groups. To tackle these challenges, we introduce NextLocMoE, a novel framework built upon large language models (LLMs) and structured around a dual-level Mixture-of-Experts (MoE) design. Our architecture comprises two specialized modules: a Location Semantics MoE that operates at the embedding level to encode rich functional semantics of locations, and a Personalized MoE embedded within the Transformer backbone to dynamically adapt to individual user mobility patterns. In addition, we incorporate a history-aware routing mechanism that leverages long-term trajectory data to enhance expert selection and ensure prediction stability. Empirical evaluations across several real-world urban datasets show that NextLocMoE achieves superior performance in terms of predictive accuracy, cross-domain generalization, and interpretability.

TransferTraj: A Vehicle Trajectory Learning Model for Region and Task Transferability

May 19, 2025

Abstract:Vehicle GPS trajectories provide valuable movement information that supports various downstream tasks and applications. A desirable trajectory learning model should be able to transfer across regions and tasks without retraining, avoiding both the need to maintain multiple specialized models and subpar performance when training data is limited. However, each region has its unique spatial features and contexts, which are reflected in vehicle movement patterns and are difficult to generalize. Additionally, transferring across different tasks faces technical challenges due to the varying input-output structures required for each task. Existing efforts towards transferability primarily involve learning embedding vectors for trajectories, which perform poorly in region transfer and require retraining of prediction modules for task transfer. To address these challenges, we propose TransferTraj, a vehicle GPS trajectory learning model that excels in both region and task transferability. For region transferability, we introduce RTTE as the main learnable module within TransferTraj. It integrates spatial, temporal, POI, and road network modalities of trajectories to effectively manage variations in spatial context distribution across regions. It also introduces a TRIE module for incorporating relative information of spatial features and a spatial context MoE module for handling movement patterns in diverse contexts. For task transferability, we propose a task-transferable input-output scheme that unifies the input-output structure of different tasks into the masking and recovery of modalities and trajectory points. This approach allows TransferTraj to be pre-trained once and transferred to different tasks without retraining. Extensive experiments on three real-world vehicle trajectory datasets under task transfer, zero-shot, and few-shot region transfer validate TransferTraj's effectiveness.

CORAG: A Cost-Constrained Retrieval Optimization System for Retrieval-Augmented Generation

Nov 01, 2024

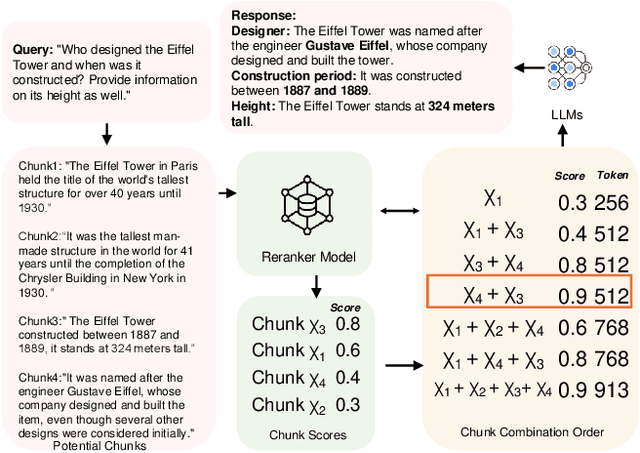

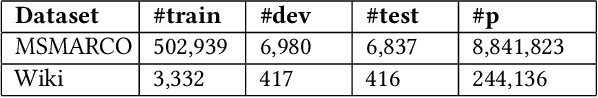

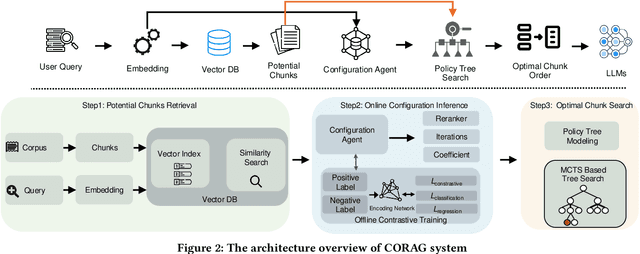

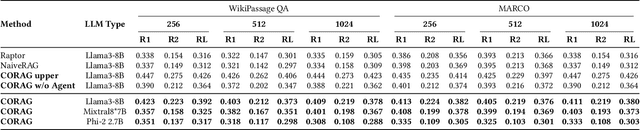

Abstract:Large Language Models (LLMs) have demonstrated remarkable generation capabilities but often struggle to access up-to-date information, which can lead to hallucinations. Retrieval-Augmented Generation (RAG) addresses this issue by incorporating knowledge from external databases, enabling more accurate and relevant responses. Due to the context window constraints of LLMs, it is impractical to input the entire external database context directly into the model. Instead, only the most relevant information, referred to as chunks, is selectively retrieved. However, current RAG research faces three key challenges. First, existing solutions often select each chunk independently, overlooking potential correlations among them. Second, in practice the utility of chunks is non-monotonic, meaning that adding more chunks can decrease overall utility. Traditional methods emphasize maximizing the number of included chunks, which can inadvertently compromise performance. Third, each type of user query possesses unique characteristics that require tailored handling, an aspect that current approaches do not fully consider. To overcome these challenges, we propose CORAG, a cost-constrained retrieval optimization system for retrieval-augmented generation. We employ a Monte Carlo Tree Search (MCTS) based policy framework to find optimal chunk combinations sequentially, allowing for a comprehensive consideration of correlations among chunks. Additionally, rather than viewing budget exhaustion as a termination condition, we integrate budget constraints into the optimization of chunk combinations, effectively addressing the non-monotonicity of chunk utility.
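The non-monotonicity argument in this abstract is easy to see on a toy instance. The sketch below is not CORAG and does not use MCTS: it substitutes an exhaustive search over chunk subsets, which is only feasible for a handful of chunks. The chunk names, costs, gains, and overlap penalties are invented for illustration, as are the helper names `utility`, `greedy_fill`, and `best_subset`.

```python
from itertools import combinations

# Hypothetical chunks: token cost and stand-alone relevance gain.
CHUNKS = {
    "a": {"cost": 3, "gain": 5.0},
    "b": {"cost": 3, "gain": 4.5},
    "c": {"cost": 2, "gain": 3.0},
    "d": {"cost": 2, "gain": 1.0},
}
# Hypothetical pairwise penalties for redundant or conflicting chunks.
OVERLAP = {
    frozenset(("a", "b")): 5.0,
    frozenset(("c", "d")): 1.5,
}


def cost(subset):
    """Total budget consumed by a set of chunks."""
    return sum(CHUNKS[name]["cost"] for name in subset)


def utility(subset):
    """Sum of gains minus penalties for every correlated pair included."""
    total = sum(CHUNKS[name]["gain"] for name in subset)
    for pair in combinations(sorted(subset), 2):
        total -= OVERLAP.get(frozenset(pair), 0.0)
    return total


def greedy_fill(budget):
    """Baseline: add chunks by descending gain until the budget is used up."""
    chosen, remaining = [], budget
    for name in sorted(CHUNKS, key=lambda n: -CHUNKS[n]["gain"]):
        if CHUNKS[name]["cost"] <= remaining:
            chosen.append(name)
            remaining -= CHUNKS[name]["cost"]
    return chosen


def best_subset(budget):
    """Brute-force stand-in for a search policy: best subset within budget."""
    best, best_utility = (), 0.0
    names = sorted(CHUNKS)
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            if cost(subset) <= budget and utility(subset) > best_utility:
                best, best_utility = subset, utility(subset)
    return list(best)


budget = 8
greedy = greedy_fill(budget)
optimal = best_subset(budget)
print("greedy :", greedy, utility(greedy), "cost", cost(greedy))
print("optimal:", optimal, utility(optimal), "cost", cost(optimal))
```

In this instance the greedy baseline spends the whole budget on `a`, `b`, and `c` and scores 7.5, while the best combination is `a` and `c` alone at 8.0 with budget left over. That gap is why budget exhaustion makes a poor stopping rule when chunks interact.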

FlexTSF: A Universal Forecasting Model for Time Series with Variable Regularities

Oct 30, 2024

Abstract:Developing a foundation model for time series forecasting across diverse domains has attracted significant attention in recent years. Existing works typically assume regularly sampled, well-structured data, limiting their applicability to more generalized scenarios where time series often contain missing values, unequal sequence lengths, and irregular time intervals between measurements. To cover diverse domains and handle variable regularities, we propose FlexTSF, a universal time series forecasting model that generalizes better and natively supports both regular and irregular time series. FlexTSF produces forecasts in an autoregressive manner and incorporates three novel designs: VT-Norm, a normalization strategy to ablate data domain barriers; IVP Patcher, a patching module to learn representations from flexibly structured time series; and LED attention, an attention mechanism to seamlessly integrate these two and propagate forecasts with awareness of domain and time information. Experiments on 12 datasets show that FlexTSF outperforms state-of-the-art forecasting models designed specifically for either regular or irregular time series. Furthermore, after self-supervised pre-training, FlexTSF shows exceptional performance in both zero-shot and few-shot settings for time series forecasting.

PTR: A Pre-trained Language Model for Trajectory Recovery

Oct 18, 2024

Abstract:Spatiotemporal trajectory data is vital for web-of-things services and is extensively collected and analyzed by web-based hardware and platforms. However, issues such as service interruptions and network instability often lead to sparsely recorded trajectories, resulting in a loss of detailed movement data. As a result, recovering these trajectories to restore missing information becomes essential. Despite progress, several challenges remain unresolved. First, the lack of large-scale dense trajectory data hampers the performance of existing deep learning methods, which rely heavily on abundant data for supervised training. Second, current methods struggle to generalize across sparse trajectories with varying sampling intervals, necessitating separate re-training for each interval and increasing computational costs. Third, external factors crucial for the recovery of missing points are not fully incorporated. To address these challenges, we propose a framework called PTR. This framework mitigates the issue of limited dense trajectory data by leveraging the capabilities of pre-trained language models (PLMs). PTR incorporates an explicit trajectory prompt and is trained on datasets with multiple sampling intervals, enabling it to generalize effectively across different intervals in sparse trajectories. To capture external factors, we introduce an implicit trajectory prompt that models road conditions, providing richer information for recovering missing points. Additionally, we present a trajectory embedder that encodes trajectory points and transforms the embeddings of both observed and missing points into a format comprehensible to PLMs. Experimental results on two public trajectory datasets with three sampling intervals demonstrate the efficacy and scalability of PTR.

Context-Enhanced Multi-View Trajectory Representation Learning: Bridging the Gap through Self-Supervised Models

Oct 17, 2024

Abstract:Modeling trajectory data with general-purpose dense representations has become a prevalent paradigm for various downstream applications, such as trajectory classification, travel time estimation and similarity computation. However, existing methods typically rely on trajectories from a single spatial view, limiting their ability to capture the rich contextual information that is crucial for gaining deeper insights into movement patterns across different geospatial contexts. To this end, we propose MVTraj, a novel multi-view modeling method for trajectory representation learning. MVTraj integrates diverse contextual knowledge, from GPS to road network and points-of-interest, to provide a more comprehensive understanding of trajectory data. To align the learning process across multiple views, we utilize GPS trajectories as a bridge and employ self-supervised pretext tasks to capture and distinguish movement patterns across different spatial views. Following this, we treat trajectories from different views as distinct modalities and apply a hierarchical cross-modal interaction module to fuse the representations, thereby enriching the knowledge derived from multiple sources. Extensive experiments on real-world datasets demonstrate that MVTraj significantly outperforms existing baselines in tasks associated with various spatial views, validating its effectiveness and practical utility in spatio-temporal modeling.

NextLocLLM: Next Location Prediction Using LLMs

Oct 11, 2024

Abstract:Next location prediction is a critical task in human mobility analysis and serves as a foundation for various downstream applications. Existing methods typically rely on discrete IDs to represent locations, which inherently overlook spatial relationships and cannot generalize across cities. In this paper, we propose NextLocLLM, which leverages the advantages of large language models (LLMs) in processing natural language descriptions and their strong generalization capabilities for next location prediction. Specifically, instead of using IDs, NextLocLLM encodes locations based on continuous spatial coordinates to better model spatial relationships. These coordinates are further normalized to enable robust cross-city generalization. Another highlight of NextLocLLM is its LLM-enhanced POI embeddings. It utilizes LLMs' ability to encode each POI category's natural language description into embeddings. These embeddings are then integrated via nonlinear projections to form the LLM-enhanced POI embeddings, effectively capturing locations' functional attributes. Furthermore, task and data prompt prefixes, together with trajectory embeddings, are incorporated as input for a partly frozen LLM backbone. NextLocLLM further introduces a prediction retrieval module to ensure structural consistency in prediction. Experiments show that NextLocLLM outperforms existing models in next location prediction, excelling in both supervised and zero-shot settings.
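The abstract does not say which normalization NextLocLLM applies to coordinates, so the sketch below assumes a simple per-city z-score on projected x/y coordinates purely to illustrate why normalization helps cross-city transfer. The function names `fit_city_stats` and `normalize` and both toy cities are hypothetical.

```python
import math


def _mean_std(values):
    """Population mean and standard deviation, with a guard for zero spread."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0.0:
        std = 1.0  # avoid division by zero for degenerate inputs
    return mean, std


def fit_city_stats(points):
    """Estimate per-axis statistics from one city's (x, y) locations."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return _mean_std(xs), _mean_std(ys)


def normalize(points, stats):
    """Map raw coordinates to a city-independent, unit-scale frame."""
    (mx, sx), (my, sy) = stats
    return [((x - mx) / sx, (y - my) / sy) for x, y in points]


# Two toy cities with the same relative layout but different origin and scale.
city_a = [(1000.0, 2000.0), (2000.0, 2000.0), (3000.0, 4000.0)]
city_b = [(52000.0, 9000.0), (54000.0, 9000.0), (56000.0, 13000.0)]

norm_a = normalize(city_a, fit_city_stats(city_a))
norm_b = normalize(city_b, fit_city_stats(city_b))

for pa, pb in zip(norm_a, norm_b):
    print(tuple(round(v, 3) for v in pa), tuple(round(v, 3) for v in pb))
```

After normalization the two cities produce matching coordinates, so a model trained on one city sees inputs in the same range when applied to the other. Discrete location IDs cannot offer this, since an ID learned in one city carries no meaning in another.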

Self-supervised Learning for Geospatial AI: A Survey

Aug 22, 2024

Abstract:The proliferation of geospatial data in urban and territorial environments has significantly facilitated the development of geospatial artificial intelligence (GeoAI) across various urban applications. Given that geospatial data is vast yet sparsely labeled, there is a critical need for techniques that can effectively leverage such data without heavy reliance on labeled datasets. This requirement aligns with the principles of self-supervised learning (SSL), which has attracted increasing attention for its adoption in geospatial data. This paper conducts a comprehensive and up-to-date survey of SSL techniques applied to or developed for three primary data (geometric) types prevalent in geospatial vector data: points, polylines, and polygons. We systematically categorize various SSL techniques into predictive and contrastive methods, discussing their application with respect to each data type in enhancing generalization across various downstream tasks. Furthermore, we review the emerging trends of SSL for GeoAI, and several task-specific SSL techniques. Finally, we discuss several key challenges in the current research and outline promising directions for future investigation. By presenting a structured analysis of relevant studies, this paper aims to inspire continued advancements in the integration of SSL with GeoAI, encouraging innovative methods for harnessing the power of geospatial data.

UrbanLLM: Autonomous Urban Activity Planning and Management with Large Language Models

Jun 18, 2024

Abstract:Location-based services play a critical role in improving the quality of our daily lives. Despite the proliferation of numerous specialized AI models within the spatio-temporal context of location-based services, these models struggle to autonomously tackle problems regarding complex urban planning and management. To bridge this gap, we introduce UrbanLLM, a fine-tuned large language model (LLM) designed to tackle diverse problems in urban scenarios. UrbanLLM functions as a problem-solver by decomposing urban-related queries into manageable sub-tasks, identifying suitable spatio-temporal AI models for each sub-task, and generating comprehensive responses to the given queries. Our experimental results indicate that UrbanLLM significantly outperforms other established LLMs, such as Llama and the GPT series, in handling problems concerning complex urban activity planning and management. UrbanLLM exhibits considerable potential in enhancing the effectiveness of solving problems in urban scenarios, reducing the workload of and reliance on human experts.
