Kaiqi Zhao

AnchorGK: Anchor-based Incremental and Stratified Graph Learning Framework for Inductive Spatio-Temporal Kriging

Dec 25, 2025

Abstract:Spatio-temporal kriging is a fundamental problem in sensor networks, driven by the sparsity of deployed sensors and the resulting missing observations. Although recent approaches model spatial and temporal correlations, they often under-exploit two practical characteristics of real deployments: the sparse spatial distribution of locations and the heterogeneous availability of auxiliary features across locations. To address these challenges, we propose AnchorGK, an Anchor-based Incremental and Stratified Graph Learning framework for inductive spatio-temporal kriging. AnchorGK introduces anchor locations to stratify the data in a principled manner. Anchors are constructed according to feature availability, and strata are then formed around these anchors. This stratification serves two complementary roles. First, it explicitly represents and continuously updates correlations between unobserved regions and surrounding observed locations within a graph learning framework. Second, it enables the systematic use of all available features across strata via an incremental representation mechanism, mitigating feature incompleteness without discarding informative signals. Building on the stratified structure, we design a dual-view graph learning layer that jointly aggregates feature-relevant and location-relevant information, learning stratum-specific representations that support accurate inference under inductive settings. Extensive experiments on multiple benchmark datasets demonstrate that AnchorGK consistently outperforms state-of-the-art baselines for spatio-temporal kriging.

Data-Augmented Quantization-Aware Knowledge Distillation

Sep 04, 2025

Abstract:Quantization-aware training (QAT) and Knowledge Distillation (KD) are combined to achieve competitive performance in creating low-bit deep learning models. Existing KD and QAT works focus on improving the accuracy of quantized models from the network output perspective by designing better KD loss functions or optimizing QAT's forward and backward propagation. However, limited attention has been given to understanding the impact of input transformations, such as data augmentation (DA). The relationship between quantization-aware KD and DA remains unexplored. In this paper, we address the question: how should one select a good DA in quantization-aware KD, especially for low-precision models? We propose a novel metric which evaluates DAs according to their capacity to maximize the Contextual Mutual Information--the information not directly related to an image's label--while also ensuring the predictions for each class are close to the ground truth labels on average. The proposed method automatically ranks and selects DAs, requiring minimal training overhead, and it is compatible with any KD or QAT algorithm. Extensive evaluations demonstrate that selecting DA strategies using our metric significantly improves state-of-the-art QAT and KD works across various model architectures and datasets.

Situational-Constrained Sequential Resources Allocation via Reinforcement Learning

Jun 17, 2025

Abstract:Sequential Resource Allocation with situational constraints presents a significant challenge in real-world applications, where resource demands and priorities are context-dependent. This paper introduces a novel framework, SCRL, to address this problem. We formalize situational constraints as logic implications and develop a new algorithm that dynamically penalizes constraint violations. To handle situational constraints effectively, we propose a probabilistic selection mechanism to overcome limitations of traditional constrained reinforcement learning (CRL) approaches. We evaluate SCRL across two scenarios: medical resource allocation during a pandemic and pesticide distribution in agriculture. Experiments demonstrate that SCRL outperforms existing baselines in satisfying constraints while maintaining high resource efficiency, showcasing its potential for real-world, context-sensitive decision-making tasks.
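The idea of a situational constraint written as a logic implication, with a penalty that grows when the constraint is violated, can be illustrated with a minimal Python sketch. This is not SCRL's algorithm: the class `SituationalConstraint`, the additive weight update, the `region_a` allocation and the thresholds 0.2 and 30 are all hypothetical choices made for illustration, and the probabilistic selection mechanism from the abstract is not modeled.

```python
from dataclasses import dataclass
from typing import Callable, Dict

State = Dict[str, float]
Allocation = Dict[str, float]


@dataclass
class SituationalConstraint:
    """Implication: condition(state) => shortfall(state, allocation) <= 0."""

    condition: Callable[[State], bool]
    shortfall: Callable[[State, Allocation], float]
    weight: float = 1.0  # penalty coefficient (hypothetical, adapted over time)

    def violation(self, state: State, allocation: Allocation) -> float:
        # If the situation does not hold, the implication is vacuously true.
        if not self.condition(state):
            return 0.0
        return max(0.0, self.shortfall(state, allocation))

    def penalty(self, state: State, allocation: Allocation) -> float:
        return self.weight * self.violation(state, allocation)

    def update_weight(self, state: State, allocation: Allocation,
                      step: float = 0.1) -> None:
        # Assumed update rule: raise the weight in proportion to the violation.
        self.weight += step * self.violation(state, allocation)


# Hypothetical rule: if the infection rate exceeds 0.2,
# region A must receive at least 30 units.
outbreak_rule = SituationalConstraint(
    condition=lambda s: s["infection_rate"] > 0.2,
    shortfall=lambda s, a: 30.0 - a["region_a"],
)

outbreak = {"infection_rate": 0.3}
calm = {"infection_rate": 0.1}
allocation = {"region_a": 20.0}

penalty_outbreak = outbreak_rule.penalty(outbreak, allocation)
penalty_calm = outbreak_rule.penalty(calm, allocation)
outbreak_rule.update_weight(outbreak, allocation)
```

In a reinforcement learning loop, a penalty like this would typically be subtracted from the task reward, so the same allocation is penalized only in the situations where the rule applies.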

MMS-VPR: Multimodal Street-Level Visual Place Recognition Dataset and Benchmark

May 18, 2025

Abstract:Existing visual place recognition (VPR) datasets predominantly rely on vehicle-mounted imagery, lack multimodal diversity and underrepresent dense, mixed-use street-level spaces, especially in non-Western urban contexts. To address these gaps, we introduce MMS-VPR, a large-scale multimodal dataset for street-level place recognition in complex, pedestrian-only environments. The dataset comprises 78,575 annotated images and 2,512 video clips captured across 207 locations in a ~70,800 $\mathrm{m}^2$ open-air commercial district in Chengdu, China. Each image is labeled with precise GPS coordinates, timestamp, and textual metadata, and covers varied lighting conditions, viewpoints, and timeframes. MMS-VPR follows a systematic and replicable data collection protocol with minimal device requirements, lowering the barrier for scalable dataset creation. Importantly, the dataset forms an inherent spatial graph with 125 edges, 81 nodes, and 1 subgraph, enabling structure-aware place recognition. We further define two application-specific subsets -- Dataset_Edges and Dataset_Points -- to support fine-grained and graph-based evaluation tasks. Extensive benchmarks using conventional VPR models, graph neural networks, and multimodal baselines show substantial improvements when leveraging multimodal and structural cues. MMS-VPR facilitates future research at the intersection of computer vision, geospatial understanding, and multimodal reasoning. The dataset is publicly available at https://huggingface.co/datasets/Yiwei-Ou/MMS-VPR.

ACE: A Cardinality Estimator for Set-Valued Queries

Mar 19, 2025

Abstract:Cardinality estimation is a fundamental functionality in database systems. Most existing cardinality estimators focus on handling predicates over numeric or categorical data. They have largely omitted an important data type, set-valued data, which frequently occurs in contemporary applications such as information retrieval and recommender systems. The few existing estimators for such data either favor high-frequency elements or rely on a partial independence assumption, which limits their practical applicability. We propose ACE, an Attention-based Cardinality Estimator for estimating the cardinality of queries over set-valued data. We first design a distillation-based data encoder to condense the dataset into a compact matrix. We then design an attention-based query analyzer to capture correlations among query elements. To handle variable-sized queries, a pooling module is introduced, followed by a regression model (MLP) to generate final cardinality estimates. We evaluate ACE on three datasets with varying query element distributions, demonstrating that ACE outperforms the state-of-the-art competitors in terms of both accuracy and efficiency.

Self-supervised Learning for Geospatial AI: A Survey

Aug 22, 2024

Abstract:The proliferation of geospatial data in urban and territorial environments has significantly facilitated the development of geospatial artificial intelligence (GeoAI) across various urban applications. Given that geospatial data is vast yet sparsely labeled, there is a critical need for techniques that can effectively leverage such data without heavy reliance on labeled datasets. This requirement aligns with the principles of self-supervised learning (SSL), which has attracted increasing attention for its adoption in geospatial data. This paper conducts a comprehensive and up-to-date survey of SSL techniques applied to or developed for three primary data (geometric) types prevalent in geospatial vector data: points, polylines, and polygons. We systematically categorize various SSL techniques into predictive and contrastive methods, discussing their application with respect to each data type in enhancing generalization across various downstream tasks. Furthermore, we review the emerging trends of SSL for GeoAI, and several task-specific SSL techniques. Finally, we discuss several key challenges in the current research and outline promising directions for future investigation. By presenting a structured analysis of relevant studies, this paper aims to inspire continued advancements in the integration of SSL with GeoAI, encouraging innovative methods for harnessing the power of geospatial data.

Self-Supervised Quantization-Aware Knowledge Distillation

Mar 17, 2024

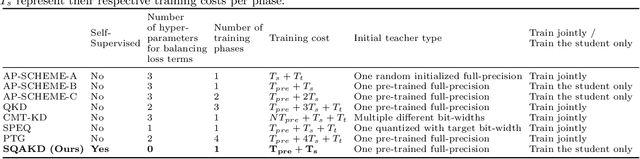

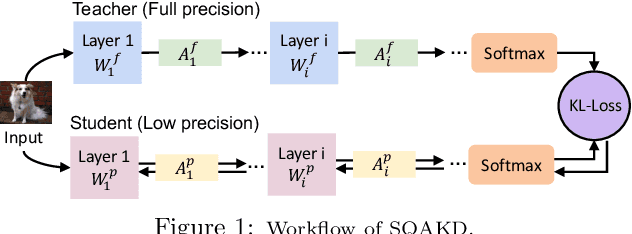

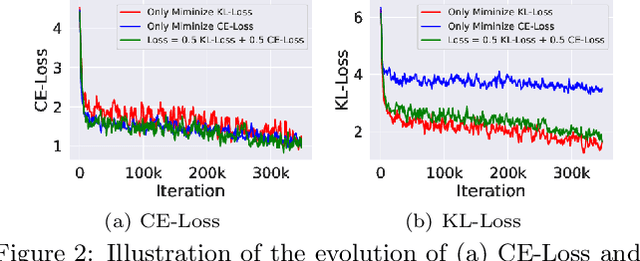

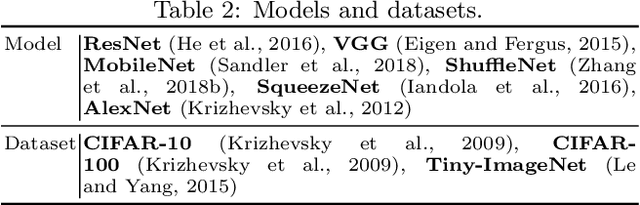

Abstract:Quantization-aware training (QAT) and Knowledge Distillation (KD) are combined to achieve competitive performance in creating low-bit deep learning models. However, existing works applying KD to QAT require tedious hyper-parameter tuning to balance the weights of different loss terms, assume the availability of labeled training data, and require complex, computationally intensive training procedures for good performance. To address these limitations, this paper proposes a novel Self-Supervised Quantization-Aware Knowledge Distillation (SQAKD) framework. SQAKD first unifies the forward and backward dynamics of various quantization functions, making it flexible for incorporating various QAT works. Then it formulates QAT as a co-optimization problem that simultaneously minimizes the KL-Loss between the full-precision and low-bit models for KD and the discretization error for quantization, without supervision from labels. A comprehensive evaluation shows that SQAKD substantially outperforms the state-of-the-art QAT and KD works for a variety of model architectures. Our code is at: https://github.com/kaiqi123/SQAKD.git.
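The two quantities named in the abstract, a KL loss between full-precision and low-bit model outputs and a discretization error from quantization, can be illustrated with a small pure-Python sketch. It is not the SQAKD implementation (see the linked repository for that): the uniform quantizer, the clipping range `[-1, 1]`, the squared-error form of the discretization error, and all function names and numbers here are assumptions chosen for illustration.

```python
import math


def uniform_quantize(x, bits, lo=-1.0, hi=1.0):
    """Clip x to [lo, hi] and round it to one of 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    step = (hi - lo) / levels
    x = min(max(x, lo), hi)
    return lo + round((x - lo) / step) * step


def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def distillation_loss(teacher_logits, student_logits, temperature=1.0):
    """KL between teacher (full-precision) and student (low-bit) predictions."""
    return kl_divergence(softmax(teacher_logits, temperature),
                         softmax(student_logits, temperature))


def discretization_error(weights, bits):
    """Sum of squared differences between weights and their quantized values."""
    return sum((w - uniform_quantize(w, bits)) ** 2 for w in weights)


# Hypothetical values: no labels are involved in either quantity.
teacher_logits = [2.0, 0.5, -1.0]
student_logits = [1.5, 0.7, -0.8]
weights = [0.73, -0.41, 0.08]

quantized = [uniform_quantize(w, bits=2) for w in weights]
kd_term = distillation_loss(teacher_logits, student_logits)
quant_term = discretization_error(weights, bits=2)
```

Neither term uses ground-truth labels, which is the sense in which such an objective is self-supervised; how the two terms are jointly optimized is specific to the paper and is not reproduced here.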

CSG: Curriculum Representation Learning for Signed Graph

Oct 17, 2023

Abstract:Signed graphs are valuable for modeling complex relationships with positive and negative connections, and Signed Graph Neural Networks (SGNNs) have become crucial tools for their analysis. However, prior to our work, no specific training plan existed for SGNNs, and the conventional random sampling approach did not address varying learning difficulties within the graph's structure. We proposed a curriculum-based training approach, where samples progress from easy to complex, inspired by human learning. To measure learning difficulty, we introduced a lightweight mechanism and created the Curriculum representation learning framework for Signed Graphs (CSG). This framework optimizes the order in which samples are presented to the SGNN model. Empirical validation across six real-world datasets showed impressive results, enhancing SGNN model accuracy by up to 23.7% in link sign prediction (AUC) and significantly improving stability with up to an 8.4 reduction in the standard deviation of AUC scores.
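The easy-to-complex ordering described above can be sketched in a few lines of Python. This is a generic curriculum schedule, not CSG itself: the abstract does not specify the difficulty measure, so the per-edge difficulty scores below are made up, and the linear pacing function and the name `curriculum_subset` are assumptions for illustration.

```python
import math


def curriculum_subset(samples, difficulty, epoch, total_epochs,
                      start_fraction=0.25):
    """Return the easiest samples available at a given epoch.

    Samples are sorted by difficulty, and the visible fraction grows
    linearly from start_fraction at epoch 0 to 1.0 at the last epoch.
    """
    ordered = sorted(samples, key=difficulty)
    progress = min(1.0, epoch / max(1, total_epochs - 1))
    fraction = start_fraction + (1.0 - start_fraction) * progress
    count = max(1, math.ceil(fraction * len(ordered)))
    return ordered[:count]


# Hypothetical signed edges: (source, target, sign, difficulty score).
edges = [
    ("a", "b", +1, 0.9),
    ("b", "c", -1, 0.1),
    ("c", "d", +1, 0.5),
    ("a", "d", -1, 0.3),
]


def edge_difficulty(edge):
    return edge[3]


first_epoch = curriculum_subset(edges, edge_difficulty, epoch=0, total_epochs=4)
last_epoch = curriculum_subset(edges, edge_difficulty, epoch=3, total_epochs=4)
```

With this schedule the model sees only the easiest edge at the start and the full, difficulty-sorted training set by the final epoch.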

SGA: A Graph Augmentation Method for Signed Graph Neural Networks

Oct 15, 2023

Abstract:Signed Graph Neural Networks (SGNNs) are vital for analyzing complex patterns in real-world signed graphs containing positive and negative links. However, three key challenges hinder current SGNN-based signed graph representation learning: sparsity in signed graphs leaves latent structures undiscovered, unbalanced triangles pose representation difficulties for SGNN models, and real-world signed graph datasets often lack supplementary information like node labels and features. These constraints limit the potential of SGNN-based representation learning. We address these issues with data augmentation techniques. Despite many graph data augmentation methods existing for unsigned graphs, none are tailored for signed graphs. Our paper introduces the novel Signed Graph Augmentation framework (SGA), comprising three main components. First, we employ the SGNN model to encode the signed graph, extracting latent structural information for candidate augmentation structures. Second, we evaluate these candidate samples (edges) and select the most beneficial ones for modifying the original training set. Third, we propose a novel augmentation perspective that assigns varying training difficulty to training samples, enabling the design of a new training strategy. Extensive experiments on six real-world datasets (Bitcoin-alpha, Bitcoin-otc, Epinions, Slashdot, Wiki-elec, and Wiki-RfA) demonstrate that SGA significantly improves performance across multiple benchmarks. Our method outperforms baselines by up to 22.2% in AUC for SGCN on Wiki-RfA, 33.3% in F1-binary, 48.8% in F1-micro, and 36.3% in F1-macro for GAT on Bitcoin-alpha in link sign prediction.

Poster: Self-Supervised Quantization-Aware Knowledge Distillation

Sep 22, 2023

Abstract:Quantization-aware training (QAT) starts with a pre-trained full-precision model and performs quantization during retraining. However, existing QAT works require supervision from the labels and they suffer from accuracy loss due to reduced precision. To address these limitations, this paper proposes a novel Self-Supervised Quantization-Aware Knowledge Distillation framework (SQAKD). SQAKD first unifies the forward and backward dynamics of various quantization functions and then reframes QAT as a co-optimization problem that simultaneously minimizes the KL-Loss and the discretization error, in a self-supervised manner. The evaluation shows that SQAKD significantly improves the performance of various state-of-the-art QAT works. SQAKD establishes stronger baselines and does not require extensive labeled training data, potentially making state-of-the-art QAT research more accessible.
