Xiaokun Luan

RACA: Representation-Aware Coverage Criteria for LLM Safety Testing

Feb 02, 2026Abstract:Recent advancements in LLMs have led to significant breakthroughs in various AI applications. However, their sophisticated capabilities also introduce severe safety concerns, particularly the generation of harmful content through jailbreak attacks. Current safety testing for LLMs often relies on static datasets and lacks systematic criteria to evaluate the quality and adequacy of these tests. While coverage criteria have been effective for smaller neural networks, they are not directly applicable to LLMs due to scalability issues and differing objectives. To address these challenges, this paper introduces RACA, a novel set of coverage criteria specifically designed for LLM safety testing. RACA leverages representation engineering to focus on safety-critical concepts within LLMs, thereby reducing dimensionality and filtering out irrelevant information. The framework operates in three stages: first, it identifies safety-critical representations using a small, expert-curated calibration set of jailbreak prompts. Second, it calculates conceptual activation scores for a given test suite based on these representations. Finally, it computes coverage results using six sub-criteria that assess both individual and compositional safety concepts. We conduct comprehensive experiments to validate RACA's effectiveness, applicability, and generalization, where the results demonstrate that RACA successfully identifies high-quality jailbreak prompts and is superior to traditional neuron-level criteria. We also showcase its practical application in real-world scenarios, such as test set prioritization and attack prompt sampling. Furthermore, our findings confirm RACA's generalization to various scenarios and its robustness across various configurations. Overall, RACA provides a new framework for evaluating the safety of LLMs, contributing a valuable technique to the field of testing for AI.

Neural Theorem Proving for Verification Conditions: A Real-World Benchmark

Jan 26, 2026Abstract:Theorem proving is fundamental to program verification, where the automated proof of Verification Conditions (VCs) remains a primary bottleneck. Real-world program verification frequently encounters hard VCs that existing Automated Theorem Provers (ATPs) cannot prove, leading to a critical need for extensive manual proofs that burden practical application. While Neural Theorem Proving (NTP) has achieved significant success in mathematical competitions, demonstrating the potential of machine learning approaches to formal reasoning, its application to program verification--particularly VC proving--remains largely unexplored. Despite existing work on annotation synthesis and verification-related theorem proving, no benchmark has specifically targeted this fundamental bottleneck: automated VC proving. This work introduces Neural Theorem Proving for Verification Conditions (NTP4VC), presenting the first real-world multi-language benchmark for this task. From real-world projects such as Linux and Contiki-OS kernel, our benchmark leverages industrial pipelines (Why3 and Frama-C) to generate semantically equivalent test cases across formal languages of Isabelle, Lean, and Rocq. We evaluate large language models (LLMs), both general-purpose and those fine-tuned for theorem proving, on NTP4VC. Results indicate that although LLMs show promise in VC proving, significant challenges remain for program verification, highlighting a large gap and opportunity for future research.

Automata-Based Steering of Large Language Models for Diverse Structured Generation

Nov 14, 2025Abstract:Large language models (LLMs) are increasingly tasked with generating structured outputs. While structured generation methods ensure validity, they often lack output diversity, a critical limitation that we confirm in our preliminary study. We propose a novel method to enhance diversity in automaton-based structured generation. Our approach utilizes automata traversal history to steer LLMs towards novel structural patterns. Evaluations show our method significantly improves structural and content diversity while maintaining comparable generation efficiency. Furthermore, we conduct a case study showcasing the effectiveness of our method in generating diverse test cases for testing open-source libraries.

Psychometric-Based Evaluation for Theorem Proving with Large Language Models

Feb 02, 2025

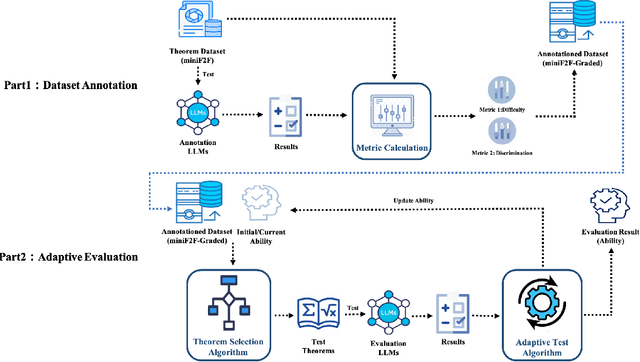

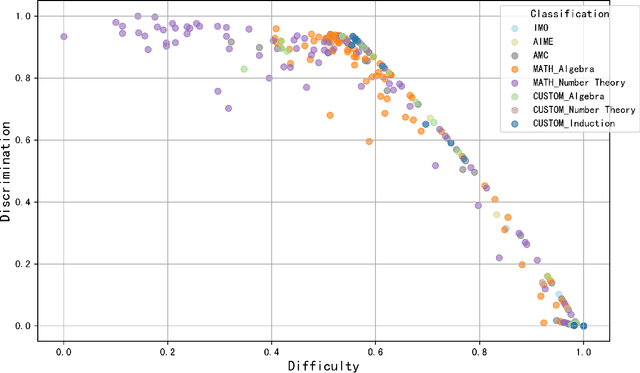

Abstract:Large language models (LLMs) for formal theorem proving have become a prominent research focus. At present, the proving ability of these LLMs is mainly evaluated through proof pass rates on datasets such as miniF2F. However, this evaluation method overlooks the varying importance of theorems. As a result, it fails to highlight the real performance disparities between LLMs and leads to high evaluation costs. This study proposes a psychometric-based evaluation method for theorem proving with LLMs, comprising two main components: Dataset Annotation and Adaptive Evaluation. First, we propose a metric calculation method to annotate the dataset with difficulty and discrimination metrics. Specifically, we annotate each theorem in the miniF2F dataset and grade them into varying difficulty levels according to the performance of LLMs, resulting in an enhanced dataset: miniF2F-Graded. Experimental results show that the difficulty grading in miniF2F-Graded better reflects the theorem difficulty perceived by LLMs. Secondly, we design an adaptive evaluation method to dynamically select the most suitable theorems for testing based on the annotated metrics and the real-time performance of LLMs. We apply this method to evaluate 10 LLMs. The results show that our method finely highlights the performance disparities between LLMs. It also reduces evaluation costs by using only 23% of the theorems in the dataset.

The Fusion of Large Language Models and Formal Methods for Trustworthy AI Agents: A Roadmap

Dec 09, 2024

Abstract:Large Language Models (LLMs) have emerged as a transformative AI paradigm, profoundly influencing daily life through their exceptional language understanding and contextual generation capabilities. Despite their remarkable performance, LLMs face a critical challenge: the propensity to produce unreliable outputs due to the inherent limitations of their learning-based nature. Formal methods (FMs), on the other hand, are a well-established computation paradigm that provides mathematically rigorous techniques for modeling, specifying, and verifying the correctness of systems. FMs have been extensively applied in mission-critical software engineering, embedded systems, and cybersecurity. However, the primary challenge impeding the deployment of FMs in real-world settings lies in their steep learning curves, the absence of user-friendly interfaces, and issues with efficiency and adaptability. This position paper outlines a roadmap for advancing the next generation of trustworthy AI systems by leveraging the mutual enhancement of LLMs and FMs. First, we illustrate how FMs, including reasoning and certification techniques, can help LLMs generate more reliable and formally certified outputs. Subsequently, we highlight how the advanced learning capabilities and adaptability of LLMs can significantly enhance the usability, efficiency, and scalability of existing FM tools. Finally, we show that unifying these two computation paradigms -- integrating the flexibility and intelligence of LLMs with the rigorous reasoning abilities of FMs -- has transformative potential for the development of trustworthy AI software systems. We acknowledge that this integration has the potential to enhance both the trustworthiness and efficiency of software engineering practices while fostering the development of intelligent FM tools capable of addressing complex yet real-world challenges.

Protecting Deep Learning Model Copyrights with Adversarial Example-Free Reuse Detection

Jul 04, 2024

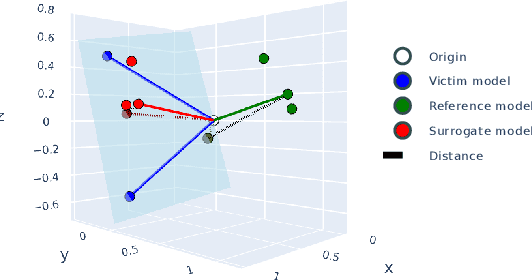

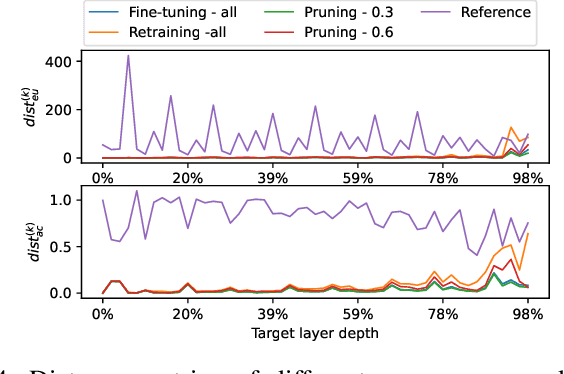

Abstract:Model reuse techniques can reduce the resource requirements for training high-performance deep neural networks (DNNs) by leveraging existing models. However, unauthorized reuse and replication of DNNs can lead to copyright infringement and economic loss to the model owner. This underscores the need to analyze the reuse relation between DNNs and develop copyright protection techniques to safeguard intellectual property rights. Existing white-box testing-based approaches cannot address the common heterogeneous reuse case where the model architecture is changed, and DNN fingerprinting approaches heavily rely on generating adversarial examples with good transferability, which is known to be challenging in the black-box setting. To bridge the gap, we propose NFARD, a Neuron Functionality Analysis-based Reuse Detector, which only requires normal test samples to detect reuse relations by measuring the models' differences on a newly proposed model characterization, i.e., neuron functionality (NF). A set of NF-based distance metrics is designed to make NFARD applicable to both white-box and black-box settings. Moreover, we devise a linear transformation method to handle heterogeneous reuse cases by constructing the optimal projection matrix for dimension consistency, significantly extending the application scope of NFARD. To the best of our knowledge, this is the first adversarial example-free method that exploits neuron functionality for DNN copyright protection. As a side contribution, we constructed a reuse detection benchmark named Reuse Zoo that covers various practical reuse techniques and popular datasets. Extensive evaluations on this comprehensive benchmark show that NFARD achieves F1 scores of 0.984 and 1.0 for detecting reuse relationships in black-box and white-box settings, respectively, while generating test suites 2 ~ 99 times faster than previous methods.

Towards Large Language Model Aided Program Refinement

Jun 26, 2024Abstract:Program refinement involves correctness-preserving transformations from formal high-level specification statements into executable programs. Traditional verification tool support for program refinement is highly interactive and lacks automation. On the other hand, the emergence of large language models (LLMs) enables automatic code generations from informal natural language specifications. However, code generated by LLMs is often unreliable. Moreover, the opaque procedure from specification to code provided by LLM is an uncontrolled black box. We propose LLM4PR, a tool that combines formal program refinement techniques with informal LLM-based methods to (1) transform the specification to preconditions and postconditions, (2) automatically build prompts based on refinement calculus, (3) interact with LLM to generate code, and finally, (4) verify that the generated code satisfies the conditions of refinement calculus, thus guaranteeing the correctness of the code. We have implemented our tool using GPT4, Coq, and Coqhammer, and evaluated it on the HumanEval and EvalPlus datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge