Protecting Deep Learning Model Copyrights with Adversarial Example-Free Reuse Detection

Paper and Code

Jul 04, 2024

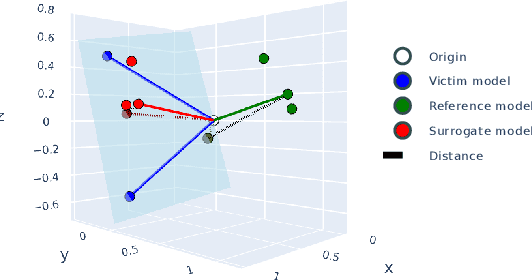

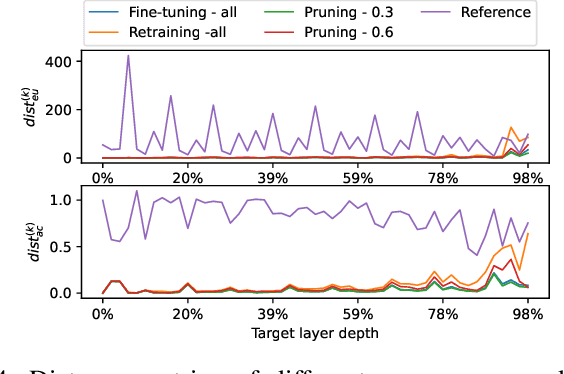

Model reuse techniques can reduce the resource requirements for training high-performance deep neural networks (DNNs) by leveraging existing models. However, unauthorized reuse and replication of DNNs can lead to copyright infringement and economic loss to the model owner. This underscores the need to analyze the reuse relation between DNNs and develop copyright protection techniques to safeguard intellectual property rights. Existing white-box testing-based approaches cannot address the common heterogeneous reuse case where the model architecture is changed, and DNN fingerprinting approaches heavily rely on generating adversarial examples with good transferability, which is known to be challenging in the black-box setting. To bridge the gap, we propose NFARD, a Neuron Functionality Analysis-based Reuse Detector, which only requires normal test samples to detect reuse relations by measuring the models' differences on a newly proposed model characterization, i.e., neuron functionality (NF). A set of NF-based distance metrics is designed to make NFARD applicable to both white-box and black-box settings. Moreover, we devise a linear transformation method to handle heterogeneous reuse cases by constructing the optimal projection matrix for dimension consistency, significantly extending the application scope of NFARD. To the best of our knowledge, this is the first adversarial example-free method that exploits neuron functionality for DNN copyright protection. As a side contribution, we constructed a reuse detection benchmark named Reuse Zoo that covers various practical reuse techniques and popular datasets. Extensive evaluations on this comprehensive benchmark show that NFARD achieves F1 scores of 0.984 and 1.0 for detecting reuse relationships in black-box and white-box settings, respectively, while generating test suites 2 ~ 99 times faster than previous methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge