Zhe Hou

Vibe Coding an LLM-powered Theorem Prover

Jan 08, 2026

Abstract: We present Isabellm, an LLM-powered theorem prover for Isabelle/HOL that performs fully automatic proof synthesis. Isabellm works with any local LLM on Ollama and with APIs such as Gemini CLI, and it is designed to run on consumer-grade computers. The system combines a stepwise prover, which uses large language models to propose proof commands validated by Isabelle in a bounded search loop, with a higher-level proof planner that generates structured Isar outlines and attempts to fill and repair remaining gaps. The framework includes beam search for tactics, ML and RL models for reranking tactics, premise selection with small transformer models, micro-RAG for Isar proofs built from AFP, and counterexample-guided proof repair. All the code is implemented by GPT 4.1 - 5.2, Gemini 3 Pro, and Claude 4.5. Empirically, Isabellm can prove certain lemmas that defeat Isabelle's standard automation, including Sledgehammer, demonstrating the practical value of LLM-guided proof search. At the same time, we find that even state-of-the-art LLMs, such as GPT 5.2 Extended Thinking and Gemini 3 Pro, struggle to reliably implement the intended fill-and-repair mechanisms with complex algorithmic designs, highlighting fundamental challenges in LLM code generation and reasoning. The code of Isabellm is available at https://github.com/zhehou/llm-isabelle
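
The bounded search loop mentioned in the abstract, in which a proposer suggests steps and a checker accepts or rejects them, can be illustrated with a generic beam search. The following is a minimal sketch under stated assumptions, not Isabellm's implementation: `propose` stands in for an LLM that returns scored candidate steps, `apply_step` stands in for validation by Isabelle (returning `None` on rejection), and the toy arithmetic domain, all function names, and the default bounds are hypothetical.

```python
from typing import Callable, List, Optional, Tuple


def bounded_beam_search(
    initial,
    propose: Callable[[object], List[Tuple[str, float]]],
    apply_step: Callable[[object, str], Optional[object]],
    is_done: Callable[[object], bool],
    beam_width: int = 3,
    max_depth: int = 8,
) -> Optional[List[str]]:
    """Return a list of accepted steps reaching a finished state, or None."""
    if is_done(initial):
        return []
    # Each beam entry is (cumulative score, state, steps taken so far).
    beam = [(0.0, initial, [])]
    for _ in range(max_depth):
        candidates = []
        for score, state, steps in beam:
            for step, step_score in propose(state):
                new_state = apply_step(state, step)
                if new_state is None:
                    continue  # the checker rejected this proposal
                new_steps = steps + [step]
                if is_done(new_state):
                    return new_steps
                candidates.append((score + step_score, new_state, new_steps))
        if not candidates:
            return None  # every proposal was rejected
        # Keep only the best-scoring partial attempts.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beam = candidates[:beam_width]
    return None  # depth bound exhausted


# Toy stand-ins (hypothetical): states are integers, the goal is to reach 10.
def toy_propose(state):
    # A real proposer would be an LLM; "bogus" mimics a confident but invalid step.
    return [("bogus", 0.75), ("double", 0.5), ("inc", 0.25)]


def toy_apply(state, step):
    if step == "double":
        new_state = state * 2
    elif step == "inc":
        new_state = state + 1
    else:
        return None  # unknown step: rejected
    return new_state if new_state <= 10 else None  # overshooting is rejected


if __name__ == "__main__":
    print(bounded_beam_search(1, toy_propose, toy_apply, lambda s: s == 10))
```

The checker has the final say in this sketch: a proposal with a high score is still discarded if `apply_step` rejects it, which mirrors the division of labour the abstract describes between the LLM and Isabelle.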

Few-Shot Precise Event Spotting via Unified Multi-Entity Graph and Distillation

Nov 18, 2025

Abstract: Precise event spotting (PES) aims to recognize fine-grained events at exact moments and has become a key component of sports analytics. This task is particularly challenging due to rapid succession, motion blur, and subtle visual differences. Consequently, most existing methods rely on domain-specific, end-to-end training with large labeled datasets and often struggle in few-shot conditions due to their dependence on pixel- or pose-based inputs alone. However, obtaining large labeled datasets is difficult in practice. We propose a Unified Multi-Entity Graph Network (UMEG-Net) for few-shot PES. UMEG-Net integrates human skeletons and sport-specific object keypoints into a unified graph and features an efficient spatio-temporal extraction module based on advanced GCN and multi-scale temporal shift. To further enhance performance, we employ multimodal distillation to transfer knowledge from keypoint-based graphs to visual representations. Our approach achieves robust performance with limited labeled data and significantly outperforms baseline models in few-shot settings, providing a scalable and effective solution for few-shot PES. Code is publicly available at https://github.com/LZYAndy/UMEG-Net.

Adaptive Plan-Execute Framework for Smart Contract Security Auditing

May 21, 2025

Abstract: Large Language Models (LLMs) have shown great promise in code analysis and auditing; however, they still struggle with hallucinations and limited context-aware reasoning. We introduce SmartAuditFlow, a novel Plan-Execute framework that enhances smart contract security analysis through dynamic audit planning and structured execution. Unlike conventional LLM-based auditing approaches that follow fixed workflows and predefined steps, SmartAuditFlow dynamically generates and refines audit plans based on the unique characteristics of each smart contract. It continuously adjusts its auditing strategy in response to intermediate LLM outputs and newly detected vulnerabilities, ensuring a more adaptive and precise security assessment. The framework then executes these plans step by step, applying a structured reasoning process to enhance vulnerability detection accuracy while minimizing hallucinations and false positives. To further improve audit precision, SmartAuditFlow integrates iterative prompt optimization and external knowledge sources, such as static analysis tools and Retrieval-Augmented Generation (RAG). This ensures audit decisions are contextually informed and backed by real-world security knowledge, producing comprehensive security reports. Extensive evaluations across multiple benchmarks demonstrate that SmartAuditFlow outperforms existing methods, achieving 100 percent accuracy on common and critical vulnerabilities, 41.2 percent accuracy for comprehensive coverage of known smart contract weaknesses in real-world projects, and successfully identifying all 13 tested CVEs. These results highlight SmartAuditFlow's scalability, cost-effectiveness, and superior adaptability over traditional static analysis tools and contemporary LLM-based approaches, establishing it as a robust solution for automated smart contract auditing.

Clustering and analysis of user behaviour in blockchain: A case study of Planet IX

Apr 16, 2025

Abstract: Decentralised applications (dApps) that run on public blockchains have the benefit of trustworthiness and transparency, as every activity that happens on the blockchain can be publicly traced through the transaction data. However, this introduces a potential privacy problem, as this data can be tracked and analysed, which can reveal user-behaviour information. A user behaviour analysis pipeline is proposed to show how this type of information can be extracted and analysed to identify separate behavioural clusters that describe how users behave in the game. The pipeline starts with the collection of transaction data involving smart contracts from a blockchain-based game called Planet IX. Both the raw transaction information and the transaction events are considered in the data collection. From this data, separate game actions can be formed, and those are leveraged to present how and when the users conducted their in-game activities in the form of user flows. An extended version of these user flows also presents how Non-Fungible Tokens (NFTs) are being leveraged in the user actions. The latter is given as input to a Graph Neural Network (GNN) model to provide graph embeddings for these flows, which can then be leveraged by clustering algorithms to cluster user behaviours into separate behavioural clusters. We benchmark and compare well-known clustering algorithms as part of the proposed method. The user behaviour clusters were analysed and visualised in a graph format. It was found that behavioural information can be extracted regarding the users that belong to these clusters. Such information can be exploited by malicious users to their advantage. To demonstrate this, a privacy threat model was also presented based on the results that correspond to multiple potentially affected areas.

F$^3$Set: Towards Analyzing Fast, Frequent, and Fine-grained Events from Videos

Apr 15, 2025

Abstract: Analyzing Fast, Frequent, and Fine-grained (F$^3$) events presents a significant challenge in video analytics and multi-modal LLMs. Current methods struggle to identify events that satisfy all the F$^3$ criteria with high accuracy due to challenges such as motion blur and subtle visual discrepancies. To advance research in video understanding, we introduce F$^3$Set, a benchmark that consists of video datasets for precise F$^3$ event detection. Datasets in F$^3$Set are characterized by their extensive scale and comprehensive detail, usually encompassing over 1,000 event types with precise timestamps and supporting multi-level granularity. Currently, F$^3$Set contains several sports datasets, and this framework may be extended to other applications as well. We evaluated popular temporal action understanding methods on F$^3$Set, revealing substantial challenges for existing techniques. Additionally, we propose a new method, F$^3$ED, for F$^3$ event detection, achieving superior performance. The dataset, model, and benchmark code are available at https://github.com/F3Set/F3Set.

The Fusion of Large Language Models and Formal Methods for Trustworthy AI Agents: A Roadmap

Dec 09, 2024

Abstract: Large Language Models (LLMs) have emerged as a transformative AI paradigm, profoundly influencing daily life through their exceptional language understanding and contextual generation capabilities. Despite their remarkable performance, LLMs face a critical challenge: the propensity to produce unreliable outputs due to the inherent limitations of their learning-based nature. Formal methods (FMs), on the other hand, are a well-established computation paradigm that provides mathematically rigorous techniques for modeling, specifying, and verifying the correctness of systems. FMs have been extensively applied in mission-critical software engineering, embedded systems, and cybersecurity. However, the primary challenge impeding the deployment of FMs in real-world settings lies in their steep learning curves, the absence of user-friendly interfaces, and issues with efficiency and adaptability. This position paper outlines a roadmap for advancing the next generation of trustworthy AI systems by leveraging the mutual enhancement of LLMs and FMs. First, we illustrate how FMs, including reasoning and certification techniques, can help LLMs generate more reliable and formally certified outputs. Subsequently, we highlight how the advanced learning capabilities and adaptability of LLMs can significantly enhance the usability, efficiency, and scalability of existing FM tools. Finally, we show that unifying these two computation paradigms -- integrating the flexibility and intelligence of LLMs with the rigorous reasoning abilities of FMs -- has transformative potential for the development of trustworthy AI software systems. We acknowledge that this integration has the potential to enhance both the trustworthiness and efficiency of software engineering practices while fostering the development of intelligent FM tools capable of addressing complex yet real-world challenges.

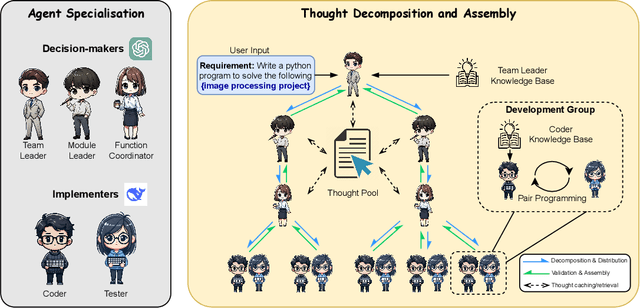

VisionCoder: Empowering Multi-Agent Auto-Programming for Image Processing with Hybrid LLMs

Oct 25, 2024

Abstract: In the field of automated programming, large language models (LLMs) have demonstrated foundational generative capabilities when given detailed task descriptions. However, their current functionalities are primarily limited to function-level development, restricting their effectiveness in complex project environments and specific application scenarios, such as complicated image-processing tasks. This paper presents a multi-agent framework that utilises a hybrid set of LLMs, including GPT-4o and locally deployed open-source models, which collaboratively complete auto-programming tasks. Each agent plays a distinct role in the software development cycle, collectively forming a virtual organisation that works together to produce software products. By establishing a tree-structured thought distribution and development mechanism across project, module, and function levels, this framework offers a cost-effective and efficient solution for code generation. We evaluated our approach using benchmark datasets, and the experimental results demonstrate that VisionCoder significantly outperforms existing methods in image processing auto-programming tasks.

Towards Large Language Model Aided Program Refinement

Jun 26, 2024

Abstract: Program refinement involves correctness-preserving transformations from formal high-level specification statements into executable programs. Traditional verification tool support for program refinement is highly interactive and lacks automation. On the other hand, the emergence of large language models (LLMs) enables automatic code generation from informal natural language specifications. However, code generated by LLMs is often unreliable. Moreover, the opaque procedure from specification to code provided by an LLM is an uncontrolled black box. We propose LLM4PR, a tool that combines formal program refinement techniques with informal LLM-based methods to (1) transform the specification to preconditions and postconditions, (2) automatically build prompts based on refinement calculus, (3) interact with the LLM to generate code, and finally, (4) verify that the generated code satisfies the conditions of refinement calculus, thus guaranteeing the correctness of the code. We have implemented our tool using GPT4, Coq, and Coqhammer, and evaluated it on the HumanEval and EvalPlus datasets.

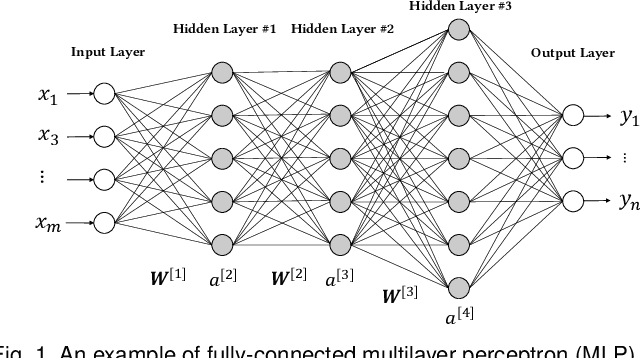

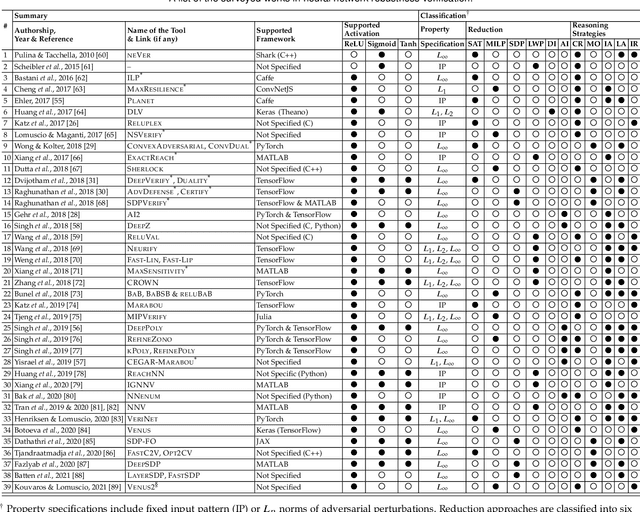

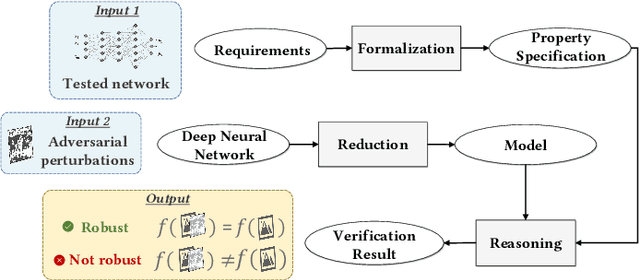

Adversarial Robustness of Deep Neural Networks: A Survey from a Formal Verification Perspective

Jun 24, 2022

Abstract: Neural networks have been widely applied in security applications such as spam and phishing detection, intrusion prevention, and malware detection. This black-box method, however, often has uncertainty and poor explainability in applications. Furthermore, neural networks themselves are often vulnerable to adversarial attacks. For those reasons, there is a high demand for trustworthy and rigorous methods to verify the robustness of neural network models. Adversarial robustness, which concerns the reliability of a neural network when dealing with maliciously manipulated inputs, is one of the hottest topics in security and machine learning. In this work, we survey existing literature in adversarial robustness verification for neural networks and collect 39 diversified research works across machine learning, security, and software engineering domains. We systematically analyze their approaches, including how robustness is formulated, what verification techniques are used, and the strengths and limitations of each technique. We provide a taxonomy from a formal verification perspective for a comprehensive understanding of this topic. We classify the existing techniques based on property specification, problem reduction, and reasoning strategies. We also demonstrate representative techniques that have been applied in existing studies with a sample model. Finally, we discuss open questions for future research.

MUC-driven Feature Importance Measurement and Adversarial Analysis for Random Forest

Feb 25, 2022

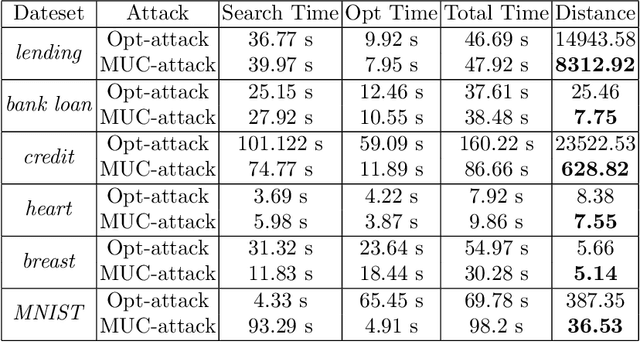

Abstract: The broad adoption of Machine Learning (ML) in security-critical fields demands the explainability of the approach. However, the research on understanding ML models, such as Random Forest (RF), is still in its infancy. In this work, we leverage formal methods and logical reasoning to develop a novel model-specific method for explaining the prediction of RF. Our approach is centered around Minimal Unsatisfiable Cores (MUC) and provides a comprehensive solution for feature importance, covering local and global aspects, and adversarial sample analysis. Experimental results on several datasets illustrate the high quality of our feature importance measurement. We also demonstrate that our adversarial analysis outperforms the state-of-the-art method. Moreover, our method can produce a user-centered report, which helps provide recommendations in real-life applications.
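
For readers unfamiliar with Minimal Unsatisfiable Cores, the notion can be illustrated on a small propositional example. The sketch below uses the standard deletion-based extraction over CNF clauses with a brute-force satisfiability check; it is a generic illustration and not the paper's Random Forest encoding or solver. The clause representation (DIMACS-style signed integers) and the function names are assumptions made for this example.

```python
from itertools import product
from typing import List

Clause = List[int]  # DIMACS-style literals: 3 means x3, -3 means "not x3"


def satisfiable(clauses: List[Clause]) -> bool:
    """Brute-force SAT check; only suitable for a handful of variables."""
    variables = sorted({abs(lit) for clause in clauses for lit in clause})
    for values in product([False, True], repeat=len(variables)):
        assignment = dict(zip(variables, values))
        if all(
            any(assignment[abs(lit)] == (lit > 0) for lit in clause)
            for clause in clauses
        ):
            return True
    return False


def minimal_unsat_core(clauses: List[Clause]) -> List[Clause]:
    """Deletion-based extraction: drop every clause whose removal keeps UNSAT."""
    if satisfiable(clauses):
        raise ValueError("the clause set is satisfiable, so it has no unsat core")
    core = list(clauses)
    i = 0
    while i < len(core):
        trial = core[:i] + core[i + 1:]
        if not satisfiable(trial):
            core = trial  # clause i is not needed for the conflict
        else:
            i += 1  # clause i is necessary; keep it and move on
    return core


if __name__ == "__main__":
    # x1, (x1 -> x2), not x2 conflict with each other; the x3/x4 clauses do not.
    example = [[1], [-1, 2], [-2], [3], [3, 4]]
    print(minimal_unsat_core(example))
```

The returned core is minimal in the sense that removing any one of its clauses makes the remaining set satisfiable; it is not necessarily the smallest core, and a different clause order can yield a different one.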
