Hadrien Bride

Silas: High Performance, Explainable and Verifiable Machine Learning

Oct 03, 2019

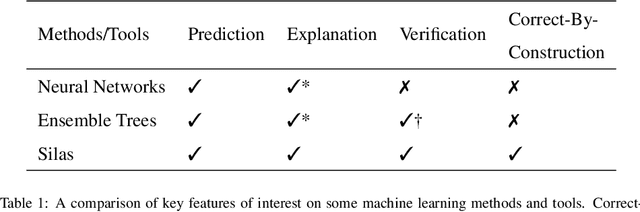

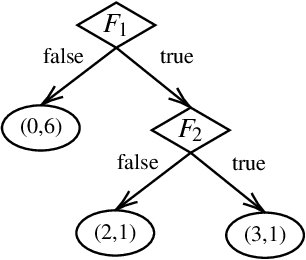

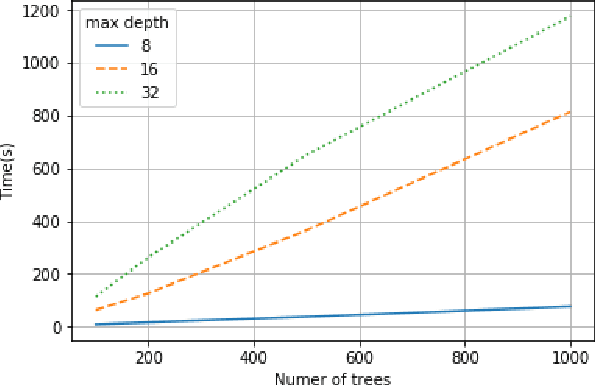

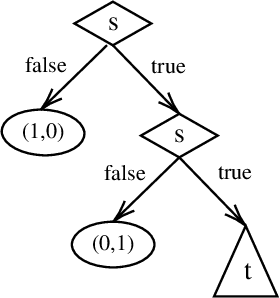

Abstract: This paper introduces a new classification tool named Silas, which is built to provide a more transparent and dependable data analytics service. Silas focuses on providing a formal foundation for decision trees in order to support logical analysis and verification of learned prediction models. This paper describes the distinct features of Silas: the Model Audit module formally verifies the prediction model against user specifications; the Enforcement Learning module trains prediction models that are guaranteed to be correct; and the Model Insight and Prediction Insight modules reason about the prediction model and explain the decision-making behind predictions. We also discuss implementation details, ranging from programming paradigm to memory management, that help achieve high-performance computation.
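
The abstract does not say how Model Audit works internally. As a minimal sketch, assuming a tree with axis-aligned threshold splits and a specification of the form "every input inside a given box is assigned class c", each root-to-leaf path can be read as a box, and any leaf with a different label whose box overlaps the specification box is a counterexample region. This is not Silas's algorithm or API; `Leaf`, `Node`, `leaf_regions`, `audit`, and the toy tree are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Iterator, List, Tuple, Union

Bounds = List[Tuple[float, float]]  # per feature: (lo, hi) meaning lo < x <= hi


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: int
    threshold: float
    left: "Union[Leaf, Node]"   # branch taken when x[feature] <= threshold
    right: "Union[Leaf, Node]"  # branch taken when x[feature] > threshold


def leaf_regions(tree, n_features) -> Iterator[Tuple[str, Bounds]]:
    """Yield (label, bounds) for every root-to-leaf path of the tree."""
    def walk(t, bounds):
        if isinstance(t, Leaf):
            yield t.label, bounds
            return
        lo, hi = bounds[t.feature]
        left = list(bounds)
        left[t.feature] = (lo, min(hi, t.threshold))
        right = list(bounds)
        right[t.feature] = (max(lo, t.threshold), hi)
        yield from walk(t.left, left)
        yield from walk(t.right, right)

    yield from walk(tree, [(-math.inf, math.inf)] * n_features)


def audit(tree, n_features, pre: Bounds, expected: str) -> List[Tuple[str, Bounds]]:
    """Check 'every x with pre[f][0] <= x[f] <= pre[f][1] is classified as expected'.
    Returns the violating leaf regions (an empty list means the property holds)."""
    violations = []
    for label, bounds in leaf_regions(tree, n_features):
        if label == expected:
            continue
        # (lo, hi] intersects [a, b] iff m = min(hi, b) satisfies m > lo and m >= a;
        # this also discards infeasible paths where lo >= hi.
        overlaps = all(min(hi, b) > lo and min(hi, b) >= a
                       for (lo, hi), (a, b) in zip(bounds, pre))
        if overlaps:
            violations.append((label, bounds))
    return violations


# Hypothetical toy tree: x0 <= 5 and x1 <= 2 -> "deny", otherwise "approve".
tree = Node(0, 5.0, Node(1, 2.0, Leaf("deny"), Leaf("approve")), Leaf("approve"))
print(audit(tree, 2, [(6, 10), (0, 10)], "approve"))  # []: property holds
print(audit(tree, 2, [(0, 10), (0, 10)], "approve"))  # one "deny" region
```

Because every path of such a tree is a conjunction of interval constraints, this check is exhaustive over the input space rather than a sample-based test; richer specifications would need a solver instead of box intersection.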

GRAVITAS: A Model Checking Based Planning and Goal Reasoning Framework for Autonomous Systems

Oct 03, 2019

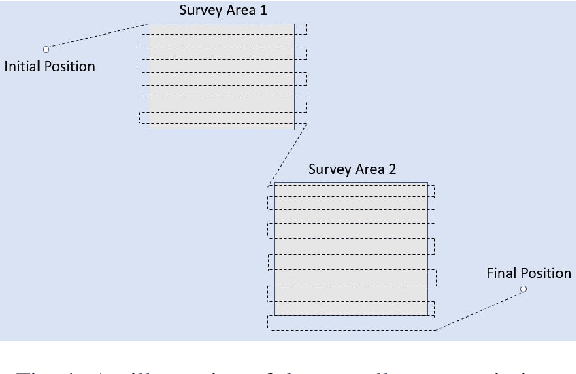

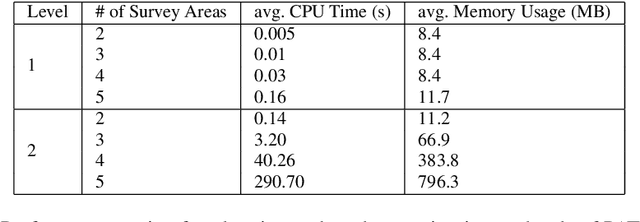

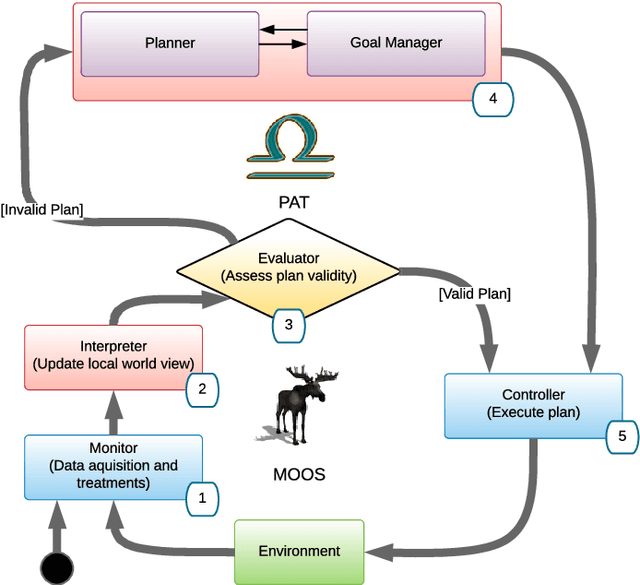

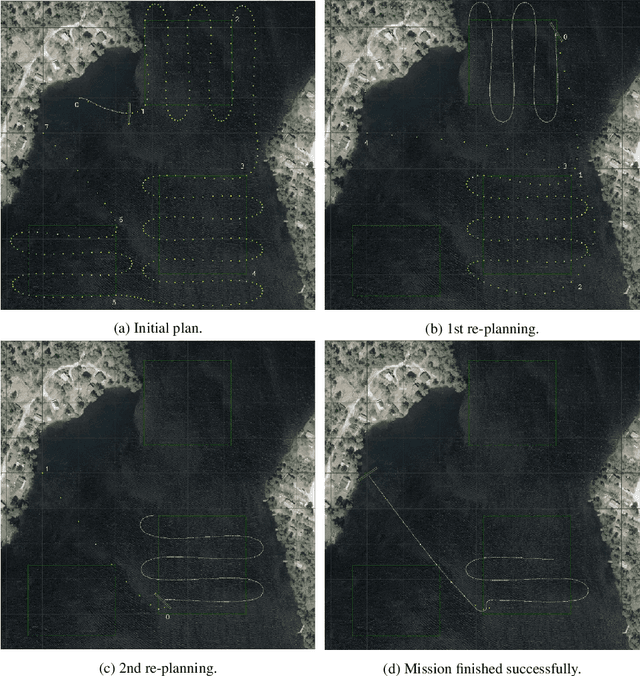

Abstract: While AI techniques have found many successful applications in autonomous systems, many of them permit behaviours that are difficult to interpret and may lead to uncertain results. We follow the "verification as planning" paradigm and propose to use model checking techniques to solve planning and goal reasoning problems for autonomous systems. We give a new formulation of Goal Task Networks (GTNs) that is tailored to our model checking based framework. We then provide a systematic method for modelling GTNs in the model checker Process Analysis Toolkit (PAT). We present our planning and goal reasoning system as a framework called Goal Reasoning And Verification for Independent Trusted Autonomous Systems (GRAVITAS) and discuss how it helps provide trustworthy plans in an uncertain environment. Finally, we demonstrate the proposed ideas in an experiment that simulates a survey mission performed by the REMUS-100 autonomous underwater vehicle.
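
Under the "verification as planning" paradigm, a planning problem is posed as a reachability question: if the model checker finds that a goal state is reachable, the witness trace it reports is a plan. The following minimal sketch illustrates only that idea with a plain breadth-first search in Python; it is not PAT and not the paper's GTN encoding, and `plan_by_reachability`, `survey_successors`, and the waypoint/battery domain are hypothetical.

```python
from collections import deque
from typing import Callable, Hashable, Iterable, List, Optional, Tuple


def plan_by_reachability(
    init: Hashable,
    successors: Callable[[Hashable], Iterable[Tuple[str, Hashable]]],
    is_goal: Callable[[Hashable], bool],
) -> Optional[List[str]]:
    """Breadth-first explicit-state reachability check. If a goal state is
    reachable, return the actions of a shortest witness trace (the plan);
    otherwise return None."""
    parent = {init: None}  # state -> (predecessor, action) on the BFS tree
    queue = deque([init])
    while queue:
        state = queue.popleft()
        if is_goal(state):
            plan = []
            while parent[state] is not None:
                state, action = parent[state]  # step back to the predecessor
                plan.append(action)
            return plan[::-1]
        for action, nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = (state, action)
                queue.append(nxt)
    return None


# Hypothetical toy survey domain: waypoints 0..3 on a line, battery 0..3,
# each move costs one unit, and recharging is only possible at waypoint 0.
def survey_successors(state):
    pos, battery = state
    if battery > 0 and pos < 3:
        yield "forward", (pos + 1, battery - 1)
    if battery > 0 and pos > 0:
        yield "back", (pos - 1, battery - 1)
    if pos == 0:
        yield "recharge", (0, 3)


print(plan_by_reachability((0, 1), survey_successors, lambda s: s[0] == 3))
# ['recharge', 'forward', 'forward', 'forward']
```

Breadth-first order yields a plan with the fewest actions; a model checker such as PAT additionally provides a modelling language and richer temporal properties, which this sketch does not attempt.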
