Ryan Green

GRAVITAS: A Model Checking Based Planning and Goal Reasoning Framework for Autonomous Systems

Oct 03, 2019

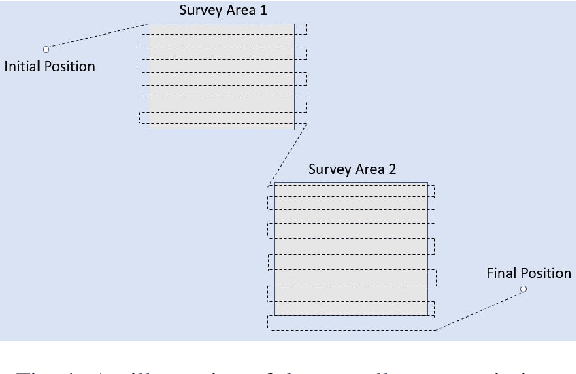

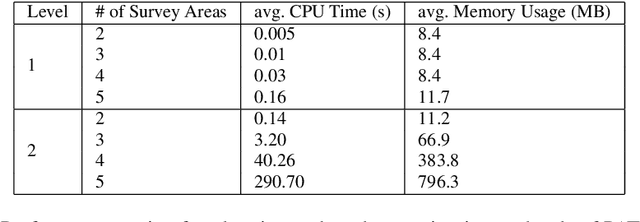

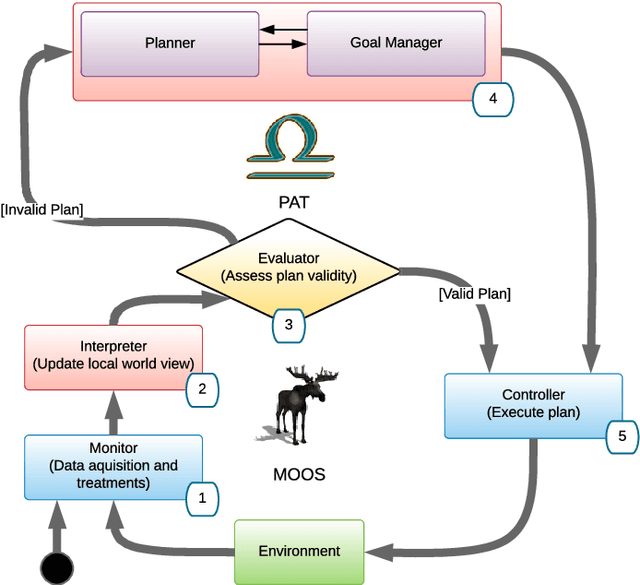

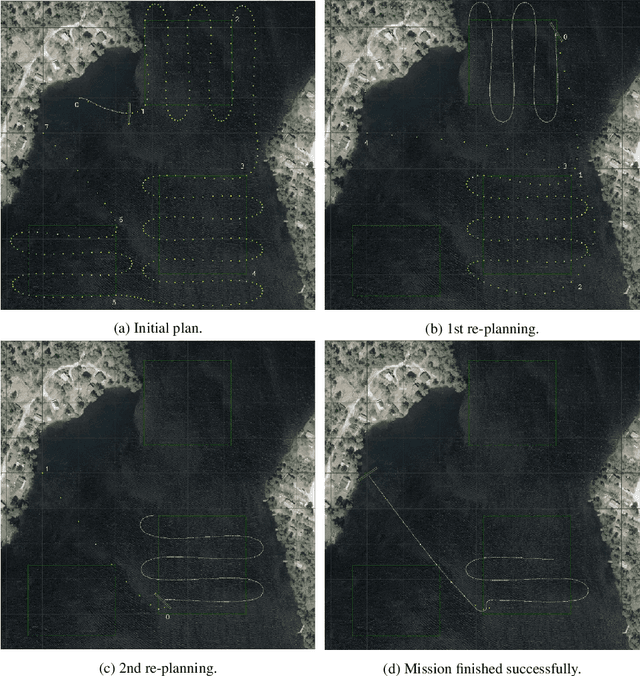

Abstract: While AI techniques have found many successful applications in autonomous systems, many of them permit behaviours that are difficult to interpret and may lead to uncertain results. We follow the "verification as planning" paradigm and propose to use model checking techniques to solve planning and goal reasoning problems for autonomous systems. We give a new formulation of Goal Task Network (GTN) that is tailored for our model checking based framework. We then provide a systematic method that models GTNs in the model checker Process Analysis Toolkit (PAT). We present our planning and goal reasoning system as a framework called Goal Reasoning And Verification for Independent Trusted Autonomous Systems (GRAVITAS) and discuss how it helps provide trustworthy plans in an uncertain environment. Finally, we demonstrate the proposed ideas in an experiment that simulates a survey mission performed by the REMUS-100 autonomous underwater vehicle.
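The core of the "verification as planning" idea can be illustrated in miniature: ask whether a goal state is reachable in a model of the system, and read the witness trace returned by the search as a plan. The following is a minimal sketch under stated assumptions, not PAT, its modelling language, or the GRAVITAS implementation: it is a toy explicit-state breadth-first reachability search in Python, and the survey domain (waypoints, battery `CAPACITY`, `SURVEY_SITE`, and the action names `forward`, `back`, `survey`, `recharge`) is entirely hypothetical.

```python
from collections import deque
from typing import Callable, Dict, Hashable, List, Optional, Tuple

State = Hashable
Action = Callable[[State], Optional[State]]  # returns None when the action is disabled


def find_plan(initial: State, actions: Dict[str, Action],
              goal: Callable[[State], bool]) -> Optional[List[str]]:
    """Explicit-state reachability check by breadth-first search.

    Returns the action names along a shortest witness path from `initial`
    to a state satisfying `goal`, or None if no such state is reachable.
    """
    parent: Dict[State, Tuple[Optional[State], Optional[str]]] = {initial: (None, None)}
    frontier = deque([initial])
    while frontier:
        state = frontier.popleft()
        if goal(state):
            # Walk parent pointers back to the initial state to recover the trace.
            trace: List[str] = []
            while parent[state][0] is not None:
                prev, name = parent[state]
                trace.append(name)
                state = prev
            return trace[::-1]
        for name, step in actions.items():
            nxt = step(state)
            if nxt is not None and nxt not in parent:
                parent[nxt] = (state, name)
                frontier.append(nxt)
    return None


# Hypothetical toy survey domain: state = (waypoint, battery, surveyed).
CAPACITY = 4        # assumed battery capacity, measured in moves
SURVEY_SITE = 2     # assumed waypoint that must be surveyed
LAST_WAYPOINT = 3   # waypoints are numbered 0..LAST_WAYPOINT; 0 is the dock


def forward(s):
    pos, battery, surveyed = s
    if pos < LAST_WAYPOINT and battery > 0:
        return (pos + 1, battery - 1, surveyed)
    return None


def back(s):
    pos, battery, surveyed = s
    if pos > 0 and battery > 0:
        return (pos - 1, battery - 1, surveyed)
    return None


def survey(s):
    pos, battery, surveyed = s
    if pos == SURVEY_SITE and not surveyed:
        return (pos, battery, True)
    return None


def recharge(s):
    pos, battery, surveyed = s
    if pos == 0 and battery < CAPACITY:
        return (0, CAPACITY, surveyed)
    return None


ACTIONS = {"forward": forward, "back": back, "survey": survey, "recharge": recharge}

if __name__ == "__main__":
    # Goal: the site has been surveyed and the vehicle is back at the dock.
    plan = find_plan((0, 2, False), ACTIONS, lambda s: s[2] and s[0] == 0)
    print(plan)  # ['recharge', 'forward', 'forward', 'survey', 'back', 'back']
```

Starting with two units of battery, the vehicle cannot reach the site and return, so the shortest witness begins with a recharge. In a full model checker the same question is typically posed as a reachability or safety assertion, and the returned witness or counterexample trace plays the role of the plan; breadth-first search is used here only because it keeps the sketch short and yields a shortest trace.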

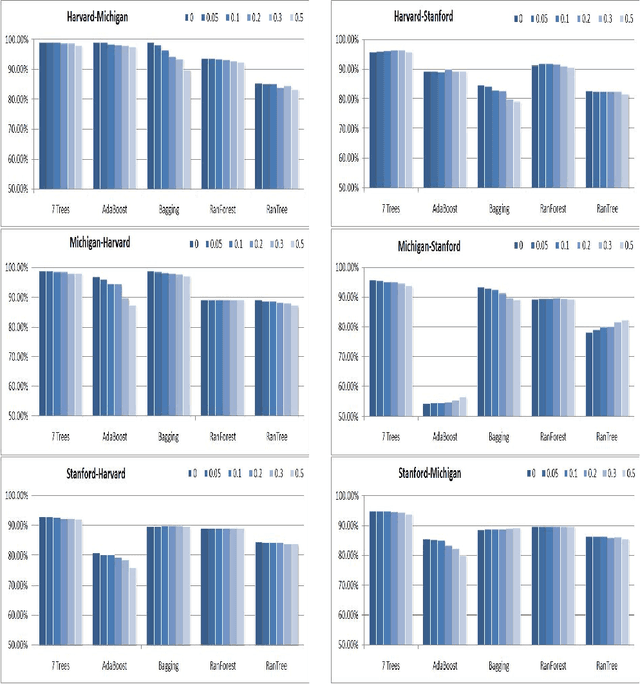

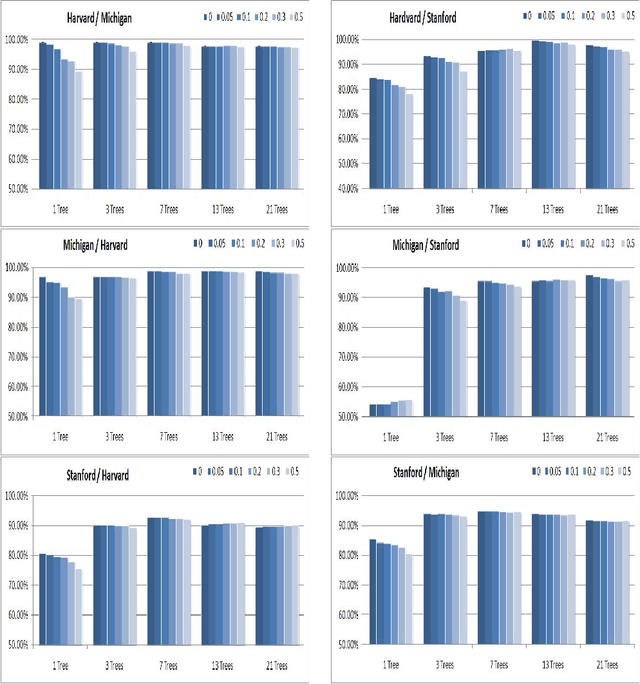

Building Diversified Multiple Trees for Classification in High Dimensional Noisy Biomedical Data

Jun 26, 2017

Abstract: A trained classification model is commonly applied to operating data that deviates from the training data because of noise. This paper demonstrates that an ensemble classifier, Diversified Multiple Tree (DMT), is more robust in classifying noisy data than other widely used ensemble methods. DMT is tested on three real-world biomedical data sets from different laboratories in comparison with four benchmark ensemble classifiers. Experimental results show that DMT is significantly more accurate than the other benchmark ensemble classifiers on noisy test data. We also discuss a limitation of DMT and its possible variations.