Jiajun Song

A Unified Representation Underlying the Judgment of Large Language Models

Oct 31, 2025

Abstract: A central architectural question for both biological and artificial intelligence is whether judgment relies on specialized modules or a unified, domain-general resource. While the discovery of decodable neural representations for distinct concepts in Large Language Models (LLMs) has suggested a modular architecture, whether these representations are truly independent systems remains an open question. Here we provide evidence for a convergent architecture. Across a range of LLMs, we find that diverse evaluative judgments are computed along a dominant dimension, which we term the Valence-Assent Axis (VAA). This axis jointly encodes subjective valence ("what is good") and the model's assent to factual claims ("what is true"). Through direct interventions, we show this unified representation creates a critical dependency: the VAA functions as a control signal that steers the generative process to construct a rationale consistent with its evaluative state, even at the cost of factual accuracy. This mechanism, which we term the subordination of reasoning, shifts the process of reasoning from impartial inference toward goal-directed justification. Our discovery offers a mechanistic account for systemic bias and hallucination, revealing how an architecture that promotes coherent judgment can systematically undermine faithful reasoning.

VARMA-Enhanced Transformer for Time Series Forecasting

Sep 05, 2025

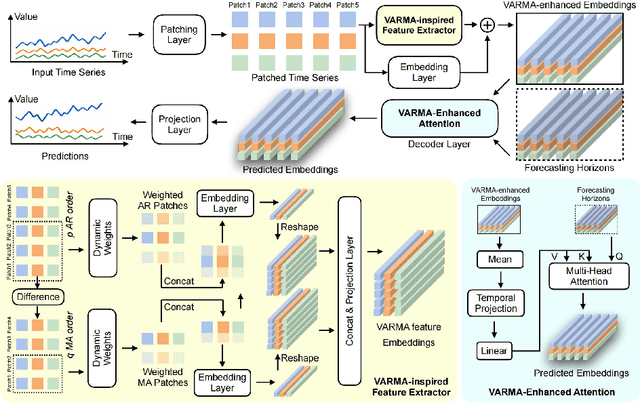

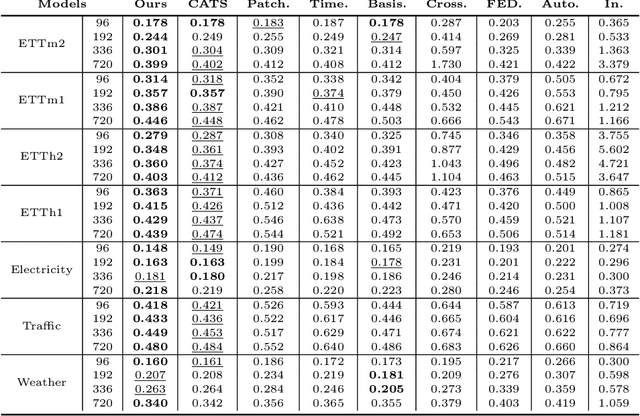

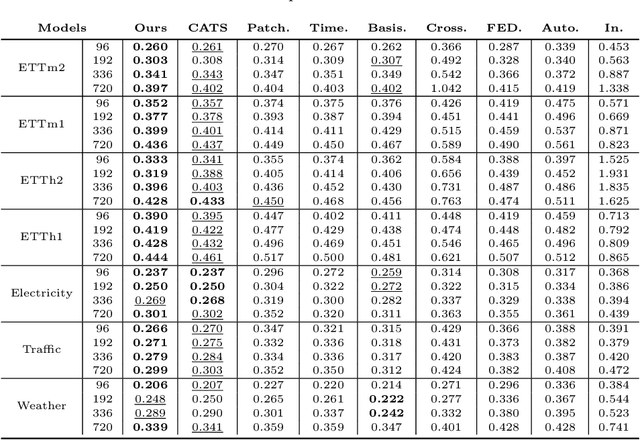

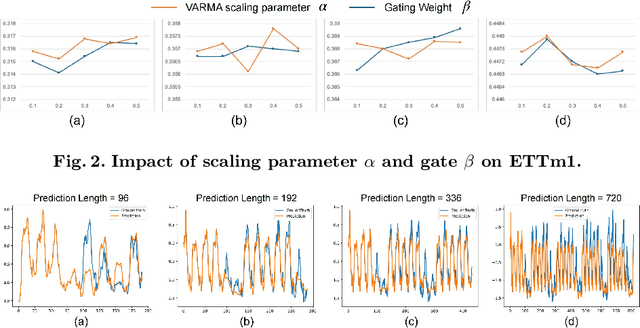

Abstract: Transformer-based models have significantly advanced time series forecasting. Recent work, like the Cross-Attention-only Time Series transformer (CATS), shows that removing self-attention can make the model more accurate and efficient. However, these streamlined architectures may overlook the fine-grained, local temporal dependencies effectively captured by classical statistical models such as the Vector AutoRegressive Moving Average (VARMA) model. To address this gap, we propose VARMAformer, a novel architecture that synergizes the efficiency of a cross-attention-only framework with the principles of classical time series analysis. Our model introduces two key innovations: (1) a dedicated VARMA-inspired Feature Extractor (VFE) that explicitly models autoregressive (AR) and moving-average (MA) patterns at the patch level, and (2) a VARMA-Enhanced Attention (VE-atten) mechanism that employs a temporal gate to make queries more context-aware. By fusing these classical insights into a modern backbone, VARMAformer captures both global, long-range dependencies and local, statistical structures. Through extensive experiments on widely used benchmark datasets, we demonstrate that our model consistently outperforms existing state-of-the-art methods. Our work validates the significant benefit of integrating classical statistical insights into modern deep learning frameworks for time series forecasting.

SalientFusion: Context-Aware Compositional Zero-Shot Food Recognition

Sep 04, 2025

Abstract: Food recognition has gained significant attention, but the rapid emergence of new dishes requires methods for recognizing unseen food categories, motivating Zero-Shot Food Learning (ZSFL). We propose the task of Compositional Zero-Shot Food Recognition (CZSFR), where cuisines and ingredients naturally align with attributes and objects in Compositional Zero-Shot Learning (CZSL). However, CZSFR faces three challenges: (1) redundant background information distracts models from learning meaningful food features, (2) role confusion between staple and side dishes leads to misclassification, and (3) semantic bias in a single attribute can lead to confusion of understanding. Therefore, we propose SalientFusion, a context-aware CZSFR method with two components: SalientFormer, which removes background redundancy and uses depth features to resolve role confusion, and DebiasAT, which reduces the semantic bias by aligning prompts with visual features. Using our proposed benchmarks, CZSFood-90 and CZSFood-164, we show that SalientFusion achieves state-of-the-art results on these benchmarks and on the most popular datasets for general CZSL. The code is available at https://github.com/Jiajun-RUC/SalientFusion.

Mind the Gap: The Divergence Between Human and LLM-Generated Tasks

Aug 01, 2025

Abstract: Humans constantly generate a diverse range of tasks guided by internal motivations. While generative agents powered by large language models (LLMs) aim to simulate this complex behavior, it remains uncertain whether they operate on similar cognitive principles. To address this, we conducted a task-generation experiment comparing human responses with those of an LLM agent (GPT-4o). We find that human task generation is consistently influenced by psychological drivers, including personal values (e.g., Openness to Change) and cognitive style. Even when these psychological drivers are explicitly provided to the LLM, it fails to reflect the corresponding behavioral patterns. It produces tasks that are markedly less social, less physical, and thematically biased toward abstraction. Interestingly, although the LLM's tasks were perceived as more fun and novel, this highlights a disconnect between its linguistic proficiency and its capacity to generate human-like, embodied goals. We conclude that there is a core gap between the value-driven, embodied nature of human cognition and the statistical patterns of LLMs, highlighting the necessity of incorporating intrinsic motivation and physical grounding into the design of more human-aligned agents.

ToM-RL: Reinforcement Learning Unlocks Theory of Mind in Small LLMs

Apr 02, 2025

Abstract: Recent advancements in rule-based reinforcement learning (RL), applied during the post-training phase of large language models (LLMs), have significantly enhanced their capabilities in structured reasoning tasks such as mathematics and logical inference. However, the effectiveness of RL in social reasoning, particularly in Theory of Mind (ToM), the ability to infer others' mental states, remains largely unexplored. In this study, we demonstrate that RL methods effectively unlock ToM reasoning capabilities even in small-scale LLMs (0.5B to 7B parameters). Using a modest dataset comprising 3200 questions across diverse scenarios, our RL-trained 7B model achieves 84.50% accuracy on the Hi-ToM benchmark, surpassing models like GPT-4o and DeepSeek-v3 despite significantly fewer parameters. While smaller models (≤3B parameters) suffer from reasoning collapse, larger models (7B parameters) maintain stable performance through consistent belief tracking. Additionally, our RL-based models demonstrate robust generalization to higher-order, out-of-distribution ToM problems, novel textual presentations, and previously unseen datasets. These findings highlight RL's potential to enhance social cognitive reasoning, bridging the gap between structured problem-solving and nuanced social inference in LLMs.

OCRBench v2: An Improved Benchmark for Evaluating Large Multimodal Models on Visual Text Localization and Reasoning

Dec 31, 2024

Abstract: Scoring the Optical Character Recognition (OCR) capabilities of Large Multimodal Models (LMMs) has attracted growing interest recently. Existing benchmarks have highlighted the impressive performance of LMMs in text recognition; however, their abilities on certain challenging tasks, such as text localization, handwritten content extraction, and logical reasoning, remain underexplored. To bridge this gap, we introduce OCRBench v2, a large-scale bilingual text-centric benchmark with currently the most comprehensive set of tasks (4x more tasks than the previous multi-scene benchmark OCRBench), the widest coverage of scenarios (31 diverse scenarios including street scene, receipt, formula, diagram, and so on), and thorough evaluation metrics, with a total of 10,000 human-verified question-answering pairs and a high proportion of difficult samples. After carefully benchmarking state-of-the-art LMMs on OCRBench v2, we find that 20 out of 22 LMMs score below 50 out of 100 and suffer from five types of limitations: recognition of less frequently encountered text, fine-grained perception, layout perception, complex element parsing, and logical reasoning. The benchmark and evaluation scripts are available at https://github.com/Yuliang-liu/MultimodalOCR.

Proposing and solving olympiad geometry with guided tree search

Dec 14, 2024

Abstract: Mathematics olympiads are prestigious competitions, with problem proposing and solving highly honored. Building artificial intelligence that proposes and solves olympiad problems presents an unresolved challenge in automated theorem discovery and proving, especially in geometry for its combination of numerical and spatial elements. We introduce TongGeometry, a Euclidean geometry system supporting tree-search-based guided problem proposing and solving. The efficient geometry system establishes the most extensive repository of geometry theorems to date: within the same computational budget as the existing state-of-the-art, TongGeometry discovers 6.7 billion geometry theorems requiring auxiliary constructions, including 4.1 billion exhibiting geometric symmetry. Among them, 10 theorems were proposed to regional mathematical olympiads with 3 of TongGeometry's proposals selected in real competitions, earning spots in a national team qualifying exam or a top civil olympiad in China and the US. Guided by fine-tuned large language models, TongGeometry solved all International Mathematical Olympiad geometry problems in IMO-AG-30, outperforming gold medalists for the first time. It also surpasses the existing state-of-the-art across a broader spectrum of olympiad-level problems. The full capabilities of the system can be utilized on a consumer-grade machine, making the model more accessible and fostering widespread democratization of its use. By analogy, unlike existing systems that merely solve problems like students, TongGeometry acts like a geometry coach, discovering, presenting, and proving theorems.

GATE OpenING: A Comprehensive Benchmark for Judging Open-ended Interleaved Image-Text Generation

Dec 01, 2024

Abstract: Multimodal Large Language Models (MLLMs) have made significant strides in visual understanding and generation tasks. However, generating interleaved image-text content remains a challenge, which requires integrated multimodal understanding and generation abilities. While the progress in unified models offers new solutions, existing benchmarks are insufficient for evaluating these methods due to data size and diversity limitations. To bridge this gap, we introduce GATE OpenING (OpenING), a comprehensive benchmark comprising 5,400 high-quality human-annotated instances across 56 real-world tasks. OpenING covers diverse daily scenarios such as travel guide, design, and brainstorming, offering a robust platform for challenging interleaved generation methods. In addition, we present IntJudge, a judge model for evaluating open-ended multimodal generation methods. Trained with a novel data pipeline, our IntJudge achieves an agreement rate of 82.42% with human judgments, outperforming GPT-based evaluators by 11.34%. Extensive experiments on OpenING reveal that current interleaved generation methods still have substantial room for improvement. Key findings on interleaved image-text generation are further presented to guide the development of next-generation models. OpenING is open-sourced at https://opening-benchmark.github.io.

A Joint Approach to Local Updating and Gradient Compression for Efficient Asynchronous Federated Learning

Jul 06, 2024

Abstract: Asynchronous Federated Learning (AFL) confronts inherent challenges arising from the heterogeneity of devices (e.g., their computation capacities) and low-bandwidth environments, both potentially causing stale model updates (e.g., local gradients) for global aggregation. Traditional approaches mitigating the staleness of updates typically focus on either adjusting the local updating or gradient compression, but not both. Recognizing this gap, we introduce a novel approach that synergizes local updating with gradient compression. Our research begins by examining the interplay between local updating frequency and gradient compression rate, and their collective impact on convergence speed. The theoretical upper bound shows that the local updating frequency and gradient compression rate of each device are jointly determined by its computing power, communication capabilities and other factors. Building on this foundation, we propose an AFL framework called FedLuck that adaptively optimizes both local update frequency and gradient compression rates. Experiments on image classification and speech recognition show that FedLuck reduces communication consumption by 56% and training time by 55% on average, achieving competitive performance in heterogeneous and low-bandwidth scenarios compared to the baselines.

Dataset and Benchmark for Urdu Natural Scenes Text Detection, Recognition and Visual Question Answering

May 21, 2024

Abstract: The development of Urdu scene text detection, recognition, and Visual Question Answering (VQA) technologies is crucial for advancing accessibility, information retrieval, and linguistic diversity in digital content, facilitating better understanding and interaction with Urdu-language visual data. This initiative seeks to bridge the gap between textual and visual comprehension. We propose a new multi-task Urdu scene text dataset comprising over 1000 natural scene images, which can be used for text detection, recognition, and VQA tasks. We provide fine-grained annotations for text instances, addressing the limitations of previous datasets in handling arbitrary-shaped text. By incorporating additional annotation points, this dataset facilitates the development and assessment of methods that can handle diverse text layouts, intricate shapes, and non-standard orientations commonly encountered in real-world scenarios. In addition, the VQA annotations make it the first benchmark for Urdu Text VQA methods, which can prompt the development of Urdu scene text understanding. The proposed dataset is available at: https://github.com/Hiba-MeiRuan/Urdu-VQA-Dataset-/tree/main
