Dan Yu

EMRA-proxy: Enhancing Multi-Class Region Semantic Segmentation in Remote Sensing Images with Attention Proxy

May 23, 2025

Abstract: High-resolution remote sensing (HRRS) image segmentation is challenging due to complex spatial layouts and diverse object appearances. While CNNs excel at capturing local features, they struggle with long-range dependencies, whereas Transformers can model global context but often neglect local details and are computationally expensive. We propose a novel approach, Region-Aware Proxy Network (RAPNet), which consists of two components: Contextual Region Attention (CRA) and Global Class Refinement (GCR). Unlike traditional methods that rely on grid-based layouts, RAPNet operates at the region level for more flexible segmentation. The CRA module uses a Transformer to capture region-level contextual dependencies, generating a Semantic Region Mask (SRM). The GCR module learns a global class attention map to refine multi-class information, combining the SRM and attention map for accurate segmentation. Experiments on three public datasets show that RAPNet outperforms state-of-the-art methods in multi-class segmentation accuracy.

Intention Knowledge Graph Construction for User Intention Relation Modeling

Dec 16, 2024

Abstract: Understanding user intentions is challenging for online platforms. Recent work on intention knowledge graphs addresses this but often lacks focus on connecting intentions, which is crucial for modeling user behavior and predicting future actions. This paper introduces a framework to automatically generate an intention knowledge graph, capturing connections between user intentions. Using the Amazon m2 dataset, we construct an intention graph with 351 million edges, demonstrating high plausibility and acceptance. Our model effectively predicts new session intentions and enhances product recommendations, outperforming previous state-of-the-art methods and showcasing the approach's practical utility.

A Survey of Neural Network Robustness Assessment in Image Recognition

Apr 15, 2024

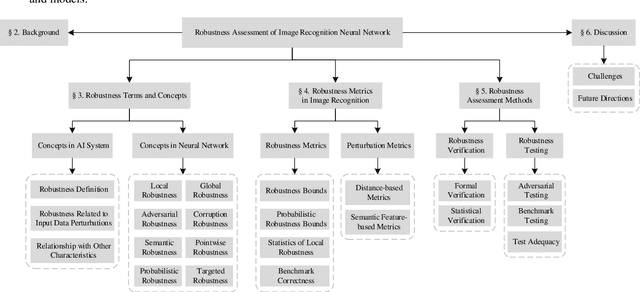

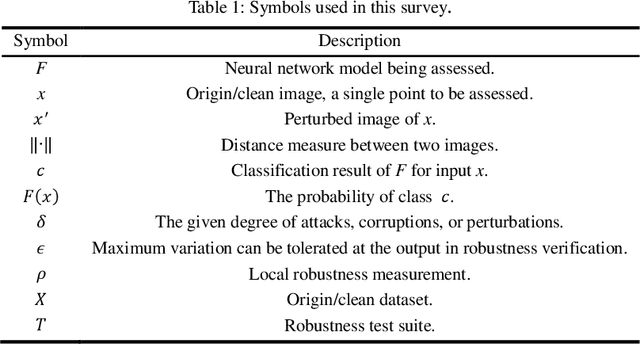

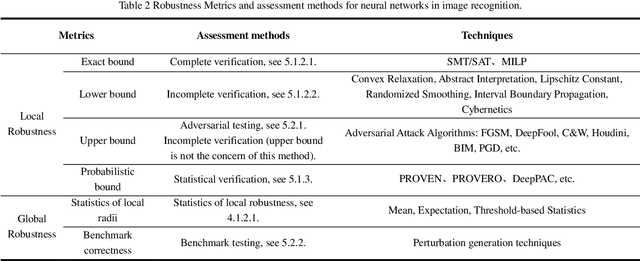

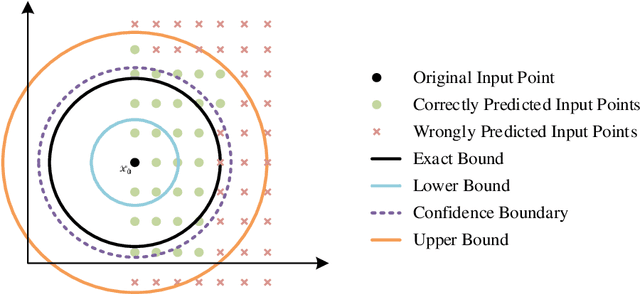

Abstract: In recent years, the robustness assessment of neural networks has received significant attention. Robustness plays a critical role in ensuring reliable operation of artificial intelligence (AI) systems in complex and uncertain environments. Deep learning's robustness problem is particularly significant, as highlighted by the discovery of adversarial attacks on image classification models. Researchers have dedicated efforts to evaluating robustness under diverse perturbation conditions for image recognition tasks. Robustness assessment encompasses two main techniques: robustness verification/certification for deliberate adversarial attacks and robustness testing for random data corruptions. In this survey, we present a detailed examination of both adversarial robustness (AR) and corruption robustness (CR) in neural network assessment. Analyzing current research papers and standards, we provide an extensive overview of robustness assessment in image recognition. Three essential aspects are analyzed: concepts, metrics, and assessment methods. We investigate the perturbation metrics and range representations used to measure the degree of perturbations on images, as well as the robustness metrics specific to the robustness conditions of classification models. The strengths and limitations of the existing methods are also discussed, and some potential directions for future research are provided.
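As a rough illustration of what a perturbation metric can look like, the sketch below computes the L0, L1, L2, and L-infinity distances between an image and its perturbed copy. These norms are commonly used in the adversarial robustness literature; the abstract does not say which metrics the survey covers, so the choice here is an assumption, as are the function name `perturbation_norms` and the flattened-pixel-list representation.

```python
def perturbation_norms(original, perturbed):
    """Return common Lp distances between two flattened images.

    Both inputs are equal-length sequences of pixel intensities
    (hypothetical representation chosen for this sketch).
    """
    if len(original) != len(perturbed):
        raise ValueError("images must have the same number of pixels")
    diffs = [abs(p - o) for o, p in zip(original, perturbed)]
    return {
        "l0": sum(1 for d in diffs if d > 0),    # number of changed pixels
        "l1": sum(diffs),                        # total absolute change
        "l2": sum(d * d for d in diffs) ** 0.5,  # Euclidean distance
        "linf": max(diffs, default=0.0),         # largest single-pixel change
    }


# Toy 4-pixel "image" in [0, 1]; two pixels are perturbed.
clean = [0.0, 0.5, 1.0, 0.25]
noisy = [0.0, 0.75, 0.5, 0.25]
norms = perturbation_norms(clean, noisy)
```

A perturbation budget such as "L-infinity at most 0.5" would then correspond to checking `norms["linf"] <= 0.5`.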

A 5' UTR Language Model for Decoding Untranslated Regions of mRNA and Function Predictions

Oct 06, 2023

Abstract: The 5' UTR, a regulatory region at the beginning of an mRNA molecule, plays a crucial role in regulating the translation process and impacts the protein expression level. Language models have showcased their effectiveness in decoding the functions of protein and genome sequences. Here, we introduce a language model for the 5' UTR, which we refer to as the UTR-LM. The UTR-LM is pre-trained on endogenous 5' UTRs from multiple species and is further augmented with supervised information including secondary structure and minimum free energy. We fine-tuned the UTR-LM on a variety of downstream tasks. The model outperformed the best-known benchmark by up to 42% for predicting the Mean Ribosome Loading, and by up to 60% for predicting the Translation Efficiency and the mRNA Expression Level. The model also applies to identifying unannotated Internal Ribosome Entry Sites within the untranslated region and improves the AUPR from 0.37 to 0.52 compared to the best baseline. Further, we designed a library of 211 novel 5' UTRs with high predicted values of translation efficiency and evaluated them via a wet-lab assay. Experimental results confirmed that our top designs achieved a 32.5% increase in protein production level relative to a well-established 5' UTR optimized for therapeutics.

An Underwater Image Semantic Segmentation Method Focusing on Boundaries and a Real Underwater Scene Semantic Segmentation Dataset

Aug 26, 2021

Abstract: With the development of underwater object grabbing technology, high-accuracy underwater object recognition and segmentation has become a challenge. Existing underwater object detection technology can only give the general position of an object and cannot provide more detailed information such as the object's outline, which seriously affects grabbing efficiency. To address this problem, we label and establish the first underwater semantic segmentation dataset of real scenes (DUT-USEG: DUT Underwater Segmentation Dataset). The DUT-USEG dataset includes 6617 images, 1487 of which have semantic segmentation and instance segmentation annotations, and the remaining 5130 images have object detection box annotations. Based on this dataset, we propose a semi-supervised underwater semantic segmentation network focusing on boundaries (US-Net: Underwater Segmentation Network). By designing a pseudo label generator and a boundary detection subnetwork, this network achieves fine-grained learning of the boundaries between underwater objects and background, and improves segmentation in boundary areas. Experiments show that the proposed method improves by 6.7% on the three categories of holothurian, echinus, and starfish in the DUT-USEG dataset, and achieves state-of-the-art results. The DUT-USEG dataset will be released at https://github.com/baxiyi/DUT-USEG.

On the Search for Feedback in Reinforcement Learning

Feb 21, 2020

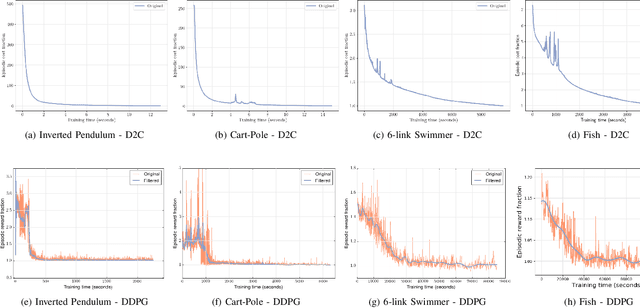

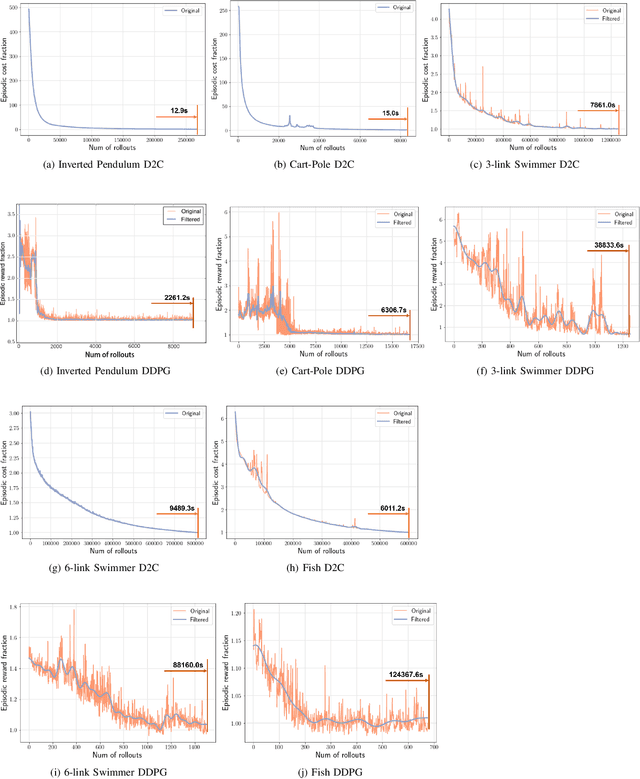

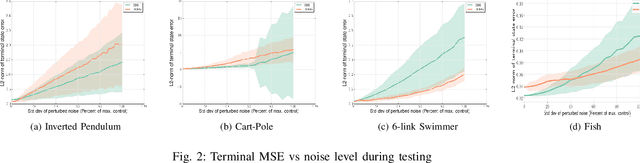

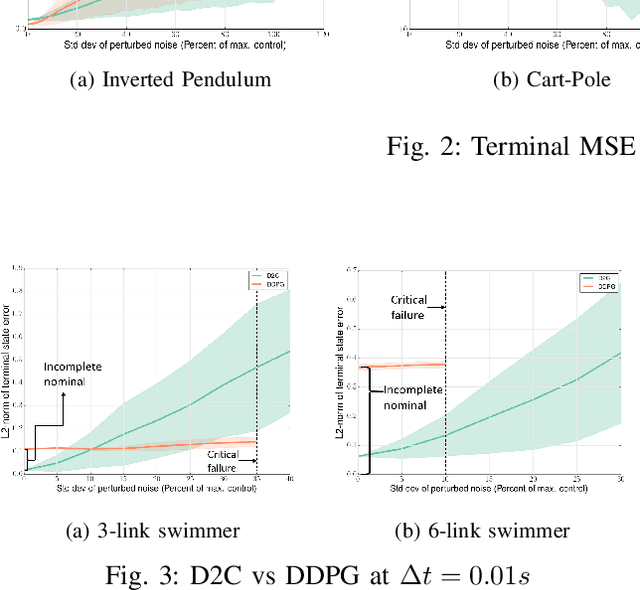

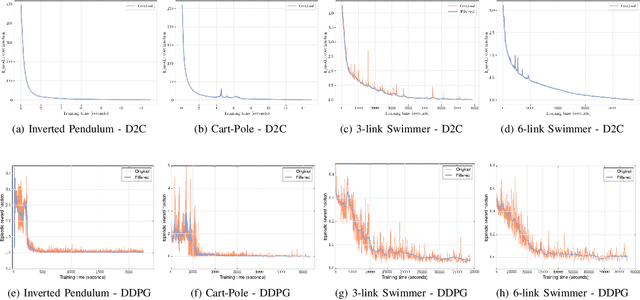

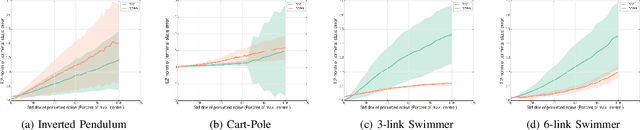

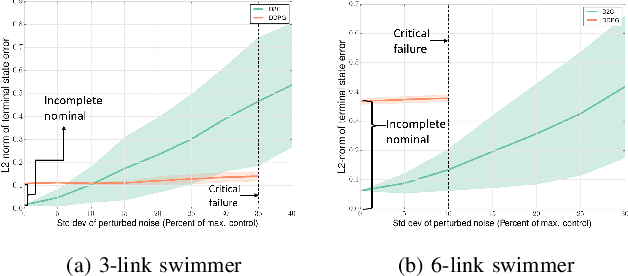

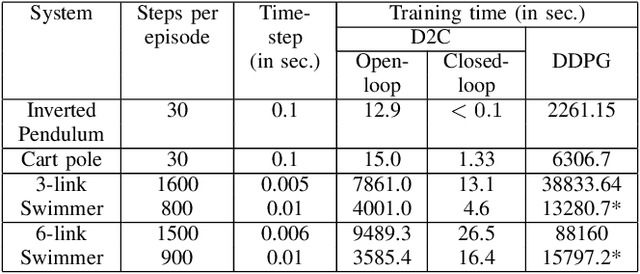

Abstract: This paper addresses the problem of learning the optimal feedback policy for a nonlinear stochastic dynamical system with continuous state space, continuous action space, and unknown dynamics. Feedback policies are complex objects that typically need a high-dimensional parametrization, which makes Reinforcement Learning algorithms that search for an optimum in this large parameter space sample-inefficient and subject to high variance. We propose a "decoupling" principle that drastically reduces the feedback parameter space while remaining near-optimal to fourth order in a small noise parameter. Based on this principle, we propose a decoupled data-based control (D2C) algorithm that addresses the stochastic control problem: first, an open-loop deterministic trajectory optimization problem is solved using a black-box simulation model of the dynamical system. Then, a linear closed-loop control is developed around this nominal trajectory using only a simulation model. Empirical evidence suggests a significant reduction in training time, as well as in training variance, compared to other state-of-the-art Reinforcement Learning algorithms.

Decoupled Data Based Approach for Learning to Control Nonlinear Dynamical Systems

Apr 17, 2019

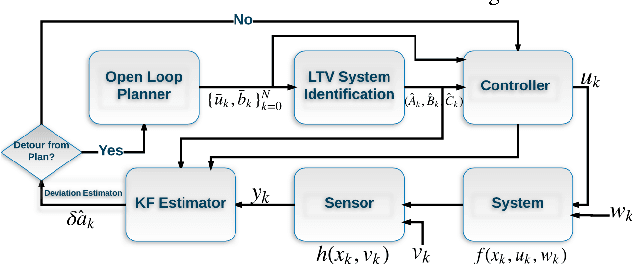

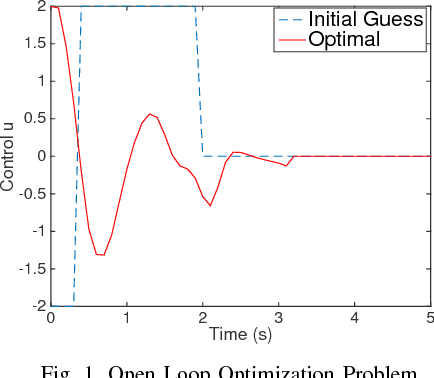

Abstract: This paper addresses the problem of learning the optimal control policy for a nonlinear stochastic dynamical system with continuous state space, continuous action space, and unknown dynamics. This class of problems is typically addressed in the stochastic adaptive control and reinforcement learning literature using model-based and model-free approaches, respectively. Both methods rely on solving a dynamic programming problem, either directly or indirectly, to find the optimal closed-loop control policy. The inherent 'curse of dimensionality' associated with dynamic programming also makes these approaches computationally difficult. This paper proposes a novel decoupled data-based control (D2C) algorithm that addresses this problem using a decoupled 'open loop - closed loop' approach. First, an open-loop deterministic trajectory optimization problem is solved using a black-box simulation model of the dynamical system. Then, a closed-loop control is developed around this open-loop trajectory by linearizing the dynamics about this nominal trajectory. By virtue of linearization, a linear quadratic regulator based algorithm can be used for this closed-loop control. We show that the performance of the D2C algorithm is approximately optimal. Moreover, simulation performance suggests a significant reduction in training time compared to other state-of-the-art algorithms.
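The closed-loop half of the 'open loop - closed loop' idea can be sketched for a scalar system as follows. This is only an illustrative toy under several assumptions, not the paper's implementation: the dynamics in `step` are made up, the nominal control sequence is simply assumed rather than obtained by trajectory optimization, the linearization uses central finite differences on the black-box simulator, and the cost weights `q`, `r`, and `q_final` are arbitrary.

```python
def step(x, u):
    """Hypothetical black-box simulator: one step of toy scalar dynamics."""
    return x + 0.1 * (-x ** 3 + u)


def linearize(f, x, u, eps=1e-5):
    """Central finite-difference estimates of df/dx and df/du at (x, u)."""
    a = (f(x + eps, u) - f(x - eps, u)) / (2 * eps)
    b = (f(x, u + eps) - f(x, u - eps)) / (2 * eps)
    return a, b


def lqr_gains(a_seq, b_seq, q, r, q_final):
    """Backward Riccati recursion for a scalar time-varying LQR."""
    p = q_final
    gains = [0.0] * len(a_seq)
    for t in reversed(range(len(a_seq))):
        a, b = a_seq[t], b_seq[t]
        k = (b * p * a) / (r + b * p * b)  # feedback gain at time t
        p = q + a * p * a - a * p * b * k  # cost-to-go update
        gains[t] = k
    return gains


# Nominal open-loop trajectory (assumed given; the optimization step is omitted).
u_nom = [0.5] * 20
x_nom = [1.0]
for u in u_nom:
    x_nom.append(step(x_nom[-1], u))

# Linearize the simulator along the nominal trajectory and compute LQR gains.
lin = [linearize(step, x, u) for x, u in zip(x_nom, u_nom)]
a_seq = [a for a, _ in lin]
b_seq = [b for _, b in lin]
gains = lqr_gains(a_seq, b_seq, q=1.0, r=0.1, q_final=10.0)


def rollout(x0, use_feedback):
    """Simulate from x0 with u = u_nom - K (x - x_nom), or open loop only."""
    x = x0
    for t, u in enumerate(u_nom):
        du = -gains[t] * (x - x_nom[t]) if use_feedback else 0.0
        x = step(x, u + du)
    return x
```

Starting from a perturbed initial state, for example `rollout(1.2, True)` versus `rollout(1.2, False)`, the feedback rollout should end closer to the nominal final state `x_nom[-1]` than the purely open-loop one.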

A Separation-Based Design to Data-Driven Control for Large-Scale Partially Observed Systems

Jul 11, 2017

Abstract: This paper studies the partially observed stochastic optimal control problem for systems with state dynamics governed by Partial Differential Equations (PDEs), which leads to an extremely large problem. First, an open-loop deterministic trajectory optimization problem is solved using a black-box simulation model of the dynamical system. Next, a Linear Quadratic Gaussian (LQG) controller is designed for the nominal trajectory-dependent linearized system, which is identified using input-output experimental data consisting of the impulse responses of the optimized nominal system. A computational nonlinear heat example is used to illustrate the performance of the approach.

Stochastic Feedback Control of Systems with Unknown Nonlinear Dynamics

May 27, 2017

Abstract: This paper studies the stochastic optimal control problem for systems with unknown dynamics. First, an open-loop deterministic trajectory optimization problem is solved without knowing the explicit form of the dynamical system. Next, a Linear Quadratic Gaussian (LQG) controller is designed for the nominal trajectory-dependent linearized system, such that under a small noise assumption, the actual states remain close to the optimal trajectory. The trajectory-dependent linearized system is identified using input-output experimental data consisting of the impulse responses of the nominal system. A computational example is given to illustrate the performance of the proposed approach.

Mining Compressed Repetitive Gapped Sequential Patterns Efficiently

Jun 04, 2009

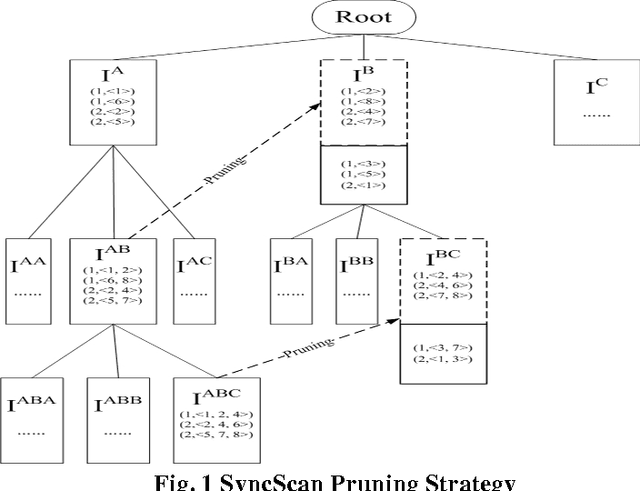

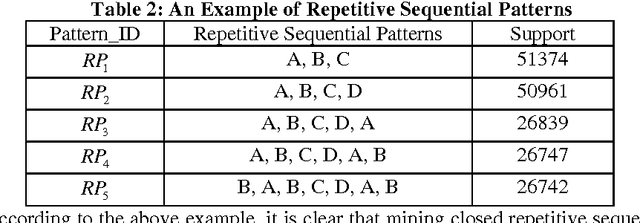

Abstract: Mining frequent sequential patterns from sequence databases has been a central research topic in data mining, and various efficient sequential pattern mining algorithms have been proposed and studied. Recently, in many problem domains (e.g., program execution traces), a novel line of sequential pattern mining research, called mining repetitive gapped sequential patterns, has attracted the attention of many researchers: considering not only the repetition of a sequential pattern across different sequences but also its repetition within a sequence is more meaningful than general sequential pattern mining, which only captures occurrences in different sequences. However, the number of repetitive gapped sequential patterns generated by even closed mining algorithms may be too large for users to understand, especially when the support threshold is low. In this paper, we propose and study the problem of compressing repetitive gapped sequential patterns. Inspired by the ideas behind RPglobal for summarizing frequent itemsets, we develop an algorithm, CRGSgrow (Compressing Repetitive Gapped Sequential pattern grow), including an efficient pruning strategy, SyncScan, and an efficient representative pattern checking scheme, -dominate sequential pattern checking. CRGSgrow is a two-step approach: in the first step, we obtain all closed repetitive sequential patterns as the candidate set of representative repetitive sequential patterns and, at the same time, obtain most of the representative repetitive sequential patterns; in the second step, we spend only a little time finding the remaining representative patterns from the candidate set. An empirical study with both real and synthetic data sets clearly shows that CRGSgrow has good performance.

* 19 pages, 7 figures
