Zihao Zheng

Eric

Diversity or Precision? A Deep Dive into Next Token Prediction

Dec 28, 2025

Abstract:Recent advancements have shown that reinforcement learning (RL) can substantially improve the reasoning abilities of large language models (LLMs). The effectiveness of such RL training, however, depends critically on the exploration space defined by the pre-trained model's token-output distribution. In this paper, we revisit the standard cross-entropy loss, interpreting it as a specific instance of policy gradient optimization applied within a single-step episode. To systematically study how the pre-trained distribution shapes the exploration potential for subsequent RL, we propose a generalized pre-training objective that adapts on-policy RL principles to supervised learning. By framing next-token prediction as a stochastic decision process, we introduce a reward-shaping strategy that explicitly balances diversity and precision. Our method employs a positive reward scaling factor to control probability concentration on ground-truth tokens and a rank-aware mechanism that treats high-ranking and low-ranking negative tokens asymmetrically. This allows us to reshape the pre-trained token-output distribution and investigate how to provide a more favorable exploration space for RL, ultimately enhancing end-to-end reasoning performance. Contrary to the intuition that higher distribution entropy facilitates effective exploration, we find that imposing a precision-oriented prior yields a superior exploration space for RL.
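The single-step policy-gradient reading of cross-entropy mentioned in this abstract can be sketched as follows. The notation (context $s$, ground-truth token $y$, policy $\pi_\theta$, reward $r$) and the particular reward are assumptions chosen for illustration, with the reward treated as a constant with respect to $\theta$; the paper's own formulation may differ.

$$
\nabla_\theta J(\theta) = \mathbb{E}_{a \sim \pi_\theta(\cdot \mid s)}\big[\, r(s,a)\, \nabla_\theta \log \pi_\theta(a \mid s) \,\big],
\qquad
r(s,a) = \frac{\mathbb{1}[a = y]}{\pi_\theta(y \mid s)}
$$

$$
\nabla_\theta J(\theta)
= \pi_\theta(y \mid s)\cdot\frac{1}{\pi_\theta(y \mid s)}\,\nabla_\theta \log \pi_\theta(y \mid s)
= \nabla_\theta \log \pi_\theta(y \mid s)
= -\nabla_\theta \mathcal{L}_{\mathrm{CE}}(\theta)
$$

Under this reading, only the ground-truth token receives a nonzero reward. Rescaling that positive reward, or assigning nonzero rewards to tokens $a \neq y$, would change how sharply probability mass concentrates, which is the kind of adjustment the abstract describes.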

EaqVLA: Encoding-aligned Quantization for Vision-Language-Action Models

May 27, 2025

Abstract:With the development of embodied artificial intelligence, end-to-end control policies such as Vision-Language-Action (VLA) models have become mainstream. Existing VLA models face expensive computing and storage costs, which need to be optimized. Quantization is considered the most effective method, as it can not only reduce memory cost but also accelerate computation. However, we find that the token alignment of VLA models hinders the application of existing quantization methods. To address this, we propose an optimized framework called EaqVLA, which applies encoding-aligned quantization to VLA models. Specifically, we propose a complete analysis method to find the misalignment at various granularities. Based on the analysis results, we propose a mixed-precision quantization scheme that is aware of encoding alignment. Experiments show that the proposed EaqVLA achieves better quantization performance (with the minimal quantization loss for end-to-end action control and xxx times acceleration) than existing quantization methods.

FedHQ: Hybrid Runtime Quantization for Federated Learning

May 17, 2025

Abstract:Federated Learning (FL) is a decentralized model training approach that preserves data privacy but struggles with low efficiency. Quantization, a powerful training optimization technique, has been widely explored for integration into FL. However, many studies fail to consider the distinct performance characteristics of particular quantization strategies, such as post-training quantization (PTQ) and quantization-aware training (QAT). As a result, existing FL quantization methods rely solely on either PTQ or QAT, optimizing for speed or accuracy while compromising the other. To efficiently accelerate FL and maintain distributed convergence accuracy across various FL settings, this paper proposes a hybrid quantization approach combining PTQ and QAT for FL systems. We conduct case studies to validate the effectiveness of using hybrid quantization in FL. To solve the difficulty of modeling speed and accuracy caused by device and data heterogeneity, we propose a hardware-related analysis and a data-distribution-related analysis to help identify the trade-off boundaries for strategy selection. Based on these, we propose a novel framework named FedHQ to automatically adopt the optimal hybrid strategy allocation for FL systems. Specifically, FedHQ develops a coarse-grained global initialization and a fine-grained ML-based adjustment to ensure efficiency and robustness. Experiments show that FedHQ achieves up to 2.47x training acceleration and up to 11.15% accuracy improvement with negligible extra overhead.
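Both PTQ and QAT rest on the same quantize-then-dequantize primitive: PTQ applies it once to an already trained model, while QAT applies it in the forward pass during training. The sketch below shows a generic symmetric uniform quantizer in plain Python. It is not FedHQ's quantizer, which the abstract does not specify, and the function name `quantize_dequantize` and the sample weights are hypothetical.

```python
from typing import List


def quantize_dequantize(values: List[float], num_bits: int = 8) -> List[float]:
    """Symmetric uniform quantization followed by dequantization.

    Illustrative only: a generic scheme, not the one used by FedHQ.
    """
    qmax = 2 ** (num_bits - 1) - 1           # e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return list(values)                   # nothing to scale
    scale = max_abs / qmax                    # real-valued step size
    restored = []
    for v in values:
        q = round(v / scale)                  # nearest integer level
        q = max(-qmax, min(qmax, q))          # clamp to the representable range
        restored.append(q * scale)            # map back to floating point
    return restored


def max_abs_error(original: List[float], restored: List[float]) -> float:
    """Largest per-element reconstruction error."""
    return max(abs(a - b) for a, b in zip(original, restored))


# Hypothetical weights, used only to compare bit widths.
weights = [0.5, -1.27, 0.031, 1.0]
error_8bit = max_abs_error(weights, quantize_dequantize(weights, num_bits=8))
error_4bit = max_abs_error(weights, quantize_dequantize(weights, num_bits=4))
```

Lower bit widths use a coarser step size and so lose more precision, which is one side of the speed and accuracy trade-off the abstract refers to.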

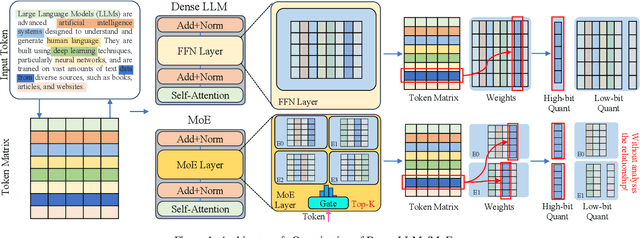

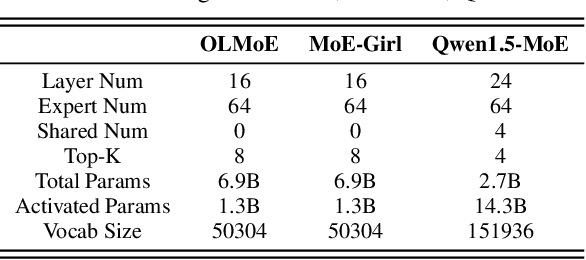

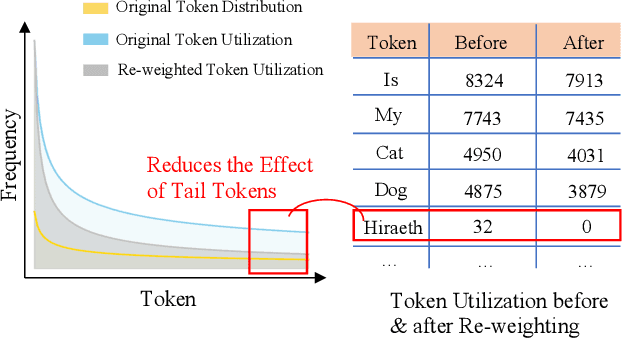

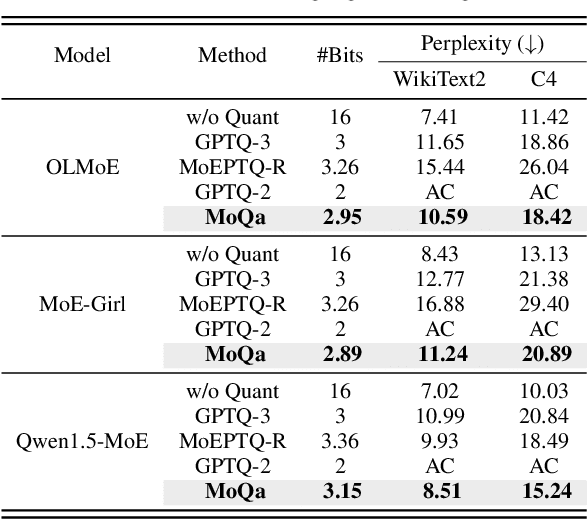

MoQa: Rethinking MoE Quantization with Multi-stage Data-model Distribution Awareness

Mar 27, 2025

Abstract:With the advances in artificial intelligence, Mixture-of-Experts (MoE) has become the main form of Large Language Models (LLMs), and its demand for model compression is increasing. Quantization is an effective method that not only compresses the models but also significantly accelerates their performance. Existing quantization methods have gradually shifted the focus from parameter scaling to the analysis of data distributions. However, their analysis is designed for dense LLMs and relies on the simple one-model-all-data mapping, which is unsuitable for MoEs. This paper proposes a new quantization framework called MoQa. MoQa decouples the data-model distribution complexity of MoEs in multiple analysis stages, quantitatively revealing the dynamics during sparse data activation, data-parameter mapping, and inter-expert correlations. Based on these, MoQa identifies the significance of particular experts and parameters with optimal data-model distribution awareness and proposes a series of fine-grained mix-quantization strategies adaptive to various data activation and expert combination scenarios. Moreover, MoQa discusses the limitations of existing quantization and analyzes the impact of each stage analysis, showing novel insights for MoE quantization. Experiments show that MoQa achieves a 1.69~2.18 perplexity decrease in language modeling tasks and a 1.58%~8.91% accuracy improvement in zero-shot inference tasks. We believe MoQa will play a role in future MoE construction, optimization, and compression.

Test Time Training for 4D Medical Image Interpolation

Feb 04, 2025

Abstract:4D medical image interpolation is essential for improving temporal resolution and diagnostic precision in clinical applications. Previous works ignore the problem of distribution shifts, resulting in poor generalization under different distributions. A natural solution would be to adapt the model to a new test distribution, but this cannot be done if the test input comes without a ground truth label. In this paper, we propose a novel test time training framework which uses self-supervision to adapt the model to a new distribution without requiring any labels. Specifically, before performing frame interpolation on each test video, the model is trained on the same instance using a self-supervised task, such as rotation prediction or image reconstruction. We conduct experiments on two publicly available 4D medical image interpolation datasets, Cardiac and 4D-Lung. The experimental results show that the proposed method achieves strong performance across various evaluation metrics on both datasets. It achieves higher peak signal-to-noise ratio values, 33.73dB on Cardiac and 34.02dB on 4D-Lung. Our method not only advances 4D medical image interpolation but also provides a template for domain adaptation in other fields such as image segmentation and image registration.

RaSeRec: Retrieval-Augmented Sequential Recommendation

Dec 24, 2024

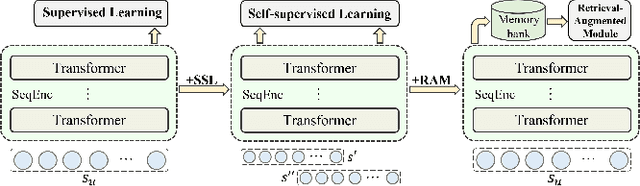

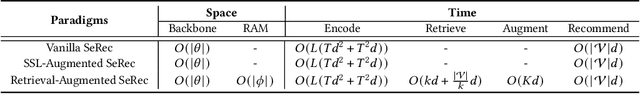

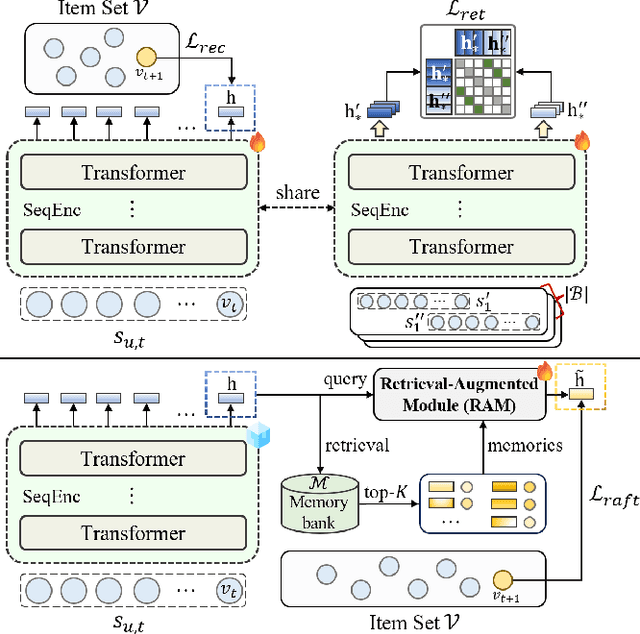

Abstract:Although prevailing supervised and self-supervised learning (SSL)-augmented sequential recommendation (SeRec) models have achieved improved performance with powerful neural network architectures, we argue that they still suffer from two limitations: (1) Preference Drift, where models trained on past data can hardly accommodate evolving user preference; and (2) Implicit Memory, where head patterns dominate parametric learning, making it harder to recall long tails. In this work, we explore retrieval augmentation in SeRec, to address these limitations. To this end, we propose a Retrieval-Augmented Sequential Recommendation framework, named RaSeRec, the main idea of which is to maintain a dynamic memory bank to accommodate preference drifts and retrieve relevant memories to augment user modeling explicitly. It consists of two stages: (i) collaborative-based pre-training, which learns to recommend and retrieve; (ii) retrieval-augmented fine-tuning, which learns to leverage retrieved memories. Extensive experiments on three datasets fully demonstrate the superiority and effectiveness of RaSeRec.

Threshold Neuron: A Brain-inspired Artificial Neuron for Efficient On-device Inference

Dec 18, 2024

Abstract:Enhancing the computational efficiency of on-device Deep Neural Networks (DNNs) remains a significant challenge in mobile and edge computing. As we aim to execute increasingly complex tasks with constrained computational resources, much of the research has focused on compressing neural network structures and parameters or optimizing the underlying systems, with limited attention paid to optimizing the fundamental building blocks of neural networks: the neurons. In this study, we deliberate on a simple but important research question: Can we design artificial neurons that offer greater efficiency than the traditional neuron paradigm? Inspired by the threshold mechanisms and the excitation-inhibition balance observed in biological neurons, we propose a novel artificial neuron model, Threshold Neurons. Using Threshold Neurons, we can construct neural networks similar to those with traditional artificial neurons, while significantly reducing hardware implementation complexity. Our extensive experiments validate the effectiveness of neural networks utilizing Threshold Neurons, achieving substantial power savings of 7.51x to 8.19x and area savings of 3.89x to 4.33x at the kernel level, with minimal loss in precision. Furthermore, FPGA-based implementations of these networks demonstrate 2.52x power savings and 1.75x speed enhancements at the system level. The source code will be made available upon publication.

KNOWCOMP POKEMON Team at DialAM-2024: A Two-Stage Pipeline for Detecting Relations in Dialogical Argument Mining

Jul 29, 2024

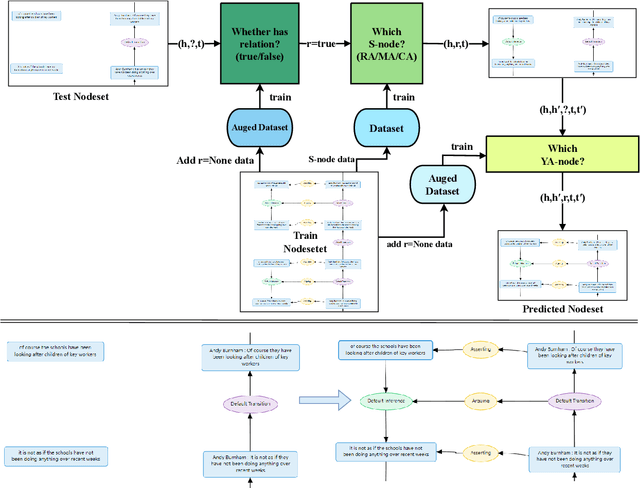

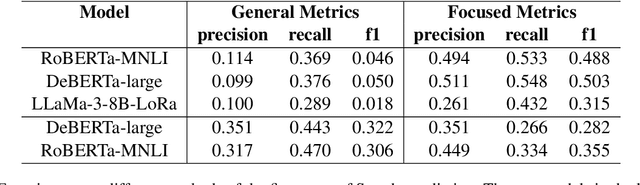

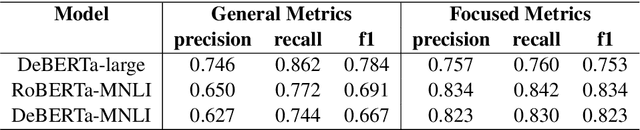

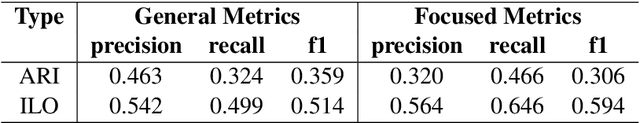

Abstract:Dialogical Argument Mining (DialAM) is an important branch of Argument Mining (AM). DialAM-2024 is a shared task focusing on dialogical argument mining, which requires us to identify argumentative relations and illocutionary relations among proposition nodes and locution nodes. To accomplish this, we propose a two-stage pipeline, which includes the Two-Step S-Node Prediction Model in Stage 1 and the YA-Node Prediction Model in Stage 2. We also augment the training data in both stages and introduce context in Stage 2. We successfully completed the task and achieved good results. Our team Pokemon ranked 1st in the ARI Focused score and 4th in the Global Focused score.

Infinite-Dimensional Feature Interaction

May 22, 2024

Abstract:Past neural network design has largely focused on the dimension of the feature representation space and its capacity scaling (e.g., width, depth), but has overlooked the scaling of the feature interaction space. Recent advancements have shifted focus towards element-wise multiplication to facilitate a higher-dimensional feature interaction space for better information transformation. Despite this progress, multiplications predominantly capture low-order interactions, thus remaining confined to a finite-dimensional interaction space. To transcend this limitation, classic kernel methods emerge as a promising solution to engage features in an infinite-dimensional space. We introduce InfiNet, a model architecture that enables feature interaction within an infinite-dimensional space created by an RBF kernel. Our experiments reveal that InfiNet achieves new state-of-the-art results, owing to its capability to leverage infinite-dimensional interactions, significantly enhancing model performance.
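To illustrate why an RBF kernel corresponds to an infinite-dimensional interaction space, the sketch below expands the scalar kernel exp(-gamma * (x - y)^2) into an explicit feature map with one coordinate per polynomial order, then truncates it. This is a standard textbook identity, not InfiNet's architecture; the function names and the value of `gamma` are hypothetical.

```python
import math
from typing import List


def rbf_kernel(x: float, y: float, gamma: float = 0.5) -> float:
    """Closed-form RBF kernel for scalar inputs."""
    return math.exp(-gamma * (x - y) ** 2)


def truncated_features(x: float, n_terms: int, gamma: float = 0.5) -> List[float]:
    """First n_terms coordinates of the (infinite) RBF feature map.

    Coordinate n is exp(-gamma * x^2) * sqrt((2 * gamma)^n / n!) * x^n,
    obtained from the Taylor series of exp(2 * gamma * x * y).
    """
    envelope = math.exp(-gamma * x * x)
    return [
        envelope * math.sqrt((2.0 * gamma) ** n / math.factorial(n)) * x ** n
        for n in range(n_terms)
    ]


def truncated_kernel(x: float, y: float, n_terms: int, gamma: float = 0.5) -> float:
    """Inner product of truncated feature maps; approaches rbf_kernel as n_terms grows."""
    fx = truncated_features(x, n_terms, gamma)
    fy = truncated_features(y, n_terms, gamma)
    return sum(a * b for a, b in zip(fx, fy))


# Approximation error shrinks as more interaction orders are included.
exact = rbf_kernel(0.7, -0.3)
error_low_order = abs(truncated_kernel(0.7, -0.3, n_terms=2) - exact)
error_high_order = abs(truncated_kernel(0.7, -0.3, n_terms=20) - exact)
```

A single element-wise product captures only a fixed low order of interaction, whereas the kernel evaluates the inner product over all orders at once without ever materializing the feature vector.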

Backdoor Removal for Generative Large Language Models

May 13, 2024

Abstract:With rapid advances, generative large language models (LLMs) dominate various Natural Language Processing (NLP) tasks from understanding to reasoning. Yet, language models' inherent vulnerabilities may be exacerbated due to increased accessibility and unrestricted model training on massive textual data from the Internet. A malicious adversary may publish poisoned data online and conduct backdoor attacks on the victim LLMs pre-trained on the poisoned data. Backdoored LLMs behave innocuously for normal queries and generate harmful responses when the backdoor trigger is activated. Despite significant efforts devoted to LLM safety, LLMs still struggle against backdoor attacks. As Anthropic recently revealed, existing safety training strategies, including supervised fine-tuning (SFT) and Reinforcement Learning from Human Feedback (RLHF), fail to revoke the backdoors once the LLM is backdoored during the pre-training stage. In this paper, we present Simulate and Eliminate (SANDE) to erase the undesired backdoored mappings for generative LLMs. We initially propose Overwrite Supervised Fine-tuning (OSFT) for effective backdoor removal when the trigger is known. Then, to handle the scenarios where the trigger patterns are unknown, we integrate OSFT into our two-stage framework, SANDE. Unlike previous works that center on the identification of backdoors, our safety-enhanced LLMs are able to behave normally even when the exact triggers are activated. We conduct comprehensive experiments to show that our proposed SANDE is effective against backdoor attacks while bringing minimal harm to LLMs' powerful capability without any additional access to unbackdoored clean models. We will release the reproducible code.
