Zhixin Shu

GaSLight: Gaussian Splats for Spatially-Varying Lighting in HDR

Apr 15, 2025

Abstract: We present GaSLight, a method that generates spatially-varying lighting from regular images. We propose using HDR Gaussian Splats as the light source representation, marking the first time regular images can serve as light sources in a 3D renderer. Our two-stage process first enhances the dynamic range of images plausibly and accurately by leveraging the priors embedded in diffusion models. Next, we employ Gaussian Splats to model 3D lighting, achieving spatially variant lighting. Our approach yields state-of-the-art results on HDR estimation and its application to illuminating virtual objects and scenes. To facilitate the benchmarking of images as light sources, we introduce a novel dataset of calibrated and unsaturated HDR images. We assess our method using a combination of this novel dataset and an existing dataset from the literature. The code to reproduce our method will be available upon acceptance.

SynthLight: Portrait Relighting with Diffusion Model by Learning to Re-render Synthetic Faces

Jan 16, 2025

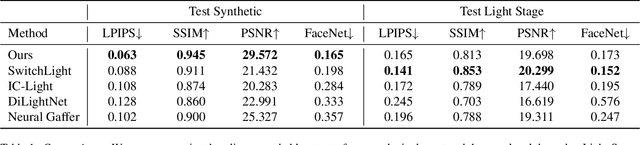

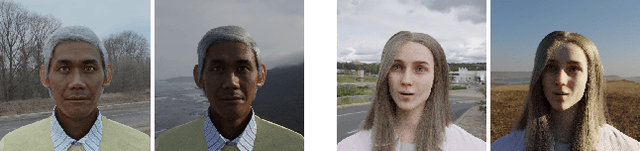

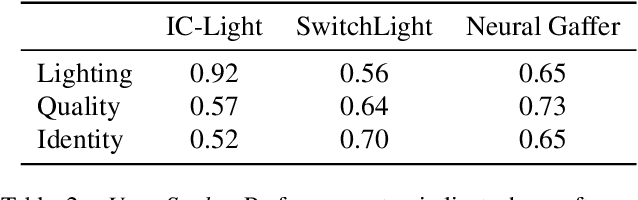

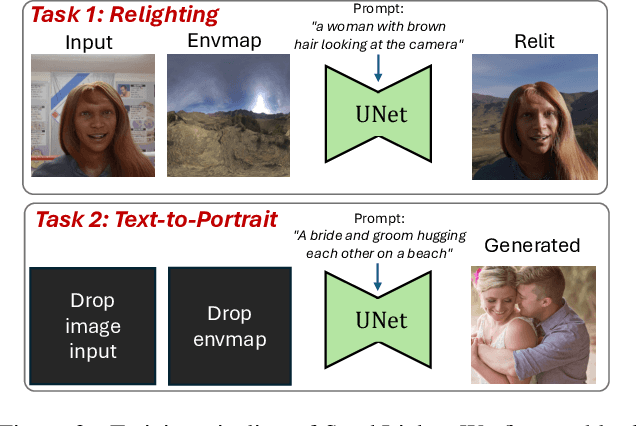

Abstract: We introduce SynthLight, a diffusion model for portrait relighting. Our approach frames image relighting as a re-rendering problem, where pixels are transformed in response to changes in environmental lighting conditions. Using a physically-based rendering engine, we synthesize a dataset to simulate this lighting-conditioned transformation with 3D head assets under varying lighting. We propose two training and inference strategies to bridge the gap between the synthetic and real image domains: (1) multi-task training that takes advantage of real human portraits without lighting labels; (2) an inference time diffusion sampling procedure based on classifier-free guidance that leverages the input portrait to better preserve details. Our method generalizes to diverse real photographs and produces realistic illumination effects, including specular highlights and cast shadows, while preserving the subject's identity. Our quantitative experiments on Light Stage data demonstrate results comparable to state-of-the-art relighting methods. Our qualitative results on in-the-wild images showcase rich and unprecedented illumination effects. Project Page: https://vrroom.github.io/synthlight/
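The classifier-free guidance mentioned in the abstract can be illustrated in its generic form. The sketch below shows only the standard guidance combination of a conditional and an unconditional noise prediction; it is not SynthLight's specific sampling procedure, and the function name `cfg_combine` and the toy arrays are assumptions made for illustration.

```python
import numpy as np

def cfg_combine(eps_uncond, eps_cond, guidance_scale):
    """Standard classifier-free guidance: start from the unconditional
    noise prediction and extrapolate toward the conditional one."""
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

# Toy noise predictions standing in for denoiser outputs (hypothetical values).
eps_uncond = np.array([0.0, 1.0, 2.0])
eps_cond = np.array([1.0, 1.0, 0.0])

guided = cfg_combine(eps_uncond, eps_cond, guidance_scale=2.0)
```

A scale of 1 reproduces the conditional prediction, a scale of 0 reproduces the unconditional one, and larger scales push the sample further toward the condition.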

Free-viewpoint Human Animation with Pose-correlated Reference Selection

Dec 23, 2024

Abstract: Diffusion-based human animation aims to animate a human character based on a source human image as well as driving signals such as a sequence of poses. Leveraging the generative capacity of diffusion models, existing approaches are able to generate high-fidelity poses, but struggle with significant viewpoint changes, especially in zoom-in/zoom-out scenarios where the camera-character distance varies. This limits applications such as cinematic shot type planning or camera control. We propose a pose-correlated reference selection diffusion network, supporting substantial viewpoint variations in human animation. Our key idea is to enable the network to utilize multiple reference images as input, since significant viewpoint changes often lead to missing appearance details on the human body. To reduce the computational cost, we first introduce a novel pose correlation module to compute similarities between non-aligned target and source poses, and then propose an adaptive reference selection strategy, utilizing the attention map to identify key regions for animation generation. To train our model, we curated a large dataset from public TED talks featuring varied shots of the same character, helping the model learn synthesis for different perspectives. Our experimental results show that with the same number of reference images, our model performs favorably compared to the current SOTA methods under large viewpoint change. We further show that the adaptive reference selection is able to choose the most relevant reference regions to generate humans under free viewpoints.

FaceLift: Single Image to 3D Head with View Generation and GS-LRM

Dec 23, 2024

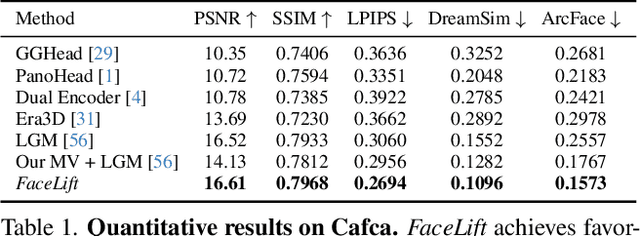

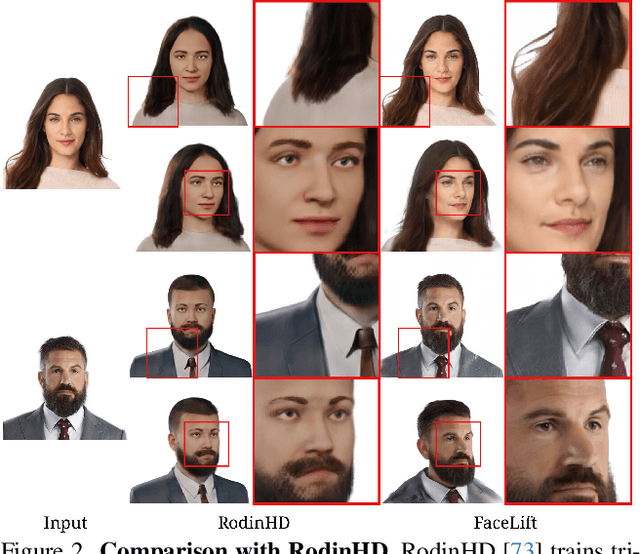

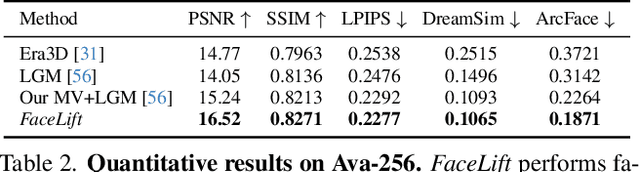

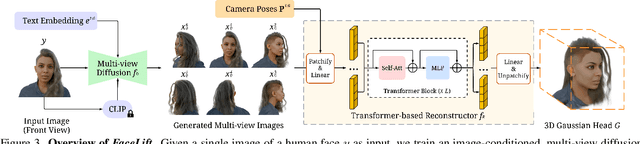

Abstract: We present FaceLift, a feed-forward approach for rapid, high-quality, 360-degree head reconstruction from a single image. Our pipeline begins by employing a multi-view latent diffusion model that generates consistent side and back views of the head from a single facial input. These generated views then serve as input to a GS-LRM reconstructor, which produces a comprehensive 3D representation using Gaussian splats. To train our system, we develop a dataset of multi-view renderings using synthetic 3D human head assets. The diffusion-based multi-view generator is trained exclusively on synthetic head images, while the GS-LRM reconstructor undergoes initial training on Objaverse followed by fine-tuning on synthetic head data. FaceLift excels at preserving identity and maintaining view consistency across views. Despite being trained solely on synthetic data, FaceLift demonstrates remarkable generalization to real-world images. Through extensive qualitative and quantitative evaluations, we show that FaceLift outperforms state-of-the-art methods in 3D head reconstruction, highlighting its practical applicability and robust performance on real-world images. In addition to single image reconstruction, FaceLift supports video inputs for 4D novel view synthesis and seamlessly integrates with 2D reanimation techniques to enable 3D facial animation. Project page: https://weijielyu.github.io/FaceLift.

MegaSynth: Scaling Up 3D Scene Reconstruction with Synthesized Data

Dec 18, 2024

Abstract: We propose scaling up 3D scene reconstruction by training with synthesized data. At the core of our work is MegaSynth, a procedurally generated 3D dataset comprising 700K scenes, over 50 times larger than the prior real dataset DL3DV, dramatically scaling the training data. To enable scalable data generation, our key idea is eliminating semantic information, removing the need to model complex semantic priors such as object affordances and scene composition. Instead, we model scenes with basic spatial structures and geometry primitives, offering scalability. In addition, we control data complexity to facilitate training while loosely aligning it with the real-world data distribution to benefit real-world generalization. We explore training LRMs with both MegaSynth and available real data. Experimental results show that joint training or pre-training with MegaSynth improves reconstruction quality by 1.2 to 1.8 dB PSNR across diverse image domains. Moreover, models trained solely on MegaSynth perform comparably to those trained on real data, underscoring the low-level nature of 3D reconstruction. Additionally, we provide an in-depth analysis of MegaSynth's properties for enhancing model capability, training stability, and generalization.
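To put the reported PSNR gains in context, the sketch below computes PSNR under its usual definition, 10 log10(MAX^2 / MSE), and converts a dB gain into the implied ratio of mean squared errors. The function names and the choice of a peak value of 1.0 are assumptions for illustration; the abstract does not describe the evaluation protocol.

```python
import numpy as np

def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB for images with peak value max_value."""
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(max_value ** 2 / mse)

def mse_ratio_for_gain(gain_db):
    """Ratio new_mse / old_mse implied by a PSNR gain of gain_db decibels,
    assuming the same peak value before and after."""
    return 10.0 ** (-gain_db / 10.0)

low = mse_ratio_for_gain(1.2)   # MSE ratio implied by a 1.2 dB gain
high = mse_ratio_for_gain(1.8)  # MSE ratio implied by a 1.8 dB gain
```

Under this definition, a gain of 1.2 to 1.8 dB corresponds to a mean squared error roughly 24% to 34% lower than the baseline.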

Generative Portrait Shadow Removal

Oct 07, 2024

Abstract: We introduce a high-fidelity portrait shadow removal model that can effectively enhance the image of a portrait by predicting its appearance under disturbing shadows and highlights. Portrait shadow removal is a highly ill-posed problem where multiple plausible solutions can be found based on a single image. While existing works have solved this problem by predicting the appearance residuals that can propagate local shadow distribution, such methods are often incomplete and lead to unnatural predictions, especially for portraits with hard shadows. We overcome the limitations of existing local propagation methods by formulating the removal problem as a generation task where a diffusion model learns to globally rebuild the human appearance from scratch, conditioned on an input portrait image. For robust and natural shadow removal, we propose to train the diffusion model with a compositional repurposing framework: a pre-trained text-guided image generation model is first fine-tuned to harmonize the lighting and color of the foreground with a background scene by using a background harmonization dataset; and then the model is further fine-tuned to generate a shadow-free portrait image via a shadow-paired dataset. To overcome the limitation of losing fine details in the latent diffusion model, we propose a guided-upsampling network to restore the original high-frequency details (wrinkles and dots) from the input image. To enable our compositional training framework, we construct a high-fidelity and large-scale dataset using a light stage capturing system and synthetic graphics simulation. Our generative framework effectively removes shadows caused by both self and external occlusions while maintaining the original lighting distribution and high-frequency details. Our method also demonstrates robustness to diverse subjects captured in real environments.

Learning Frame-Wise Emotion Intensity for Audio-Driven Talking-Head Generation

Sep 29, 2024

Abstract: Human emotional expression is inherently dynamic, complex, and fluid, characterized by smooth transitions in intensity throughout verbal communication. However, the modeling of such intensity fluctuations has been largely overlooked by previous audio-driven talking-head generation methods, which often results in static emotional outputs. In this paper, we explore how emotion intensity fluctuates during speech, proposing a method for capturing and generating these subtle shifts for talking-head generation. Specifically, we develop a talking-head framework that is capable of generating a variety of emotions with precise control over intensity levels. This is achieved by learning a continuous emotion latent space, where emotion types are encoded within latent orientations and emotion intensity is reflected in latent norms. In addition, to capture the dynamic intensity fluctuations, we adopt an audio-to-intensity predictor that considers the speaking tone, which reflects the intensity. The training signals for this predictor are obtained through our emotion-agnostic intensity pseudo-labeling method without the need for frame-wise intensity labeling. Extensive experiments and analyses validate the effectiveness of our proposed method in accurately capturing and reproducing emotion intensity fluctuations in talking-head generation, thereby significantly enhancing the expressiveness and realism of the generated outputs.
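The idea of encoding emotion type in the orientation of a latent vector and intensity in its norm can be sketched with plain vector arithmetic. This is only an illustration of that decomposition, not the paper's model; the helper names `split_latent` and `set_intensity` and the 2D toy vector are hypothetical.

```python
import numpy as np

def split_latent(z, eps=1e-8):
    """Split a latent vector into a unit direction (emotion type)
    and a scalar norm (emotion intensity)."""
    norm = float(np.linalg.norm(z))
    direction = z / max(norm, eps)  # eps guards against a zero vector
    return direction, norm

def set_intensity(z, intensity):
    """Keep the direction of z but rescale it to the requested norm."""
    direction, _ = split_latent(z)
    return direction * intensity

z = np.array([3.0, 4.0])                 # toy latent code
direction, intensity = split_latent(z)   # same emotion type, measured intensity
z_mild = set_intensity(z, 1.0)           # same emotion type, lower intensity
```

Rescaling the norm frame by frame while holding the direction fixed would then vary intensity without changing the emotion type, which is the kind of control the abstract describes.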

COMPOSE: Comprehensive Portrait Shadow Editing

Aug 25, 2024

Abstract: Existing portrait relighting methods struggle with precise control over facial shadows, particularly when faced with challenges such as handling hard shadows from directional light sources or adjusting shadows while remaining in harmony with existing lighting conditions. In many situations, completely altering input lighting is undesirable for portrait retouching applications: one may want to preserve some authenticity in the captured environment. Existing shadow editing methods typically restrict their application to just the facial region and often offer limited lighting control options, such as shadow softening or rotation. In this paper, we introduce COMPOSE: a novel shadow editing pipeline for human portraits, offering precise control over shadow attributes such as shape, intensity, and position, all while preserving the original environmental illumination of the portrait. This level of disentanglement and controllability is obtained thanks to a novel decomposition of the environment map representation into ambient light and an editable Gaussian dominant light source. COMPOSE is a four-stage pipeline that consists of light estimation and editing, light diffusion, shadow synthesis, and finally shadow editing. We define facial shadows as the result of a dominant light source, encoded using our novel Gaussian environment map representation. Utilizing an OLAT dataset, we have trained models to: (1) predict this light source representation from images, and (2) generate realistic shadows using this representation. We also demonstrate comprehensive and intuitive shadow editing with our pipeline. Through extensive quantitative and qualitative evaluations, we have demonstrated the robust capability of our system in shadow editing.
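The decomposition of an environment map into ambient light plus a single Gaussian dominant light can be sketched as follows. The parameterization here (an equirectangular grid, a lobe that falls off with angular distance from a center direction, and a constant ambient term) is an assumption for illustration; the abstract does not specify COMPOSE's exact representation, and `gaussian_env_map` is a hypothetical helper.

```python
import numpy as np

def gaussian_env_map(height, width, center_dir, sigma, intensity, ambient):
    """Build a single-channel equirectangular environment map as a constant
    ambient term plus one Gaussian lobe centered on center_dir."""
    # Spherical angles at pixel centers: theta is the polar angle from +z.
    theta = (np.arange(height) + 0.5) / height * np.pi
    phi = (np.arange(width) + 0.5) / width * 2.0 * np.pi
    theta, phi = np.meshgrid(theta, phi, indexing="ij")
    dirs = np.stack(
        [np.sin(theta) * np.cos(phi), np.sin(theta) * np.sin(phi), np.cos(theta)],
        axis=-1,
    )
    center = np.asarray(center_dir, dtype=np.float64)
    center = center / np.linalg.norm(center)
    # Angular distance between each pixel direction and the light center.
    angle = np.arccos(np.clip(dirs @ center, -1.0, 1.0))
    lobe = intensity * np.exp(-0.5 * (angle / sigma) ** 2)
    return ambient + lobe

# Editing the light amounts to changing a few scalars (hypothetical values).
env = gaussian_env_map(32, 64, center_dir=[0.0, 0.0, 1.0],
                       sigma=0.3, intensity=5.0, ambient=0.2)
```

In this toy form, moving `center_dir` shifts the shadow position, `sigma` controls how soft the light is, and `intensity` controls its strength, while the ambient term is left untouched.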

Perm: A Parametric Representation for Multi-Style 3D Hair Modeling

Jul 31, 2024

Abstract: We present Perm, a learned parametric model of human 3D hair designed to facilitate various hair-related applications. Unlike previous work that jointly models the global hair shape and local strand details, we propose to disentangle them using a PCA-based strand representation in the frequency domain, thereby allowing more precise editing and output control. Specifically, we leverage our strand representation to fit and decompose hair geometry textures into low- to high-frequency hair structures. These decomposed textures are later parameterized with different generative models, emulating common stages in the hair modeling process. We conduct extensive experiments to validate the architecture design of Perm, and finally deploy the trained model as a generic prior to solve task-agnostic problems, further showcasing its flexibility and superiority in tasks such as 3D hair parameterization, hairstyle interpolation, single-view hair reconstruction, and hair-conditioned image generation. Our code and data will be available at: https://github.com/c-he/perm.
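A PCA-based strand representation in the frequency domain could look roughly like the sketch below: each strand polyline is transformed with a real FFT along its length, and PCA is fit on the resulting coefficients. This is a minimal sketch under assumed details (strands as fixed-length 3D polylines, PCA via SVD, random-walk toy data); it is not taken from the Perm code, and all function names are hypothetical.

```python
import numpy as np

def strands_to_frequency(strands):
    """strands: (num_strands, num_points, 3) -> real feature matrix of
    stacked real and imaginary FFT coefficients per strand."""
    coeffs = np.fft.rfft(strands, axis=1)
    stacked = np.concatenate([coeffs.real, coeffs.imag], axis=-1)
    return stacked.reshape(len(strands), -1)

def frequency_to_strands(features, num_points):
    """Inverse of strands_to_frequency."""
    stacked = features.reshape(features.shape[0], -1, 6)
    coeffs = stacked[..., :3] + 1j * stacked[..., 3:]
    return np.fft.irfft(coeffs, n=num_points, axis=1)

def fit_pca(features, num_components):
    """Return the feature mean and the top principal directions (rows)."""
    mean = features.mean(axis=0)
    _, _, vt = np.linalg.svd(features - mean, full_matrices=False)
    return mean, vt[:num_components]

def encode(features, mean, basis):
    return (features - mean) @ basis.T

def decode(codes, mean, basis):
    return codes @ basis + mean

# Toy strands: random walks standing in for real hair data.
rng = np.random.default_rng(0)
strands = rng.normal(size=(50, 16, 3)).cumsum(axis=1)

features = strands_to_frequency(strands)
mean, basis = fit_pca(features, num_components=8)
codes = encode(features, mean, basis)   # compact per-strand parameters
approx = frequency_to_strands(decode(codes, mean, basis), num_points=16)
```

Keeping only a few components gives a compact, editable code per strand, and working on FFT coefficients makes it straightforward to treat low and high frequencies separately.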

