Yutong Li

VISTA-PATH: An interactive foundation model for pathology image segmentation and quantitative analysis in computational pathology

Jan 23, 2026

Abstract:Accurate semantic segmentation of histopathology images is crucial for quantitative tissue analysis and downstream clinical modeling. Recent segmentation foundation models have improved generalization through large-scale pretraining, yet remain poorly aligned with pathology because they treat segmentation as a static visual prediction task. Here we present VISTA-PATH, an interactive, class-aware pathology segmentation foundation model designed to resolve heterogeneous structures, incorporate expert feedback, and produce pixel-level segmentations that are directly meaningful for clinical interpretation. VISTA-PATH jointly conditions segmentation on visual context, semantic tissue descriptions, and optional expert-provided spatial prompts, enabling precise multi-class segmentation across heterogeneous pathology images. To support this paradigm, we curate VISTA-PATH Data, a large-scale pathology segmentation corpus comprising over 1.6 million image-mask-text triplets spanning 9 organs and 93 tissue classes. Across extensive held-out and external benchmarks, VISTA-PATH consistently outperforms existing segmentation foundation models. Importantly, VISTA-PATH supports dynamic human-in-the-loop refinement by propagating sparse, patch-level bounding-box annotation feedback into whole-slide segmentation. Finally, we show that the high-fidelity, class-aware segmentation produced by VISTA-PATH makes it a preferred model for computational pathology. It improves tissue microenvironment analysis through the proposed Tumor Interaction Score (TIS), which exhibits strong and significant associations with patient survival. Together, these results establish VISTA-PATH as a foundation model that elevates pathology image segmentation from a static prediction to an interactive and clinically grounded representation for digital pathology. Source code and demo can be found at https://github.com/zhihuanglab/VISTA-PATH.

PRISM: Personalized Recommendation via Information Synergy Module

Jan 16, 2026

Abstract:Multimodal sequential recommendation (MSR) leverages diverse item modalities to improve recommendation accuracy, but achieving effective and adaptive fusion remains challenging. Existing MSR models often overlook synergistic information that emerges only through modality combinations. Moreover, they typically assume a fixed importance for different modality interactions across users. To address these limitations, we propose Personalized Recommendation via Information Synergy Module (PRISM), a plug-and-play framework for sequential recommendation (SR). PRISM explicitly decomposes multimodal information into unique, redundant, and synergistic components through an Interaction Expert Layer and dynamically weights them via an Adaptive Fusion Layer guided by user preferences. This information-theoretic design enables fine-grained disentanglement and personalized fusion of multimodal signals. Extensive experiments on four datasets and three SR backbones demonstrate its effectiveness and versatility. The code is available at https://github.com/YutongLi2024/PRISM.
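To make the idea of user-guided weighting concrete, the following is a minimal sketch of one way three component embeddings could be fused with softmax weights derived from a user preference vector. It is not the PRISM implementation: the function `adaptive_fusion`, the dot-product scoring, and the toy two-dimensional embeddings are all assumptions made for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def adaptive_fusion(components, user_pref):
    """Hypothetical fusion step: score each component embedding against the
    user vector, turn the scores into weights, and return the weighted sum."""
    names = list(components)
    weights = softmax([dot(components[n], user_pref) for n in names])
    dim = len(user_pref)
    fused = [
        sum(w * components[n][i] for w, n in zip(weights, names))
        for i in range(dim)
    ]
    return dict(zip(names, weights)), fused


# Toy embeddings standing in for the three information components.
components = {
    "unique": [1.0, 0.0],
    "redundant": [0.0, 1.0],
    "synergistic": [1.0, 1.0],
}
weights, fused = adaptive_fusion(components, user_pref=[0.5, 0.5])
print(weights)
print(fused)
```

In this toy case the synergistic component aligns best with the user vector and therefore receives the largest weight; a different user vector would shift the weights, which is the sense in which the fusion is personalized.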

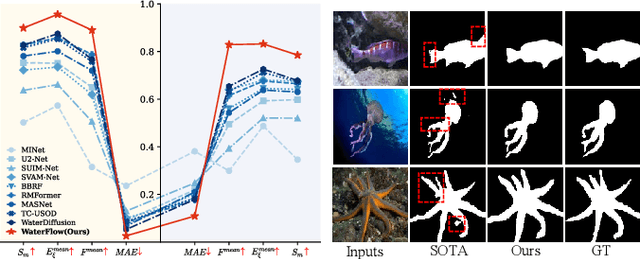

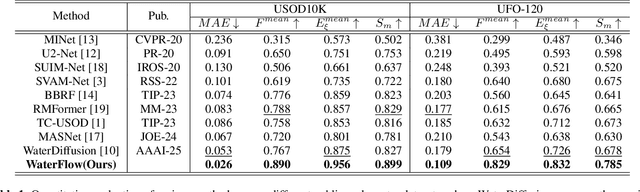

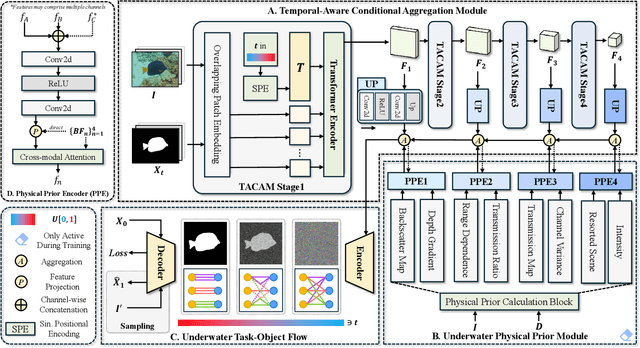

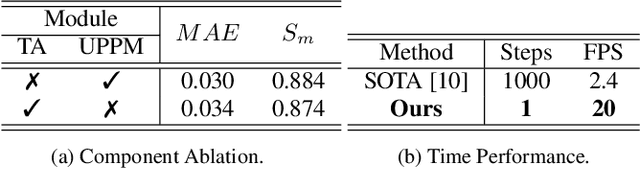

WaterFlow: Explicit Physics-Prior Rectified Flow for Underwater Saliency Mask Generation

Oct 14, 2025

Abstract:Underwater Salient Object Detection (USOD) faces significant challenges, including underwater image quality degradation and domain gaps. Existing methods tend to ignore the physical principles of underwater imaging or simply treat degradation phenomena in underwater images as interference factors that must be eliminated, failing to fully exploit the valuable information they contain. We propose WaterFlow, a rectified flow-based framework for underwater salient object detection that incorporates underwater physical imaging information as explicit priors directly into the network training process and introduces temporal dimension modeling, significantly enhancing the model's capability for salient object identification. On the USOD10K dataset, WaterFlow achieves a 0.072 gain in S_m, demonstrating the effectiveness of our method. The code will be published after acceptance.
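For readers unfamiliar with rectified flow, the sketch below shows the generic mechanics only: a straight-line interpolation between a noise sample and a target, the constant velocity that serves as the regression target, and Euler integration at sampling time. It does not model WaterFlow's physics priors or its network; the helper names and the three-element "mask" are illustrative assumptions, and an oracle velocity replaces the learned model.

```python
def interpolate(x0, x1, t):
    """Point on the straight path from x0 (noise) to x1 (target) at time t."""
    return [(1 - t) * a + t * b for a, b in zip(x0, x1)]


def velocity_target(x0, x1):
    """Rectified-flow regression target: the constant velocity x1 - x0."""
    return [b - a for a, b in zip(x0, x1)]


def euler_sample(x0, velocity_fn, steps=4):
    """Integrate dx/dt = v(x, t) from t=0 to t=1 with fixed Euler steps."""
    x = list(x0)
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity_fn(x, k * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


noise = [0.0, 1.0, -1.0]   # stand-in for a noise sample
mask = [1.0, 0.0, 1.0]     # stand-in for a flattened saliency mask
target = velocity_target(noise, mask)
midpoint = interpolate(noise, mask, 0.5)

# With an oracle velocity field the Euler sampler recovers the mask;
# a trained network would approximate this field instead.
sample = euler_sample(noise, lambda x, t: target)
print(midpoint, sample)
```

Because the path is a straight line, even a few Euler steps suffice when the velocity is accurate, which is the usual motivation for rectified flow over curved diffusion trajectories.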

Embodied Arena: A Comprehensive, Unified, and Evolving Evaluation Platform for Embodied AI

Sep 18, 2025

Abstract:Embodied AI development significantly lags behind large foundation models due to three critical challenges: (1) lack of systematic understanding of core capabilities needed for Embodied AI, leaving research without clear objectives; (2) absence of unified and standardized evaluation systems, rendering cross-benchmark evaluation infeasible; and (3) underdeveloped automated and scalable acquisition methods for embodied data, creating critical bottlenecks for model scaling. To address these obstacles, we present Embodied Arena, a comprehensive, unified, and evolving evaluation platform for Embodied AI. Our platform establishes a systematic embodied capability taxonomy spanning three levels (perception, reasoning, task execution), seven core capabilities, and 25 fine-grained dimensions, enabling unified evaluation with systematic research objectives. We introduce a standardized evaluation system built upon unified infrastructure supporting flexible integration of 22 diverse benchmarks across three domains (2D/3D Embodied Q&A, Navigation, Task Planning) and 30+ advanced models from 20+ worldwide institutes. Additionally, we develop a novel LLM-driven automated generation pipeline ensuring scalable embodied evaluation data with continuous evolution for diversity and comprehensiveness. Embodied Arena publishes three real-time leaderboards (Embodied Q&A, Navigation, Task Planning) with dual perspectives (benchmark view and capability view), providing comprehensive overviews of advanced model capabilities. In particular, we present nine findings summarized from the evaluation results on the leaderboards of Embodied Arena. These findings help to establish clear research directions and pinpoint critical research problems, thereby driving forward progress in the field of Embodied AI.

FBI: Learning Dexterous In-hand Manipulation with Dynamic Visuotactile Shortcut Policy

Aug 20, 2025

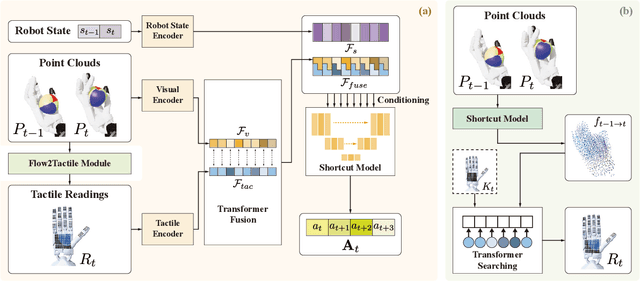

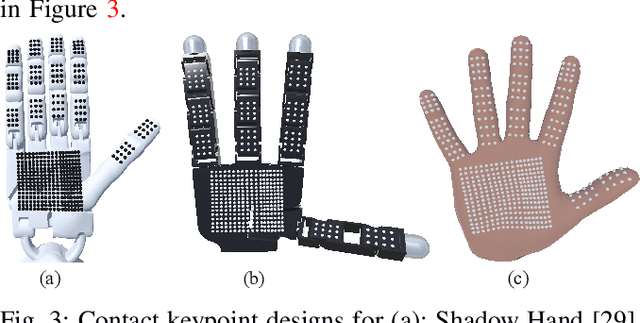

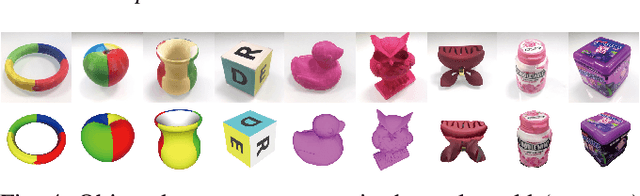

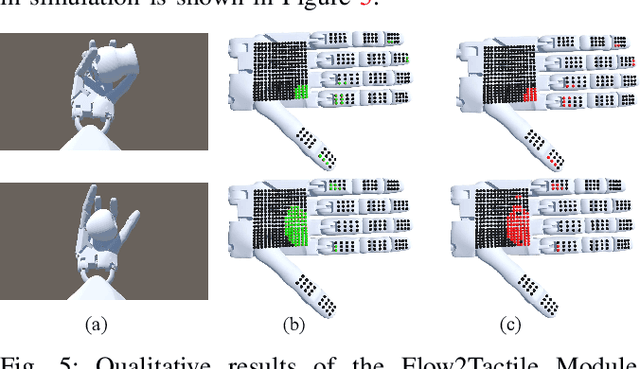

Abstract:Dexterous in-hand manipulation is a long-standing challenge in robotics due to complex contact dynamics and partial observability. While humans synergize vision and touch for such tasks, robotic approaches often prioritize one modality, thereby limiting adaptability. This paper introduces Flow Before Imitation (FBI), a visuotactile imitation learning framework that dynamically fuses tactile interactions with visual observations through motion dynamics. Unlike prior static fusion methods, FBI establishes a causal link between tactile signals and object motion via a dynamics-aware latent model. FBI employs a transformer-based interaction module to fuse flow-derived tactile features with visual inputs, training a one-step diffusion policy for real-time execution. Extensive experiments demonstrate that the proposed method outperforms the baseline methods in both simulation and the real world on two customized in-hand manipulation tasks and three standard dexterous manipulation tasks. Code, models, and more results are available on the website https://sites.google.com/view/dex-fbi.

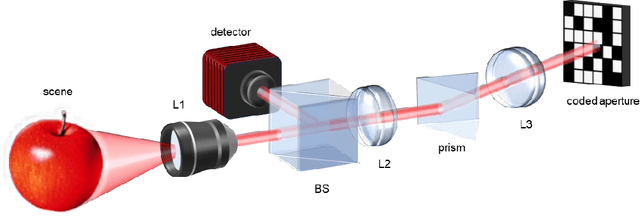

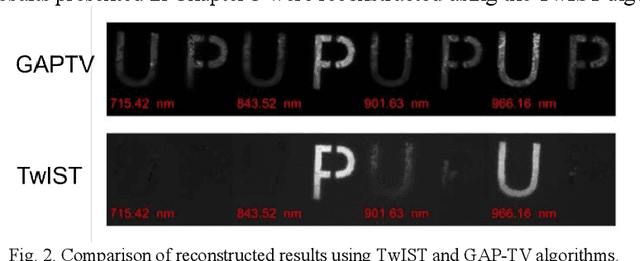

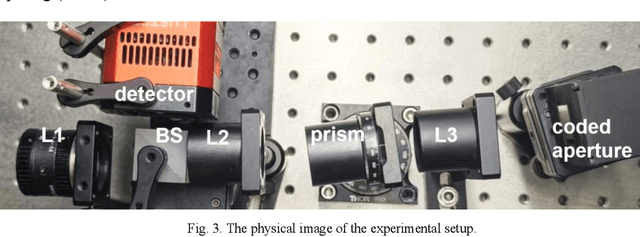

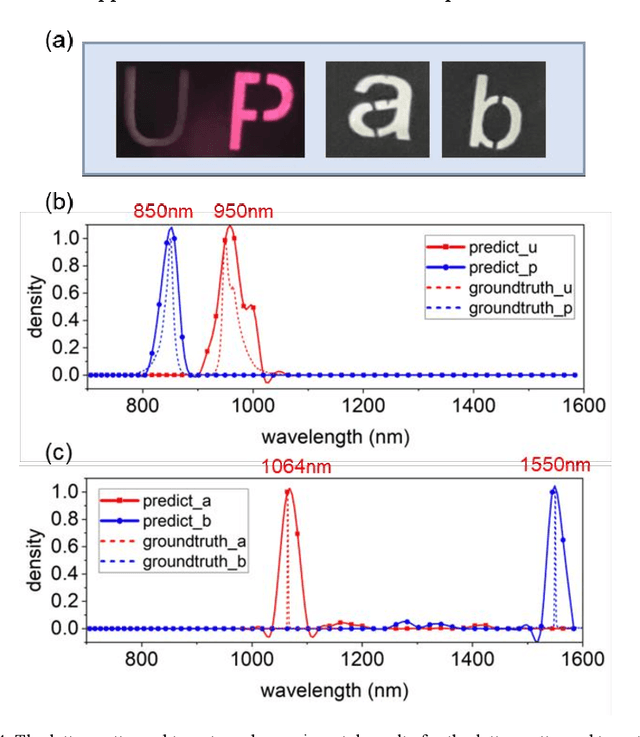

Broadband Near-Infrared Compressive Spectral Imaging System with Reflective Structure

Aug 20, 2025

Abstract:Near-infrared (NIR) hyperspectral imaging has become a critical tool in modern analytical science. However, conventional NIR hyperspectral imaging systems face challenges including high cost, bulky instrumentation, and inefficient data collection. In this work, we demonstrate a broadband NIR compressive spectral imaging system that is capable of capturing hyperspectral data covering a broad spectral bandwidth ranging from 700 to 1600 nm. By segmenting wavelengths and designing specialized optical components, our design overcomes hardware spectral limitations to capture broadband data, while the reflective optical structure makes the system compact. This approach provides a novel technical solution for NIR hyperspectral imaging.

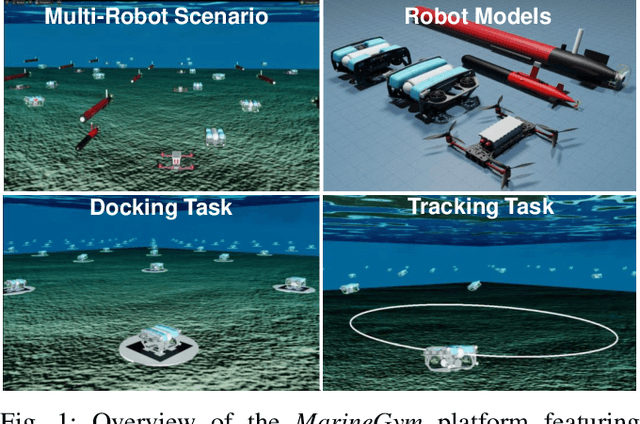

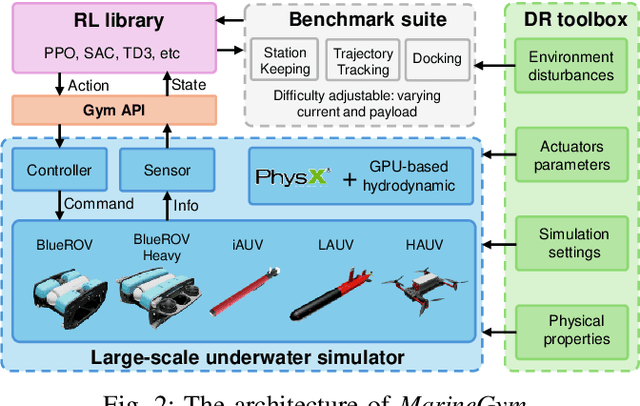

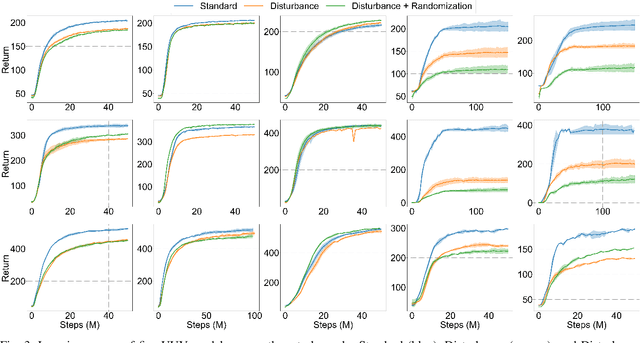

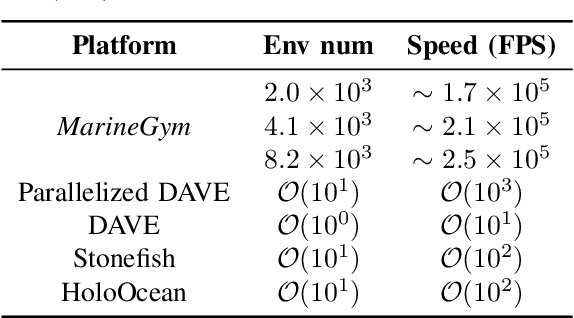

MarineGym: A High-Performance Reinforcement Learning Platform for Underwater Robotics

Mar 12, 2025

Abstract:This work presents MarineGym, a high-performance reinforcement learning (RL) platform specifically designed for underwater robotics. It aims to address the limitations of existing underwater simulation environments in terms of RL compatibility, training efficiency, and standardized benchmarking. MarineGym integrates a proposed GPU-accelerated hydrodynamic plugin based on Isaac Sim, achieving a rollout speed of 250,000 frames per second on a single NVIDIA RTX 3060 GPU. It also provides five models of unmanned underwater vehicles (UUVs), multiple propulsion systems, and a set of predefined tasks covering core underwater control challenges. Additionally, the DR toolkit allows flexible adjustment of simulation and task parameters during training to improve Sim2Real transfer. Further benchmark experiments demonstrate that MarineGym improves training efficiency over existing platforms and supports robust policy adaptation under various perturbations. We expect that this platform can drive further advancements in RL research for underwater robotics. For more details about MarineGym and its applications, please visit our project page: https://marine-gym.com/.

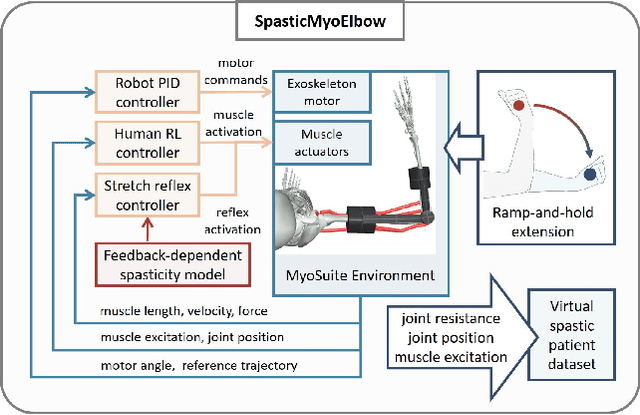

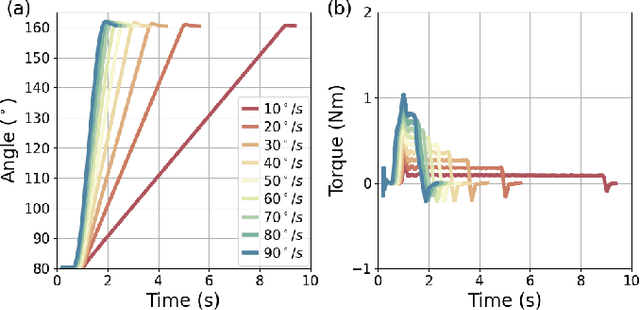

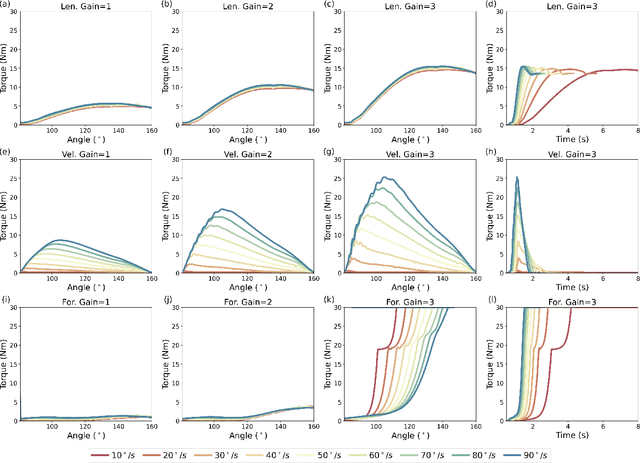

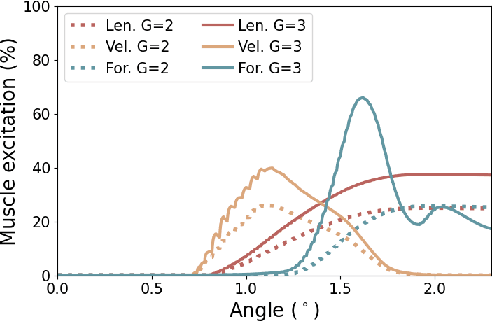

SpasticMyoElbow: Physical Human-Robot Interaction Simulation Framework for Modelling Elbow Spasticity

Dec 06, 2024

Abstract:Robotic devices hold great potential for efficient and reliable assessment of neuromotor abnormalities in post-stroke patients. However, spasticity caused by stroke is still assessed manually in clinical settings. The limited and variable nature of data collected from patients has long posed a major barrier to quantitatively modelling spasticity with robotic measurements and fully validating robotic assessment techniques. This paper presents a simulation framework developed to support the design and validation of elbow spasticity models and mitigate data problems. The framework consists of a simulation environment of robot-assisted spasticity assessment, two motion controllers for the robot and human models, and a stretch reflex controller. Our framework allows simulation based on synthetic data without experimental data from human subjects. Using this framework, we replicated the constant-velocity stretch experiment typically used in robot-assisted spasticity assessment and evaluated four types of spasticity models. Our results show that a spasticity reflex model incorporating feedback on both muscle fibre velocity and length more accurately captures joint resistance characteristics during passive elbow stretching in spastic patients than a force-dependent model. When integrated with an appropriate spasticity model, this simulation framework has the potential to generate extensive datasets of virtual patients for future research on spasticity assessment.
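As a rough illustration of what a reflex model with feedback on both muscle fibre velocity and length might look like, the sketch below uses a simple threshold-and-gain form. This is not the model from the paper: the function name, the linear form, the gains, and the thresholds are all hypothetical placeholders chosen only to show how the two feedback terms combine.

```python
def reflex_activation(fibre_velocity, fibre_length,
                      gain_v=0.5, gain_l=2.0,
                      v_threshold=0.1, l_threshold=1.0):
    """Hypothetical stretch-reflex activation in [0, 1].

    Each term contributes only when its signal exceeds a threshold
    (normalised units assumed); the sum is clipped to a valid activation.
    """
    velocity_term = gain_v * max(0.0, fibre_velocity - v_threshold)
    length_term = gain_l * max(0.0, fibre_length - l_threshold)
    return min(1.0, velocity_term + length_term)


# Constant-velocity stretch: velocity is fixed while length grows.
lengths = [0.9, 1.0, 1.1, 1.2]
slow = [reflex_activation(0.2, l) for l in lengths]
fast = [reflex_activation(1.0, l) for l in lengths]
print(slow)
print(fast)
```

Under these assumed parameters, a faster stretch yields higher activation at every length, and activation rises further once the fibre is stretched past the length threshold, which mirrors the velocity- and length-dependent resistance the abstract describes.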

Dynamic Reconstruction of Hand-Object Interaction with Distributed Force-aware Contact Representation

Nov 14, 2024

Abstract:We present ViTaM-D, a novel visual-tactile framework for dynamic hand-object interaction reconstruction, integrating distributed tactile sensing for more accurate contact modeling. While existing methods focus primarily on visual inputs, they struggle to capture detailed contact interactions such as object deformation. Our approach leverages distributed tactile sensors to address this limitation by introducing DF-Field. This distributed force-aware contact representation models both kinetic and potential energy in hand-object interaction. ViTaM-D first reconstructs hand-object interactions using a visual-only network, VDT-Net, and then refines contact details through a force-aware optimization (FO) process, enhancing object deformation modeling. To benchmark our approach, we introduce the HOT dataset, which features 600 sequences of hand-object interactions, including deformable objects, built in a high-precision simulation environment. Extensive experiments on both the DexYCB and HOT datasets demonstrate significant improvements in accuracy over previous state-of-the-art methods such as gSDF and HOTrack. Our results highlight the superior performance of ViTaM-D in both rigid and deformable object reconstruction, as well as the effectiveness of DF-Field in refining hand poses. This work offers a comprehensive solution to dynamic hand-object interaction reconstruction by seamlessly integrating visual and tactile data. Code, models, and datasets will be made available.

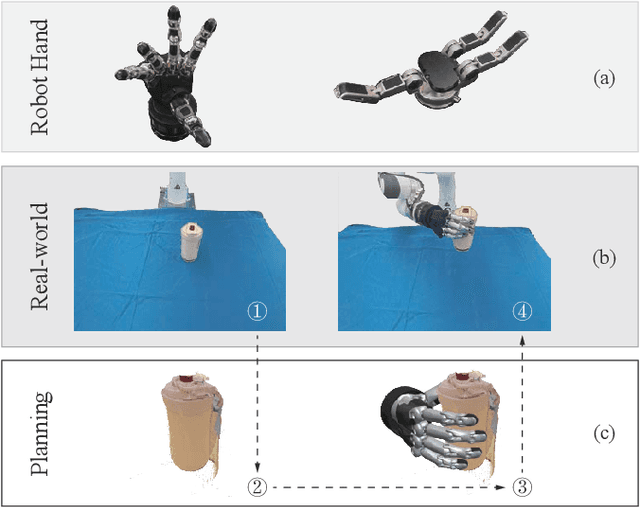

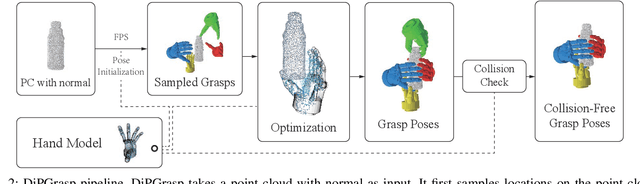

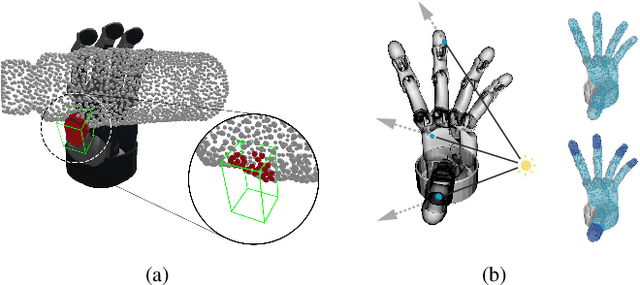

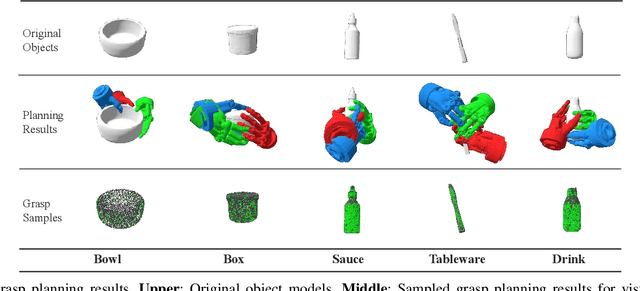

DiPGrasp: Parallel Local Searching for Efficient Differentiable Grasp Planning

Aug 08, 2024

Abstract:Grasp planning is an important task for robotic manipulation. Though it is a richly studied area, a standalone, fast, and differentiable grasp planner that can work with robot grippers of different DOFs has not been reported. In this work, we present DiPGrasp, a grasp planner that satisfies all these goals. DiPGrasp uses a force-closure geometric surface matching grasp quality metric. It adopts a gradient-based optimization scheme on the metric, which also considers parallel sampling and collision handling. This not only drastically accelerates the grasp search process over the object surface but also makes it differentiable. We apply DiPGrasp to three applications, namely grasp dataset construction, mask-conditioned planning, and pose refinement. For dataset generation, as a standalone planner, DiPGrasp has clear advantages in speed and quality compared with several classic planners. For mask-conditioned planning, it can turn a 3D perception model into a 3D grasp detection model instantly. As a pose refiner, it can optimize the coarse grasp prediction from the neural network, as well as the neural network parameters. Finally, we conduct real-world experiments with the Barrett hand and Schunk SVH 5-finger hand. Video and supplementary materials can be viewed on our website: https://dipgrasp.robotflow.ai.
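The general pattern of parallel local searching with gradient-based optimization can be sketched on a toy problem: start from several initial samples, run gradient descent on each, and keep the best result. The one-dimensional cost below is a stand-in chosen for illustration and has nothing to do with DiPGrasp's grasp quality metric; the function names, learning rate, and start points are assumptions.

```python
def cost(x):
    """Toy differentiable objective with two local minima."""
    return (x * x - 1.0) ** 2 + 0.3 * x


def grad(x):
    """Analytic derivative of the toy cost."""
    return 4.0 * x * (x * x - 1.0) + 0.3


def parallel_local_search(starts, lr=0.05, steps=200):
    """Run gradient descent from every start point and return the best one.

    The candidates are updated together each step, mimicking a batched
    (parallel) search; a real planner would vectorise this on a GPU.
    """
    candidates = list(starts)
    for _ in range(steps):
        candidates = [x - lr * grad(x) for x in candidates]
    best = min(candidates, key=cost)
    return best, candidates


best_x, finals = parallel_local_search([-2.0, -0.5, 0.5, 2.0])
print(best_x, cost(best_x))
print(finals)
```

Different starts settle into different local minima, so running many of them side by side and selecting the lowest cost is what lets a purely local, differentiable optimizer still find a good solution.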
