Jieyi Zhang

DexTOG: Learning Task-Oriented Dexterous Grasp with Language

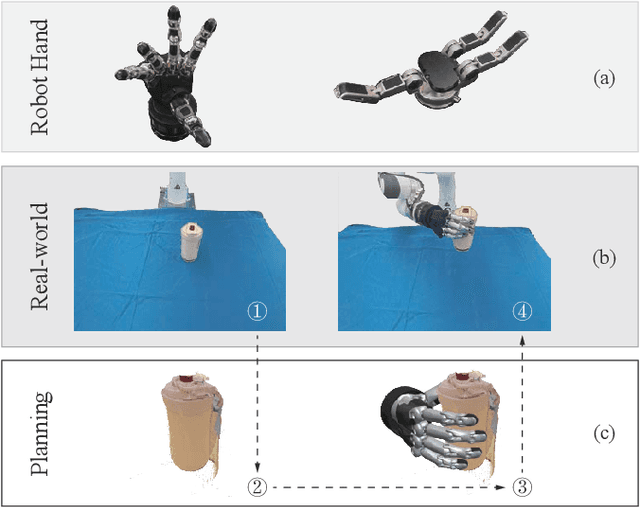

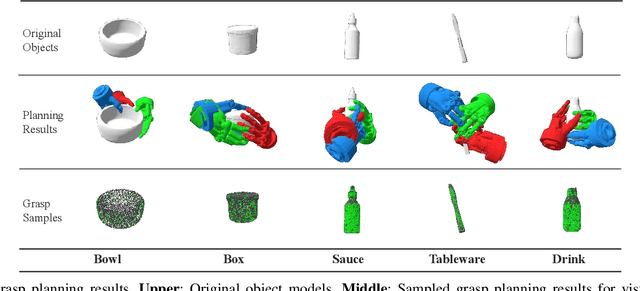

Apr 06, 2025

Abstract:This study introduces a novel language-guided diffusion-based learning framework, DexTOG, aimed at advancing the field of task-oriented grasping (TOG) with dexterous hands. Unlike existing methods that mainly focus on 2-finger grippers, this research addresses the complexities of dexterous manipulation, where the system must identify non-unique optimal grasp poses under specific task constraints, cater to multiple valid grasps, and search a high degree-of-freedom configuration space during grasp planning. The proposed DexTOG includes a diffusion-based grasp pose generation model, DexDiffu, and a data engine that supports DexDiffu. By leveraging DexTOG, we also propose a new dataset, DexTOG-80K, which was developed using a Shadow robot hand to perform various tasks on 80 objects from 5 categories, showcasing the dexterity and multi-tasking capabilities of the robotic hand. This research not only presents a significant leap in dexterous TOG but also provides a comprehensive dataset and simulation validation, setting a new benchmark in robotic manipulation research.

DiPGrasp: Parallel Local Searching for Efficient Differentiable Grasp Planning

Aug 08, 2024

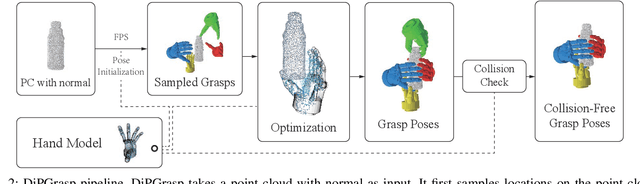

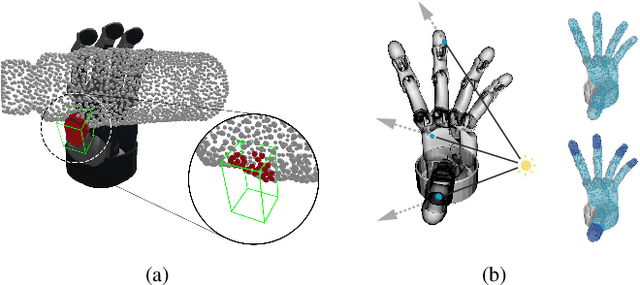

Abstract:Grasp planning is an important task for robotic manipulation. Though it is a richly studied area, a standalone, fast, and differentiable grasp planner that can work with robot grippers of different DOFs has not been reported. In this work, we present DiPGrasp, a grasp planner that satisfies all these goals. DiPGrasp uses a force-closure geometric surface matching metric as its grasp quality metric. It adopts a gradient-based optimization scheme on the metric, which also considers parallel sampling and collision handling. This not only drastically accelerates the grasp search process over the object surface but also makes it differentiable. We apply DiPGrasp to three applications, namely grasp dataset construction, mask-conditioned planning, and pose refinement. For dataset generation, as a standalone planner, DiPGrasp has clear advantages in speed and quality compared with several classic planners. For mask-conditioned planning, it can turn a 3D perception model into a 3D grasp detection model instantly. As a pose refiner, it can optimize the coarse grasp prediction from the neural network, as well as the neural network parameters. Finally, we conduct real-world experiments with the Barrett hand and the Schunk SVH 5-finger hand. Video and supplementary materials can be viewed on our website: https://dipgrasp.robotflow.ai.

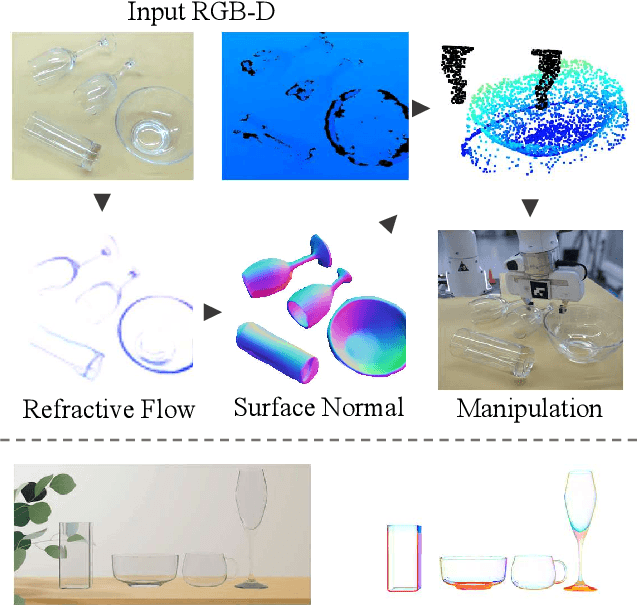

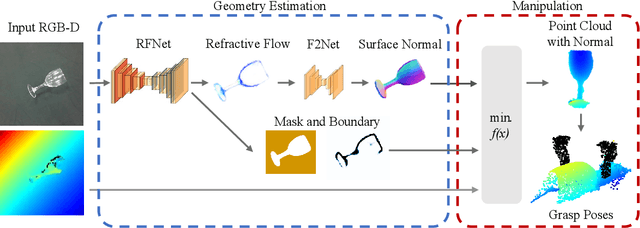

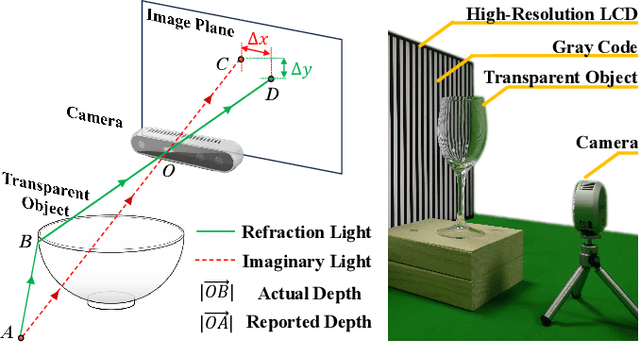

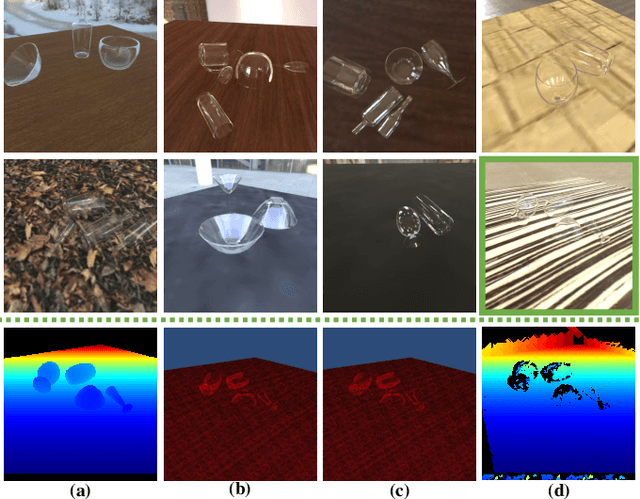

RFTrans: Leveraging Refractive Flow of Transparent Objects for Surface Normal Estimation and Manipulation

Nov 21, 2023

Abstract:Transparent objects are widely used in our daily lives, making it important to teach robots to interact with them. However, this is not easy because reflective and refractive effects can cause RGB-D cameras to give inaccurate geometry measurements. To solve this problem, this paper introduces RFTrans, an RGB-D-based method for surface normal estimation and manipulation of transparent objects. By leveraging refractive flow as an intermediate representation, RFTrans circumvents the drawbacks of directly predicting the geometry (e.g. surface normal) from RGB images and helps bridge the sim-to-real gap. RFTrans integrates RFNet, which predicts refractive flow, object mask, and boundaries, followed by F2Net, which estimates the surface normal from the refractive flow. To make manipulation possible, a global optimization module takes in the predictions, refines the raw depth, and constructs the point cloud with normals. An analytical grasp planning algorithm, ISF, then generates the grasp poses. We build a synthetic dataset with physically plausible ray-tracing rendering techniques to train the networks. Results show that RFTrans trained on the synthetic dataset consistently outperforms the baseline ClearGrasp by a large margin in both synthetic and real-world benchmarks. Finally, a real-world robot grasping task achieves an 83% success rate, indicating that refractive flow can help enable direct sim-to-real transfer. The code, data, and supplementary materials are available at https://rftrans.robotflow.ai.

GarmentTracking: Category-Level Garment Pose Tracking

Mar 24, 2023

Abstract:Garments are important to humans. A visual system that can estimate and track the complete garment pose can be useful for many downstream tasks and real-world applications. In this work, we present a complete package to address the category-level garment pose tracking task: (1) A recording system, VR-Garment, with which users can manipulate virtual garment models in simulation through a VR interface. (2) A large-scale dataset, VR-Folding, with complex garment pose configurations in manipulation tasks such as flattening and folding. (3) An end-to-end online tracking framework, GarmentTracking, which predicts the complete garment pose in both canonical space and task space given a point cloud sequence. Extensive experiments demonstrate that the proposed GarmentTracking achieves great performance even when the garment has large non-rigid deformation. It outperforms the baseline approach in both speed and accuracy. We hope our proposed solution can serve as a platform for future research. Code and datasets are available at https://garment-tracking.robotflow.ai.
