Zhenjun Yu

FBI: Learning Dexterous In-hand Manipulation with Dynamic Visuotactile Shortcut Policy

Aug 20, 2025

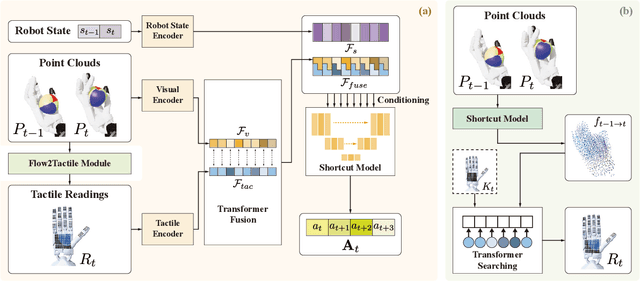

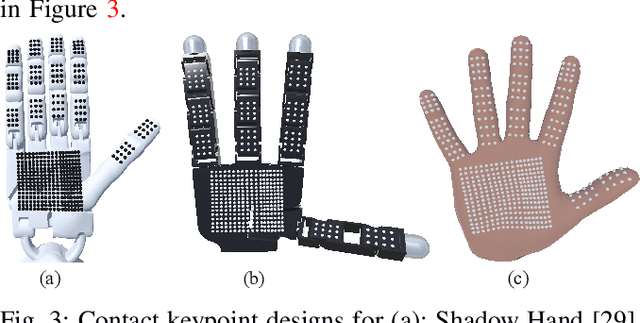

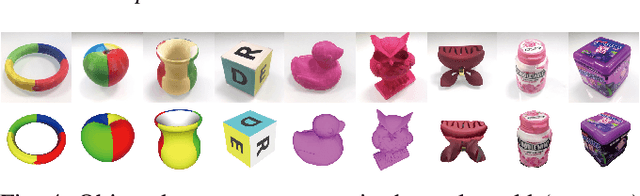

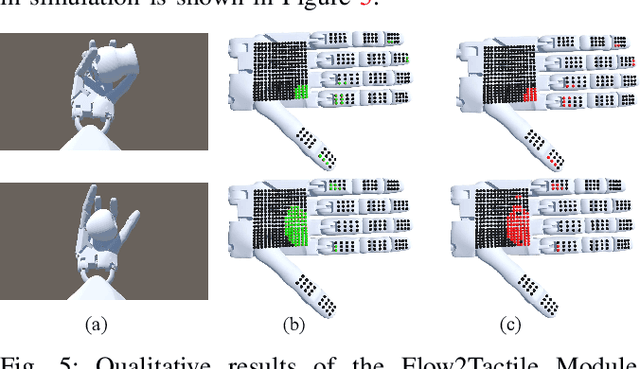

Abstract: Dexterous in-hand manipulation is a long-standing challenge in robotics due to complex contact dynamics and partial observability. While humans synergize vision and touch for such tasks, robotic approaches often prioritize one modality, thereby limiting adaptability. This paper introduces Flow Before Imitation (FBI), a visuotactile imitation learning framework that dynamically fuses tactile interactions with visual observations through motion dynamics. Unlike prior static fusion methods, FBI establishes a causal link between tactile signals and object motion via a dynamics-aware latent model. FBI employs a transformer-based interaction module to fuse flow-derived tactile features with visual inputs, training a one-step diffusion policy for real-time execution. Extensive experiments demonstrate that the proposed method outperforms the baseline methods in both simulation and the real world on two customized in-hand manipulation tasks and three standard dexterous manipulation tasks. Code, models, and more results are available on the website https://sites.google.com/view/dex-fbi.

AgentWorld: An Interactive Simulation Platform for Scene Construction and Mobile Robotic Manipulation

Aug 13, 2025

Abstract: We introduce AgentWorld, an interactive simulation platform for developing household mobile manipulation capabilities. Our platform combines automated scene construction that encompasses layout generation, semantic asset placement, visual material configuration, and physics simulation, with a dual-mode teleoperation system supporting both wheeled bases and humanoid locomotion policies for data collection. The resulting AgentWorld Dataset captures diverse tasks ranging from primitive actions (pick-and-place, push-pull, etc.) to multistage activities (serve drinks, heat up food, etc.) across living rooms, bedrooms, and kitchens. Through extensive benchmarking of imitation learning methods including behavior cloning, action chunking transformers, diffusion policies, and vision-language-action models, we demonstrate the dataset's effectiveness for sim-to-real transfer. The integrated system provides a comprehensive solution for scalable robotic skill acquisition in complex home environments, bridging the gap between simulation-based training and real-world deployment. The code and datasets will be available at https://yizhengzhang1.github.io/agent_world/

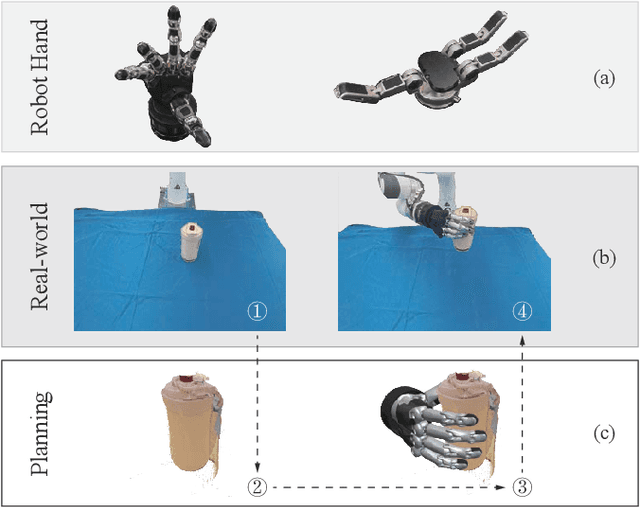

DexTOG: Learning Task-Oriented Dexterous Grasp with Language

Apr 06, 2025

Abstract: This study introduces a novel language-guided diffusion-based learning framework, DexTOG, aimed at advancing the field of task-oriented grasping (TOG) with dexterous hands. Unlike existing methods that mainly focus on 2-finger grippers, this research addresses the complexities of dexterous manipulation, where the system must identify non-unique optimal grasp poses under specific task constraints, cater to multiple valid grasps, and search in a high degree-of-freedom configuration space in grasp planning. The proposed DexTOG includes a diffusion-based grasp pose generation model, DexDiffu, and a data engine to support DexDiffu. By leveraging DexTOG, we also propose a new dataset, DexTOG-80K, which was developed using a shadow robot hand to perform various tasks on 80 objects from 5 categories, showcasing the dexterity and multi-tasking capabilities of the robotic hand. This research not only presents a significant leap in dexterous TOG but also provides a comprehensive dataset and simulation validation, setting a new benchmark in robotic manipulation research.

Dynamic Reconstruction of Hand-Object Interaction with Distributed Force-aware Contact Representation

Nov 14, 2024

Abstract: We present ViTaM-D, a novel visual-tactile framework for dynamic hand-object interaction reconstruction, integrating distributed tactile sensing for more accurate contact modeling. While existing methods focus primarily on visual inputs, they struggle with capturing detailed contact interactions such as object deformation. Our approach leverages distributed tactile sensors to address this limitation by introducing DF-Field. This distributed force-aware contact representation models both kinetic and potential energy in hand-object interaction. ViTaM-D first reconstructs hand-object interactions using a visual-only network, VDT-Net, and then refines contact details through a force-aware optimization (FO) process, enhancing object deformation modeling. To benchmark our approach, we introduce the HOT dataset, which features 600 sequences of hand-object interactions, including deformable objects, built in a high-precision simulation environment. Extensive experiments on both the DexYCB and HOT datasets demonstrate significant improvements in accuracy over previous state-of-the-art methods such as gSDF and HOTrack. Our results highlight the superior performance of ViTaM-D in both rigid and deformable object reconstruction, as well as the effectiveness of DF-Field in refining hand poses. This work offers a comprehensive solution to dynamic hand-object interaction reconstruction by seamlessly integrating visual and tactile data. Code, models, and datasets will be available.

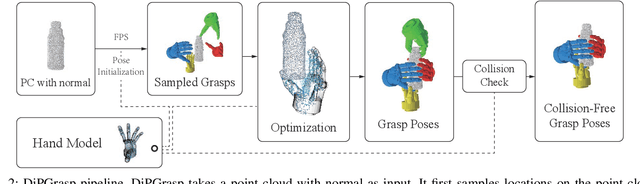

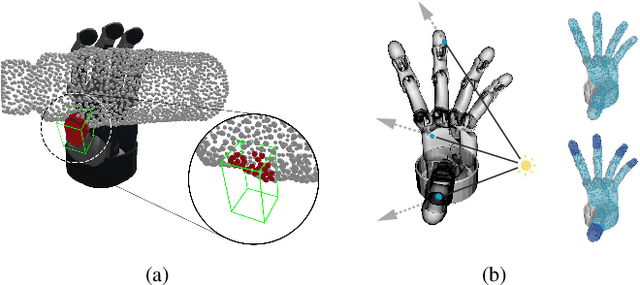

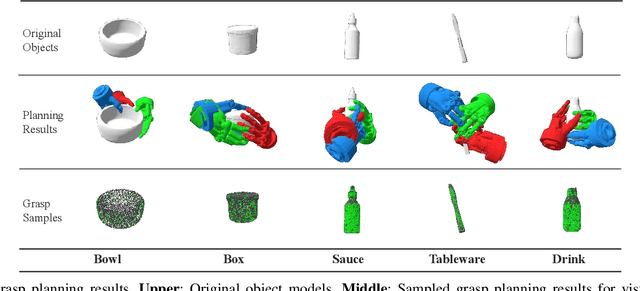

DiPGrasp: Parallel Local Searching for Efficient Differentiable Grasp Planning

Aug 08, 2024

Abstract: Grasp planning is an important task for robotic manipulation. Though it is a richly studied area, a standalone, fast, and differentiable grasp planner that can work with robot grippers of different DOFs has not been reported. In this work, we present DiPGrasp, a grasp planner that satisfies all these goals. DiPGrasp uses a force-closure geometric surface-matching grasp quality metric. It adopts a gradient-based optimization scheme on the metric, which also considers parallel sampling and collision handling. This not only drastically accelerates the grasp search process over the object surface but also makes it differentiable. We apply DiPGrasp to three applications, namely grasp dataset construction, mask-conditioned planning, and pose refinement. For dataset generation, as a standalone planner, DiPGrasp has clear advantages in speed and quality compared with several classic planners. For mask-conditioned planning, it can turn a 3D perception model into a 3D grasp detection model instantly. As a pose refiner, it can optimize the coarse grasp prediction from the neural network, as well as the neural network parameters. Finally, we conduct real-world experiments with the Barrett hand and Schunk SVH 5-finger hand. Video and supplementary materials can be viewed on our website: https://dipgrasp.robotflow.ai.

MS-MANO: Enabling Hand Pose Tracking with Biomechanical Constraints

Apr 16, 2024

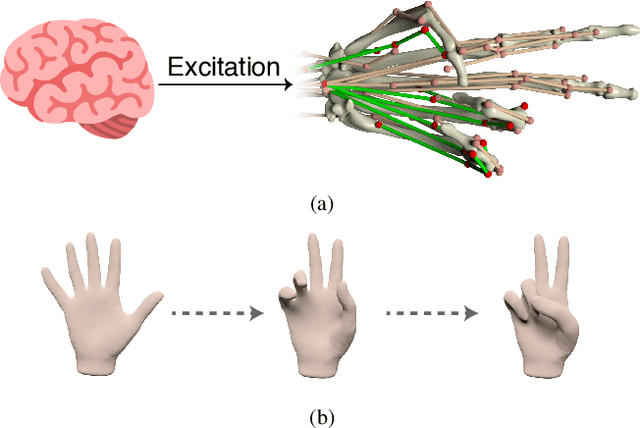

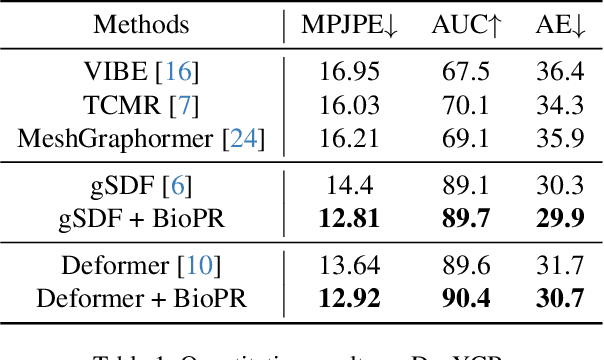

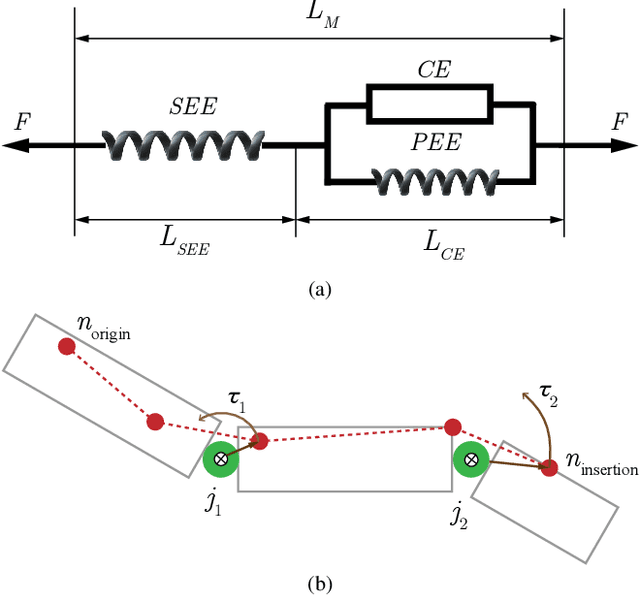

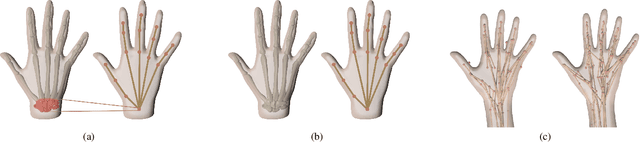

Abstract: This work proposes a novel learning framework for visual hand dynamics analysis that takes into account the physiological aspects of hand motion. The existing models, which are simplified joint-actuated systems, often produce unnatural motions. To address this, we integrate a musculoskeletal system with a learnable parametric hand model, MANO, to create a new model, MS-MANO. This model emulates the dynamics of muscles and tendons to drive the skeletal system, imposing physiologically realistic constraints on the resulting torque trajectories. We further propose a simulation-in-the-loop pose refinement framework, BioPR, that refines the initial estimated pose through a multi-layer perceptron (MLP) network. Our evaluation of the accuracy of MS-MANO and the efficacy of the BioPR is conducted in two separate parts. The accuracy of MS-MANO is compared with MyoSuite, while the efficacy of BioPR is benchmarked against two large-scale public datasets and two recent state-of-the-art methods. The results demonstrate that our approach consistently improves the baseline methods both quantitatively and qualitatively.

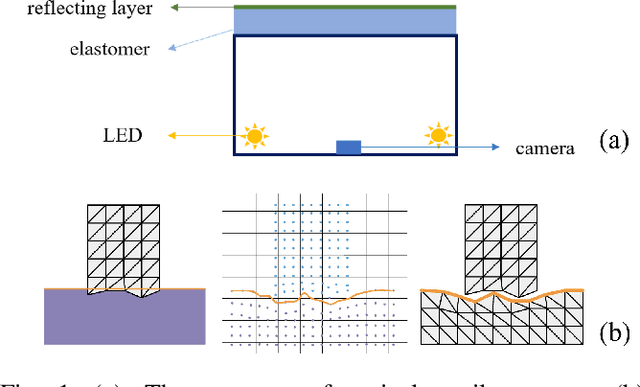

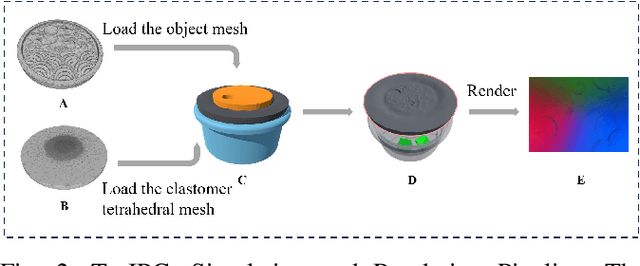

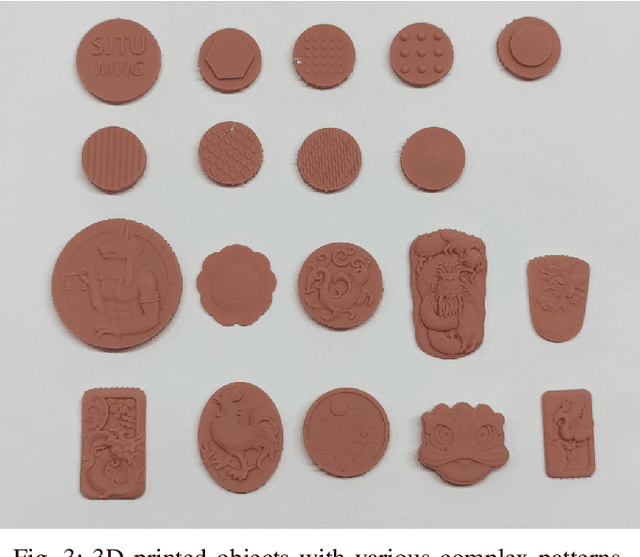

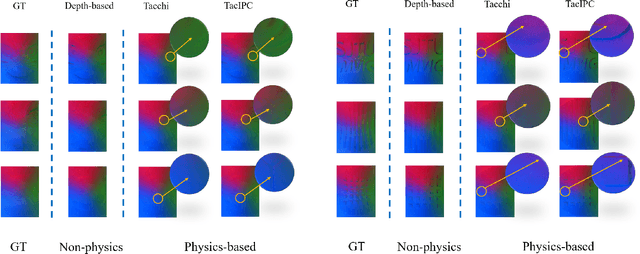

TacIPC: Intersection- and Inversion-free FEM-based Elastomer Simulation For Optical Tactile Sensors

Nov 10, 2023

Abstract: Tactile perception stands as a critical sensory modality for human interaction with the environment. Among various tactile sensor techniques, optical sensor-based approaches have gained traction, notably for producing high-resolution tactile images. This work explores gel elastomer deformation simulation through a physics-based approach. While previous works in this direction usually adopt the explicit material point method (MPM), which has certain limitations in force simulation and rendering, we adopt the finite element method (FEM) and address the challenges in penetration and mesh distortion with the incremental potential contact (IPC) method. As a result, we present a simulator named TacIPC, which can ensure numerically stable simulations while accommodating direct rendering and friction modeling. To evaluate TacIPC, we conduct three tasks: pseudo-image quality assessment, deformed geometry estimation, and marker displacement prediction. These tasks show its superior efficacy in reducing the sim-to-real gap. Our method can also seamlessly integrate with existing simulators. More experiments and videos can be found in the supplementary materials and on the website: https://sites.google.com/view/tac-ipc.

Precise Robotic Needle-Threading with Tactile Perception and Reinforcement Learning

Nov 04, 2023

Abstract: This work presents a novel tactile perception-based method, named T-NT, for performing the needle-threading task, an application of deformable linear object (DLO) manipulation. This task is divided into two main stages: Tail-end Finding and Tail-end Insertion. In the first stage, the agent traces the contour of the thread twice using vision-based tactile sensors mounted on the gripper fingers. The purpose of the two-run tracing is to locate the tail-end of the thread. In the second stage, it employs a tactile-guided reinforcement learning (RL) model to drive the robot to insert the thread into the target needle eyelet. The RL model is trained in a Unity-based simulated environment. The simulation environment supports tactile rendering, which can produce realistic tactile images, and thread modeling. During insertion, the position of the poke point and the center of the eyelet are obtained through a pre-trained segmentation model, Grounded-SAM, which predicts the masks for both the needle eye and thread imprints. These positions are then fed into the reinforcement learning model, aiding in a smoother transition to real-world applications. Extensive experiments on real robots are conducted to demonstrate the efficacy of our method. More experiments and videos can be found in the supplementary materials and on the website: https://sites.google.com/view/tac-needlethreading.

Visual-Tactile Sensing for In-Hand Object Reconstruction

Mar 25, 2023

Abstract: Tactile sensing is one of the modalities humans rely on heavily to perceive the world. Working with vision, this modality refines local geometry structure, measures deformation at the contact area, and indicates the hand-object contact state. With the availability of open-source tactile sensors such as DIGIT, research on visual-tactile learning is becoming more accessible and reproducible. Leveraging this tactile sensor, we propose a novel visual-tactile in-hand object reconstruction framework, VTacO, and extend it to VTacOH for hand-object reconstruction. Since our method can support both rigid and deformable object reconstruction, no existing benchmark is suitable for this goal. We propose a simulation environment, VT-Sim, which supports generating hand-object interaction for both rigid and deformable objects. With VT-Sim, we generate a large-scale training dataset and evaluate our method on it. Extensive experiments demonstrate that our proposed method can outperform the previous baseline methods qualitatively and quantitatively. Finally, we directly apply our model trained in simulation to various real-world test cases and present qualitative results. Code, models, simulation environment, and datasets are available at https://sites.google.com/view/vtaco/.
