Yongming Huang

Sherman

Joint Optimization of Latency and Accuracy for Split Federated Learning in User-Centric Cell-Free MIMO Networks

Feb 05, 2026

Abstract:This paper proposes a user-centric split federated learning (UCSFL) framework for user-centric cell-free multiple-input multiple-output (CF-MIMO) networks to support split federated learning (SFL). In the proposed UCSFL framework, users deploy split sub-models locally, while complete models are maintained and updated at access point (AP)-side distributed processing units (DPUs), followed by a two-level aggregation procedure across DPUs and the central processing unit (CPU). Under standard machine learning (ML) assumptions, we provide a theoretical convergence analysis for UCSFL, which reveals that the AP-cluster size is a key factor influencing model training accuracy. Motivated by this result, we introduce a new performance metric, termed the latency-to-accuracy ratio, defined as the ratio of a user's per-iteration training latency to the weighted size of its AP cluster. Based on this metric, we formulate a joint optimization problem to minimize the maximum latency-to-accuracy ratio by jointly optimizing uplink power control, downlink beamforming, model splitting, and AP clustering. The resulting problem is decomposed into two sub-problems operating on different time scales, for which dedicated algorithms are developed to handle the short-term and long-term optimizations, respectively. Simulation results verify the convergence of the proposed algorithms and demonstrate that UCSFL effectively reduces the latency-to-accuracy ratio of the VGG16 model compared with baseline schemes. Moreover, the proposed framework adaptively adjusts splitting and clustering strategies in response to varying communication and computation resources. An MNIST-based handwritten digit classification example further shows that UCSFL significantly accelerates the convergence of the VGG16 model.
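The latency-to-accuracy ratio described in this abstract can be illustrated with a small numerical sketch. The abstract does not say how the AP-cluster weights are chosen, so the sketch assumes the weighted cluster size is simply the sum of hypothetical per-AP weights; the function names and the sample numbers are illustrative only and are not taken from the paper.

```python
from typing import Sequence


def latency_to_accuracy_ratio(latency_s: float, ap_weights: Sequence[float]) -> float:
    """Per-iteration training latency divided by the weighted AP-cluster size.

    Assumption: the weighted cluster size is the sum of per-AP weights.
    """
    weighted_size = sum(ap_weights)
    if weighted_size <= 0:
        raise ValueError("the AP cluster must have positive total weight")
    return latency_s / weighted_size


def worst_case_ratio(latencies: Sequence[float],
                     clusters: Sequence[Sequence[float]]) -> float:
    """Maximum ratio over all users, i.e. the quantity a min-max design would reduce."""
    return max(latency_to_accuracy_ratio(t, w) for t, w in zip(latencies, clusters))


# Hypothetical example: three users with different latencies and AP clusters.
latencies = [0.8, 1.2, 0.5]                                # seconds per iteration
clusters = [[1.0, 1.0], [1.0, 0.5, 0.5, 1.0], [0.5]]       # per-AP weights per user
print(worst_case_ratio(latencies, clusters))
```

In this toy setting the third user dominates the objective because its cluster is small, which is the kind of trade-off between latency and cluster size that the metric is meant to expose.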

Experimental Performance of Bidirectional Phase Coherent Transmission and Sensing for mmWave Cell-free Massive MIMO Systems with Reciprocity Calibration

Jan 21, 2026

Abstract:Phase synchronization among distributed transmission reception points (TRPs) is a prerequisite for enabling coherent joint transmission and high-precision sensing in millimeter wave (mmWave) cell-free massive multiple-input and multiple-output (MIMO) systems. This paper proposes a bidirectional calibration scheme and a calibration coefficient estimation method for phase synchronization, and presents a calibration coefficient phase tracking method using unilateral uplink/downlink channel state information (CSI). Furthermore, this paper introduces the use of reciprocity calibration to eliminate non-ideal factors in sensing and leverages sensing results to achieve calibration coefficient phase tracking in dynamic scenarios, thus enabling bidirectional empowerment of both communication and sensing. Simulation results demonstrate that the proposed method can effectively implement reciprocity calibration with lower overhead, enable coherent collaborative transmission, and mitigate non-ideal factors to reduce sensing error. Experimental results show that, in the mmWave band, over-the-air (OTA) bidirectional calibration enables coherent collaborative transmission for both collaborative TRPs and collaborative user equipments (UEs), achieving beamforming gain and long-time coherent sensing capabilities.

Airy Beamforming for Radiative Near-Field MU-XL-MIMO: Overcoming Half-Space Blockage

Jan 16, 2026

Abstract:The move to next-generation wireless communications with extremely large-scale antenna arrays (ELAAs) brings the communications into the radiative near-field (RNF) region, where distance-aware focusing is feasible. However, high-frequency RNF links are highly vulnerable to blockage in indoor environments dominated by half-space obstacles (walls, corners) that create knife-edge shadows. Conventional near-field focused beams offer high gain in line-of-sight (LoS) scenarios but suffer from severe energy truncation and effective-rank collapse in shadowed regions, often necessitating the deployment of auxiliary hardware such as Reconfigurable Intelligent Surfaces (RIS) to restore connectivity. We propose a beamforming strategy that exploits the auto-bending property of Airy beams to mitigate half-space blockage without additional hardware. The Airy beam is designed to "ride" the diffraction edge, accelerating its main lobe into the shadow to restore connectivity. Our contributions are threefold: (i) a Green's function-based RNF multi-user channel model that analytically reveals singular-value collapse behind knife-edge obstacles; (ii) an Airy analog beamforming scheme that optimizes the bending trajectory to recover the effective channel rank; and (iii) an Airy null-steering method that aligns oscillatory nulls with bright-region users to suppress interference in mixed shadow/bright scenarios. Simulations show that the proposed edge-riding Airy strategy achieves a Signal-to-Noise Ratio (SNR) improvement of over 20 dB and restores full-rank connectivity in shadowed links compared to conventional RNF focusing, virtually eliminating outage in geometric shadows and increasing multi-user spectral efficiency by approximately 35% under typical indoor ELAA configurations. These results demonstrate robust RNF multi-user access in half-space blockage scenarios without relying on RIS.

Flexible-Duplex Cell-Free Architecture for Secure Uplink Communications in Low-Altitude Wireless Networks

Jan 07, 2026

Abstract:Low-altitude wireless networks (LAWNs) are expected to play a central role in future 6G infrastructures, yet uplink transmissions of uncrewed aerial vehicles (UAVs) remain vulnerable to eavesdropping due to their limited transmit power, constrained antenna resources, and highly exposed air-ground propagation conditions. To address this fundamental bottleneck, we propose a flexible-duplex cell-free (CF) architecture in which each distributed access point (AP) can dynamically operate either as a receive AP for UAV uplink collection or as a transmit AP that generates cooperative artificial noise (AN) for secrecy enhancement. Such AP-level duplex flexibility introduces an additional spatial degree of freedom that enables distributed and adaptive protection against wiretapping in LAWNs. Building upon this architecture, we formulate a max-min secrecy-rate problem that jointly optimizes AP mode selection, receive combining, and AN covariance design. This tightly coupled and nonconvex optimization is tackled by first deriving the optimal receive combiners in closed form, followed by developing a penalty dual decomposition (PDD) algorithm with guaranteed convergence to a stationary solution. To further reduce computational burden, we propose a low-complexity sequential scheme that determines AP modes via a heuristic metric and then updates the AN covariance matrices through closed-form iterations embedded in the PDD framework. Simulation results show that the proposed flexible-duplex architecture yields substantial secrecy-rate gains over CF systems with fixed AP roles. The joint optimization method attains the highest secrecy performance, while the low-complexity approach achieves over 90% of the optimal performance with an order-of-magnitude lower computational complexity, offering a practical solution for secure uplink communications in LAWNs.
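The max-min secrecy-rate objective named in this abstract can be sketched with the common textbook secrecy-rate expression. This is not the paper's formulation: the abstract gives no formula, so the sketch assumes the secrecy rate is the positive part of the difference between the legitimate and eavesdropper Shannon rates, and the SINR inputs and function names are hypothetical.

```python
import math
from typing import Iterable, Tuple


def secrecy_rate(sinr_legit: float, sinr_eve: float) -> float:
    """Positive part of the rate gap between legitimate receiver and eavesdropper.

    Assumption: rates follow log2(1 + SINR) in bit/s/Hz (textbook model).
    """
    gap = math.log2(1.0 + sinr_legit) - math.log2(1.0 + sinr_eve)
    return max(0.0, gap)


def min_secrecy_rate(sinr_pairs: Iterable[Tuple[float, float]]) -> float:
    """Worst secrecy rate over all UAVs, the value a max-min design tries to raise."""
    return min(secrecy_rate(legit, eve) for legit, eve in sinr_pairs)


# Hypothetical (legitimate SINR, eavesdropper SINR) pairs in linear scale.
pairs = [(3.0, 1.0), (7.0, 1.0)]
print(min_secrecy_rate(pairs))
```

Under this model, artificial noise helps only when it lowers the eavesdropper SINR more than it lowers the legitimate SINR, which is why AP mode selection and AN covariance would need to be chosen together.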

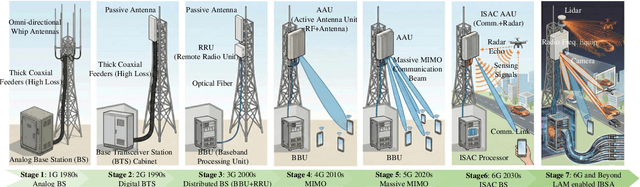

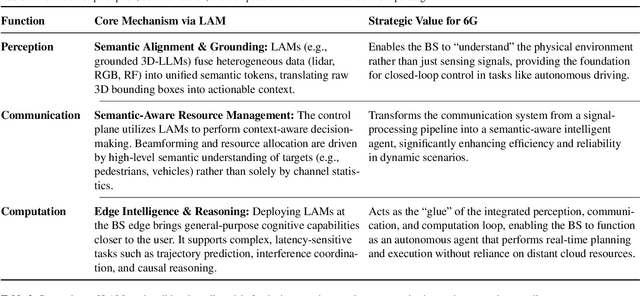

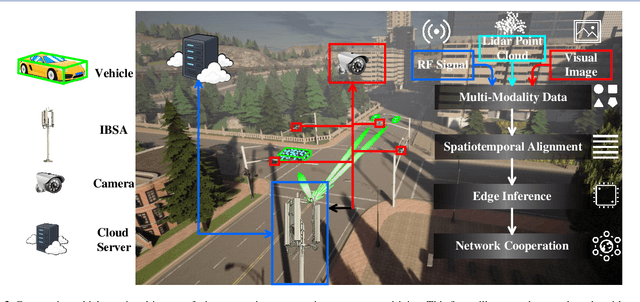

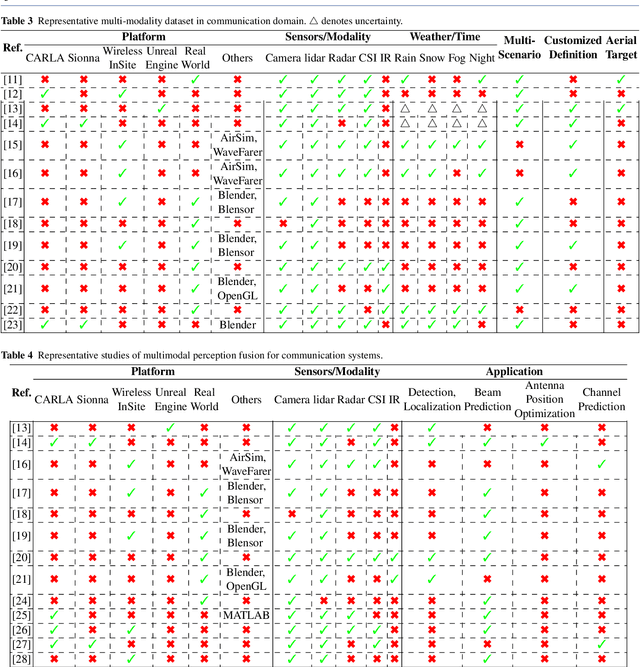

Large Model Enabled Embodied Intelligence for 6G Integrated Perception, Communication, and Computation Network

Dec 22, 2025

Abstract:The advent of sixth-generation (6G) places intelligence at the core of wireless architecture, fusing perception, communication, and computation into a single closed-loop. This paper argues that large artificial intelligence models (LAMs) can endow base stations with perception, reasoning, and acting capabilities, thus transforming them into intelligent base station agents (IBSAs). We first review the historical evolution of BSs from single-functional analog infrastructure to distributed, software-defined, and finally LAM-empowered IBSA, highlighting the accompanying changes in architecture, hardware platforms, and deployment. We then present an IBSA architecture that couples a perception-cognition-execution pipeline with cloud-edge-end collaboration and parameter-efficient adaptation. Subsequently, we study two representative scenarios: (i) cooperative vehicle-road perception for autonomous driving, and (ii) ubiquitous base station support for low-altitude uncrewed aerial vehicle safety monitoring and response against unauthorized drones. On this basis, we analyze key enabling technologies spanning LAM design and training, efficient edge-cloud inference, multi-modal perception and actuation, as well as trustworthy security and governance. We further propose a holistic evaluation framework and benchmark considerations that jointly cover communication performance, perception accuracy, decision-making reliability, safety, and energy efficiency. Finally, we distill open challenges on benchmarks, continual adaptation, trustworthy decision-making, and standardization. Together, this work positions LAM-enabled IBSAs as a practical path toward integrated perception, communication, and computation native, safety-critical 6G systems.

Coordinated Beamforming for Networked Integrated Communication and Multi-TMT Localization

Oct 06, 2025

Abstract:Networked integrated sensing and communication (ISAC) has gained significant attention as a promising technology for enabling next-generation wireless systems. To further enhance networked ISAC, delegating the reception of sensing signals to dedicated target monitoring terminals (TMTs) instead of base stations (BSs) offers significant advantages in terms of sensing capability and deployment flexibility. Despite its potential, the coordinated beamforming design for networked integrated communication and time-of-arrival (ToA)-based multi-TMT localization remains largely unexplored. In this paper, we present a comprehensive study to fill this gap. Specifically, we first establish signal models for both communication and localization, and, for the first time, derive a closed-form Cramér-Rao lower bound (CRLB) to characterize the localization performance. Subsequently, we exploit this CRLB to formulate two optimization problems, focusing on sensing-centric and communication-centric criteria, respectively. For the sensing-centric problem, we develop a globally optimal algorithm based on semidefinite relaxation (SDR) when each BS is equipped with more antennas than the total number of communication users. For the communication-centric problem, we design a globally optimal algorithm for the single-BS case using bisection search. For the general case of both problems, we propose a unified successive convex approximation (SCA)-based algorithm, which is suboptimal yet efficient, and further extend it from single-target scenarios to more practical multi-target scenarios. Finally, simulation results demonstrate the effectiveness of our proposed algorithms, reveal the intrinsic performance trade-offs between communication and localization, and further show that deploying more TMTs is always preferable to deploying more BSs in networked ISAC systems.

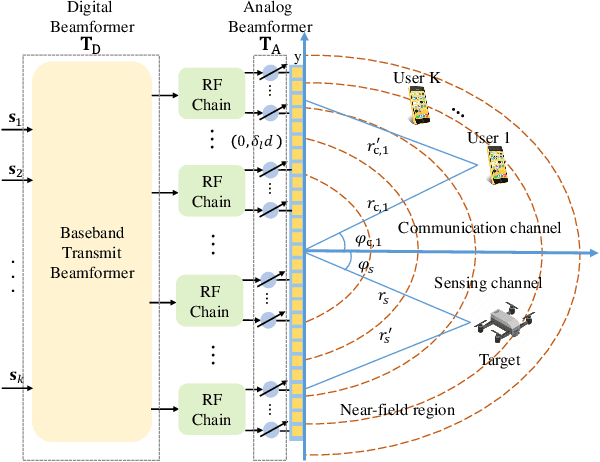

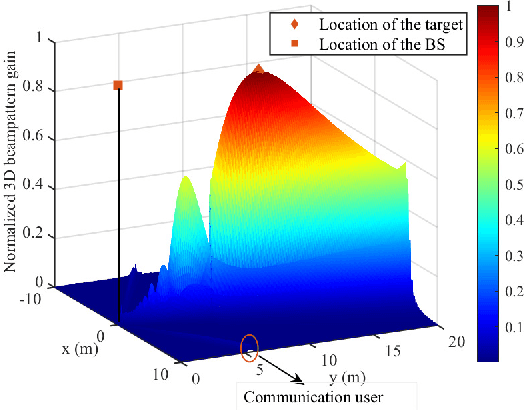

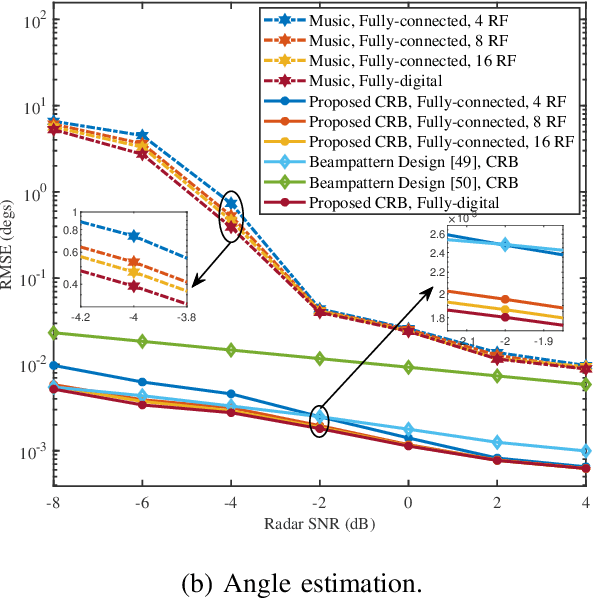

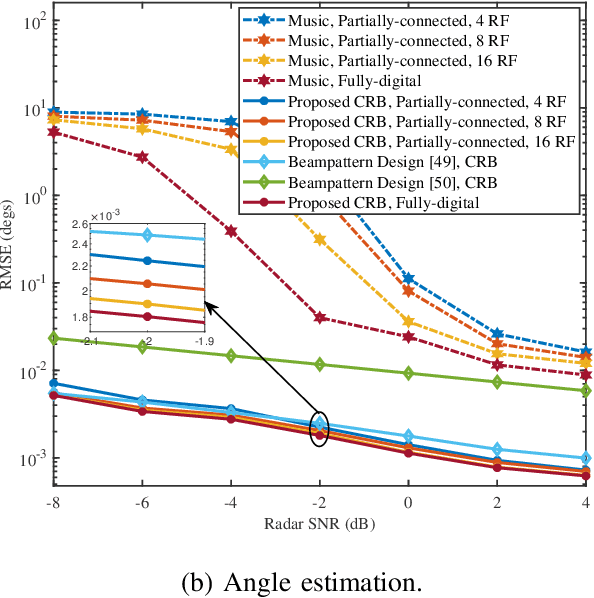

Energy-Efficient Hybrid Beamfocusing for Near-Field Integrated Sensing and Communication

Aug 06, 2025

Abstract:Integrated sensing and communication (ISAC) is a pivotal component of sixth-generation (6G) wireless networks, leveraging high-frequency bands and massive multiple-input multiple-output (M-MIMO) to deliver both high-capacity communication and high-precision sensing. However, these technological advancements lead to significant near-field effects, while the implementation of M-MIMO is associated with considerable hardware costs and escalated power consumption. In this context, hybrid architecture designs emerge as both hardware-efficient and energy-efficient solutions. Motivated by these considerations, we investigate the design of energy-efficient hybrid beamfocusing for near-field ISAC under two distinct target scenarios, i.e., a point target and an extended target. Specifically, we first derive the closed-form Cramér-Rao bound (CRB) of joint angle-and-distance estimation for the point target and the Bayesian CRB (BCRB) of the target response matrix for the extended target. Building on these derived results, we minimize the CRB/BCRB by optimizing the transmit beamfocusing, while ensuring the energy efficiency (EE) of the system and the quality-of-service (QoS) for communication users. To address the resulting nonconvex problems, we first utilize a penalty-based successive convex approximation technique with a fully-digital beamformer to obtain a suboptimal solution. Then, we propose an efficient alternating optimization algorithm to design the analog-and-digital beamformer. Simulation results indicate that joint distance-and-angle estimation is feasible in the near-field region. However, the adopted hybrid architectures inevitably degrade the accuracy of distance estimation, compared with their fully-digital counterparts. Furthermore, enhancements in system EE would compromise the accuracy of target estimation, unveiling a nontrivial tradeoff.

A Multi-Scale Spatial Attention Network for Near-field MIMO Channel Estimation

Jul 30, 2025

Abstract:The deployment of extremely large-scale arrays (ELAAs) brings higher spectral efficiency and more spatial degrees of freedom, but complicates near-field channel estimation. Existing near-field channel estimation schemes primarily exploit sparsity in the transform domain. However, these schemes are sensitive to the transform matrix selection and the stopping criteria. Inspired by the success of deep learning (DL) in far-field channel estimation, this paper proposes a novel spatial-attention-based method for reconstructing extremely large-scale MIMO (XL-MIMO) channels. First, the spatial antenna correlations of near-field channels are analyzed as an expectation over the angle-distance space, which demonstrates that the correlation range of an antenna element varies with its position. Due to the strong correlation between adjacent antenna elements, inter-subchannel interactions, rather than inter-element interactions, are used to describe the inherent correlation of near-field channels. Subsequently, a multi-scale spatial attention network (MsSAN) with inter-subchannel correlation learning capabilities is proposed, tailored to near-field MIMO channel estimation. We employ the multi-scale architecture to refine the subchannel size in MsSAN. Specifically, we introduce the sum of dot products as spatial attention (SA), instead of cross-covariance, to weight subchannel features at different scales in the SA module. Simulation results validate that the proposed MsSAN achieves remarkable inter-subchannel correlation learning capabilities and outperforms other schemes in near-field channel reconstruction.

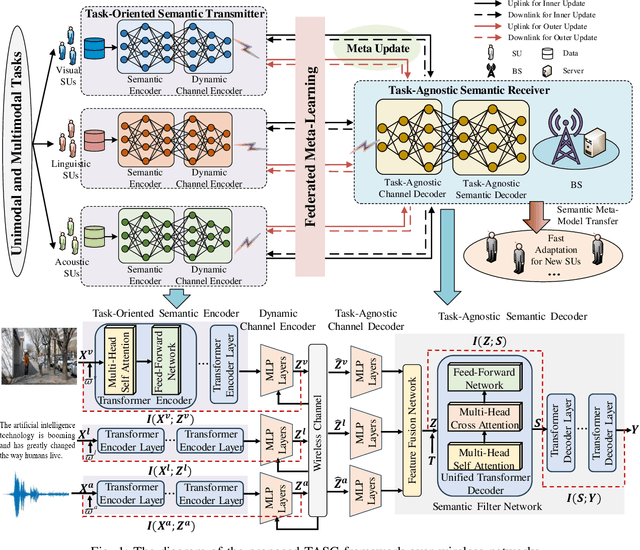

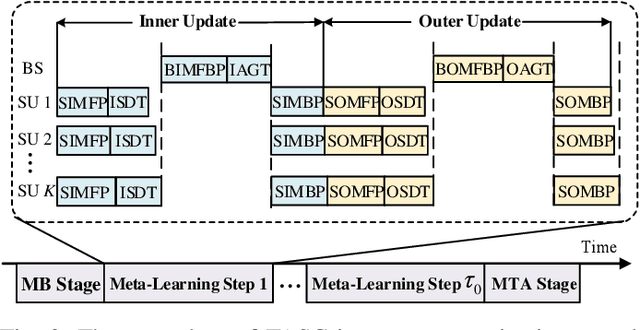

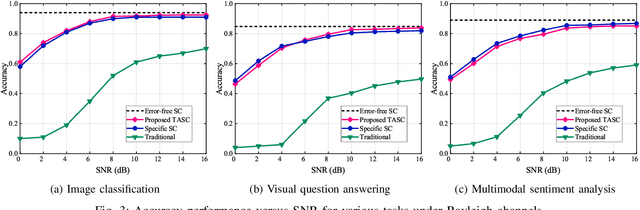

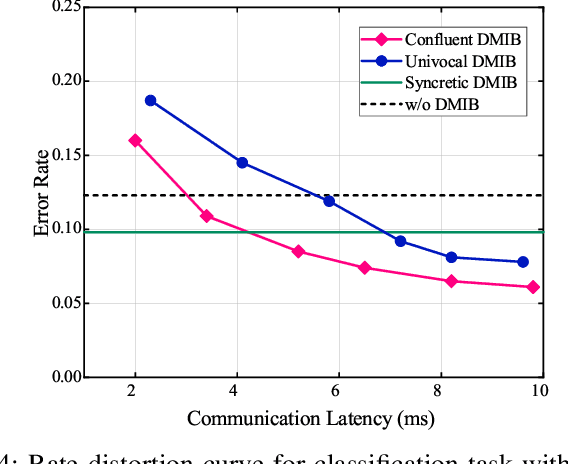

Task-Agnostic Semantic Communications Relying on Information Bottleneck and Federated Meta-Learning

Apr 30, 2025

Abstract:As a paradigm shift towards pervasive intelligence, semantic communication (SemCom) has shown great potential to improve communication efficiency and provide user-centric services by delivering task-oriented semantic meanings. However, the exponential growth in connected devices, data volumes, and communication demands presents significant challenges for practical SemCom design, particularly in resource-constrained wireless networks. In this work, we first propose a task-agnostic SemCom (TASC) framework that can handle diverse tasks with multiple modalities. Aiming to explore the interplay between communications and intelligent tasks from the information-theoretical perspective, we leverage information bottleneck (IB) theory and propose a distributed multimodal IB (DMIB) principle to learn minimal and sufficient unimodal and multimodal information effectively by discarding redundancy while preserving task-related information. To further reduce the communication overhead, we develop an adaptive semantic feature transmission method under dynamic channel conditions. Then, TASC is trained based on federated meta-learning (FML) for rapid adaptation and generalization in wireless networks. To gain deeper insight, we conduct a rigorous theoretical analysis and devise resource management to accelerate convergence while minimizing the training latency and energy consumption. Moreover, we develop a joint user selection and resource allocation algorithm to address the non-convex problem with theoretical guarantees. Extensive simulation results validate the effectiveness and superiority of the proposed TASC compared to baselines.

Element-Grouping Strategy for Intelligent Reflecting Surface: Performance Analysis and Algorithm Optimization

Apr 22, 2025

Abstract:As a revolutionary paradigm for intelligently controlling wireless channels, intelligent reflecting surface (IRS) has emerged as a promising technology for future sixth-generation (6G) wireless communications. While IRS-aided communication systems can achieve attractive high channel gains, existing schemes require plenty of IRS elements to mitigate the "multiplicative fading" effect in cascaded channels, leading to high complexity for real-time beamforming and high signaling overhead for channel estimation. In this paper, the concept of sustainable intelligent element-grouping IRS (IEG-IRS) is proposed to overcome those fundamental bottlenecks. Specifically, based on the statistical channel state information (S-CSI), the proposed grouping strategy intelligently pre-divides the IEG-IRS elements into multiple groups using the beam-domain grouping method, with each group sharing a common reflection coefficient that is optimized in real time using the instantaneous channel state information (I-CSI). Then, we further analyze the asymptotic performance of the IEG-IRS to reveal the substantial capacity gain in an extremely large-scale IRS (XL-IRS) aided single-user single-input single-output (SU-SISO) system. In particular, when a line-of-sight (LoS) component exists, the analysis demonstrates that the combined cascaded link can be considered as a "deterministic virtual LoS" channel, resulting in a sustainable squared array gain achieved by the IEG-IRS. Finally, we formulate a weighted-sum-rate (WSR) maximization problem for an IEG-IRS-aided multiuser multiple-input single-output (MU-MISO) system and propose a two-stage algorithm for optimizing the beam-domain grouping strategy and the multi-user active-passive beamforming.
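The idea of groups sharing a common reflection coefficient can be sketched as follows. This does not implement the beam-domain grouping method, which the abstract does not specify: the group assignment is a hypothetical input, the coefficients are assumed to be unit-modulus phase shifts, and all names are illustrative.

```python
import cmath
from typing import List, Sequence


def expand_group_coefficients(group_of_element: Sequence[int],
                              group_phases: Sequence[float]) -> List[complex]:
    """Map per-group phases (radians) to a per-element reflection vector.

    Assumption: every element in a group applies the same unit-modulus
    coefficient exp(j * phase), so only len(group_phases) values are tuned.
    """
    return [cmath.exp(1j * group_phases[g]) for g in group_of_element]


# Hypothetical surface with 8 elements pre-divided into 3 groups.
group_of_element = [0, 0, 0, 1, 1, 2, 2, 2]
group_phases = [0.0, cmath.pi / 2, cmath.pi]

coefficients = expand_group_coefficients(group_of_element, group_phases)
print(len(coefficients), "element coefficients from", len(group_phases), "tunable phases")
```

The real-time optimization then runs over one variable per group rather than one per element, which is where the reduction in beamforming complexity and estimation overhead would come from.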
