Shengheng Liu

Model-driven deep neural network for enhanced direction finding with commodity 5G gNodeB

Dec 14, 2024

Abstract: Pervasive, high-accuracy positioning has become increasingly important as a fundamental enabler for intelligent connected devices in mobile networks. Nevertheless, current wireless networks rely heavily on purely model-driven techniques to achieve positioning functionality and often suffer performance deterioration due to hardware impairments in practical scenarios. Here we reformulate the direction finding, or angle-of-arrival (AoA) estimation, problem as an image recovery task of the spatial spectrum and propose a new model-driven deep neural network (MoD-DNN) framework. The proposed MoD-DNN scheme comprises three modules: a multi-task autoencoder-based beamformer, a coarray spectrum generation module, and a model-driven deep learning-based spatial spectrum reconstruction module. Our technique enables automatic calibration of angle-dependent phase errors, thereby enhancing the resilience of direction-finding precision against realistic system non-idealities. We validate the proposed scheme using both numerical simulations and field tests. The results show that the proposed MoD-DNN framework enables effective spectrum calibration and accurate AoA estimation. To the best of our knowledge, this study marks the first successful demonstration of hybrid data-and-model-driven direction finding using readily available commodity 5G gNodeB equipment.
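The abstract names a coarray spectrum generation module but does not give the array geometry. As an illustration only, the sketch below assumes a two-level nested array (the parameters `n1`, `n2` and the helper names are hypothetical, positions in half-wavelength units) and computes its difference coarray, the set of virtual lags on which coarray spectra are usually built. It is not the MoD-DNN module itself.

```python
from itertools import product

def nested_array(n1, n2):
    """Assumed two-level nested array: inner 1..n1, outer (n1 + 1) * k for k = 1..n2."""
    inner = list(range(1, n1 + 1))
    outer = [(n1 + 1) * k for k in range(1, n2 + 1)]
    return inner + outer

def difference_coarray(positions):
    """Sorted set of all pairwise lags p_i - p_j (the virtual sensor positions)."""
    return sorted({a - b for a, b in product(positions, repeat=2)})

def contiguous_extent(lags):
    """Largest L such that every lag in -L..L is present."""
    lag_set = set(lags)
    extent = 0
    while (extent + 1) in lag_set and -(extent + 1) in lag_set:
        extent += 1
    return extent

pos = nested_array(2, 3)
lags = difference_coarray(pos)
print(pos)                                # [1, 2, 3, 6, 9]
print(len(lags), contiguous_extent(lags)) # 17 8
```

Five physical sensors yield 17 contiguous virtual lags here, which is the usual motivation for working in the coarray domain.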

5G NR monostatic positioning with array impairments: Data-and-model-driven framework and experiment results

Dec 11, 2024

Abstract: In this article, we present an intelligent framework for 5G new radio (NR) indoor positioning under a monostatic configuration. The primary objective is to estimate the angle of arrival and the time of arrival simultaneously, which requires capturing the pertinent information from both the antenna and subcarrier dimensions of the received signals. To tackle the challenges posed by the intricacy of the high-dimensional information matrix, coupled with the impact of irregular array errors, we design a deep learning scheme. Recognizing that the phase difference between any two subcarriers and antennas encodes spatial information about the target, we contend that the transformer network is better suited to this problem than the convolutional neural network, which excels at local feature extraction. To further enhance the network's fitting capability, we integrate the transformer with a model-based multiple signal classification (MUSIC) region decision mechanism. Numerical results and field tests demonstrate the effectiveness of the proposed framework in accurately calibrating the irregular angle-dependent array error and improving positioning accuracy.
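For readers unfamiliar with MUSIC, here is a minimal sketch of the classical pseudo-spectrum for a uniform linear array with one source. It assumes half-wavelength spacing, an exact (noise-plus-signal) covariance instead of sample estimates, and a single-source signal subspace found by power iteration; it does not reproduce how the paper couples MUSIC with the transformer.

```python
import cmath
import math

def steering(theta_deg, m, d=0.5):
    """ULA steering vector; d is the element spacing in wavelengths (assumed 0.5)."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(-2j * math.pi * d * k * s) for k in range(m)]

def covariance(theta_deg, m, snr=10.0):
    """Exact covariance snr * a a^H + I for one source in unit-power white noise."""
    a = steering(theta_deg, m)
    return [[snr * a[i] * a[j].conjugate() + (1.0 if i == j else 0.0)
             for j in range(m)] for i in range(m)]

def dominant_eigvec(R, iters=200):
    """Power iteration for the principal eigenvector (the one-source signal subspace)."""
    n = len(R)
    v = [1.0 + 0j] * n
    for _ in range(iters):
        w = [sum(R[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(abs(x) ** 2 for x in w))
        v = [x / norm for x in w]
    return v

def music_spectrum(R, grid, m):
    """P(theta) = 1 / ||P_n a(theta)||^2 with noise-subspace projector P_n = I - u u^H."""
    u = dominant_eigvec(R)
    spectrum = []
    for theta in grid:
        a = steering(theta, m)
        proj = sum(x.conjugate() * y for x, y in zip(u, a))  # u^H a
        noise_part = [y - proj * x for x, y in zip(u, a)]     # a - u (u^H a)
        spectrum.append(1.0 / (sum(abs(z) ** 2 for z in noise_part) + 1e-12))
    return spectrum

m = 8
grid = list(range(-90, 91))
spec = music_spectrum(covariance(20.0, m), grid, m)
print(grid[spec.index(max(spec))])  # 20
```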

Fine-grained graph representation learning for heterogeneous mobile networks with attentive fusion and contrastive learning

Dec 10, 2024

Abstract: AI is becoming increasingly vital for the telecom industry, as the burgeoning complexity of upcoming mobile communication networks places immense pressure on network operators. While there is a growing consensus that intelligent network self-driving holds the key, it relies heavily on expert experience and knowledge extracted from network data. To facilitate convenient analytics and utilization of wireless big data, we introduce the concept of knowledge graphs into the field of mobile networks, giving rise to what we term wireless data knowledge graphs (WDKGs). However, the heterogeneous and dynamic nature of communication networks renders manual WDKG construction both prohibitively costly and error-prone, presenting a fundamental challenge. In this context, we propose an unsupervised data-and-model-driven graph structure learning (DMGSL) framework aimed at automating WDKG refinement and updating. To tackle WDKG heterogeneity, we stratify the network into homogeneous layers and refine it at a finer granularity. Furthermore, to capture WDKG dynamics effectively, we segment the network into static snapshots based on the coherence time and use recurrent neural networks to incorporate historical information. Extensive experiments on the established WDKG demonstrate the superiority of DMGSL over the baselines, particularly in node classification accuracy.
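The snapshot step can be pictured as bucketing timestamped edges into windows one coherence time long. The sketch below assumes a hypothetical edge format `(src, dst, t)` and skips empty windows; the actual WDKG schema and how DMGSL feeds snapshots to its recurrent network are not specified in the abstract.

```python
from collections import defaultdict

def segment_snapshots(edges, coherence_time):
    """Group timestamped edges (src, dst, t) into static snapshots.

    Snapshot k holds every edge with k * coherence_time <= t < (k + 1) * coherence_time.
    Windows without any edge are skipped.
    """
    if coherence_time <= 0:
        raise ValueError("coherence_time must be positive")
    buckets = defaultdict(set)
    for src, dst, t in edges:
        buckets[int(t // coherence_time)].add((src, dst))
    # Temporal order, as a recurrent model would consume them.
    return [buckets[k] for k in sorted(buckets)]

# Hypothetical cell-to-UE association edges with timestamps in seconds.
edges = [("cell1", "ue7", 0.2), ("cell1", "ue9", 0.9),
         ("cell2", "ue7", 1.4), ("cell1", "ue7", 2.1)]
snaps = segment_snapshots(edges, coherence_time=1.0)
print([sorted(s) for s in snaps])
```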

Access Point Deployment for Localizing Accuracy and User Rate in Cell-Free Systems

Dec 10, 2024

Abstract: Next-generation mobile networks are designed to provide ubiquitous coverage and networked sensing. By exploiting multi-view sensing and multi-node joint transmission, cell-free architectures are a promising way to realize this prospect. This paper tackles the problem of access point (AP) deployment in cell-free systems to balance sensing accuracy and user rate. By merging the D-optimality criterion with a Euclidean criterion, a novel integrated metric is proposed as the objective function of both max-sum and max-min problems, which respectively guarantee the overall and the lowest performance in multi-user communication and target-tracking scenarios. To solve the resulting high-dimensional, non-convex, multi-objective problem, the soft actor-critic (SAC) algorithm is used to reduce the risk of converging to a local optimum. Numerical results demonstrate that the proposed SAC-based AP deployment method achieves gains of $20\%$ in overall performance and $120\%$ in lowest performance.
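D-optimality rates a sensor layout by the determinant of the Fisher information matrix (FIM): a larger determinant means a smaller error ellipse. As a minimal sketch, assuming 2D range-only measurements with equal noise variance, the FIM is $\frac{1}{\sigma^2}\sum_i \mathbf{u}_i\mathbf{u}_i^T$, where $\mathbf{u}_i$ is the unit vector from AP $i$ to the target. The paper's integrated metric also merges a Euclidean criterion and user rate, which this sketch leaves out.

```python
import math

def range_fim_det(aps, target, sigma=1.0):
    """det of the 2x2 FIM for range-only localization of `target` from AP positions."""
    fxx = fxy = fyy = 0.0
    for ax, ay in aps:
        dx, dy = target[0] - ax, target[1] - ay
        r = math.hypot(dx, dy)
        ux, uy = dx / r, dy / r  # unit vector from the AP towards the target
        fxx += ux * ux
        fxy += ux * uy
        fyy += uy * uy
    s2 = sigma ** 2
    # det((1 / sigma^2) M) = det(M) / sigma^4 for a 2x2 matrix M
    return (fxx * fyy - fxy * fxy) / (s2 * s2)

target = (0.0, 0.0)
det_surround = range_fim_det([(10.0, 0.0), (0.0, 10.0), (-10.0, 0.0)], target)
det_clustered = range_fim_det([(10.0, 0.0), (10.0, 1.0), (10.0, -1.0)], target)
print(det_surround, det_clustered)
```

APs that surround the target give a far larger determinant than APs clustered on one side, which is the geometric intuition behind using D-optimality as a deployment objective.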

In-Situ Calibration of Antenna Arrays for Positioning With 5G Networks

Mar 08, 2023

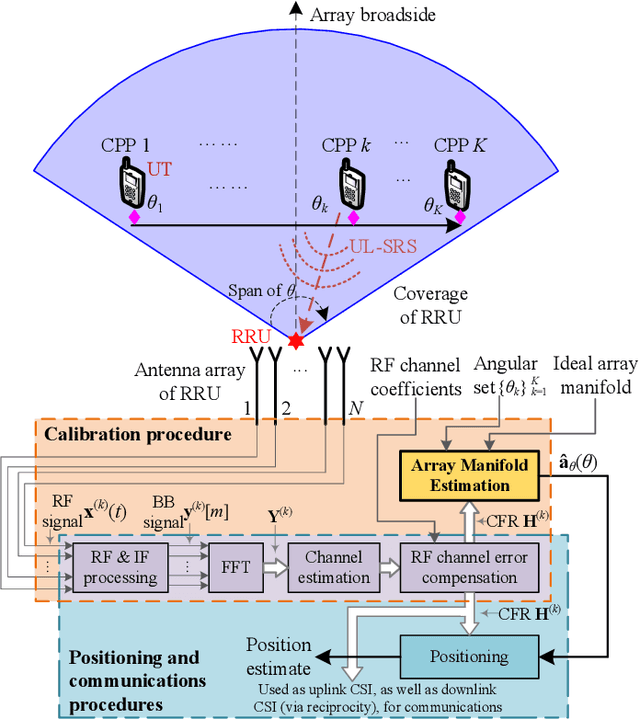

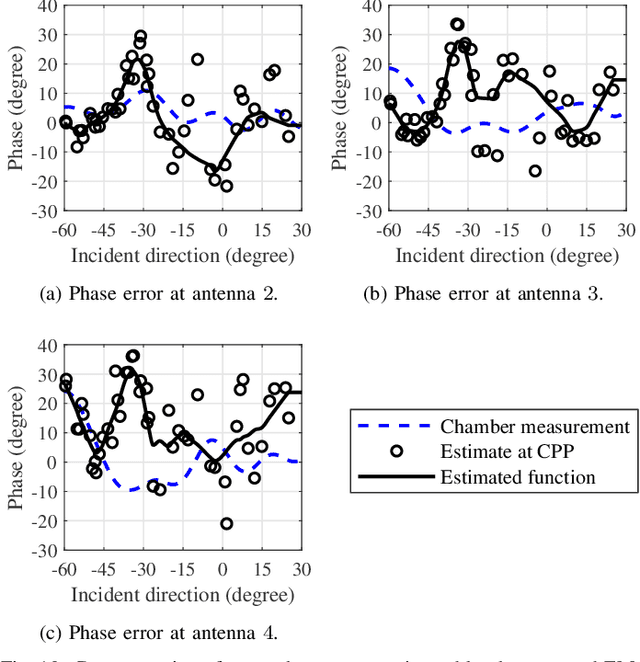

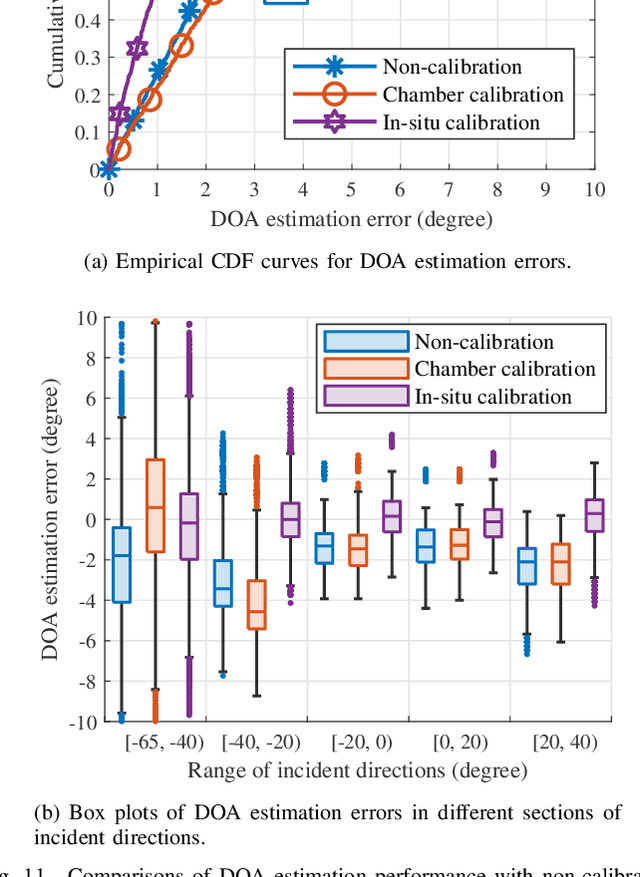

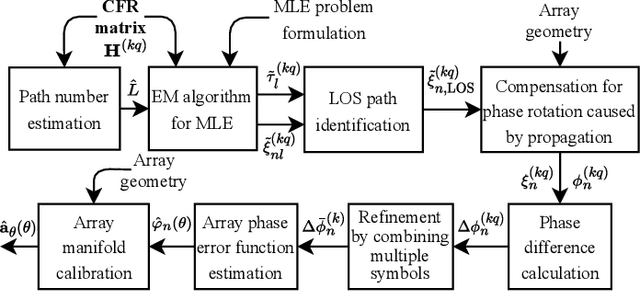

Abstract: Owing to the ubiquity of cellular communication signals, positioning with fifth-generation (5G) signals has emerged as a promising solution in global navigation satellite system (GNSS)-denied areas. Unfortunately, although the antenna arrays widely employed in 5G remote radio units (RRUs) facilitate direction-of-arrival (DOA) measurement, DOA-based positioning performance is severely degraded by array errors. This paper proposes an in-situ calibration framework in which a user terminal transmits 5G reference signals at several known positions in the actual operating environment and the accessible RRUs estimate their array errors from these reference signals. Further, since sub-6 GHz small-cell RRUs deployed for indoor coverage generally have small-aperture antenna arrays, while 5G signals have plentiful bandwidth, this work separates the multipath components via super-resolution delay estimation based on the maximum likelihood criterion. This differs significantly from existing in-situ calibration works, which resolve multipaths in the spatial domain. The superiority of the proposed method is first verified by numerical simulations. We then demonstrate via field tests with commercial 5G equipment that in-situ calibration using the proposed method reduces the 1-$\sigma$ DOA estimation error by 46.7%.
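The building block of ML delay estimation across subcarriers is easiest to see with a single path. Assuming white Gaussian noise and an unknown complex gain $\alpha$ in $y_k = \alpha e^{-j2\pi k\Delta f\tau}$, the ML delay maximizes $\left|\sum_k y_k e^{j2\pi k\Delta f\tau}\right|^2$. The sketch below does a grid search under assumed numerology (30 kHz spacing, 120 subcarriers, noiseless data); the paper's super-resolution multipath estimator is more involved.

```python
import cmath
import math

def ml_delay_single_path(y, delta_f, tau_grid):
    """Grid-search ML delay for one path: maximize |sum_k y[k] exp(+j 2 pi k delta_f tau)|^2."""
    def cost(tau):
        return abs(sum(yk * cmath.exp(2j * math.pi * k * delta_f * tau)
                       for k, yk in enumerate(y))) ** 2
    return max(tau_grid, key=cost)

delta_f = 30e3          # assumed subcarrier spacing (Hz)
n_sc = 120              # assumed number of pilot subcarriers
tau_true = 123e-9       # true delay (s)
alpha = 0.5 * cmath.exp(0.3j)
# Noiseless frequency-domain samples, for illustration only.
y = [alpha * cmath.exp(-2j * math.pi * k * delta_f * tau_true) for k in range(n_sc)]
tau_grid = [i * 1e-9 for i in range(501)]  # 0 to 500 ns in 1 ns steps
tau_hat = ml_delay_single_path(y, delta_f, tau_grid)
print(round(tau_hat * 1e9))  # 123
```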

Fast Direct Localization for Millimeter Wave MIMO Systems via Deep ADMM Unfolding

Feb 06, 2023

Abstract: Massive arrays deployed in millimeter-wave systems enable high angular resolution, which in turn facilitates sub-meter localization services. Albeit suboptimal, the most popular localization approach to date has been the so-called two-step procedure, in which triangulation is applied after aggregating the angle-of-arrival (AoA) measurements from the collaborating base stations. This is mainly due to the prohibitive computational cost of existing direct localization approaches in large-scale systems. To address this issue, we propose a deep-unfolding-based fast direct localization solver. First, direct localization is formulated as a joint $l_1$-$l_{2,1}$ norm sparse recovery problem, which is then solved using the alternating direction method of multipliers (ADMM). Next, we develop a deep ADMM unfolding network (DAUN) that learns the ADMM parameter settings from training data, and we propose a position refinement algorithm for DAUN. Finally, simulation results showcase the superiority of the proposed DAUN over the baseline solvers in terms of better localization accuracy, faster convergence, and significantly lower computational complexity.
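ADMM for an $l_1$-$l_{2,1}$ problem alternates a least-squares update with proximal (shrinkage) steps. The sketch below shows only those two proximal operators: element-wise soft thresholding for $\lambda\|x\|_1$ and row-wise group shrinkage for $\lambda\|X\|_{2,1}$. The paper's exact variable splitting is not given in the abstract; in a deep-unfolded solver, per-iteration thresholds such as `lam` are typical candidates for learned parameters, but that is an assumption here.

```python
import math

def soft_threshold(x, lam):
    """Prox of lam * |x|, element-wise; works for real or complex x."""
    mag = abs(x)
    return 0 * x if mag <= lam else (1 - lam / mag) * x

def row_shrink(X, lam):
    """Prox of lam * ||X||_{2,1}: scale each row by max(0, 1 - lam / ||row||_2)."""
    out = []
    for row in X:
        norm = math.sqrt(sum(abs(v) ** 2 for v in row))
        scale = 0.0 if norm <= lam else 1 - lam / norm
        out.append([scale * v for v in row])
    return out

shrunk = row_shrink([[3.0, 4.0], [0.3, 0.4]], 1.0)
print(shrunk)  # first row scaled by 0.8, second row (norm 0.5) zeroed
```

Rows whose energy falls below the threshold are zeroed as a group, which is what enforces row sparsity (for example, few occupied grid positions) in the recovered matrix.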

Link-level simulator for 5G localization

Dec 26, 2022

Abstract: Channel-state-information-based localization in 5G networks is a promising way to obtain more accurate positions than previous communication networks allowed. However, there is no unified and effective platform to support research on 5G localization algorithms. This paper releases a link-level simulator for 5G localization that can depict realistic physical behaviors of 5G positioning signal transmission. Specifically, we first develop a simulation architecture with more elaborate parameter configuration and physical-layer processing. The architecture supports link modeling in the sub-6 GHz and millimeter-wave (mmWave) frequency bands. Subsequently, the critical physical-layer components that determine localization performance are designed and integrated. In particular, we develop a lightweight new-radio channel model and hardware impairment functions modeling the impairments that significantly limit parameter estimation accuracy. Finally, we present three application cases to evaluate the simulator, i.e., two-dimensional mobile terminal localization, mmWave beam sweeping, and beamforming-based angle estimation. The numerical results in the application cases show how the performance of localization algorithms varies under different impairment conditions.
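To make "hardware impairment function" concrete, here is a minimal sketch that perturbs an ideal ULA steering vector with per-element gain and phase errors and compares beamscan (Bartlett) angle estimates. The Gaussian error model, its default standard deviations, and all function names are hypothetical; the simulator's actual impairment models are not described in the abstract.

```python
import cmath
import math
import random

def steering(theta_deg, m, d=0.5):
    """Ideal ULA steering vector (element spacing d in wavelengths)."""
    s = math.sin(math.radians(theta_deg))
    return [cmath.exp(-2j * math.pi * d * k * s) for k in range(m)]

def apply_gain_phase_error(a, gain_std=0.1, phase_std_deg=10.0, seed=0):
    """Hypothetical impairment: independent Gaussian gain and phase error per element."""
    rng = random.Random(seed)
    out = []
    for x in a:
        gain = 1.0 + rng.gauss(0.0, gain_std)
        phase = math.radians(rng.gauss(0.0, phase_std_deg))
        out.append(gain * cmath.exp(1j * phase) * x)
    return out

def beamscan_peak(y, m, grid):
    """Angle on the grid maximizing the Bartlett spectrum |a(theta)^H y|^2."""
    def power(theta):
        return abs(sum(a.conjugate() * v for a, v in zip(steering(theta, m), y))) ** 2
    return max(grid, key=power)

m = 8
grid = [t / 10 for t in range(-900, 901)]  # -90.0 to 90.0 degrees, 0.1-degree steps
ideal = steering(20.0, m)
impaired = apply_gain_phase_error(ideal)
print(beamscan_peak(ideal, m, grid), beamscan_peak(impaired, m, grid))
```

The ideal snapshot peaks exactly at 20 degrees; the impaired one generally does not, which is the kind of bias the simulator is meant to expose.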

Unsupervised Recurrent Federated Learning for Edge Popularity Prediction in Privacy-Preserving Mobile Edge Computing Networks

Jul 06, 2022

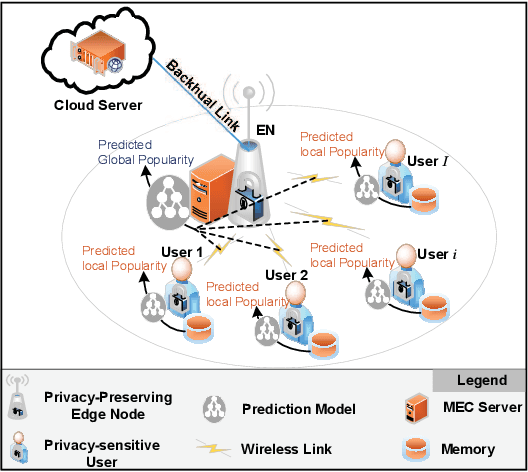

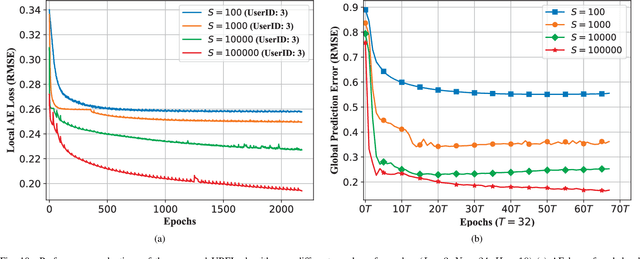

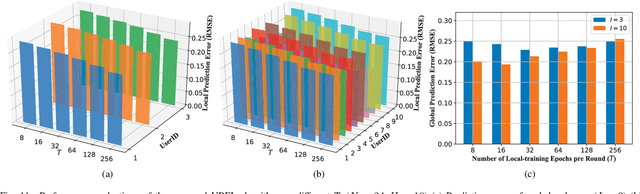

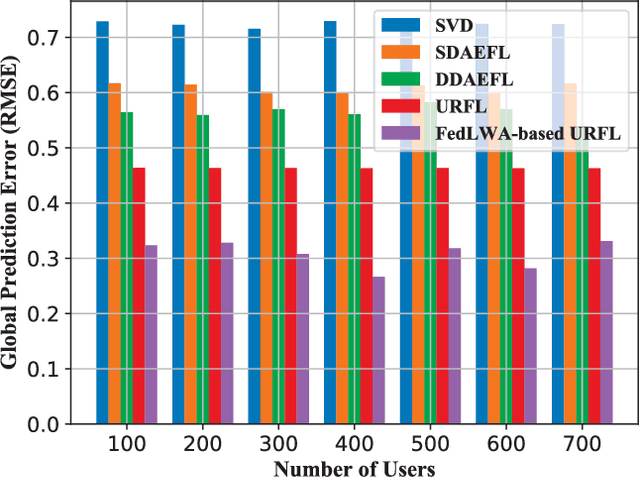

Abstract: Wireless communication is rapidly reshaping entire industry sectors. In particular, mobile edge computing (MEC), as an enabling technology for the industrial Internet of Things (IIoT), brings powerful computing and storage infrastructure closer to mobile terminals and thereby significantly lowers response latency. To reap the benefit of proactive caching at the network edge, precise knowledge of the popularity pattern among end devices is essential. However, the complex and dynamic nature of content popularity over space and time, as well as the data-privacy requirements of many IIoT scenarios, makes this knowledge hard to acquire. In this article, we propose an unsupervised and privacy-preserving popularity prediction framework for MEC-enabled IIoT. The concepts of local and global popularity are introduced, and the time-varying popularity of each user is modelled as a model-free Markov chain. On this basis, a novel unsupervised recurrent federated learning (URFL) algorithm is proposed to predict the distributed popularity while achieving privacy preservation and unsupervised training. Simulations indicate that the proposed framework can enhance prediction accuracy, reducing the root-mean-squared error by up to $60.5\%-68.7\%$. Additionally, both manual labeling and violation of users' data privacy are avoided.
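The abstract does not state how URFL aggregates client models. As an assumption, the sketch below uses the widely known federated averaging (FedAvg) rule: the server forms a sample-weighted mean of the clients' parameter vectors, so only parameters, not raw request logs, leave each device.

```python
def federated_average(local_weights, sample_counts):
    """FedAvg-style aggregation: sample-weighted mean of local parameter vectors."""
    if not local_weights or len(local_weights) != len(sample_counts):
        raise ValueError("need one sample count per client and at least one client")
    total = sum(sample_counts)
    dim = len(local_weights[0])
    return [sum(w[i] * n for w, n in zip(local_weights, sample_counts)) / total
            for i in range(dim)]

# Two hypothetical clients with 100 and 300 local samples.
global_w = federated_average([[0.2, 0.8], [0.6, 0.4]], [100, 300])
print(global_w)
```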

Learning-Aided Beam Prediction in mmWave MU-MIMO Systems for High-Speed Railway

Jun 30, 2022

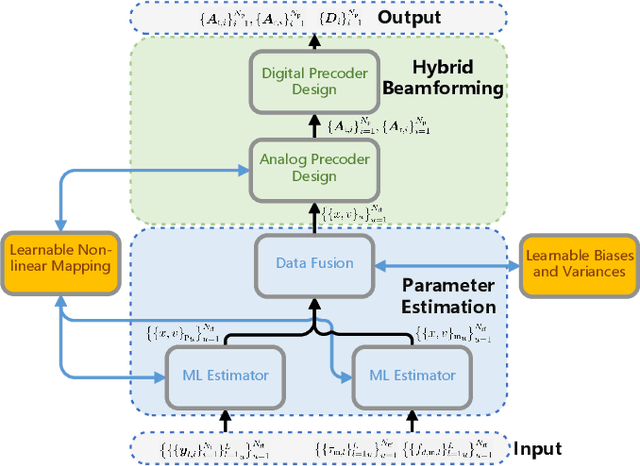

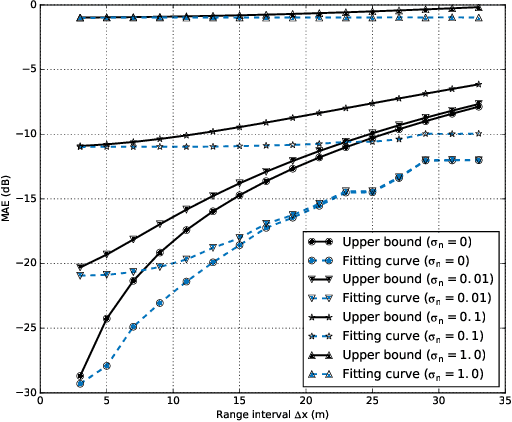

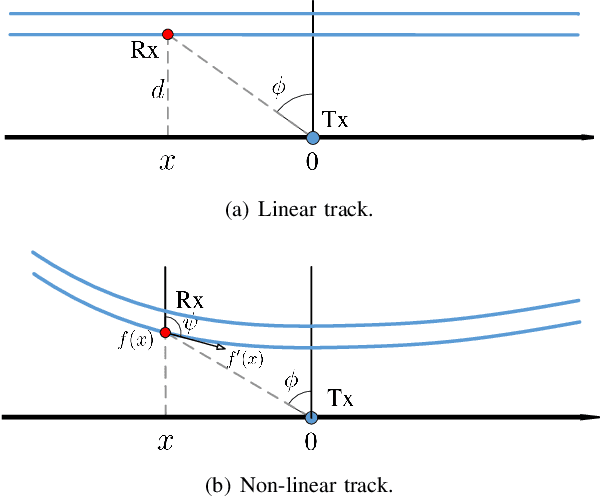

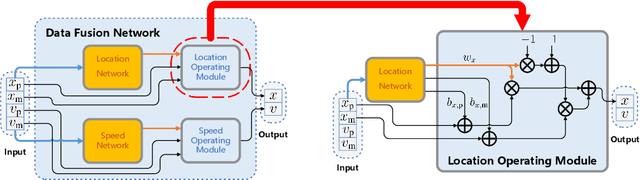

Abstract: Beam alignment and tracking in high-mobility scenarios such as high-speed railway (HSR) are extremely challenging, since fast time-varying channel estimation introduces large overhead and significant time delay. To tackle this challenge, we propose a learning-aided beam prediction scheme for HSR networks, which uses a group of observations to predict the beam directions and channel amplitudes over a future time period with fine time granularity. Concretely, we transform the high-dimensional beam prediction problem into a two-stage task, i.e., a low-dimensional parameter estimation followed by a cascaded hybrid beamforming operation. In the first stage, the location and speed of a terminal are estimated with the maximum likelihood criterion, and a data-driven fusion module is designed to improve the final estimation accuracy and robustness. Then, the probable future beam directions and channel amplitudes are predicted based on HSR scenario priors, including the deterministic trajectory, motion model, and channel model. Furthermore, we incorporate a learnable non-linear mapping module into the overall beam prediction to accommodate non-linear tracks. Both proposed learnable modules are model-based and highly interpretable. Compared with the existing beam management scheme, the proposed beam prediction incurs (near) zero overhead and time delay. Simulation results verify the effectiveness of the proposed scheme.

* 14 pages, 10 figures
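Once location and speed are estimated, a deterministic trajectory turns beam prediction into geometry. The minimal sketch below assumes a straight track along the x-axis, constant speed, a 2D layout, and angles measured from the +x axis; it ignores the learnable non-linear mapping and channel amplitude prediction described above, and the function name is hypothetical.

```python
import math

def predict_beam_angles(x0, v, horizon_s, step_s, bs_xy, track_y=0.0):
    """Predicted beam directions (degrees from +x) from the BS to a train on a straight track."""
    n = int(round(horizon_s / step_s))
    angles = []
    for k in range(n + 1):
        x = x0 + v * k * step_s  # constant-speed motion model
        dx, dy = x - bs_xy[0], track_y - bs_xy[1]
        angles.append(math.degrees(math.atan2(dy, dx)))
    return angles

# Train at x0 = -50 m moving at 100 m/s; BS 50 m off the track.
angles = predict_beam_angles(x0=-50.0, v=100.0, horizon_s=1.0, step_s=0.5, bs_xy=(0.0, -50.0))
print([round(a, 6) for a in angles])  # [135.0, 90.0, 45.0]
```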

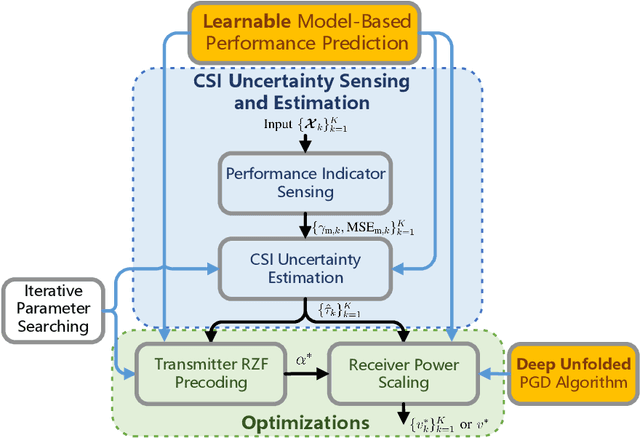

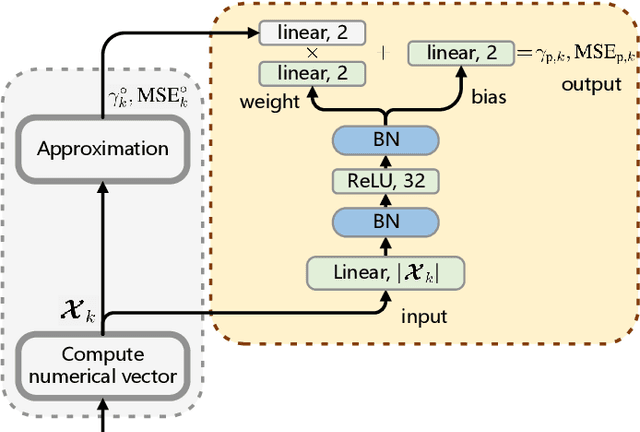

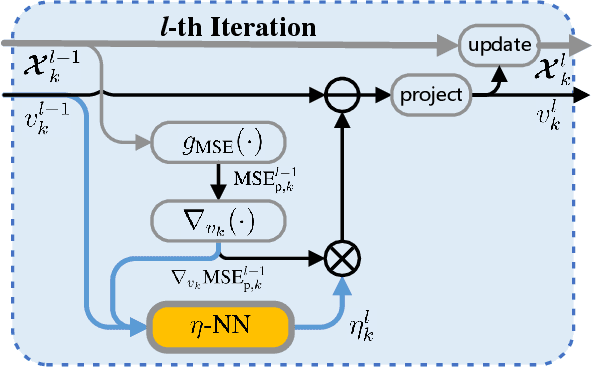

Learnable Model-Driven Performance Prediction and Optimization for Imperfect MIMO System: Framework and Application

Jun 30, 2022

Abstract: State-of-the-art schemes for performance analysis and optimization of multiple-input multiple-output (MIMO) systems generally degrade or even become invalid in dynamic, complex scenarios with unknown interference and channel state information (CSI) uncertainty. To adapt to these challenging settings and better accomplish such network auto-tuning tasks, we propose a generic learnable model-driven framework in this paper. To explain how the proposed framework works, we consider regularized zero-forcing precoding as a usage instance and design a lightweight neural network that refines the prediction of sum rate and detection error based on coarse model-driven approximations. Then, we estimate the CSI uncertainty on the learned predictor iteratively and, on this basis, optimize the transmit regularization term and the subsequent receive power scaling factors. A deep unfolded projected gradient descent based algorithm is proposed for power scaling, which achieves a favorable trade-off between convergence rate and robustness.
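Deep unfolding of projected gradient descent (PGD) fixes the number of iterations and treats per-iteration quantities, typically step sizes, as trainable. The abstract does not give the power-scaling objective, so this sketch swaps in a simple assumed problem: maximize $\sum_i \log_2(1 + g_i p_i)$ subject to $p_i \ge 0$ and $\sum_i p_i \le P$. The `step_sizes` list stands in for what an unfolded network would learn.

```python
import math

def project_power(p, p_max):
    """Euclidean projection onto {p >= 0, sum(p) <= p_max}."""
    clipped = [max(x, 0.0) for x in p]
    if sum(clipped) <= p_max:
        return clipped
    # Budget active: project onto the simplex {p >= 0, sum(p) = p_max} (sort-based method).
    u = sorted(p, reverse=True)
    cumsum, theta = 0.0, 0.0
    for j, uj in enumerate(u, start=1):
        cumsum += uj
        t = (cumsum - p_max) / j
        if uj - t > 0:
            theta = t
    return [max(x - theta, 0.0) for x in p]

def pgd_power(gains, p_max, step_sizes):
    """Projected gradient ascent on sum_i log2(1 + g_i p_i), one step size per iteration."""
    n = len(gains)
    p = [p_max / n] * n
    for mu in step_sizes:
        grad = [g / ((1.0 + g * pi) * math.log(2)) for g, pi in zip(gains, p)]
        p = project_power([pi + mu * gi for pi, gi in zip(p, grad)], p_max)
    return p

p = pgd_power([2.0, 0.5], 1.0, [0.5] * 20)
print([round(x, 6) for x in p])  # [1.0, 0.0], matching water-filling for these gains
```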
