Fan Meng

Physics-Informed Residual Neural Ordinary Differential Equations for Enhanced Tropical Cyclone Intensity Forecasting

Mar 09, 2025

Abstract: Accurate tropical cyclone (TC) intensity prediction is crucial for mitigating storm hazards, yet its complex dynamics pose challenges to traditional methods. Here, we introduce a Physics-Informed Residual Neural Ordinary Differential Equation (PIR-NODE) model to precisely forecast TC intensity evolution. This model leverages the powerful non-linear fitting capabilities of deep learning, integrates residual connections to enhance model depth and training stability, and explicitly models the continuous temporal evolution of TC intensity using Neural ODEs. Experimental results on the SHIPS dataset demonstrate that the PIR-NODE model achieves a significant improvement in 24-hour intensity prediction accuracy compared to traditional statistical models and benchmark deep learning methods, with a 25.2% reduction in the root mean square error (RMSE) and a 19.5% increase in R-squared (R2) relative to a baseline neural network. Crucially, the residual structure effectively preserves initial state information, and the model exhibits robust generalization capabilities. This study details the PIR-NODE model architecture, physics-informed integration strategies, and comprehensive experimental validation, revealing the substantial potential of deep learning techniques in predicting complex geophysical systems and laying the foundation for future refined TC forecasting research.
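As a rough illustration of how a residual connection can combine with a Neural ODE, the sketch below integrates a small vector field with fixed-step Euler and adds the accumulated change to the initial state. The `tanh` network, the Euler solver, the layer sizes, the example state, and the names `vector_field` and `residual_node_forecast` are all assumptions made for illustration; the abstract does not specify the architecture, the solver, or how the physics-informed terms enter.

```python
import numpy as np

def vector_field(h, t, params):
    """Tiny MLP f(h, t) giving dh/dt; a stand-in for the learned dynamics."""
    W1, b1, W2, b2 = params
    z = np.tanh(W1 @ np.append(h, t) + b1)  # time is fed as an extra input
    return W2 @ z + b2

def residual_node_forecast(h0, params, t_end=24.0, n_steps=24):
    """Return h0 plus the integral of f over [0, t_end] (fixed-step Euler)."""
    dt = t_end / n_steps
    delta = np.zeros_like(h0)           # accumulated change relative to h0
    for k in range(n_steps):
        delta = delta + dt * vector_field(h0 + delta, k * dt, params)
    return h0 + delta                   # the skip path keeps h0 in the output

def rmse(pred, target):
    """Root mean square error between two arrays."""
    return float(np.sqrt(np.mean((pred - target) ** 2)))

rng = np.random.default_rng(0)
dim, hidden = 3, 8                      # hypothetical sizes
params = (0.1 * rng.standard_normal((hidden, dim + 1)), np.zeros(hidden),
          0.01 * rng.standard_normal((dim, hidden)), np.zeros(dim))
h0 = np.array([45.0, 0.5, -0.2])        # hypothetical state: intensity plus two predictors
h24 = residual_node_forecast(h0, params)

# With an all-zero vector field the forecast reduces to the initial state.
zero_params = tuple(np.zeros_like(p) for p in params)
h24_zero = residual_node_forecast(h0, zero_params)
```

In this form the network only has to learn the change over the forecast window, which is one way to read the statement that the residual structure preserves initial state information.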

Joint Optimization of Data- and Model-Driven Probing Beams and Beam Predictor

Sep 27, 2024

Abstract: Hierarchical search in millimeter-wave (mmWave) communications incurs significant beam training overhead and delay, especially in a dynamic environment. Deep learning-enabled beam prediction is a promising way to significantly mitigate the overhead and delay by efficiently utilizing the site-specific channel prior. In this work, we propose to jointly optimize a data- and model-driven probe beam module and a cascaded data-driven beam predictor, under the constraints that the probing and communication beams are restricted to the manifold space of a uniform planar array and to the quantization of the phase modulator. First, the probe beam module senses the mmWave channel with a complex-valued neural network and outputs the corresponding RSRPs of the probe beams. Second, the beam predictor estimates the RSRPs in the entire beamspace to minimize the prediction cross entropy and selects the optimal beam with the maximum RSRP value for data transmission. Additionally, we propose to add noise to the phase variables in the probe beam module to improve robustness against quantization error. Simulation results show the effectiveness of our proposed scheme.
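The effect of phase quantization on a beam, and the idea of perturbing the phase variables with noise, can be sketched as follows. This is a minimal example under several assumptions that are not taken from the abstract: a uniform linear array instead of the planar array, a single-path channel, 2-bit phase shifters, and Gaussian noise whose standard deviation matches that of the uniform rounding error. All function names are hypothetical.

```python
import numpy as np

def steering_vector(n_ant, angle_rad):
    """Half-wavelength uniform linear array response (unit norm)."""
    n = np.arange(n_ant)
    return np.exp(1j * np.pi * n * np.sin(angle_rad)) / np.sqrt(n_ant)

def quantize_phase(phase, n_bits):
    """Round each phase to the nearest of 2**n_bits uniformly spaced levels."""
    step = 2 * np.pi / 2 ** n_bits
    return np.round(phase / step) * step

def beam_from_phase(phase):
    """Constant-modulus beamforming vector built from per-antenna phases."""
    return np.exp(1j * phase) / np.sqrt(phase.size)

def rsrp(beam, channel):
    """Noise-free received power |w^H h|^2 for beam w and channel h."""
    return float(np.abs(np.vdot(beam, channel)) ** 2)

rng = np.random.default_rng(0)
n_ant, n_bits = 16, 2
channel = steering_vector(n_ant, np.deg2rad(20.0))   # single-path channel
phase = np.angle(channel)                            # ideal matched phases

p_ideal = rsrp(beam_from_phase(phase), channel)
p_quant = rsrp(beam_from_phase(quantize_phase(phase, n_bits)), channel)

# Training-time surrogate (assumed): perturb the phases with noise whose
# spread is comparable to the rounding error, so learned beams tolerate it.
sigma = (2 * np.pi / 2 ** n_bits) / np.sqrt(12)      # std of uniform rounding error
noisy_phase = phase + sigma * rng.standard_normal(n_ant)
p_noisy = rsrp(beam_from_phase(noisy_phase), channel)
```

Comparing `p_quant` and `p_noisy` against `p_ideal` gives a feel for how much beamforming gain is lost to rounding and how closely the injected noise mimics that loss.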

Entropy-based Probing Beam Selection and Beam Prediction via Deep Learning

Jan 03, 2024

Abstract: Hierarchical beam search in mmWave communications incurs substantial training overhead, necessitating deep learning-enabled beam predictions to effectively leverage channel priors and mitigate this overhead. In this study, we introduce a comprehensive probabilistic model of power distribution in beamspace, and formulate the joint optimization problem of probing beam selection and probabilistic beam prediction as an entropy minimization problem. Then, we propose a greedy scheme to iteratively and alternately solve this problem, where a transformer-based beam predictor is trained to estimate the conditional power distribution based on the probing beams and user location within each iteration, and the trained predictor selects an unmeasured beam that minimizes the entropy of remaining beams. To further reduce the number of interactions and the computational complexity of the iterative scheme, we propose a two-stage probing beam selection scheme. Firstly, probing beams are selected from a location-specific codebook designed by an entropy-based criterion, and predictions are made with corresponding feedback. Secondly, the optimal beam is identified using additional probing beams with the highest predicted power values. Simulation results demonstrate the superiority of the proposed schemes compared to hierarchical beam search and beam prediction with uniform probing beams.
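The greedy rule of picking the unmeasured beam that minimizes the entropy of the remaining beams can be illustrated with a simple surrogate. The sketch below replaces the transformer-based predictor with a jointly Gaussian model of beam powers, for which the conditional entropy has a closed form. The Gaussian model, the exponential correlation between neighboring beams, the measurement noise level, and all function names are assumptions for illustration, not the method described in the abstract.

```python
import numpy as np

def conditional_cov(cov, measured, noise_var=1e-3):
    """Covariance of the unmeasured beams given noisy measurements of `measured`."""
    rest = [i for i in range(cov.shape[0]) if i not in measured]
    S_rr = cov[np.ix_(rest, rest)]
    if not measured:
        return S_rr
    S_mm = cov[np.ix_(measured, measured)] + noise_var * np.eye(len(measured))
    S_rm = cov[np.ix_(rest, measured)]
    return S_rr - S_rm @ np.linalg.solve(S_mm, S_rm.T)  # Schur complement

def gaussian_entropy(cov):
    """Differential entropy (nats) of a Gaussian with covariance `cov`."""
    n = cov.shape[0]
    _, logdet = np.linalg.slogdet(cov)
    return 0.5 * (n * np.log(2 * np.pi * np.e) + logdet)

def greedy_probe_selection(cov, n_probe):
    """Add, one at a time, the beam that leaves the least entropy in the rest."""
    measured = []
    for _ in range(n_probe):
        candidates = [i for i in range(cov.shape[0]) if i not in measured]
        scores = [gaussian_entropy(conditional_cov(cov, measured + [c]))
                  for c in candidates]
        measured.append(candidates[int(np.argmin(scores))])
    return measured

# Hypothetical prior: neighboring beams in an 8-beam codebook are correlated.
n_beams = 8
idx = np.arange(n_beams)
cov = np.exp(-np.abs(idx[:, None] - idx[None, :]) / 2.0)

probes = greedy_probe_selection(cov, n_probe=3)
remaining_entropy = gaussian_entropy(conditional_cov(cov, probes))
```

In the paper's setting the entropy would come from the learned conditional power distribution rather than from a fixed covariance, and the user location would be an additional input.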

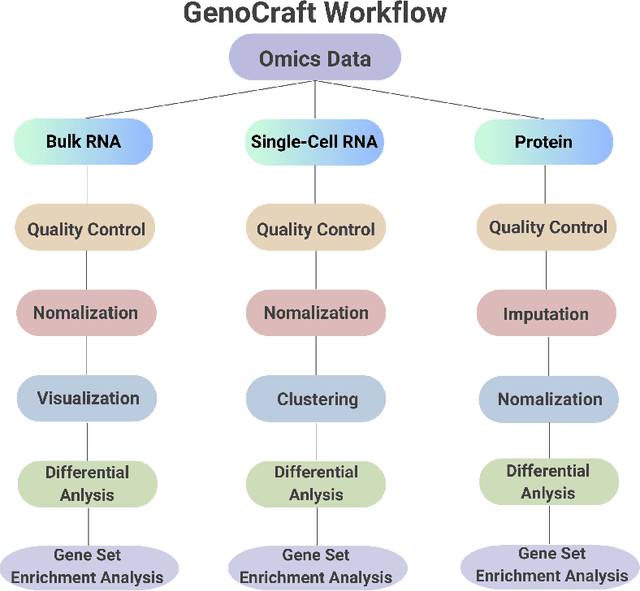

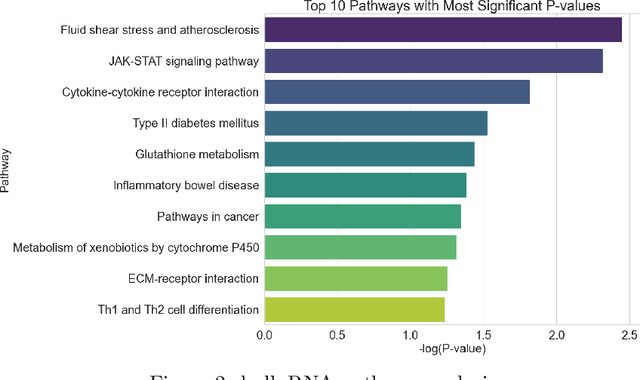

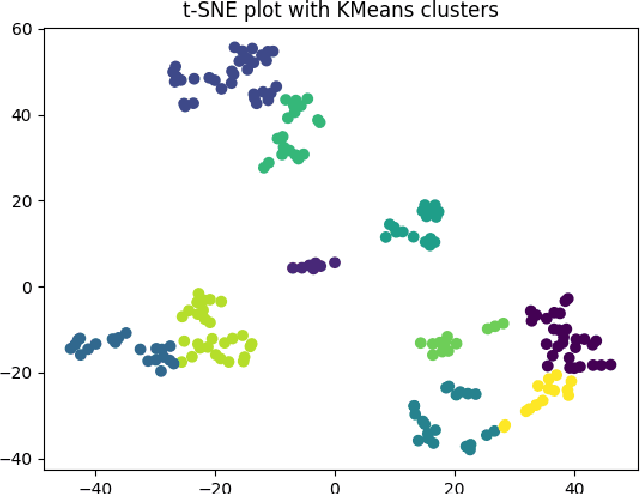

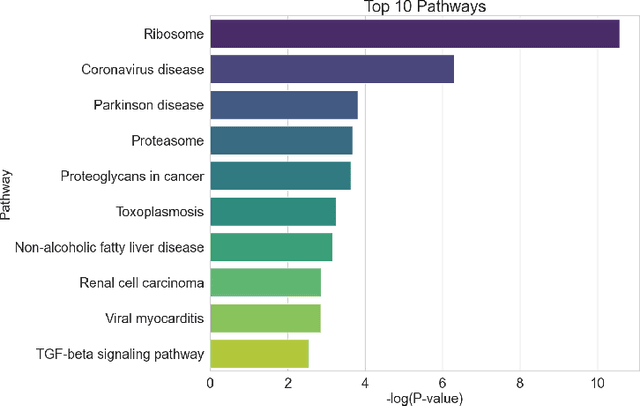

GenoCraft: A Comprehensive, User-Friendly Web-Based Platform for High-Throughput Omics Data Analysis and Visualization

Dec 21, 2023

Abstract: The surge in high-throughput omics data has reshaped the landscape of biological research, underlining the need for powerful, user-friendly data analysis and interpretation tools. This paper presents GenoCraft, a web-based comprehensive software solution designed to handle the entire pipeline of omics data processing. GenoCraft offers a unified platform featuring advanced bioinformatics tools, covering all aspects of omics data analysis. It encompasses a range of functionalities, such as normalization, quality control, differential analysis, network analysis, pathway analysis, and diverse visualization techniques. This software makes state-of-the-art omics data analysis more accessible to a wider range of users. With GenoCraft, researchers and data scientists have access to an array of cutting-edge bioinformatics tools under a user-friendly interface, making it a valuable resource for managing and analyzing large-scale omics data. The API with an interactive web interface is publicly available at https://genocraft.stanford.edu/. We also release all the code at https://github.com/futianfan/GenoCraft.

Learning-Aided Beam Prediction in mmWave MU-MIMO Systems for High-Speed Railway

Jun 30, 2022

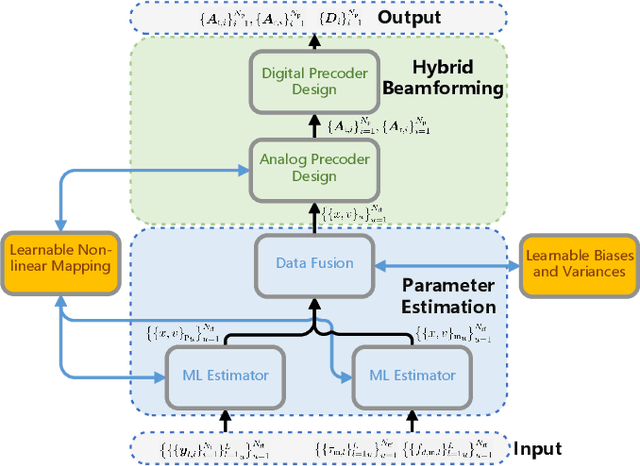

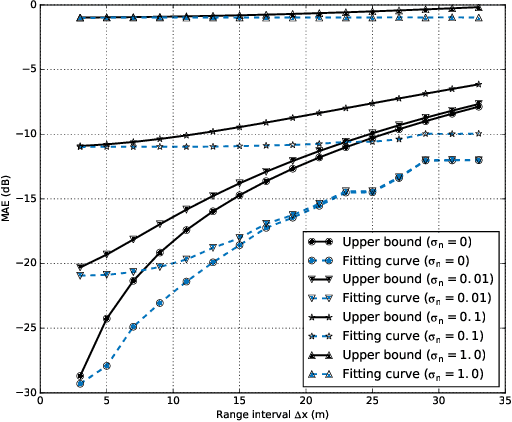

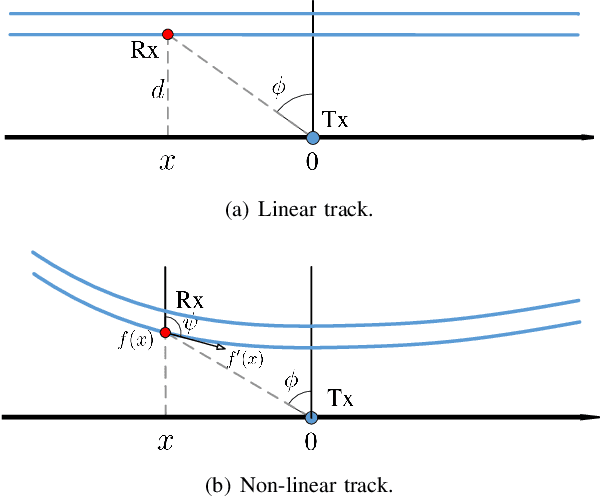

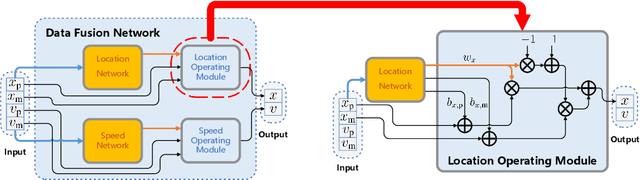

Abstract: The problem of beam alignment and tracking in high mobility scenarios such as high-speed railway (HSR) becomes extremely challenging, since large overhead cost and significant time delay are introduced for fast time-varying channel estimation. To tackle this challenge, we propose a learning-aided beam prediction scheme for HSR networks, which predicts the beam directions and the channel amplitudes within a period of future time with fine time granularity, using a group of observations. Concretely, we transform the problem of high-dimensional beam prediction into a two-stage task, i.e., a low-dimensional parameter estimation and a cascaded hybrid beamforming operation. In the first stage, the location and speed of a certain terminal are estimated by the maximum likelihood criterion, and a data-driven data fusion module is designed to improve the final estimation accuracy and robustness. Then, the probable future beam directions and channel amplitudes are predicted, based on the HSR scenario priors including deterministic trajectory, motion model, and channel model. Furthermore, we incorporate a learnable non-linear mapping module into the overall beam prediction to allow non-linear tracks. Both of the proposed learnable modules are model-based and have good interpretability. Compared to the existing beam management scheme, the proposed beam prediction has (near) zero overhead cost and time delay. Simulation results verify the effectiveness of the proposed scheme.

* 14 pages, 10 figures

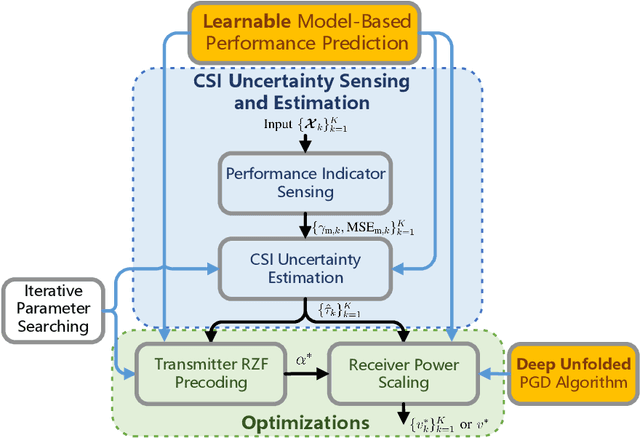

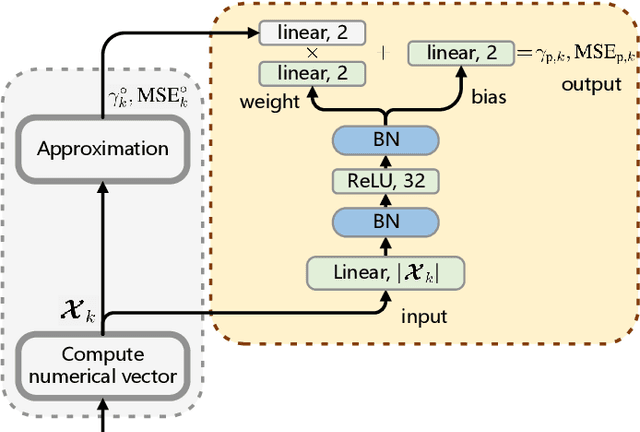

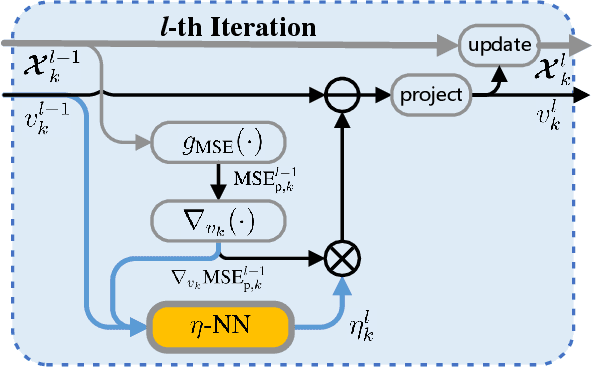

Learnable Model-Driven Performance Prediction and Optimization for Imperfect MIMO System: Framework and Application

Jun 30, 2022

Abstract: State-of-the-art schemes for performance analysis and optimization of multiple-input multiple-output systems generally experience degradation or even become invalid in dynamic complex scenarios with unknown interference and channel state information (CSI) uncertainty. To adapt to the challenging settings and better accomplish these network auto-tuning tasks, we propose a generic learnable model-driven framework in this paper. To explain how the proposed framework works, we consider regularized zero-forcing precoding as a usage instance and design a light-weight neural network for refined prediction of sum rate and detection error based on coarse model-driven approximations. Then, we estimate the CSI uncertainty on the learned predictor in an iterative manner and, on this basis, optimize the transmit regularization term and subsequent receive power scaling factors. A deep unfolded projected gradient descent based algorithm is proposed for power scaling, which achieves a favorable trade-off between convergence rate and robustness.
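For readers unfamiliar with the usage instance, the following sketch shows textbook regularized zero-forcing (RZF) precoding and how the sum rate depends on the regularization term. It assumes perfect CSI, single-antenna users, an i.i.d. Rayleigh channel, and a plain grid search over the regularization value; the learned predictor, the CSI-uncertainty estimation, and the unfolded power-scaling algorithm from the abstract are not reproduced. Function and variable names are hypothetical.

```python
import numpy as np

def rzf_precoder(H, alpha, total_power=1.0):
    """RZF precoder proportional to H^H (H H^H + alpha I)^{-1}, scaled to total_power."""
    K = H.shape[0]
    W = H.conj().T @ np.linalg.inv(H @ H.conj().T + alpha * np.eye(K))
    return W * np.sqrt(total_power / np.trace(W @ W.conj().T).real)

def sum_rate(H, W, noise_var):
    """Sum of log2(1 + SINR_k) over users, assuming perfect CSI."""
    G = np.abs(H @ W) ** 2                  # G[k, j]: power of stream j at user k
    signal = np.diag(G)
    interference = G.sum(axis=1) - signal
    return float(np.sum(np.log2(1.0 + signal / (interference + noise_var))))

rng = np.random.default_rng(0)
K, M, noise_var = 4, 8, 0.1                 # users, transmit antennas, noise power
H = (rng.standard_normal((K, M)) + 1j * rng.standard_normal((K, M))) / np.sqrt(2)

# Sweep the regularization term and keep the value with the highest sum rate.
alphas = np.logspace(-3, 1, 30)
rates = [sum_rate(H, rzf_precoder(H, a), noise_var) for a in alphas]
best_alpha = float(alphas[int(np.argmax(rates))])
```

As the regularization term approaches zero the precoder tends to plain zero-forcing, which removes inter-user interference at the cost of transmit power; larger values trade residual interference for a stronger desired signal.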

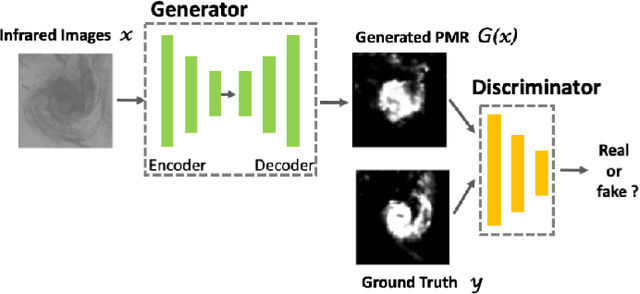

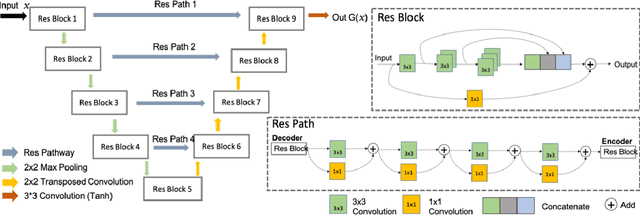

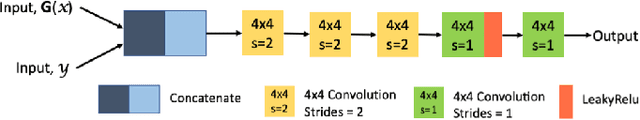

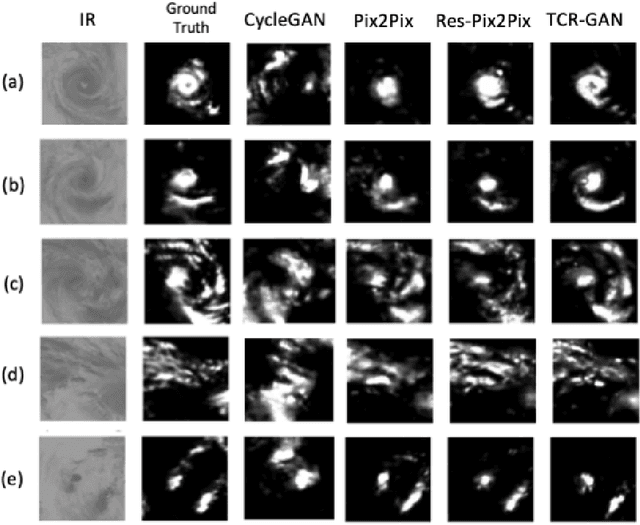

TCR-GAN: Predicting tropical cyclone passive microwave rainfall using infrared imagery via generative adversarial networks

Jan 14, 2022

Abstract: Tropical cyclones (TC) generally carry large amounts of water vapor and can cause large-scale extreme rainfall. Passive microwave rainfall (PMR) estimation of TC with high spatial and temporal resolution is crucial for disaster warning of TC, but remains a challenging problem due to the low temporal resolution of microwave sensors. This study attempts to solve this problem by directly forecasting PMR from satellite infrared (IR) images of TC. We develop a generative adversarial network (GAN), named TCR-GAN, to convert IR images into PMR and establish the mapping relationship between TC cloud-top brightness temperature and PMR. Meanwhile, a new benchmark dataset, the Dataset of Tropical Cyclone IR-to-Rainfall Prediction (TCIRRP), was established, which is expected to advance the development of artificial intelligence in this direction. Experimental results show that the algorithm can effectively extract key features from IR. The end-to-end deep learning approach shows potential as a technique that can be applied globally and provides a new perspective on tropical cyclone precipitation prediction via satellite, which is expected to provide important insights for real-time visualization of TC rainfall globally in operations.
