Yihan Hu

Ratio-Variance Regularized Policy Optimization for Efficient LLM Fine-tuning

Jan 06, 2026

Abstract:On-policy reinforcement learning (RL), particularly Proximal Policy Optimization (PPO) and Group Relative Policy Optimization (GRPO), has become the dominant paradigm for fine-tuning large language models (LLMs). While policy ratio clipping stabilizes training, this heuristic hard constraint incurs a fundamental cost: it indiscriminately truncates gradients from high-return yet high-divergence actions, suppressing rare but highly informative "eureka moments" in complex reasoning. Moreover, once data becomes slightly stale, hard clipping renders it unusable, leading to severe sample inefficiency. In this work, we revisit the trust-region objective in policy optimization and show that explicitly constraining the variance (second central moment) of the policy ratio provides a principled and smooth relaxation of hard clipping. This distributional constraint stabilizes policy updates while preserving gradient signals from valuable trajectories. Building on this insight, we propose $R^2VPO$ (Ratio-Variance Regularized Policy Optimization), a novel primal-dual framework that supports stable on-policy learning and enables principled off-policy data reuse by dynamically reweighting stale samples rather than discarding them. We extensively evaluate $R^2VPO$ on fine-tuning state-of-the-art LLMs, including DeepSeek-Distill-Qwen-1.5B and the openPangu-Embedded series (1B and 7B), across challenging mathematical reasoning benchmarks. Experimental results show that $R^2VPO$ consistently achieves superior asymptotic performance, with average relative gains of up to 17% over strong clipping-based baselines, while requiring approximately 50% fewer rollouts to reach convergence. These findings establish ratio-variance control as a promising direction for improving both stability and data efficiency in RL-based LLM alignment.
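
The abstract does not give the exact objective, so the following is only a minimal sketch of the general idea under stated assumptions: an unclipped importance-weighted surrogate with a penalty on the variance of the policy ratio, plus a dual-ascent step on the penalty weight. The function names, the multiplier `lam`, the variance budget `delta`, and the step size `eta` are all hypothetical and not taken from the paper.

```python
import math


def ratio_variance_surrogate(logp_new, logp_old, advantages, lam=1.0):
    """Return (loss, mean_ratio, ratio_variance) for one batch.

    Assumed form (not the paper's stated objective):
        loss = -mean(ratio * advantage) + lam * Var(ratio)
    """
    ratios = [math.exp(n - o) for n, o in zip(logp_new, logp_old)]
    count = len(ratios)
    mean_ratio = sum(ratios) / count
    # Second central moment of the policy ratio over the batch.
    variance = sum((r - mean_ratio) ** 2 for r in ratios) / count
    surrogate = sum(r * a for r, a in zip(ratios, advantages)) / count
    return -surrogate + lam * variance, mean_ratio, variance


def update_multiplier(lam, variance, delta, eta=0.1):
    """Hypothetical dual-ascent step: raise lam when the variance exceeds delta."""
    return max(0.0, lam + eta * (variance - delta))


# Identical policies give ratios of exactly 1, so the penalty vanishes.
loss, mean_ratio, variance = ratio_variance_surrogate(
    [-1.0, -2.0, -0.5], [-1.0, -2.0, -0.5], [1.0, -1.0, 3.0]
)
```

Unlike hard clipping, no sample's gradient is zeroed out in this sketch; samples with large ratios still contribute, and the penalty acts on the batch as a whole.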

EasyOmnimatte: Taming Pretrained Inpainting Diffusion Models for End-to-End Video Layered Decomposition

Dec 26, 2025

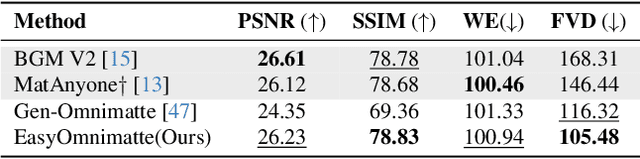

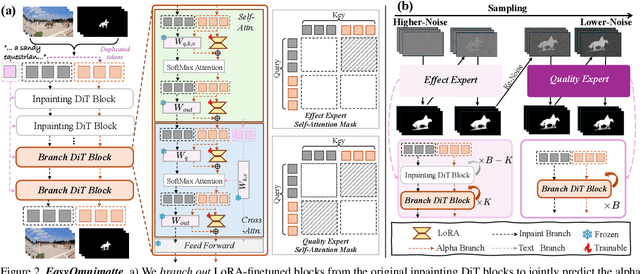

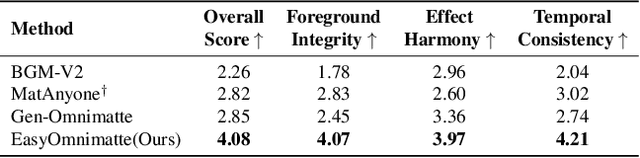

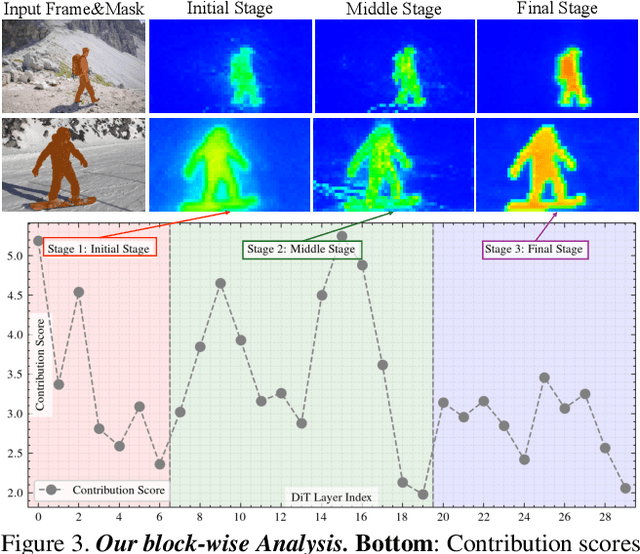

Abstract:Existing video omnimatte methods typically rely on slow, multi-stage, or inference-time optimization pipelines that fail to fully exploit powerful generative priors, producing suboptimal decompositions. Our key insight is that, if a video inpainting model can be finetuned to remove the foreground-associated effects, then it must be inherently capable of perceiving these effects, and hence can also be finetuned for the complementary task: foreground layer decomposition with associated effects. However, although naïvely finetuning the inpainting model with LoRA applied to all blocks can produce high-quality alpha mattes, it fails to capture associated effects. Our systematic analysis reveals this arises because effect-related cues are primarily encoded in specific DiT blocks and become suppressed when LoRA is applied across all blocks. To address this, we introduce EasyOmnimatte, the first unified, end-to-end video omnimatte method. Concretely, we finetune a pretrained video inpainting diffusion model to learn dual complementary experts while keeping its original weights intact: an Effect Expert, where LoRA is applied only to effect-sensitive DiT blocks to capture the coarse structure of the foreground and associated effects, and a fully LoRA-finetuned Quality Expert learns to refine the alpha matte. During sampling, Effect Expert is used for denoising at early, high-noise steps, while Quality Expert takes over at later, low-noise steps. This design eliminates the need for two full diffusion passes, significantly reducing computational cost without compromising output quality. Ablation studies validate the effectiveness of this Dual-Expert strategy. Experiments demonstrate that EasyOmnimatte sets a new state-of-the-art for video omnimatte and enables various downstream tasks, significantly outperforming baselines in both quality and efficiency.
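
The hand-off between the two experts during sampling can be illustrated with a small sketch. It assumes a single switch point expressed as a fraction of the denoising steps; the abstract does not state where the switch happens, so `switch_fraction`, `pick_expert`, and `sample` are hypothetical names, and the experts are stand-in callables rather than real diffusion models.

```python
def pick_expert(step, num_steps, switch_fraction=0.5):
    """Return which expert denoises at `step` (step 0 is the highest-noise step)."""
    if not 0 <= step < num_steps:
        raise ValueError("step must be in [0, num_steps)")
    # Early, high-noise steps go to the Effect Expert; later ones to the Quality Expert.
    return "effect" if step < switch_fraction * num_steps else "quality"


def sample(latent, num_steps, experts, switch_fraction=0.5):
    """Run one denoising pass, calling exactly one expert per step."""
    for step in range(num_steps):
        expert = experts[pick_expert(step, num_steps, switch_fraction)]
        latent = expert(latent, step)
    return latent


# Stand-in experts that only record which one was called.
calls = []
experts = {
    "effect": lambda x, t: calls.append("effect") or x,
    "quality": lambda x, t: calls.append("quality") or x,
}
sample(0.0, 4, experts)
```

Because each step calls only one expert, the total number of network evaluations equals that of a single diffusion pass, which is the cost saving the abstract describes.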

S2GO: Streaming Sparse Gaussian Occupancy Prediction

Jun 05, 2025

Abstract:Despite the demonstrated efficiency and performance of sparse query-based representations for perception, state-of-the-art 3D occupancy prediction methods still rely on voxel-based or dense Gaussian-based 3D representations. However, dense representations are slow, and they lack flexibility in capturing the temporal dynamics of driving scenes. Distinct from prior work, we instead summarize the scene into a compact set of 3D queries which are propagated through time in an online, streaming fashion. These queries are then decoded into semantic Gaussians at each timestep. We couple our framework with a denoising rendering objective to guide the queries and their constituent Gaussians in effectively capturing scene geometry. Owing to its efficient, query-based representation, S2GO achieves state-of-the-art performance on the nuScenes and KITTI occupancy benchmarks, outperforming prior art (e.g., GaussianWorld) by 1.5 IoU with 5.9x faster inference.

Progressive Inertial Poser: Progressive Real-Time Kinematic Chain Estimation for 3D Full-Body Pose from Three IMU Sensors

May 08, 2025

Abstract:Motion capture systems that support full-body virtual representation are of key significance for virtual reality. Compared to vision-based systems, full-body pose estimation from sparse tracking signals is not limited by environmental conditions or recording range. However, previous works either require additional sensors worn on the pelvis and lower body or rely on external visual sensors to obtain global positions of key joints. To improve the practicality of the technology for virtual reality applications, we estimate full-body poses using only inertial data obtained from three Inertial Measurement Unit (IMU) sensors worn on the head and wrists, thereby reducing the complexity of the hardware system. In this work, we propose a method called Progressive Inertial Poser (ProgIP) for human pose estimation, which combines neural network estimation with a human dynamics model, considers the hierarchical structure of the kinematic chain, and employs a multi-stage progressive network estimation with increased depth to reconstruct full-body motion in real time. The encoder combines Transformer Encoder and bidirectional LSTM (TE-biLSTM) to flexibly capture the temporal dependencies of the inertial sequence, while the decoder based on multi-layer perceptrons (MLPs) transforms high-dimensional features and accurately projects them onto Skinned Multi-Person Linear (SMPL) model parameters. Quantitative and qualitative experimental results on multiple public datasets show that our method outperforms state-of-the-art methods with the same inputs, and is comparable to recent works using six IMU sensors.

DCEdit: Dual-Level Controlled Image Editing via Precisely Localized Semantics

Mar 21, 2025

Abstract:This paper presents a novel approach to improving text-guided image editing using diffusion-based models. The text-guided image editing task poses the key challenge of precisely locating and editing the target semantics, and previous methods fall short in this respect. Our method introduces a Precise Semantic Localization strategy that leverages visual and textual self-attention to enhance the cross-attention map, which can serve as a regional cue to improve editing performance. We then propose a Dual-Level Control mechanism for incorporating regional cues at both feature and latent levels, offering fine-grained control for more precise edits. To fully compare our method with other DiT-based approaches, we construct the RW-800 benchmark, featuring high-resolution images, long descriptive texts, real-world images, and a new text editing task. Experimental results on the popular PIE-Bench and RW-800 benchmarks demonstrate the superior performance of our approach in preserving background and providing accurate edits.

DeepFRC: An End-to-End Deep Learning Model for Functional Registration and Classification

Jan 30, 2025

Abstract:Functional data analysis (FDA) is essential for analyzing continuous, high-dimensional data, yet existing methods often decouple functional registration and classification, limiting their efficiency and performance. We present DeepFRC, an end-to-end deep learning framework that unifies these tasks within a single model. Our approach incorporates an alignment module that learns time warping functions via elastic function registration and a learnable basis representation module for dimensionality reduction on aligned data. This integration enhances both alignment accuracy and predictive performance. Theoretical analysis establishes that DeepFRC achieves low misalignment and generalization error, while simulations elucidate the progression of registration, reconstruction, and classification during training. Experiments on real-world datasets demonstrate that DeepFRC consistently outperforms state-of-the-art methods, particularly in addressing complex registration challenges. Code is available at: https://github.com/Drivergo-93589/DeepFRC.

Memory Efficient Matting with Adaptive Token Routing

Dec 17, 2024

Abstract:Transformer-based models have recently achieved outstanding performance in image matting. However, their application to high-resolution images remains challenging due to the quadratic complexity of global self-attention. To address this issue, we propose MEMatte, a memory-efficient matting framework for processing high-resolution images. MEMatte incorporates a router before each global attention block, directing informative tokens to the global attention while routing other tokens to a Lightweight Token Refinement Module (LTRM). Specifically, the router employs a local-global strategy to predict the routing probability of each token, and the LTRM utilizes efficient modules to simulate global attention. Additionally, we introduce a Batch-constrained Adaptive Token Routing (BATR) mechanism, which allows each router to dynamically route tokens based on image content and the stages of attention block in the network. Furthermore, we construct an ultra high-resolution image matting dataset, UHR-395, comprising 35,500 training images and 1,000 test images, with an average resolution of $4872\times6017$. This dataset is created by compositing 395 different alpha mattes across 11 categories onto various backgrounds, all with high-quality manual annotation. Extensive experiments demonstrate that MEMatte outperforms existing methods on both high-resolution and real-world datasets, significantly reducing memory usage by approximately 88% and latency by 50% on the Composition-1K benchmark. Our code is available at https://github.com/linyiheng123/MEMatte.
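
To make the routing idea concrete, here is a toy sketch that splits tokens by their predicted routing probability and compares the number of pairwise attention interactions before and after routing. The fixed `threshold`, the function names, and the simple pair count are illustrative assumptions; the abstract does not describe how the router or BATR actually selects tokens.

```python
def route_tokens(probs, threshold=0.5):
    """Split token indices into (global_attention, lightweight) groups.

    `probs` are per-token routing probabilities; the fixed threshold is a
    hypothetical stand-in for the learned, content-adaptive routing rule.
    """
    global_idx = [i for i, p in enumerate(probs) if p >= threshold]
    light_idx = [i for i, p in enumerate(probs) if p < threshold]
    return global_idx, light_idx


def attention_pairs(num_tokens):
    """Global self-attention compares every token with every token."""
    return num_tokens * num_tokens


probs = [0.9, 0.2, 0.7, 0.1]
global_idx, light_idx = route_tokens(probs)
full_cost = attention_pairs(len(probs))         # all tokens attend globally
routed_cost = attention_pairs(len(global_idx))  # only routed tokens attend globally
```

Since the attention cost grows quadratically, sending half of the tokens to the lightweight path cuts the pairwise interactions in the attention block to a quarter in this toy count.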

Solving Motion Planning Tasks with a Scalable Generative Model

Jul 03, 2024

Abstract:As autonomous driving systems are being deployed to millions of vehicles, there is a pressing need to improve the system's scalability and safety and to reduce the engineering cost. A realistic, scalable, and practical simulator of the driving world is highly desired. In this paper, we present an efficient solution based on generative models that learns the dynamics of driving scenes. With this model, we can not only simulate the diverse futures of a given driving scenario but also generate a variety of driving scenarios conditioned on various prompts. Our innovative design allows the model to operate in both full-Autoregressive and partial-Autoregressive modes, significantly improving inference and training speed without sacrificing generative capability. This efficiency makes it ideal for use as an online reactive environment for reinforcement learning, an evaluator for planning policies, and a high-fidelity simulator for testing. We evaluated our model on two real-world datasets: the Waymo motion dataset and the nuPlan dataset. On the simulation realism and scene generation benchmarks, our model achieves state-of-the-art performance. On the planning benchmarks, our planner outperforms prior art. We conclude that the proposed generative model may serve as a foundation for a variety of motion planning tasks, including data generation, simulation, planning, and online training. Source code is public at https://github.com/HorizonRobotics/GUMP/

Learning Trimaps via Clicks for Image Matting

Apr 06, 2024

Abstract:Despite significant advancements in image matting, existing models heavily depend on manually drawn trimaps for accurate results in natural image scenarios. However, the process of obtaining trimaps is time-consuming and lacks user-friendliness and device compatibility. This reliance greatly limits the practical application of all trimap-based matting methods. To address this issue, we introduce Click2Trimap, an interactive model capable of predicting high-quality trimaps and alpha mattes with minimal user click inputs. By analyzing real users' behavioral logic and the characteristics of trimaps, we propose a powerful iterative three-class training strategy and a dedicated simulation function, which make Click2Trimap versatile across various scenarios. Quantitative and qualitative assessments on synthetic and real-world matting datasets demonstrate Click2Trimap's superior performance compared to all existing trimap-free matting methods. Notably, in the user study, Click2Trimap achieves high-quality trimap and matting predictions in an average of just 5 seconds per image, demonstrating its substantial practical value in real-world applications.

Diffusion for Natural Image Matting

Dec 10, 2023

Abstract:We aim to leverage diffusion to address the challenging image matting task. However, the presence of high computational overhead and the inconsistency of noise sampling between the training and inference processes pose significant obstacles to achieving this goal. In this paper, we present DiffMatte, a solution designed to effectively overcome these challenges. First, DiffMatte decouples the decoder from the intricately coupled matting network design, involving only one lightweight decoder in the iterations of the diffusion process. With such a strategy, DiffMatte mitigates the growth of computational overhead as the number of samples increases. Second, we employ a self-aligned training strategy with uniform time intervals, ensuring consistent noise sampling between training and inference across the entire time domain. DiffMatte is designed with flexibility in mind and can seamlessly integrate into various modern matting architectures. Extensive experimental results demonstrate that DiffMatte not only reaches the state-of-the-art level on the Composition-1k test set, surpassing the previous best methods by 5% and 15% in the SAD and MSE metrics respectively, but also shows stronger generalization ability on other benchmarks.
