Jingyu Qian

A Convex and Global Solution for the P$n$P Problem in 2D Forward-Looking Sonar

Apr 10, 2025

Abstract: The perspective-$n$-point (P$n$P) problem is important for robotic pose estimation. It is well studied for optical cameras, but research is lacking for 2D forward-looking sonar (FLS) in underwater scenarios because the imaging principles are vastly different. In this paper, we demonstrate that, despite the nonlinearity inherent in sonar image formation, the P$n$P problem for 2D FLS can still be effectively addressed within a point-to-line (PtL) 3D registration paradigm through orthographic approximation. The registration is then solved by a duality-based optimal solver, ensuring global optimality. For coplanar cases, a null space analysis is conducted to retrieve the solutions from the dual formulation, enabling the method to be applied to more general cases. Extensive simulations have been conducted to systematically evaluate the performance under different settings. Compared to non-reprojection-optimized state-of-the-art (SOTA) methods, the proposed approach achieves significantly higher precision. When both methods are optimized, ours demonstrates comparable or slightly superior precision.

Rejecting Outliers in 2D-3D Point Correspondences from 2D Forward-Looking Sonar Observations

Mar 20, 2025

Abstract: Rejecting outliers before applying classical robust methods is a common approach to increase the success rate of estimation, particularly when the outlier ratio is extremely high (e.g. 90%). However, this approach often relies on sensor- or task-specific characteristics, which may not be easily transferable across different scenarios. In this paper, we focus on the problem of rejecting 2D-3D point correspondence outliers from 2D forward-looking sonar (2D FLS) observations. 2D FLS is one of the most popular perception devices in the underwater field, but its imaging mechanism differs significantly from that of widely used perspective cameras and LiDAR. We fully leverage the narrow field of view in the elevation of 2D FLS and develop two compatibility tests for different 3D point configurations: (1) In general cases, we design a pairwise length in-range test to filter out overly long or short edges formed from point sets; (2) In coplanar cases, we design a coplanarity test to check if any four correspondences are compatible under a coplanar setting. Both tests are integrated into outlier rejection pipelines, where they are followed by maximum clique searching to identify the largest consistent measurement set as inliers. Extensive simulations demonstrate that the proposed methods for general and coplanar cases perform effectively under outlier ratios of 80% and 90%, respectively.
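The idea of a pairwise length in-range test followed by maximum clique search can be sketched in Python as follows. This is an illustrative sketch only, not the authors' implementation. It assumes each sonar measurement is a (range, azimuth) pair with an unknown elevation bounded by `phi_max`, approximates the feasible edge-length interval by grid-sampling the elevations, and uses a plain Bron-Kerbosch search. All function names, the grid size, and the tolerance `tol` are hypothetical choices.

```python
import itertools
import numpy as np

def sonar_point(r, theta, phi):
    """3D point in the sonar frame from range, azimuth and elevation (radians)."""
    return r * np.array([np.cos(phi) * np.cos(theta),
                         np.cos(phi) * np.sin(theta),
                         np.sin(phi)])

def length_bounds(m1, m2, phi_max, n=21):
    """Approximate min/max distance between two returns over the unknown
    elevations, by sampling both elevations on an n-point grid (assumption)."""
    phis = np.linspace(-phi_max, phi_max, n)
    d = [np.linalg.norm(sonar_point(*m1, a) - sonar_point(*m2, b))
         for a in phis for b in phis]
    return min(d), max(d)

def compatible(P, M, i, j, phi_max, tol=0.05):
    """Rigid motion preserves lengths, so the 3D edge length must fall inside
    the interval allowed by the two sonar measurements (plus a tolerance)."""
    d = np.linalg.norm(np.asarray(P[i]) - np.asarray(P[j]))
    lo, hi = length_bounds(M[i], M[j], phi_max)
    return lo - tol <= d <= hi + tol

def max_clique(adj):
    """Bron-Kerbosch without pivoting; adj is a list of neighbour sets."""
    best = []
    def expand(R, P, X):
        nonlocal best
        if not P and not X:
            if len(R) > len(best):
                best = list(R)
            return
        for v in list(P):
            expand(R + [v], P & adj[v], X & adj[v])
            P = P - {v}
            X = X | {v}
    expand([], set(range(len(adj))), set())
    return sorted(best)

def reject_outliers(P, M, phi_max):
    """Return indices of the largest mutually compatible correspondence set."""
    n = len(P)
    adj = [set() for _ in range(n)]
    for i, j in itertools.combinations(range(n), 2):
        if compatible(P, M, i, j, phi_max):
            adj[i].add(j)
            adj[j].add(i)
    return max_clique(adj)
```

Here `P` holds the 3D points and `M` the matching (range, azimuth) measurements; `reject_outliers(P, M, phi_max)` returns the indices kept as inliers. The exhaustive clique search is exponential in the worst case, so a sketch like this is only practical for small correspondence sets.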

Solving Motion Planning Tasks with a Scalable Generative Model

Jul 03, 2024

Abstract: As autonomous driving systems are being deployed to millions of vehicles, there is a pressing need to improve their scalability and safety and to reduce engineering cost. A realistic, scalable, and practical simulator of the driving world is highly desired. In this paper, we present an efficient solution based on generative models that learn the dynamics of driving scenes. With this model, we can not only simulate the diverse futures of a given driving scenario but also generate a variety of driving scenarios conditioned on various prompts. Our design allows the model to operate in both fully autoregressive and partially autoregressive modes, significantly improving inference and training speed without sacrificing generative capability. This efficiency makes it well suited for use as an online reactive environment for reinforcement learning, an evaluator for planning policies, and a high-fidelity simulator for testing. We evaluated our model on two real-world datasets: the Waymo motion dataset and the nuPlan dataset. On the simulation realism and scene generation benchmarks, our model achieves state-of-the-art performance, and on the planning benchmarks, our planner outperforms prior art. We conclude that the proposed generative model may serve as a foundation for a variety of motion planning tasks, including data generation, simulation, planning, and online training. Source code is public at https://github.com/HorizonRobotics/GUMP/

Imitation with Spatial-Temporal Heatmap: 2nd Place Solution for NuPlan Challenge

Jun 26, 2023

Abstract: This paper presents our 2nd place solution for the NuPlan Challenge 2023. Autonomous driving in real-world scenarios is highly complex and uncertain, and achieving safe planning in complex multimodal scenarios is highly challenging. Our approach, Imitation with Spatial-Temporal Heatmap, adopts behavior cloning as its learning paradigm, predicts future multimodal states with a heatmap representation, and uses trajectory refinement techniques to ensure final safety. The experiment shows that our method effectively balances the vehicle's progress and safety, generating safe and comfortable trajectories. In the NuPlan competition, we achieved the second highest overall score while obtaining the best scores in the ego progress and comfort metrics.

HOPE: Hierarchical Spatial-temporal Network for Occupancy Flow Prediction

Jun 21, 2022

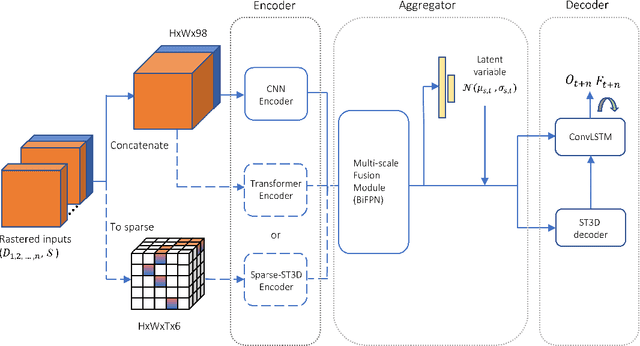

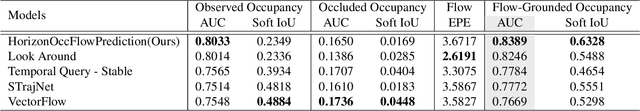

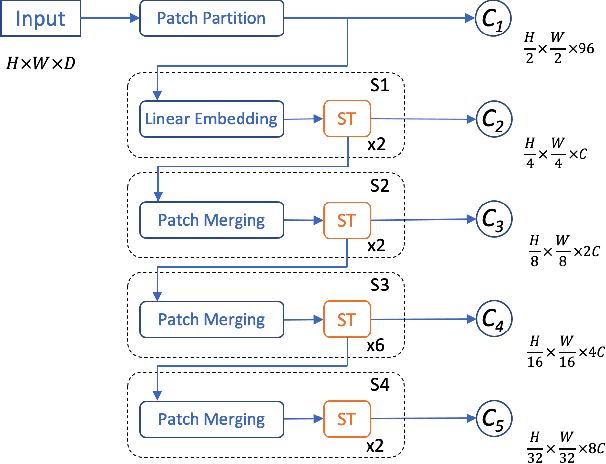

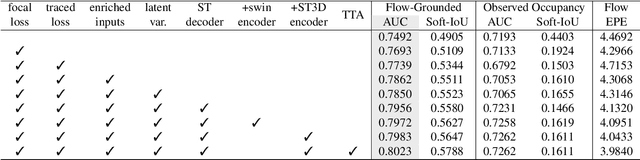

Abstract: In this report, we introduce our solution to the Occupancy and Flow Prediction challenge in the Waymo Open Dataset Challenges at CVPR 2022, which ranks 1st on the leaderboard. We have developed a novel hierarchical spatial-temporal network featuring spatial-temporal encoders, a multi-scale aggregator enriched with latent variables, and a recursive hierarchical 3D decoder. We use multiple losses, including focal loss and a modified flow trace loss, to efficiently guide the training process. Our method achieves a Flow-Grounded Occupancy AUC of 0.8389 and outperforms all the other teams on the leaderboard.
