Wenshuo Wang

UV-M3TL: A Unified and Versatile Multimodal Multi-Task Learning Framework for Assistive Driving Perception

Feb 02, 2026

Abstract: Advanced Driver Assistance Systems (ADAS) need to understand human driver behavior while perceiving their navigation context, but jointly learning these heterogeneous tasks can cause inter-task negative transfer and impair system performance. Here, we propose a Unified and Versatile Multimodal Multi-Task Learning (UV-M3TL) framework to simultaneously recognize driver behavior, driver emotion, vehicle behavior, and traffic context, while mitigating inter-task negative transfer. Our framework incorporates two core components: dual-branch spatial channel multimodal embedding (DB-SCME) and adaptive feature-decoupled multi-task loss (AFD-Loss). DB-SCME enhances cross-task knowledge transfer while mitigating task conflicts by employing a dual-branch structure to explicitly model salient task-shared and task-specific features. AFD-Loss improves the stability of joint optimization while guiding the model to learn diverse multi-task representations by introducing an adaptive weighting mechanism based on learning dynamics and feature decoupling constraints. We evaluate our method on the AIDE dataset, and the experimental results demonstrate that UV-M3TL achieves state-of-the-art performance across all four tasks. To further demonstrate its versatility, we evaluate UV-M3TL on additional public multi-task perception benchmarks (BDD100K, CityScapes, NYUD-v2, and PASCAL-Context), where it consistently delivers strong performance across diverse task combinations, attaining state-of-the-art results on most tasks.
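The abstract does not say how AFD-Loss computes its adaptive weights. As a rough illustration of weighting tasks by their learning dynamics, the sketch below uses a generic dynamic-weight-averaging style rule, which is a swapped-in technique and not the paper's AFD-Loss: tasks whose loss is falling more slowly receive larger weights. The function name, the temperature, and the loss values are all assumptions made for illustration.

```python
import math


def dynamic_task_weights(prev_losses, prev_prev_losses, temperature=2.0):
    """Return one weight per task; the weights sum to the number of tasks.

    Generic dynamic-weight-averaging style rule (NOT the paper's AFD-Loss).
    """
    # Ratio near 1 means the loss barely moved; a small ratio means fast progress.
    ratios = [p / pp for p, pp in zip(prev_losses, prev_prev_losses)]
    # Softmax over the ratios, softened by the temperature.
    exps = [math.exp(r / temperature) for r in ratios]
    total = sum(exps)
    num_tasks = len(ratios)
    return [num_tasks * e / total for e in exps]


# Hypothetical per-task losses from the two previous epochs (four tasks).
losses_two_epochs_ago = [1.00, 1.00, 1.00, 1.00]
losses_last_epoch = [0.50, 0.90, 0.70, 1.00]

weights = dynamic_task_weights(losses_last_epoch, losses_two_epochs_ago)
# Weighted sum that would be used as the joint training objective.
total_loss = sum(w * l for w, l in zip(weights, losses_last_epoch))
```

In this toy run, the fourth task (no improvement) gets the largest weight and the first task (fastest improvement) gets the smallest.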

FD-VLA: Force-Distilled Vision-Language-Action Model for Contact-Rich Manipulation

Feb 02, 2026

Abstract: Force sensing is a crucial modality for Vision-Language-Action (VLA) frameworks, as it enables fine-grained perception and dexterous manipulation in contact-rich tasks. We present Force-Distilled VLA (FD-VLA), a novel framework that integrates force awareness into contact-rich manipulation without relying on physical force sensors. The core of our approach is a Force Distillation Module (FDM), which distills force by mapping a learnable query token, conditioned on visual observations and robot states, into a predicted force token aligned with the latent representation of actual force signals. During inference, this distilled force token is injected into the pretrained VLM, enabling force-aware reasoning while preserving the integrity of its vision-language semantics. This design provides two key benefits: first, it allows practical deployment across a wide range of robots that lack expensive or fragile force-torque sensors, thereby reducing hardware cost and complexity; second, the FDM introduces an additional force-vision-state fusion prior to the VLM, which improves cross-modal alignment and enhances perception-action robustness in contact-rich scenarios. Surprisingly, our physical experiments show that the distilled force token outperforms direct sensor force measurements as well as other baselines, which highlights the effectiveness of this force-distilled VLA approach.

The Effects of Communication Delay on Human Performance and Neurocognitive Responses in Mobile Robot Teleoperation

Aug 25, 2025

Abstract: Communication delays in mobile robot teleoperation adversely affect human-machine collaboration. Understanding delay effects on human operational performance and neurocognition is essential for resolving this issue. However, no previous research has explored this. To fill this gap, we conduct a human-in-the-loop experiment involving 10 participants, integrating electroencephalography (EEG) and robot behavior data under varying delays (0-500 ms in 100 ms increments) to systematically investigate these effects. Behavioral analysis reveals significant performance degradation at 200-300 ms delays, affecting both task efficiency and accuracy. EEG analysis reveals features with significant delay dependence: frontal $\theta/\beta$-band and parietal $\alpha$-band power. We also identify a threshold window (100-200 ms) for early perception of delay in humans, during which these EEG features first exhibit significant differences. When the delay exceeds 400 ms, all features plateau, indicating saturation of cognitive resource allocation at physiological limits. These findings provide the first evidence of perceptual and cognitive delay thresholds during teleoperation tasks in humans, offering critical neurocognitive insights for the design of delay compensation strategies.

MMTL-UniAD: A Unified Framework for Multimodal and Multi-Task Learning in Assistive Driving Perception

Apr 03, 2025

Abstract: Advanced driver assistance systems require a comprehensive understanding of the driver's mental/physical state and traffic context, but existing works often neglect the potential benefits of joint learning between these tasks. This paper proposes MMTL-UniAD, a unified multi-modal multi-task learning framework that simultaneously recognizes driver behavior (e.g., looking around, talking), driver emotion (e.g., anxiety, happiness), vehicle behavior (e.g., parking, turning), and traffic context (e.g., traffic jam, traffic smooth). A key challenge is avoiding negative transfer between tasks, which can impair learning performance. To address this, we introduce two key components into the framework: a multi-axis region attention network that extracts global context-sensitive features, and a dual-branch multimodal embedding that learns multimodal embeddings from both task-shared and task-specific features. The former uses a multi-attention mechanism to extract task-relevant features, mitigating negative transfer caused by task-unrelated features. The latter employs a dual-branch structure to adaptively adjust task-shared and task-specific parameters, enhancing cross-task knowledge transfer while reducing task conflicts. We assess MMTL-UniAD on the AIDE dataset, using a series of ablation studies, and show that it outperforms state-of-the-art methods across all four tasks. The code is available at https://github.com/Wenzhuo-Liu/MMTL-UniAD.

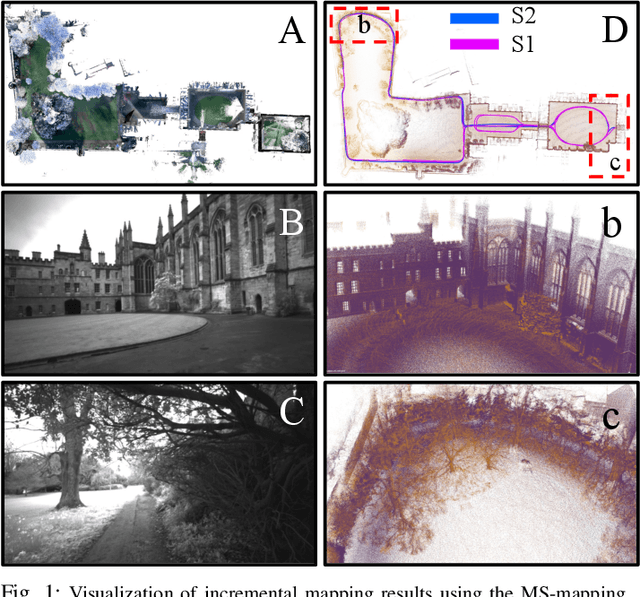

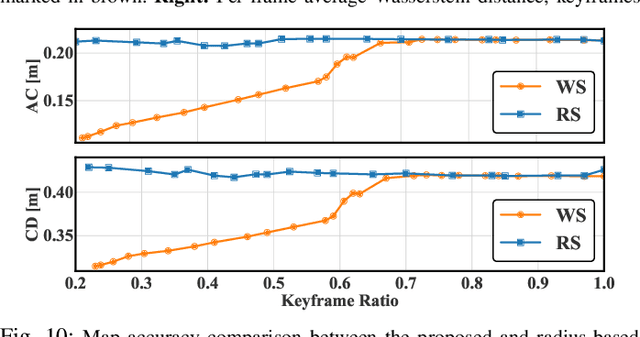

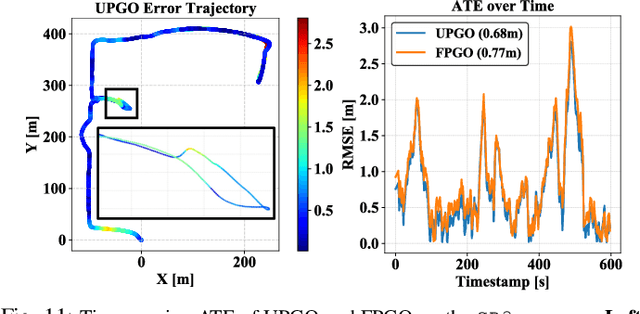

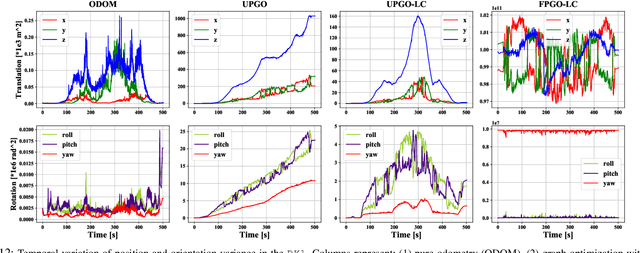

MS-Mapping: An Uncertainty-Aware Large-Scale Multi-Session LiDAR Mapping System

Aug 07, 2024

Abstract: Large-scale multi-session LiDAR mapping is essential for a wide range of applications, including surveying, autonomous driving, crowdsourced mapping, and multi-agent navigation. However, existing approaches often struggle with data redundancy, robustness, and accuracy in complex environments. To address these challenges, we present MS-Mapping, a novel multi-session LiDAR mapping system that employs an incremental mapping scheme for robust and accurate map assembly in large-scale environments. Our approach introduces three key innovations: 1) A distribution-aware keyframe selection method that captures the subtle contributions of each point cloud frame to the map by analyzing the similarity of map distributions. This method effectively reduces data redundancy and pose graph size, while enhancing graph optimization speed. 2) An uncertainty model that automatically performs least-squares adjustments according to the covariance matrix during graph optimization, improving mapping precision, robustness, and flexibility without the need for scene-specific parameter tuning. This uncertainty model enables our system to monitor pose uncertainty and avoid ill-posed optimizations, thereby increasing adaptability to diverse and challenging environments. 3) To ensure fair evaluation, we redesign baseline comparisons and the evaluation benchmark. Direct assessment of map accuracy demonstrates the superiority of the proposed MS-Mapping algorithm compared to state-of-the-art methods. In addition to employing public datasets such as Urban-Nav, FusionPortable, and Newer College, we conducted extensive experiments on a large 855 m × 636 m ground truth map, collecting over 20 km of indoor and outdoor data across more than ten sequences...
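To make the idea of a least-squares adjustment driven by covariance concrete, here is a one-dimensional toy sketch of inverse-variance weighted least squares. It is a textbook estimator under the assumption of independent scalar measurements, not MS-Mapping's pose-graph optimization; the function name and the numbers are hypothetical.

```python
def fuse_weighted_least_squares(measurements, variances):
    """Fuse independent scalar measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so
    uncertain measurements pull the estimate less. Returns the fused
    estimate and its variance.
    """
    weights = [1.0 / v for v in variances]
    information = sum(weights)  # total information (inverse variance)
    estimate = sum(w * z for w, z in zip(weights, measurements)) / information
    return estimate, 1.0 / information


# Hypothetical example: two position measurements of the same point,
# the second one four times more uncertain than the first.
estimate, variance = fuse_weighted_least_squares([10.0, 12.0], [1.0, 4.0])
```

The fused variance is smaller than either input variance, which is why tracking it gives a simple way to monitor how well constrained an estimate is.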

100 Drivers, 2200 km: A Natural Dataset of Driving Style toward Human-centered Intelligent Driving Systems

Jun 12, 2024

Abstract: Effective driving style analysis is critical to developing human-centered intelligent driving systems that consider drivers' preferences. However, the approaches and conclusions of most related studies are diverse and inconsistent because no unified datasets tagged with driving styles exist as a reliable benchmark. The absence of explicit driving style labels makes verifying different approaches and algorithms difficult. This paper provides a new benchmark by constructing a natural dataset of Driving Style (100-DrivingStyle) tagged with the subjective evaluation of 100 drivers' driving styles. In this dataset, each driver's driving style is rated both by the driver and by an expert using a Likert-scale questionnaire. The testing routes are selected to cover various driving scenarios, including highways, urban roads, highway ramps, and signalized traffic. The collected driving data consists of lateral and longitudinal manipulation information, including steering angle, steering speed, lateral acceleration, throttle position, throttle rate, brake pressure, etc. This dataset is the first to provide detailed manipulation data with driving-style tags, and we demonstrate its benchmark function using six classifiers. The 100-DrivingStyle dataset is available via https://github.com/chaopengzhang/100-DrivingStyle-Dataset

Shareable Driving Style Learning and Analysis with a Hierarchical Latent Model

Oct 24, 2023

Abstract: Driving style is usually used to characterize driving behavior for a driver or a group of drivers. However, it remains unclear how one individual's driving style shares certain common grounds with other drivers. Our insight is that driving behavior is a sequence of responses to the weighted mixture of latent driving styles that are shareable within and between individuals. To this end, this paper develops a hierarchical latent model to learn the relationship between driving behavior and driving styles. We first propose a fragment-based approach to represent complex sequential driving behavior, allowing for sufficiently representing driving behavior in a low-dimensional feature space. Then, we provide an analytical formulation for the interaction of driving behavior and shareable driving style with a hierarchical latent model by introducing the mechanism of Dirichlet allocation. Our developed model is finally validated and verified with 100 drivers in naturalistic driving settings on urban roads and highways. Experimental results reveal that individuals share driving styles within and between them. We also analyzed the influence of personalities (e.g., age, gender, and driving experience) on driving styles and found that a naturally aggressive driver would not always keep driving aggressively (i.e., could behave calmly sometimes) but with a higher proportion of aggressiveness than other types of drivers.
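The "weighted mixture of latent driving styles" can be pictured with a small generative sketch: each driver draws style proportions from a Dirichlet distribution, and each behavior fragment is then assigned a style according to those proportions. This only illustrates the Dirichlet-allocation idea under assumptions; the style names, concentration values, and function names are hypothetical and are not taken from the paper's model.

```python
import random


def sample_style_proportions(alpha, rng):
    """Draw one Dirichlet sample by normalizing independent Gamma draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alpha]
    total = sum(draws)
    return [d / total for d in draws]


def sample_fragment_styles(proportions, styles, num_fragments, rng):
    """Assign a latent style to each behavior fragment of one driver."""
    return rng.choices(styles, weights=proportions, k=num_fragments)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
styles = ["calm", "moderate", "aggressive"]  # hypothetical style labels

# Hypothetical concentration values that favor the "aggressive" style.
proportions = sample_style_proportions([0.5, 0.5, 2.0], rng)
fragments = sample_fragment_styles(proportions, styles, 20, rng)
```

Because the proportions form a mixture rather than a single label, a driver whose mixture leans aggressive can still produce calm fragments, which matches the finding stated in the abstract.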

Interactive Car-Following: Matters but NOT Always

Jul 30, 2023

Abstract: Following a leading vehicle is a daily but challenging task because it requires adapting to various traffic conditions and the leading vehicle's behaviors. However, the question "Does the following vehicle always actively react to the leading vehicle?" remains open. To seek the answer, we propose a novel metric to quantify the interaction intensity within car-following pairs. The quantified interaction intensity enables us to recognize interactive and non-interactive car-following scenarios and derive corresponding policies for each scenario. Then, we develop an interaction-aware switching control framework with interactive and non-interactive policies, achieving human-level car-following performance. Extensive simulations demonstrate that our interaction-aware switching control framework achieves improved control performance and data efficiency compared to the unified control strategies. Moreover, the experimental results reveal that human drivers would not always keep reacting to their leading vehicle but occasionally take safety-critical or intentional actions: interaction matters, but not always.

Efficient Reinforcement Learning for Autonomous Driving with Parameterized Skills and Priors

May 08, 2023

Abstract: When autonomous vehicles are deployed on public roads, they will encounter countless and diverse driving situations. Many manually designed driving policies are difficult to scale to the real world. Fortunately, reinforcement learning has shown great success in many tasks by automatic trial and error. However, when it comes to autonomous driving in interactive dense traffic, RL agents either fail to learn reasonable performance or necessitate a large amount of data. Our insight is that when humans learn to drive, they will 1) make decisions over the high-level skill space instead of the low-level control space and 2) leverage expert prior knowledge rather than learning from scratch. Inspired by this, we propose ASAP-RL, an efficient reinforcement learning algorithm for autonomous driving that simultaneously leverages motion skills and expert priors. We first parameterize motion skills, which are diverse enough to cover various complex driving scenarios and situations. A skill parameter inverse recovery method is proposed to convert expert demonstrations from control space to skill space. A simple but effective double initialization technique is proposed to leverage expert priors while bypassing the issue of expert suboptimality and early performance degradation. We validate our proposed method on interactive dense-traffic driving tasks given simple and sparse rewards. Experimental results show that our method can lead to higher learning efficiency and better driving performance relative to previous methods that exploit skills and priors differently. Code is open-sourced to facilitate further research.

Understanding Bugs in Multi-Language Deep Learning Frameworks

Mar 05, 2023

Abstract: Deep learning frameworks (DLFs) have been playing an increasingly important role in this intelligence age since they act as a basic infrastructure for an increasingly wide range of AI-based applications. Meanwhile, as multi-programming-language (MPL) software systems, DLFs inevitably suffer from bugs caused by the use of multiple programming languages (PLs). Hence, it is of paramount significance to understand the bugs (especially the bugs involving multiple PLs, i.e., MPL bugs) of DLFs, which can provide a foundation for preventing, detecting, and resolving bugs in the development of DLFs. To this end, we manually analyzed 1497 bugs in three MPL DLFs, namely MXNet, PyTorch, and TensorFlow. First, we classified bugs in these DLFs into 12 types (e.g., algorithm design bugs and memory bugs) according to their bug labels and characteristics. Second, we further explored the impacts of different bug types on the development of DLFs, and found that deployment bugs and memory bugs have the greatest negative impact on different aspects of DLF development. Third, we found that 28.6%, 31.4%, and 16.0% of bugs in MXNet, PyTorch, and TensorFlow are MPL bugs, respectively; the PL combination of Python and C/C++ is the most used, appearing in the fixes of more than 92% of MPL bugs in all DLFs. Finally, the code change complexity of MPL bug fixes is significantly greater than that of single-programming-language (SPL) bug fixes in all three DLFs, while in PyTorch MPL bug fixes have longer open times and greater communication complexity than SPL bug fixes. These results provide insights for bug management in DLFs.
