Accelerating Reinforcement Learning for Autonomous Driving using Task-Agnostic and Ego-Centric Motion Skills

Sep 24, 2022

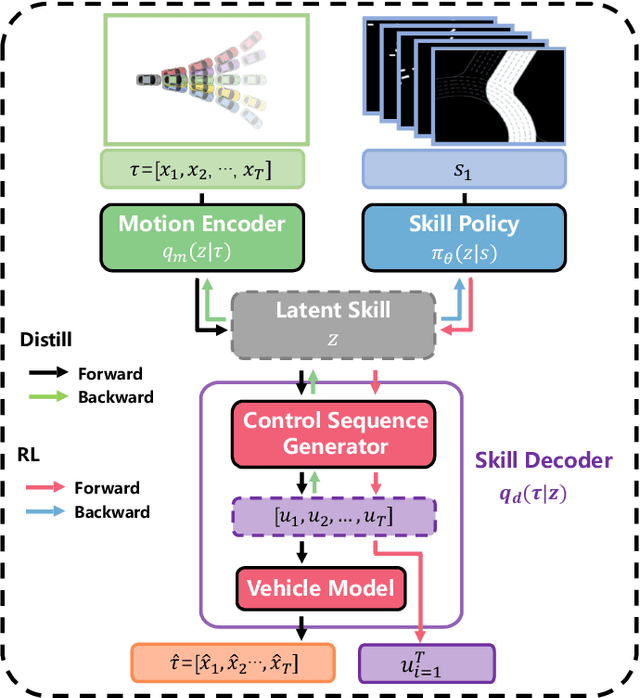

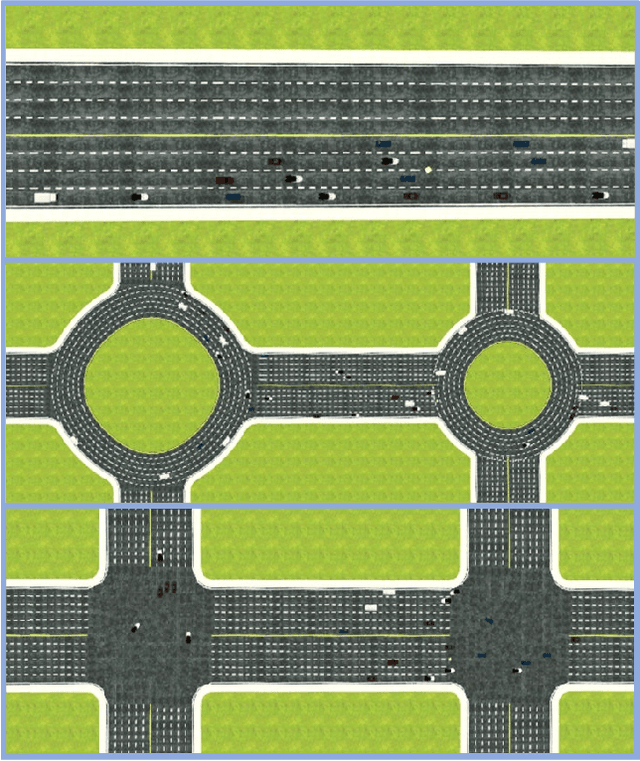

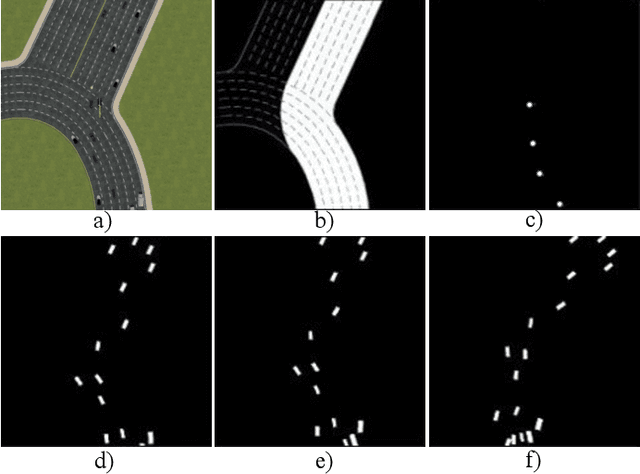

Efficient and effective exploration in continuous space is a central problem in applying reinforcement learning (RL) to autonomous driving. Skills learned from expert demonstrations or designed for specific tasks can aid exploration, but they are usually costly to collect, unbalanced or sub-optimal, or fail to transfer to diverse tasks. Human drivers, however, can adapt to varied driving tasks without demonstrations by exploring the entire skill space efficiently and in a structured way, rather than a limited space of task-specific skills. Inspired by this observation, we propose an RL algorithm that explores all feasible motion skills instead of a limited set of task-specific and object-centric skills. Without demonstrations, our method still performs well in diverse tasks. First, we build a task-agnostic and ego-centric (TaEc) motion skill library from a pure motion perspective, which is diverse enough to be reusable across different complex tasks. The motion skills are then encoded into a low-dimensional latent skill space, in which RL can explore efficiently. Validation in various challenging driving scenarios demonstrates that our proposed method, TaEc-RL, significantly outperforms its counterparts in learning efficiency and task performance.
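
To make the idea of acting in a latent skill space concrete, the following toy sketch shows a policy emitting a low-dimensional latent vector that is decoded into a short ego-centric trajectory. It is an illustration under stated assumptions, not the TaEc-RL implementation: the abstract does not specify the encoder or decoder, so `decode_latent` is a hand-written stand-in for a learned decoder, and the unicycle model, `HORIZON`, `DT`, and the acceleration and yaw-rate bounds are all hypothetical choices.

```python
import math
import random

HORIZON = 10  # steps per motion skill (assumed value)
DT = 0.1      # seconds per step (assumed value)


def rollout_skill(accel, yaw_rate, v0=5.0):
    """Roll out a unicycle model in the ego frame.

    The vehicle starts at the origin with heading 0, so the resulting
    trajectory is ego-centric and independent of any task or object.
    """
    x = y = heading = 0.0
    v = v0
    traj = []
    for _ in range(HORIZON):
        v = max(0.0, v + accel * DT)      # no reversing in this toy model
        heading += yaw_rate * DT
        x += v * math.cos(heading) * DT
        y += v * math.sin(heading) * DT
        traj.append((x, y, heading))
    return traj


def decode_latent(z, max_accel=3.0, max_yaw_rate=0.5):
    """Hand-written stand-in for a learned skill decoder (assumption).

    Maps a 2-D latent z, clamped to [-1, 1]^2, to a motion skill.
    """
    z0 = max(-1.0, min(1.0, z[0]))
    z1 = max(-1.0, min(1.0, z[1]))
    return rollout_skill(z0 * max_accel, z1 * max_yaw_rate)


def random_latent_policy(rng):
    """Placeholder for an RL policy: samples a latent action uniformly."""
    return (rng.uniform(-1.0, 1.0), rng.uniform(-1.0, 1.0))


rng = random.Random(0)
z = random_latent_policy(rng)
skill = decode_latent(z)  # list of (x, y, heading) waypoints in the ego frame
```

In this sketch the agent explores a bounded 2-D space instead of raw per-step controls, and every latent action decodes to a temporally extended, kinematically plausible motion. A learned latent space would play the same role over a much richer skill library.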
