Tomas Lozano-Perez

Guided Exploration for Efficient Relational Model Learning

Feb 10, 2025

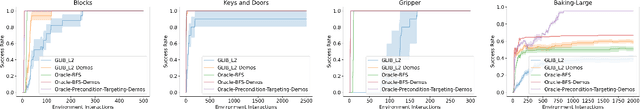

Abstract:Efficient exploration is critical for learning relational models in large-scale environments with complex, long-horizon tasks. Random exploration methods often collect redundant or irrelevant data, limiting their ability to learn accurate relational models of the environment. Goal-literal babbling (GLIB) improves upon random exploration by setting and planning to novel goals, but its reliance on random actions and random novel goal selection limits its scalability to larger domains. In this work, we identify the principles underlying efficient exploration in relational domains: (1) operator initialization with demonstrations that cover the distinct lifted effects necessary for planning and (2) refining preconditions to collect maximally informative transitions by selecting informative goal-action pairs and executing plans to them. To demonstrate these principles, we introduce Baking-Large, a challenging domain with extensive state-action spaces and long-horizon tasks. We evaluate methods using oracle-driven demonstrations for operator initialization and precondition-targeting guidance to efficiently gather critical transitions. Experiments show that both the oracle demonstrations and precondition-targeting oracle guidance significantly improve sample efficiency and generalization, paving the way for future methods to use these principles to efficiently learn accurate relational models in complex domains.

Bi-Level Belief Space Search for Compliant Part Mating Under Uncertainty

Sep 24, 2024

Abstract:The problem of mating two parts with low clearance remains difficult for autonomous robots. We present bi-level belief assembly (BILBA), a model-based planner that computes a sequence of compliant motions which can leverage contact with the environment to reduce uncertainty and perform challenging assembly tasks with low clearance. Our approach is based on first deriving candidate contact schedules from the structure of the configuration space obstacle of the parts and then finding compliant motions that achieve the desired contacts. We demonstrate that BILBA can efficiently compute robust plans on multiple simulated tasks as well as a real robot rectangular peg-in-hole insertion task.

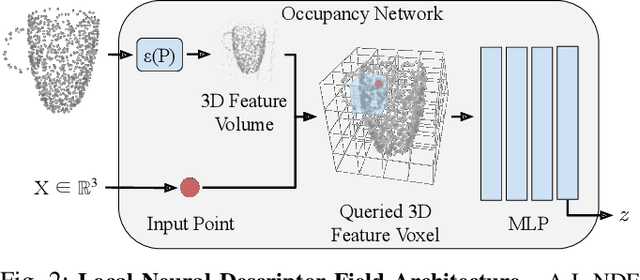

Local Neural Descriptor Fields: Locally Conditioned Object Representations for Manipulation

Feb 07, 2023

Abstract:A robot operating in a household environment will see a wide range of unique and unfamiliar objects. While a system could train on many of these, it is infeasible to predict all the objects a robot will see. In this paper, we present a method to generalize object manipulation skills acquired from a limited number of demonstrations, to novel objects from unseen shape categories. Our approach, Local Neural Descriptor Fields (L-NDF), utilizes neural descriptors defined on the local geometry of the object to effectively transfer manipulation demonstrations to novel objects at test time. In doing so, we leverage the local geometry shared between objects to produce a more general manipulation framework. We illustrate the efficacy of our approach in manipulating novel objects in novel poses -- both in simulation and in the real world.

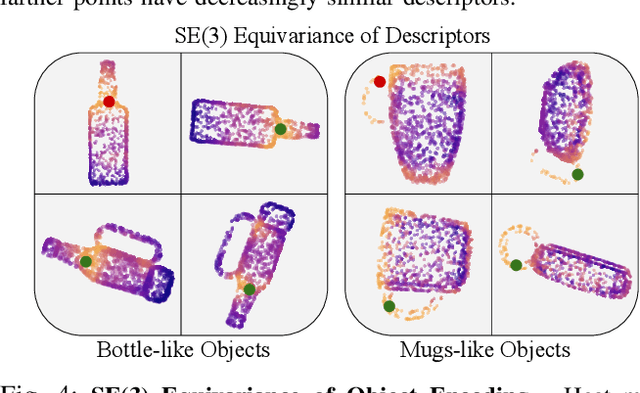

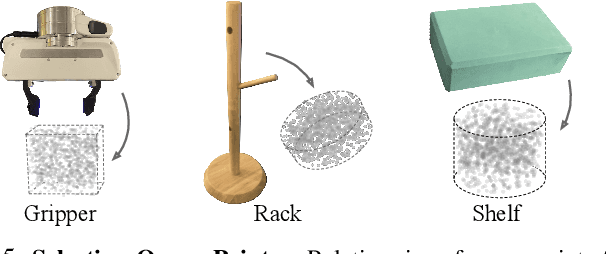

SE(3)-Equivariant Relational Rearrangement with Neural Descriptor Fields

Nov 17, 2022

Abstract:We present a method for performing tasks involving spatial relations between novel object instances initialized in arbitrary poses directly from point cloud observations. Our framework provides a scalable way for specifying new tasks using only 5-10 demonstrations. Object rearrangement is formalized as the question of finding actions that configure task-relevant parts of the object in a desired alignment. This formalism is implemented in three steps: assigning a consistent local coordinate frame to the task-relevant object parts, determining the location and orientation of this coordinate frame on unseen object instances, and executing an action that brings these frames into the desired alignment. We overcome the key technical challenge of determining task-relevant local coordinate frames from a few demonstrations by developing an optimization method based on Neural Descriptor Fields (NDFs) and a single annotated 3D keypoint. An energy-based learning scheme to model the joint configuration of the objects that satisfies a desired relational task further improves performance. The method is tested on three multi-object rearrangement tasks in simulation and on a real robot. Project website, videos, and code: https://anthonysimeonov.github.io/r-ndf/
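The last of the three steps in this abstract, bringing two local coordinate frames into a desired alignment, reduces to composing rigid transforms once the frames are known. The following is only a minimal sketch of that idea, assuming each frame is given as a 4x4 homogeneous matrix expressed in the world frame. The helper names `make_pose` and `alignment_action` and the example poses are hypothetical and are not taken from the R-NDF code.

```python
import numpy as np


def make_pose(rotation_z_rad, translation):
    """Build a 4x4 homogeneous transform: rotation about z plus a translation."""
    c, s = np.cos(rotation_z_rad), np.sin(rotation_z_rad)
    pose = np.eye(4)
    pose[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    pose[:3, 3] = translation
    return pose


def alignment_action(moving_frame, target_frame):
    """Return the world-frame transform T with T @ moving_frame == target_frame."""
    return target_frame @ np.linalg.inv(moving_frame)


# Hypothetical frames: one attached to the part being moved, one to the goal part.
moving = make_pose(0.3, [0.1, 0.0, 0.2])
target = make_pose(1.2, [0.5, -0.2, 0.4])

action = alignment_action(moving, target)
aligned = action @ moving  # pose of the moving frame after applying the action
```

Here `action` is the rigid motion a robot would apply to the grasped object. Estimating the frames themselves from point clouds is the hard part that the abstract attributes to NDF-based optimization, and it is not shown.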

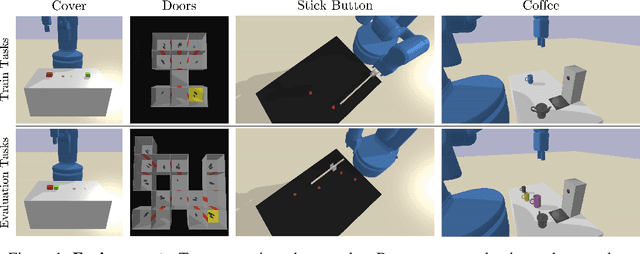

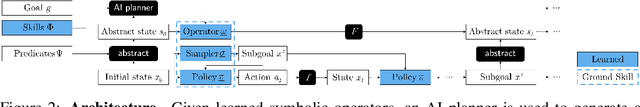

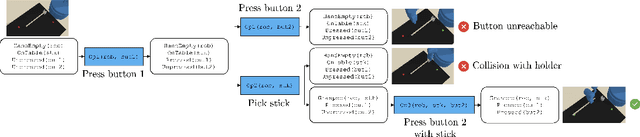

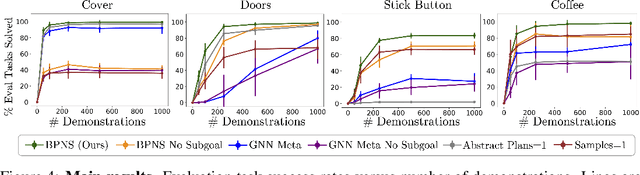

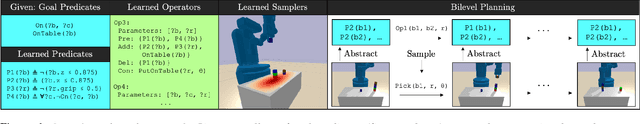

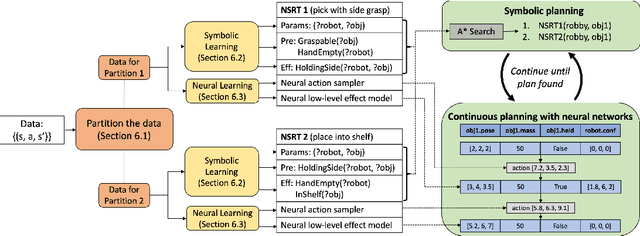

Learning Neuro-Symbolic Skills for Bilevel Planning

Jun 21, 2022

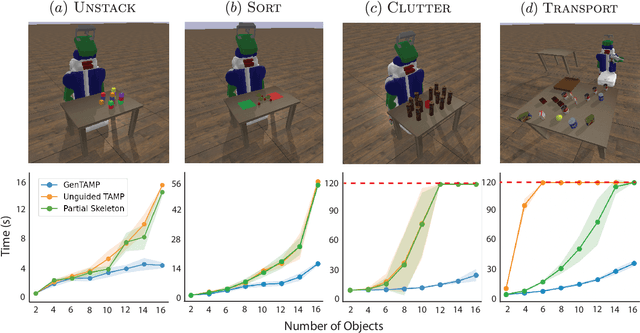

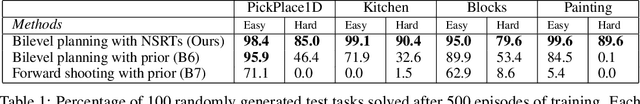

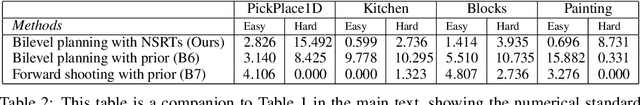

Abstract:Decision-making is challenging in robotics environments with continuous object-centric states, continuous actions, long horizons, and sparse feedback. Hierarchical approaches, such as task and motion planning (TAMP), address these challenges by decomposing decision-making into two or more levels of abstraction. In a setting where demonstrations and symbolic predicates are given, prior work has shown how to learn symbolic operators and neural samplers for TAMP with manually designed parameterized policies. Our main contribution is a method for learning parameterized policies in combination with operators and samplers. These components are packaged into modular neuro-symbolic skills and sequenced together with search-then-sample TAMP to solve new tasks. In experiments in four robotics domains, we show that our approach -- bilevel planning with neuro-symbolic skills -- can solve a wide range of tasks with varying initial states, goals, and objects, outperforming six baselines and ablations. Video: https://youtu.be/PbFZP8rPuGg Code: https://tinyurl.com/skill-learning

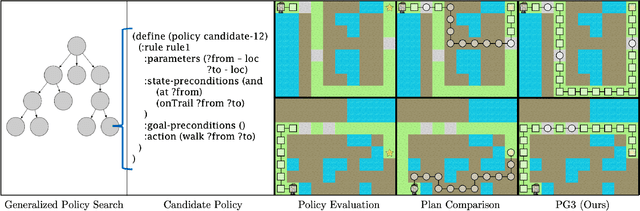

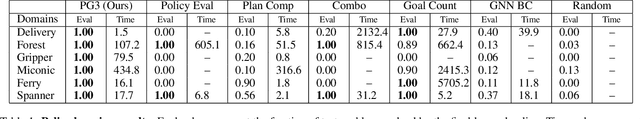

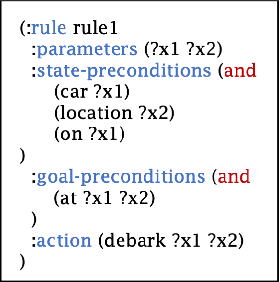

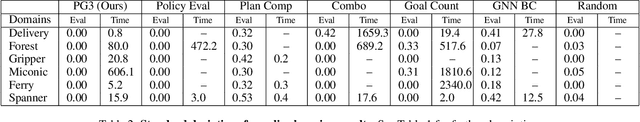

PG3: Policy-Guided Planning for Generalized Policy Generation

Apr 21, 2022

Abstract:A longstanding objective in classical planning is to synthesize policies that generalize across multiple problems from the same domain. In this work, we study generalized policy search-based methods with a focus on the score function used to guide the search over policies. We demonstrate limitations of two score functions and propose a new approach that overcomes these limitations. The main idea behind our approach, Policy-Guided Planning for Generalized Policy Generation (PG3), is that a candidate policy should be used to guide planning on training problems as a mechanism for evaluating that candidate. Theoretical results in a simplified setting give conditions under which PG3 is optimal or admissible. We then study a specific instantiation of policy search where planning problems are PDDL-based and policies are lifted decision lists. Empirical results in six domains confirm that PG3 learns generalized policies more efficiently and effectively than several baselines. Code: https://github.com/ryangpeixu/pg3

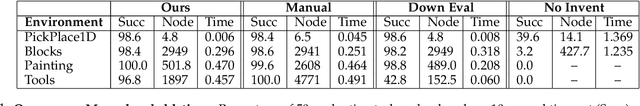

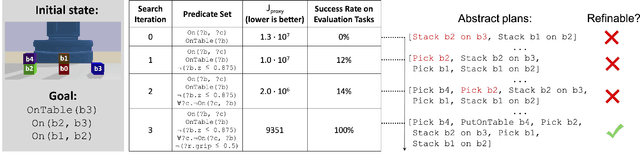

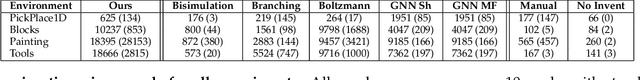

Inventing Relational State and Action Abstractions for Effective and Efficient Bilevel Planning

Mar 17, 2022

Abstract:Effective and efficient planning in continuous state and action spaces is fundamentally hard, even when the transition model is deterministic and known. One way to alleviate this challenge is to perform bilevel planning with abstractions, where a high-level search for abstract plans is used to guide planning in the original transition space. In this paper, we develop a novel framework for learning state and action abstractions that are explicitly optimized for both effective (successful) and efficient (fast) bilevel planning. Given demonstrations of tasks in an environment, our data-efficient approach learns relational, neuro-symbolic abstractions that generalize over object identities and numbers. The symbolic components resemble the STRIPS predicates and operators found in AI planning, and the neural components refine the abstractions into actions that can be executed in the environment. Experimentally, we show across four robotic planning environments that our learned abstractions are able to quickly solve held-out tasks of longer horizons than were seen in the demonstrations, and can even outperform the efficiency of abstractions that we manually specified. We also find that as the planner configuration varies, the learned abstractions adapt accordingly, indicating that our abstraction learning method is both "task-aware" and "planner-aware." Code: https://tinyurl.com/predicators-release
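For readers unfamiliar with the STRIPS operators this abstract refers to, the sketch below shows the standard semantics: an operator applies when its preconditions hold in the abstract state, and it then removes its delete effects and adds its add effects. This is a simplified, ground (non-lifted) illustration. The `Operator` class, the `Pick(block)` operator, and the predicate names are hypothetical and do not come from the paper or its code release.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A ground STRIPS operator over states represented as sets of atoms."""
    name: str
    preconditions: frozenset
    add_effects: frozenset
    delete_effects: frozenset

    def applicable(self, state):
        # Every precondition atom must be present in the state.
        return self.preconditions <= state

    def apply(self, state):
        if not self.applicable(state):
            raise ValueError(f"{self.name} is not applicable in this state")
        # STRIPS update: remove delete effects, then add add effects.
        return (state - self.delete_effects) | self.add_effects


# Hypothetical operator for picking a block up from a table.
pick = Operator(
    name="Pick(block)",
    preconditions=frozenset({"HandEmpty", "OnTable(block)"}),
    add_effects=frozenset({"Holding(block)"}),
    delete_effects=frozenset({"HandEmpty", "OnTable(block)"}),
)

state = frozenset({"HandEmpty", "OnTable(block)"})
next_state = pick.apply(state)
```

In bilevel planning as described in the abstract, a high-level search over operators like this one would propose an abstract plan, and the neural components would refine each step into executable continuous actions.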

From Machine Learning to Robotics: Challenges and Opportunities for Embodied Intelligence

Oct 28, 2021

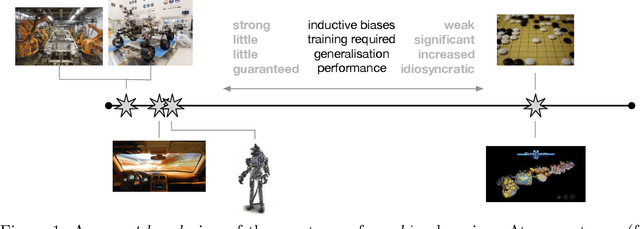

Abstract:Machine learning has long since become a keystone technology, accelerating science and applications in a broad range of domains. Consequently, the notion of applying learning methods to a particular problem set has become an established and valuable modus operandi to advance a particular field. In this article we argue that such an approach does not straightforwardly extend to robotics -- or to embodied intelligence more generally: systems which engage in a purposeful exchange of energy and information with a physical environment. In particular, the purview of embodied intelligent agents extends significantly beyond the typical considerations of mainstream machine learning approaches, which typically (i) do not consider operation under conditions significantly different from those encountered during training; (ii) do not consider the often substantial, long-lasting and potentially safety-critical nature of interactions during learning and deployment; (iii) do not require ready adaptation to novel tasks while at the same time (iv) effectively and efficiently curating and extending their models of the world through targeted and deliberate actions. In reality, therefore, these limitations result in learning-based systems which suffer from many of the same operational shortcomings as more traditional, engineering-based approaches when deployed on a robot outside a well-defined and often narrow operating envelope. Contrary to viewing embodied intelligence as another application domain for machine learning, here we argue that it is in fact a key driver for the advancement of machine learning technology. In this article our goal is to highlight challenges and opportunities that are specific to embodied intelligence and to propose research directions which may significantly advance the state-of-the-art in robot learning.

Discovering State and Action Abstractions for Generalized Task and Motion Planning

Sep 23, 2021

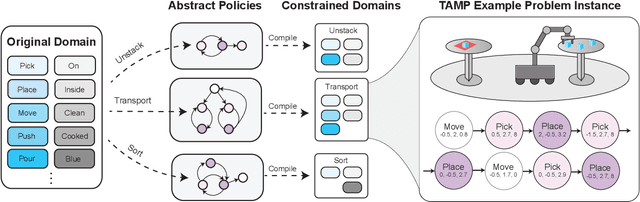

Abstract:Generalized planning accelerates classical planning by finding an algorithm-like policy that solves multiple instances of a task. A generalized plan can be learned from a few training examples and applied to an entire domain of problems. Generalized planning approaches perform well in discrete AI planning problems that involve large numbers of objects and extended action sequences to achieve the goal. In this paper, we propose an algorithm for learning features, abstractions, and generalized plans for continuous robotic task and motion planning (TAMP) and examine the unique difficulties that arise when forced to consider geometric and physical constraints as a part of the generalized plan. Additionally, we show that these simple generalized plans learned from only a handful of examples can be used to improve the search efficiency of TAMP solvers.

Learning Neuro-Symbolic Relational Transition Models for Bilevel Planning

May 28, 2021

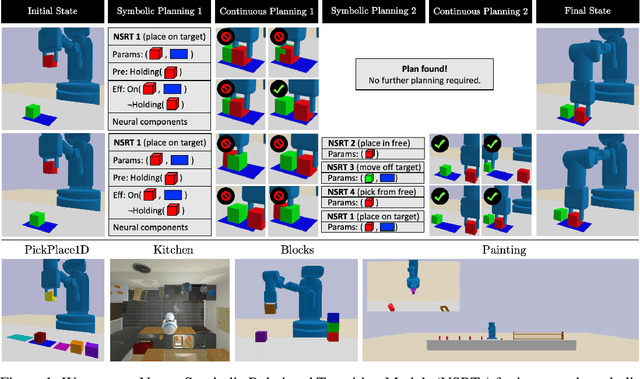

Abstract:Despite recent, independent progress in model-based reinforcement learning and integrated symbolic-geometric robotic planning, synthesizing these techniques remains challenging because of their disparate assumptions and strengths. In this work, we take a step toward bridging this gap with Neuro-Symbolic Relational Transition Models (NSRTs), a novel class of transition models that are data-efficient to learn, compatible with powerful robotic planning methods, and generalizable over objects. NSRTs have both symbolic and neural components, enabling a bilevel planning scheme where symbolic AI planning in an outer loop guides continuous planning with neural models in an inner loop. Experiments in four robotic planning domains show that NSRTs can be learned after only tens or hundreds of training episodes, and then used for fast planning in new tasks that require up to 60 actions to reach the goal and involve many more objects than were seen during training. Video: https://tinyurl.com/chitnis-nsrts
