Ingmar Posner

Vision-Language-Action Models for Robotics: A Review Towards Real-World Applications

Oct 08, 2025

Abstract:Amid growing efforts to leverage advances in large language models (LLMs) and vision-language models (VLMs) for robotics, Vision-Language-Action (VLA) models have recently gained significant attention. By unifying vision, language, and action data at scale, which have traditionally been studied separately, VLA models aim to learn policies that generalise across diverse tasks, objects, embodiments, and environments. This generalisation capability is expected to enable robots to solve novel downstream tasks with minimal or no additional task-specific data, facilitating more flexible and scalable real-world deployment. Unlike previous surveys that focus narrowly on action representations or high-level model architectures, this work offers a comprehensive, full-stack review, integrating both software and hardware components of VLA systems. In particular, this paper provides a systematic review of VLAs, covering their strategy and architectural transition, architectures and building blocks, modality-specific processing techniques, and learning paradigms. In addition, to support the deployment of VLAs in real-world robotic applications, we also review commonly used robot platforms, data collection strategies, publicly available datasets, data augmentation methods, and evaluation benchmarks. Throughout this comprehensive survey, this paper aims to offer practical guidance for the robotics community in applying VLAs to real-world robotic systems. All references categorized by training approach, evaluation method, modality, and dataset are available in the table on our project website: https://vla-survey.github.io.

Building Gradient by Gradient: Decentralised Energy Functions for Bimanual Robot Assembly

Oct 06, 2025

Abstract:There are many challenges in bimanual assembly, including high-level sequencing, multi-robot coordination, and low-level, contact-rich operations such as component mating. Task and motion planning (TAMP) methods, while effective in this domain, may be prohibitively slow to converge when adapting to disturbances that require new task sequencing and optimisation. These events are common during tight-tolerance assembly, where difficult-to-model dynamics such as friction or deformation require rapid replanning and reattempts. Moreover, defining explicit task sequences for assembly can be cumbersome, limiting flexibility when task replanning is required. To simplify this planning, we introduce a decentralised gradient-based framework that uses a piecewise continuous energy function through the automatic composition of adaptive potential functions. This approach generates sub-goals using only myopic optimisation, rather than long-horizon planning. It demonstrates effectiveness at solving long-horizon tasks due to the structure and adaptivity of the energy function. We show that our approach scales to physical bimanual assembly tasks for constructing tight-tolerance assemblies. In these experiments, we discover that our gradient-based rapid replanning framework generates automatic retries, coordinated motions and autonomous handovers in an emergent fashion.

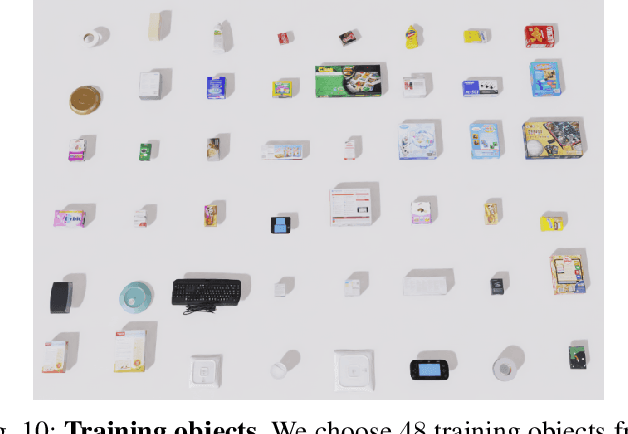

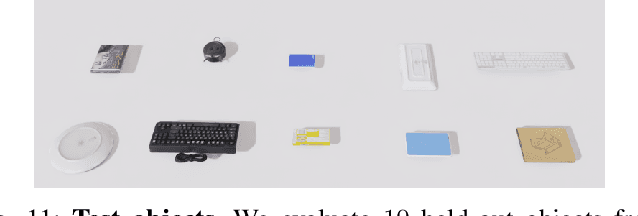

Is Single-View Mesh Reconstruction Ready for Robotics?

May 23, 2025

Abstract:This paper evaluates single-view mesh reconstruction models for creating digital twin environments in robot manipulation. Recent advances in computer vision for 3D reconstruction from single viewpoints present a potential breakthrough for efficiently creating virtual replicas of physical environments for robotics contexts. However, their suitability for physics simulations and robotics applications remains unexplored. We establish benchmarking criteria for 3D reconstruction in robotics contexts, including handling typical inputs, producing collision-free and stable reconstructions, managing occlusions, and meeting computational constraints. Our empirical evaluation using realistic robotics datasets shows that despite success on computer vision benchmarks, existing approaches fail to meet robotics-specific requirements. We quantitatively examine limitations of single-view reconstruction for practical robotics implementation, in contrast to prior work that focuses on multi-view approaches. Our findings highlight critical gaps between computer vision advances and robotics needs, guiding future research at this intersection.

Task and Joint Space Dual-Arm Compliant Control

Apr 29, 2025

Abstract:Robots that interact with humans or perform delicate manipulation tasks must exhibit compliance. However, most commercial manipulators are rigid and suffer from significant friction, limiting end-effector tracking accuracy in torque-controlled modes. To address this, we present a real-time, open-source impedance controller that smoothly interpolates between joint-space and task-space compliance. This hybrid approach ensures safe interaction and precise task execution, such as sub-centimetre pin insertions. We deploy our controller on Frank, a dual-arm platform with two Kinova Gen3 arms, and compensate for modelled friction dynamics using a model-free observer. The system is real-time capable and integrates with standard ROS tools like MoveIt!. It also supports high-frequency trajectory streaming, enabling closed-loop execution of trajectories generated by learning-based methods, optimal control, or teleoperation. Our results demonstrate robust tracking and compliant behaviour even under high-friction conditions. The complete system is available open-source at https://github.com/applied-ai-lab/compliant_controllers.

LUMOS: Language-Conditioned Imitation Learning with World Models

Mar 13, 2025

Abstract:We introduce LUMOS, a language-conditioned multi-task imitation learning framework for robotics. LUMOS learns skills by practicing them over many long-horizon rollouts in the latent space of a learned world model and transfers these skills zero-shot to a real robot. By learning on-policy in the latent space of the learned world model, our algorithm mitigates policy-induced distribution shift which most offline imitation learning methods suffer from. LUMOS learns from unstructured play data with fewer than 1% hindsight language annotations but is steerable with language commands at test time. We achieve this coherent long-horizon performance by combining latent planning with both image- and language-based hindsight goal relabeling during training, and by optimizing an intrinsic reward defined in the latent space of the world model over multiple time steps, effectively reducing covariate shift. In experiments on the difficult long-horizon CALVIN benchmark, LUMOS outperforms prior learning-based methods with comparable approaches on chained multi-task evaluations. To the best of our knowledge, we are the first to learn a language-conditioned continuous visuomotor control for a real-world robot within an offline world model. Videos, dataset and code are available at http://lumos.cs.uni-freiburg.de.

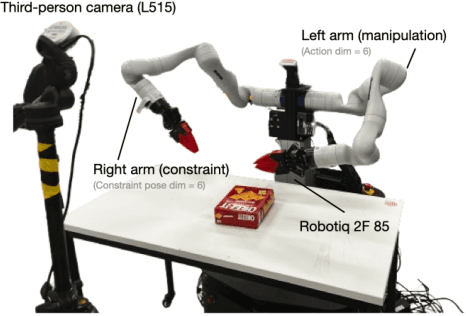

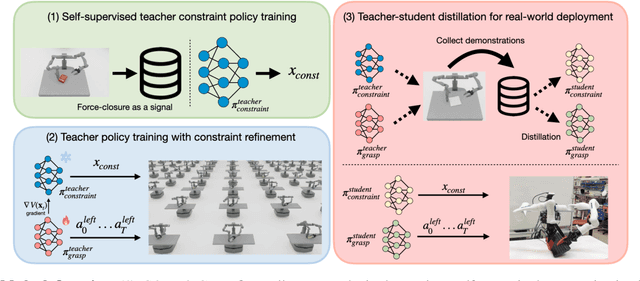

COMBO-Grasp: Learning Constraint-Based Manipulation for Bimanual Occluded Grasping

Feb 12, 2025

Abstract:This paper addresses the challenge of occluded robot grasping, i.e. grasping in situations where the desired grasp poses are kinematically infeasible due to environmental constraints such as surface collisions. Traditional robot manipulation approaches struggle with the complexity of non-prehensile or bimanual strategies commonly used by humans in these circumstances. State-of-the-art reinforcement learning (RL) methods are unsuitable due to the inherent complexity of the task. In contrast, learning from demonstration requires collecting a significant number of expert demonstrations, which is often infeasible. Instead, inspired by human bimanual manipulation strategies, where two hands coordinate to stabilise and reorient objects, we focus on a bimanual robotic setup to tackle this challenge. In particular, we introduce Constraint-based Manipulation for Bimanual Occluded Grasping (COMBO-Grasp), a learning-based approach which leverages two coordinated policies: a constraint policy trained using self-supervised datasets to generate stabilising poses and a grasping policy trained using RL that reorients and grasps the target object. A key contribution lies in value function-guided policy coordination. Specifically, during RL training for the grasping policy, the constraint policy's output is refined through gradients from a jointly trained value function, improving bimanual coordination and task performance. Lastly, COMBO-Grasp employs teacher-student policy distillation to effectively deploy point cloud-based policies in real-world environments. Empirical evaluations demonstrate that COMBO-Grasp significantly improves task success rates compared to competitive baseline approaches, with successful generalisation to unseen objects in both simulated and real-world environments.

The Complexity Dynamics of Grokking

Dec 13, 2024

Abstract:We investigate the phenomenon of generalization through the lens of compression. In particular, we study the complexity dynamics of neural networks to explain grokking, where networks suddenly transition from memorizing to generalizing solutions long after over-fitting the training data. To this end we introduce a new measure of intrinsic complexity for neural networks based on the theory of Kolmogorov complexity. Tracking this metric throughout network training, we find a consistent pattern in training dynamics, consisting of a rise and fall in complexity. We demonstrate that this corresponds to memorization followed by generalization. Based on insights from rate-distortion theory and the minimum description length principle, we lay out a principled approach to lossy compression of neural networks, and connect our complexity measure to explicit generalization bounds. Based on a careful analysis of information capacity in neural networks, we propose a new regularization method which encourages networks towards low-rank representations by penalizing their spectral entropy, and find that our regularizer outperforms baselines in total compression of the dataset.
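
As a rough illustration of the quantity named in the abstract above, the sketch below computes a spectral entropy from a list of singular values. It assumes one common definition (normalise the singular values into a probability distribution and take its Shannon entropy); the paper's exact formulation may differ, and the function name `spectral_entropy` is hypothetical.

```python
import math
from typing import Sequence


def spectral_entropy(singular_values: Sequence[float]) -> float:
    """Shannon entropy of the normalised singular-value spectrum.

    Assumption: p_i = s_i / sum_j s_j. This is one common convention,
    not necessarily the definition used in the paper.
    """
    total = sum(singular_values)
    if total <= 0:
        raise ValueError("need at least one positive singular value")
    # Zero singular values contribute nothing (0 * log 0 is taken as 0).
    probs = [s / total for s in singular_values if s > 0]
    return -sum(p * math.log(p) for p in probs)


# A flat spectrum (full rank) has high entropy; a rank-1 spectrum has zero.
flat = spectral_entropy([1.0, 1.0, 1.0, 1.0])
rank_one = spectral_entropy([1.0, 0.0, 0.0, 0.0])
```

Under this definition, adding the entropy to a training loss would push the spectrum towards a few dominant singular values, which is consistent with the low-rank bias the abstract describes.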

Offline Adaptation of Quadruped Locomotion using Diffusion Models

Nov 13, 2024

Abstract:We present a diffusion-based approach to quadrupedal locomotion that simultaneously addresses the limitations of learning and interpolating between multiple skills (modes) and of adapting offline to new locomotion behaviours after training. This is the first framework to apply classifier-free guided diffusion to quadruped locomotion and demonstrate its efficacy by extracting goal-conditioned behaviour from an originally unlabelled dataset. We show that these capabilities are compatible with a multi-skill policy and can be applied with little modification and minimal compute overhead, i.e., running entirely on the robot's onboard CPU. We verify the validity of our approach with hardware experiments on the ANYmal quadruped platform.
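
For readers unfamiliar with classifier-free guidance, the sketch below shows the generic step that blends a conditional and an unconditional noise prediction at sampling time. It is a minimal illustration of the general technique, not the authors' implementation; the function name `cfg_combine` and the weighting convention (weight 0 is unconditional, weight 1 is purely conditional) are assumptions.

```python
from typing import List, Sequence


def cfg_combine(
    eps_uncond: Sequence[float],
    eps_cond: Sequence[float],
    guidance_weight: float,
) -> List[float]:
    """Blend unconditional and conditional noise predictions.

    Assumed convention: eps = eps_uncond + w * (eps_cond - eps_uncond).
    Some papers write the equivalent form (1 + w') * eps_cond - w' * eps_uncond
    with w' = w - 1.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + guidance_weight * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Weights above 1 extrapolate past the conditional prediction,
# strengthening the influence of the conditioning signal (e.g. a goal).
guided = cfg_combine([0.0, 1.0], [1.0, 3.0], guidance_weight=2.0)
```

Because both predictions typically come from the same network (with the condition dropped for the unconditional pass), guidance of this kind adds one extra forward pass per denoising step rather than a separate classifier.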

SPARTAN: A Sparse Transformer Learning Local Causation

Nov 12, 2024

Abstract:Causal structures play a central role in world models that flexibly adapt to changes in the environment. While recent works motivate the benefits of discovering local causal graphs for dynamics modelling, in this work we demonstrate that accurately capturing these relationships in complex settings remains challenging for the current state-of-the-art. To remedy this shortcoming, we postulate that sparsity is a critical ingredient for the discovery of such local causal structures. To this end we present the SPARse TrANsformer World model (SPARTAN), a Transformer-based world model that learns local causal structures between entities in a scene. By applying sparsity regularisation on the attention pattern between object-factored tokens, SPARTAN identifies sparse local causal models that accurately predict future object states. Furthermore, we extend our model to capture sparse interventions with unknown targets on the dynamics of the environment. This results in a highly interpretable world model that can efficiently adapt to changes. Empirically, we evaluate SPARTAN against the current state-of-the-art in object-centric world models on observation-based environments and demonstrate that our model can learn accurate local causal graphs and achieve significantly improved few-shot adaptation to changes in the dynamics of the environment as well as robustness against removing irrelevant distractors.

A Review of Differentiable Simulators

Jul 08, 2024

Abstract:Differentiable simulators continue to push the state of the art across a range of domains including computational physics, robotics, and machine learning. Their main value is the ability to compute gradients of physical processes, which allows differentiable simulators to be readily integrated into commonly employed gradient-based optimization schemes. To achieve this, a number of design decisions need to be considered representing trade-offs in versatility, computational speed, and accuracy of the gradients obtained. This paper presents an in-depth review of the evolving landscape of differentiable physics simulators. We introduce the foundations and core components of differentiable simulators alongside common design choices. This is followed by a practical guide and overview of open-source differentiable simulators that have been used across past research. Finally, we review and contextualize prominent applications of differentiable simulation. By offering a comprehensive review of the current state-of-the-art in differentiable simulation, this work aims to serve as a resource for researchers and practitioners looking to understand and integrate differentiable physics within their research. We conclude by highlighting current limitations as well as providing insights into future directions for the field.
