Rhys Newbury

INSPIRE-GNN: Intelligent Sensor Placement to Improve Sparse Bicycling Network Prediction via Reinforcement Learning Boosted Graph Neural Networks

Jul 31, 2025

Abstract:Accurate link-level bicycling volume estimation is essential for sustainable urban transportation planning. However, many cities face high data sparsity due to limited bicycling count sensor coverage. To address this issue, we propose INSPIRE-GNN, a novel Reinforcement Learning (RL)-boosted hybrid Graph Neural Network (GNN) framework designed to optimize sensor placement and improve link-level bicycling volume estimation in data-sparse environments. INSPIRE-GNN integrates Graph Convolutional Networks (GCN) and Graph Attention Networks (GAT) with a Deep Q-Network (DQN)-based RL agent, enabling a data-driven strategic selection of sensor locations to maximize estimation performance. Applied to Melbourne's bicycling network, which comprises 15,933 road segments with sensor coverage on only 141 of them (99% sparsity), INSPIRE-GNN demonstrates significant improvements in volume estimation by strategically selecting additional sensor locations in deployments of 50, 100, 200 and 500 sensors. Our framework outperforms traditional heuristic methods for sensor placement, such as betweenness centrality, closeness centrality, observed bicycling activity and random placement, across key metrics such as Mean Squared Error (MSE), Root Mean Squared Error (RMSE) and Mean Absolute Error (MAE). Furthermore, our experiments benchmark INSPIRE-GNN against standard machine learning and deep learning models on bicycling volume estimation performance, underscoring its effectiveness. Our proposed framework provides transport planners with actionable insights to effectively expand sensor networks, optimize sensor placement and maximize the accuracy and reliability of bicycling volume estimates for informed transportation planning decisions.
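As a rough illustration of the figures quoted in this abstract, the sketch below computes the sparsity implied by 141 instrumented segments out of 15,933 and evaluates MSE, RMSE and MAE on a toy example. The function names and the sample counts are hypothetical and are not taken from the paper; only the two segment totals come from the abstract.

```python
import math


def sparsity(n_observed, n_total):
    """Fraction of road segments that have no sensor."""
    return 1.0 - n_observed / n_total


def error_metrics(y_true, y_pred):
    """Return MSE, RMSE and MAE for two equal-length sequences."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae}


# Segment totals quoted in the abstract.
melbourne_sparsity = sparsity(141, 15933)

# Hypothetical observed vs. estimated volumes on four segments (illustration only).
observed = [120.0, 45.0, 300.0, 10.0]
estimated = [100.0, 50.0, 310.0, 10.0]
metrics = error_metrics(observed, estimated)
```

Here `melbourne_sparsity` comes out just above 0.99, consistent with the "99% sparsity" stated above.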

Carefully Structured Compression: Efficiently Managing StarCraft II Data

Oct 11, 2024

Abstract:Creation and storage of datasets are often overlooked input costs in machine learning, as many datasets are simple image label pairs or plain text. However, datasets with more complex structures, such as those from the real time strategy game StarCraft II, require more deliberate thought and strategy to reduce cost of ownership. We introduce a serialization framework for StarCraft II that reduces the cost of dataset creation and storage, as well as improving usage ergonomics. We benchmark against the most comparable existing dataset from AlphaStar-Unplugged and highlight the benefit of our framework in terms of both the cost of creation and storage. We use our dataset to train deep learning models that exceed the performance of comparable models trained on other datasets. The dataset conversion and usage framework introduced is open source and can be used as a framework for datasets with similar characteristics such as digital twin simulations. Pre-converted StarCraft II tournament data is also available online.

A Review of Differentiable Simulators

Jul 08, 2024

Abstract:Differentiable simulators continue to push the state of the art across a range of domains including computational physics, robotics, and machine learning. Their main value is the ability to compute gradients of physical processes, which allows differentiable simulators to be readily integrated into commonly employed gradient-based optimization schemes. To achieve this, a number of design decisions need to be considered representing trade-offs in versatility, computational speed, and accuracy of the gradients obtained. This paper presents an in-depth review of the evolving landscape of differentiable physics simulators. We introduce the foundations and core components of differentiable simulators alongside common design choices. This is followed by a practical guide and overview of open-source differentiable simulators that have been used across past research. Finally, we review and contextualize prominent applications of differentiable simulation. By offering a comprehensive review of the current state-of-the-art in differentiable simulation, this work aims to serve as a resource for researchers and practitioners looking to understand and integrate differentiable physics within their research. We conclude by highlighting current limitations as well as providing insights into future directions for the field.
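To make the idea of "gradients of physical processes" concrete, here is a minimal sketch, not drawn from the paper, of a hand-differentiated simulation in plain Python. It integrates a vertical throw with linear drag using explicit Euler, carries the derivative of the final height with respect to the initial velocity alongside the state (forward-mode sensitivity), and then uses that gradient in plain gradient descent to hit a target height. All names, constants and the drag model are assumptions chosen for illustration; real differentiable simulators typically obtain such gradients through automatic differentiation instead.

```python
def simulate(v0, steps=100, dt=0.01, g=9.81, drag=0.5):
    """Explicit-Euler vertical throw with linear drag.

    Returns the final height and its derivative with respect to v0.
    """
    y, v = 0.0, v0
    dy_dv0, dv_dv0 = 0.0, 1.0  # sensitivities of the state w.r.t. v0
    for _ in range(steps):
        # Position update uses the velocity from before this step.
        y += v * dt
        dy_dv0 += dv_dv0 * dt
        # Velocity update: gravity plus drag proportional to velocity.
        v -= (g + drag * v) * dt
        dv_dv0 -= drag * dv_dv0 * dt
    return y, dy_dv0


def fit_initial_velocity(target, v0=0.0, lr=0.5, iterations=50):
    """Gradient descent on the squared error between final height and target."""
    for _ in range(iterations):
        y, dy_dv0 = simulate(v0)
        grad = 2.0 * (y - target) * dy_dv0  # chain rule through the simulator
        v0 -= lr * grad
    return v0


best_v0 = fit_initial_velocity(target=2.0)
final_height, _ = simulate(best_v0)
```

Because this toy system is linear in `v0`, the optimization converges quickly; the trade-offs in speed and gradient accuracy mentioned in the abstract arise in less forgiving settings such as contact-rich dynamics.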

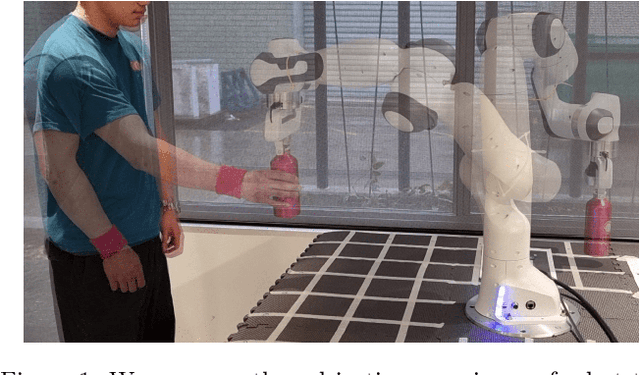

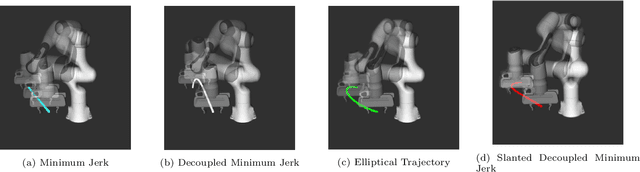

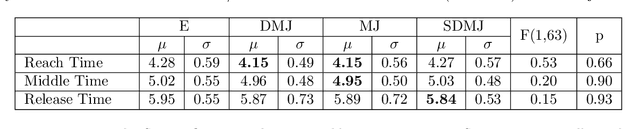

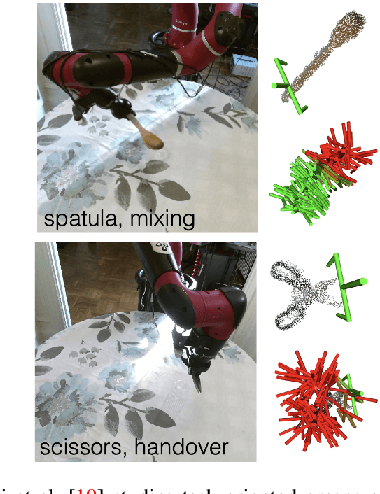

Comparing Subjective Perceptions of Robot-to-Human Handover Trajectories

Nov 16, 2022

Abstract:Robots must move legibly around people for safety reasons, especially for tasks where physical contact is possible. One such task is handovers, which require implicit communication on where and when physical contact (object transfer) occurs. In this work, we study whether the trajectory model used by a robot during the reaching phase affects the subjective perceptions of receivers for robot-to-human handovers. We conducted a user study where 32 participants were handed three objects with four trajectory models: three were versions of a minimum jerk trajectory, and one was an ellipse-fitting-based trajectory. The start position of the handover was fixed for all trajectories, and the end position was allowed to vary randomly around a fixed position by ±3 cm along each axis. The user study found no significant differences among the handover trajectories in survey questions relating to safety, predictability, naturalness, and other subjective metrics. While these results seemingly reject the hypothesis that the trajectory affects human perceptions of a handover, they prompt future research to investigate the effect of other variables, such as robot speed, object transfer position, object orientation at the transfer point, and explicit communication signals such as gaze and speech.
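For readers unfamiliar with the term, the sketch below shows the classic point-to-point minimum jerk profile, which starts and ends at rest with zero acceleration. It is the textbook form only; the abstract does not specify how the three minimum jerk variants in the study differ, so this should not be read as any one of them. The function name, the positions and the duration are hypothetical.

```python
def minimum_jerk(x0, xf, t, duration):
    """Position at time t on a minimum jerk path from x0 to xf."""
    # Normalized time, clamped to [0, 1].
    tau = min(max(t / duration, 0.0), 1.0)
    # Quintic blend with zero velocity and acceleration at both ends.
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * s


# Hypothetical reach along one axis: 0.0 m to 0.6 m in 2 s, sampled every 0.5 s.
samples = [minimum_jerk(0.0, 0.6, 0.5 * k, 2.0) for k in range(5)]
```

The profile is symmetric, so the midpoint of the motion is reached at exactly half the duration.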

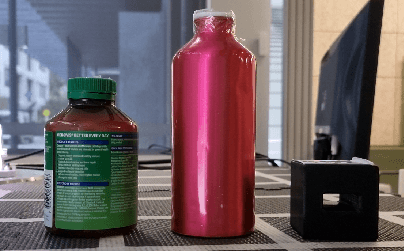

In-Hand Gravitational Pivoting Using Tactile Sensing

Oct 11, 2022

Abstract:We study gravitational pivoting, a constrained version of in-hand manipulation, where we aim to control the rotation of an object around the grip point of a parallel gripper. To achieve this, instead of controlling the gripper to avoid slip, we embrace slip to allow the object to rotate in-hand. We collect two real-world datasets, a static tracking dataset and a controller-in-the-loop dataset, both annotated with object angle and angular velocity labels. Both datasets contain force-based tactile information on ten different household objects. We train an LSTM model to predict the angular position and velocity of the held object from purely tactile data. We integrate this model with a controller that opens and closes the gripper, allowing the object to rotate to desired relative angles. We conduct real-world experiments where the robot is tasked with achieving a relative target angle. We show that our approach outperforms a sliding-window-based MLP in a zero-shot generalization setting with unseen objects. Furthermore, we show a 16.6% improvement in performance when the LSTM model is fine-tuned on a small set of data collected with both the LSTM model and the controller in the loop. Code and videos are available at https://rhys-newbury.github.io/projects/pivoting/

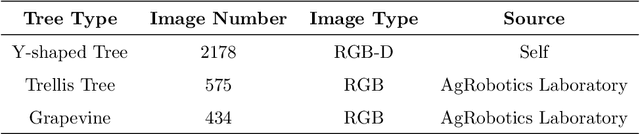

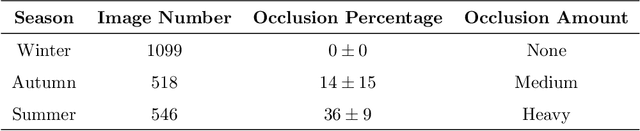

HOB-CNN: Hallucination of Occluded Branches with a Convolutional Neural Network for 2D Fruit Trees

Jul 28, 2022

Abstract:Orchard automation has recently attracted the attention of researchers due to the global labor shortage. To automate tasks in orchards such as pruning, thinning, and harvesting, a detailed understanding of the tree structure is required. However, occlusions from foliage and fruits can make it challenging to predict the position of occluded trunks and branches. This work proposes a regression-based deep learning model, Hallucination of Occluded Branch Convolutional Neural Network (HOB-CNN), for tree branch position prediction in varying occluded conditions. We formulate tree branch position prediction as a regression problem towards the horizontal locations of the branch along the vertical direction or vice versa. We present comparative experiments on Y-shaped trees with two state-of-the-art baselines, representing common approaches to the problem. Experiments show that HOB-CNN outperforms the baselines at predicting branch position and shows robustness against varying levels of occlusion. We further validated HOB-CNN against two different types of 2D trees, and HOB-CNN shows generalization across different trees and robustness under different occluded conditions.

Deep Learning Approaches to Grasp Synthesis: A Review

Jul 06, 2022

Abstract:Grasping is the process of picking an object by applying forces and torques at a set of contacts. Recent advances in deep-learning methods have allowed rapid progress in robotic object grasping. We systematically surveyed the publications over the last decade, with a particular interest in grasping an object using all 6 degrees of freedom of the end-effector pose. Our review found four common methodologies for robotic grasping: sampling-based approaches, direct regression, reinforcement learning, and exemplar approaches. Furthermore, we found two 'supporting methods' around grasping that use deep learning to support the grasping process: shape approximation and affordances. We have distilled the publications found in this systematic review (85 papers) into ten key takeaways we consider crucial for future robotic grasping and manipulation research. An online version of the survey is available at https://rhys-newbury.github.io/projects/6dof/

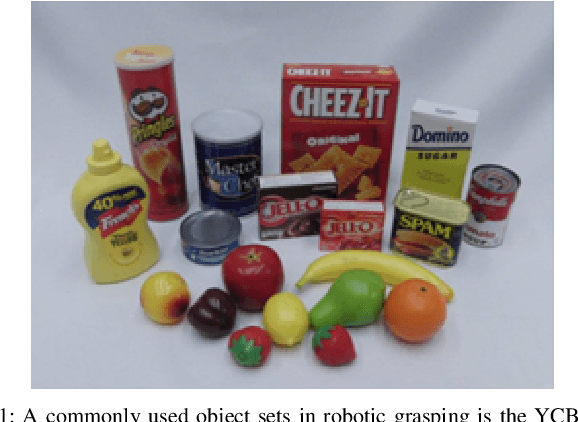

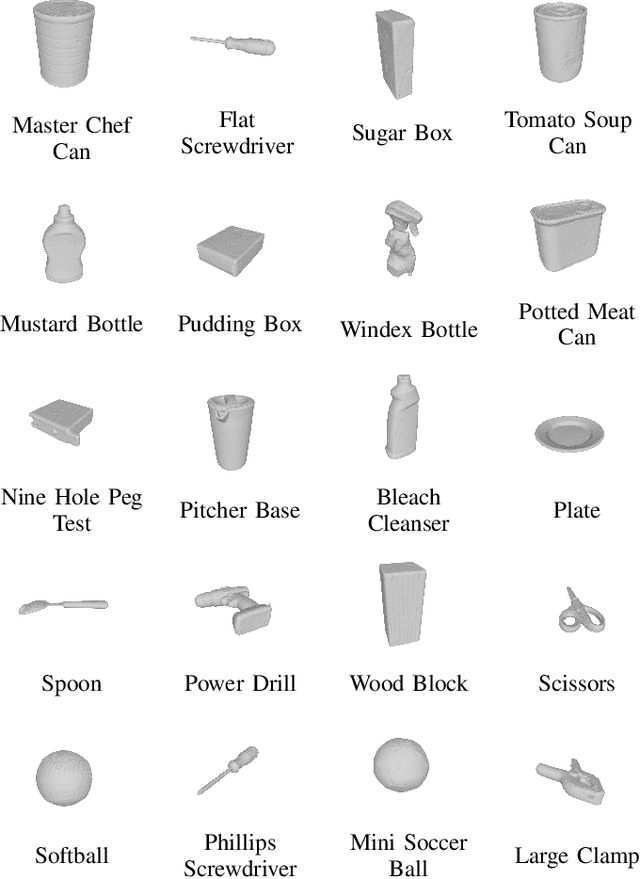

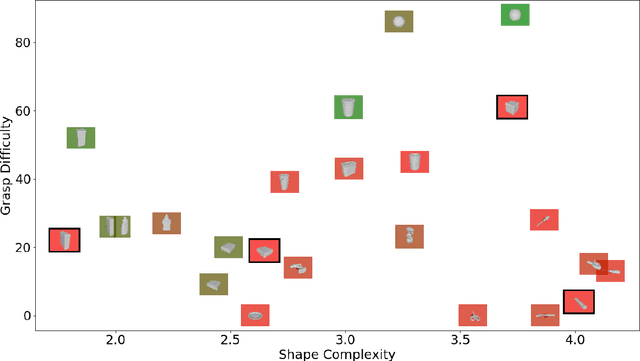

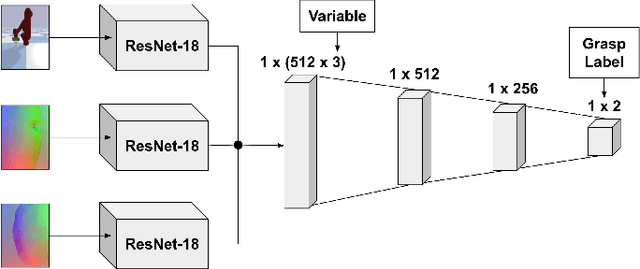

Integrating High-Resolution Tactile Sensing into Grasp Stability Prediction

Jun 12, 2022

Abstract:We investigate how high-resolution tactile sensors can be utilized in combination with vision and depth sensing to improve grasp stability prediction. Recent advances in simulating high-resolution tactile sensing, in particular the TACTO simulator, enabled us to evaluate how neural networks can be trained with a combination of sensing modalities. With the large amounts of data needed to train large neural networks, robotic simulators provide a fast way to automate the data collection process. We expand on the existing work through an ablation study and an increased set of objects taken from the YCB benchmark set. Our results indicate that while the combination of vision, depth, and tactile sensing provides the best prediction results on known objects, the network fails to generalize to unknown objects. Our work also addresses existing issues with robotic grasping in tactile simulation and how to overcome them.

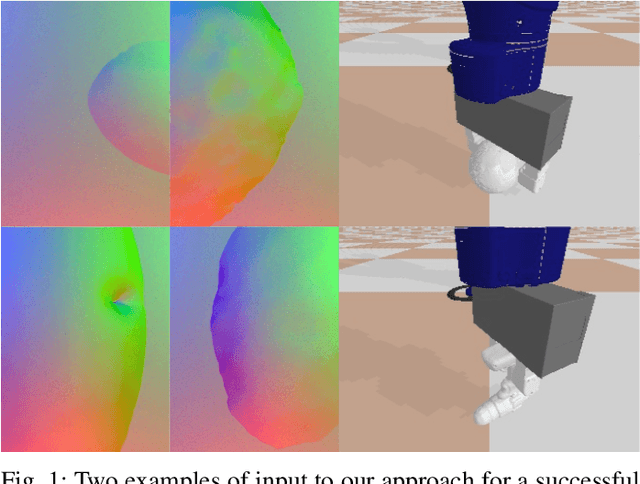

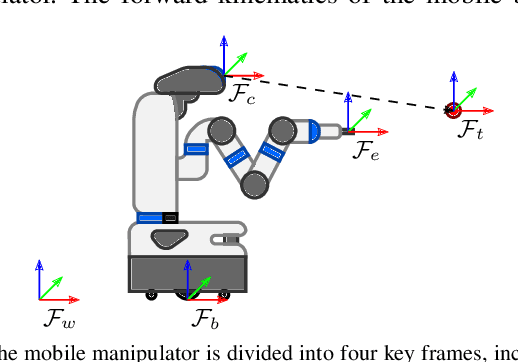

Visibility Maximization Controller for Robotic Manipulation

Feb 25, 2022

Abstract:Occlusions caused by a robot's own body are a common problem for closed-loop control methods employed in eye-to-hand camera setups. We propose an optimization-based reactive controller that minimizes self-occlusions while achieving a desired goal pose. The approach allows coordinated control between the robot's base, arm and head by encoding the line-of-sight visibility to the target as a soft constraint along with other task-related constraints, and solving for feasible joint and base velocities. The generalizability of the approach is demonstrated in simulated and real-world experiments, on robots with fixed or mobile bases, with moving or fixed objects, and multiple objects. The experiments revealed a trade-off between occlusion rates and other task metrics. While a planning-based baseline achieved lower occlusion rates than the proposed controller, it came at the expense of highly inefficient paths and a significant drop in task success. On the other hand, the proposed controller is shown to improve visibility of the target object(s) without sacrificing too much task success and efficiency. Videos and code can be found at: rhys-newbury.github.io/projects/vmc/.

Tabletop Object Rearrangement: Team ACRV's Entry to OCRTOC

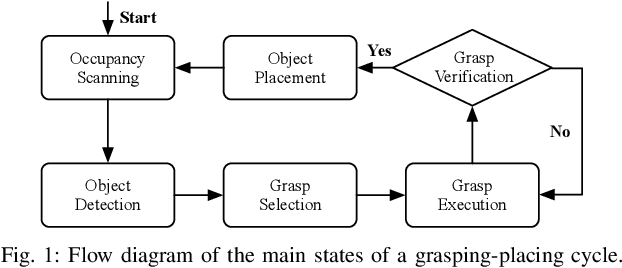

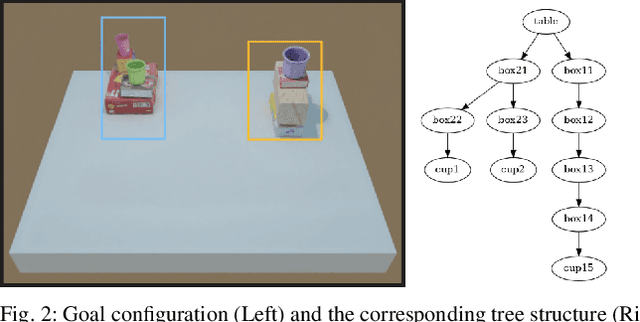

Apr 15, 2021

Abstract:Open Cloud Robot Table Organization Challenge (OCRTOC) is one of the most comprehensive cloud-based robotic manipulation competitions. It focuses on rearranging tabletop objects using vision as its primary sensing modality. In this extended abstract, we present our entry to the OCRTOC2020 and the key challenges the team has experienced.
