Peter Corke

Sashimi-Bot: Autonomous Tri-manual Advanced Manipulation and Cutting of Deformable Objects

Nov 14, 2025

Abstract:Advanced robotic manipulation of deformable, volumetric objects remains one of the greatest challenges due to their pliancy, frailness, variability, and uncertainties during interaction. Motivated by these challenges, this article introduces Sashimi-Bot, an autonomous multi-robotic system for advanced manipulation and cutting, specifically the preparation of sashimi. The objects that we manipulate, salmon loins, are natural in origin and vary in size and shape; they are limp and deformable with poorly characterized elastoplastic parameters, while also being slippery and hard to hold. The three robots straighten the loin; grasp and hold the knife; cut with the knife in a slicing motion while cooperatively stabilizing the loin during cutting; and pick up the thin slices from the cutting board or knife blade. Our system combines deep reinforcement learning with in-hand tool shape manipulation, in-hand tool cutting, and feedback of visual and tactile information to achieve robustness to the variabilities inherent in this task. This work represents a milestone in robotic manipulation of deformable, volumetric objects that may inspire and enable a wide range of other real-world applications.

Long Exposure Localization in Darkness Using Consumer Cameras

Apr 23, 2025

Abstract:In this paper we evaluate performance of the SeqSLAM algorithm for passive vision-based localization in very dark environments with low-cost cameras that result in massively blurred images. We evaluate the effect of motion blur from exposure times up to 10,000 ms from a moving car, and the performance of localization in day time from routes learned at night in two different environments. Finally we perform a statistical analysis that compares the baseline performance of matching unprocessed grayscale images to using patch normalization and local neighborhood normalization - the two key SeqSLAM components. Our results and analysis show for the first time why the SeqSLAM algorithm is effective, and demonstrate the potential for cheap camera-based localization systems that function despite extreme appearance change.
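
The abstract above names patch normalization as one of the two key SeqSLAM components. As a rough illustration only, the sketch below shows one common form of the idea: each image patch is rescaled to zero mean and unit standard deviation, which removes local brightness and contrast differences. The function name `patch_normalize`, the non-overlapping patch layout, and the default patch size are assumptions made for this example, not details taken from the paper.

```python
import numpy as np


def patch_normalize(image, patch_size=8):
    """Normalize each non-overlapping patch to zero mean and unit std.

    Hypothetical helper for illustration; the paper's exact procedure
    (patch size, image resolution, output scaling) may differ.
    """
    img = np.asarray(image, dtype=np.float64)
    height, width = img.shape
    out = np.zeros_like(img)
    for r in range(0, height, patch_size):
        for c in range(0, width, patch_size):
            patch = img[r:r + patch_size, c:c + patch_size]
            std = patch.std()
            if std > 0:
                out[r:r + patch_size, c:c + patch_size] = (patch - patch.mean()) / std
            # A perfectly flat patch carries no contrast; it is left as zeros.
    return out
```

With this form, an image and a brightened, higher-contrast copy of it (for example `3.0 * img + 5.0`) normalize to the same result, which hints at why such a step can help matching across day and night appearance change.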

Towards Assessing Compliant Robotic Grasping from First-Object Perspective via Instrumented Objects

Jan 15, 2024

Abstract:Grasping compliant objects is difficult for robots - applying too little force may cause the grasp to fail, while too much force may lead to object damage. A robot needs to apply the right amount of force to quickly and confidently grasp the objects so that it can perform the required task. Although some methods have been proposed to tackle this issue, performance assessment is still a problem for directly measuring object property changes and possible damage. To fill the gap, a new concept is introduced in this paper to assess compliant robotic grasping using instrumented objects. A proof-of-concept design is proposed to measure the force applied on a cuboid object from a first-object perspective. The design can detect multiple contact locations and applied forces on its surface by using multiple embedded 3D Hall sensors to detect deformation relative to embedded magnets. The contact estimation is achieved by interpreting the Hall-effect signals using neural networks. In comprehensive experiments, the design achieved good performance in estimating contacts from each single face of the cuboid and decent performance in detecting contacts from multiple faces when being used to evaluate grasping from a parallel jaw gripper, demonstrating the effectiveness of the design and the feasibility of the concept.

Reactive Base Control for On-The-Move Mobile Manipulation in Dynamic Environments

Sep 17, 2023

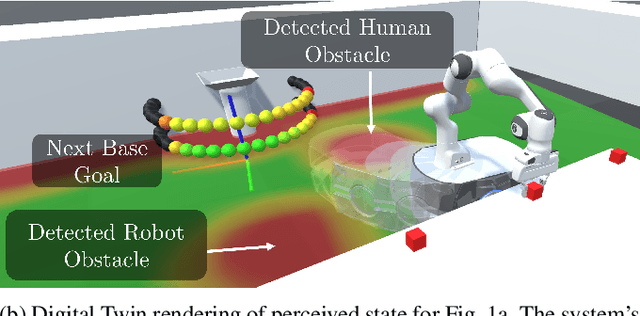

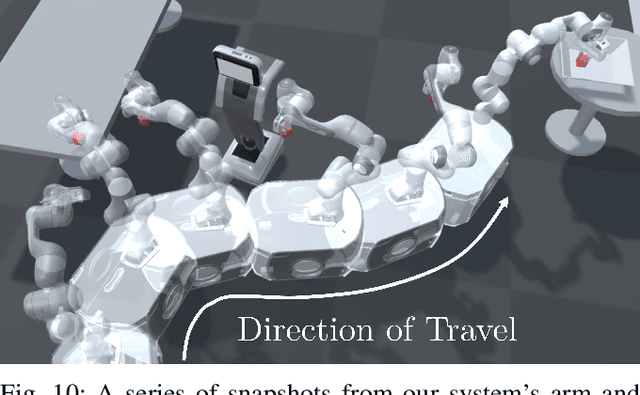

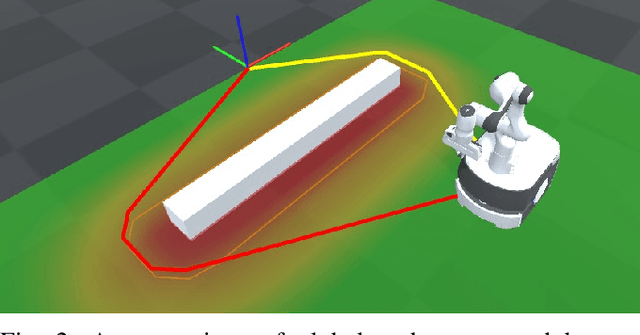

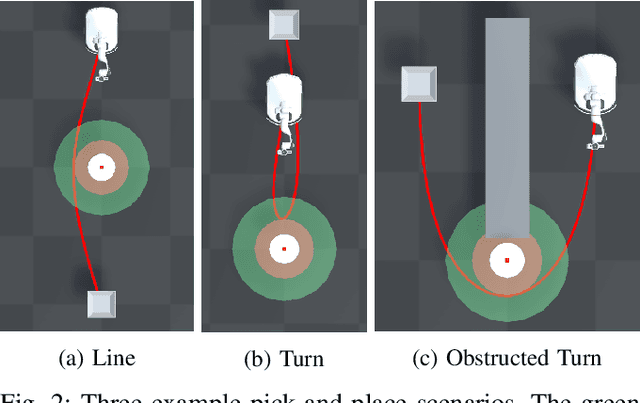

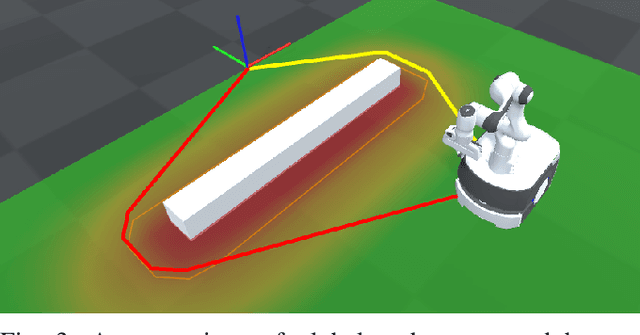

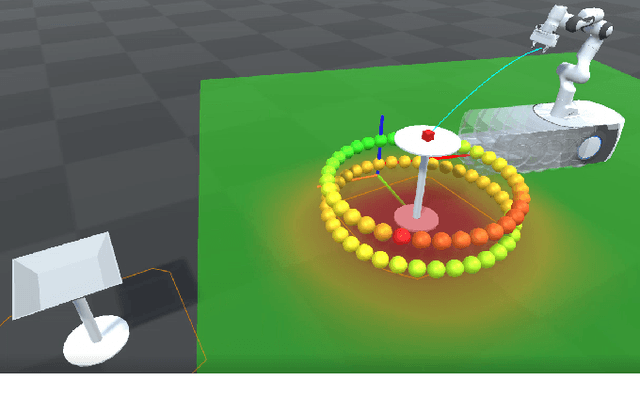

Abstract:We present a reactive base control method that enables high performance mobile manipulation on-the-move in environments with static and dynamic obstacles. Performing manipulation tasks while the mobile base remains in motion can significantly decrease the time required to perform multi-step tasks, as well as improve the gracefulness of the robot's motion. Existing approaches to manipulation on-the-move either ignore the obstacle avoidance problem or rely on the execution of planned trajectories, which is not suitable in environments with dynamic objects and obstacles. The presented controller addresses both of these deficiencies and demonstrates robust performance of pick-and-place tasks in dynamic environments. The performance is evaluated on several simulated and real-world tasks. On a real-world task with static obstacles, we outperform an existing method by 48% in terms of total task time. Further, we present real-world examples of our robot performing manipulation tasks on-the-move while avoiding a second autonomous robot in the workspace. See https://benburgesslimerick.github.io/MotM-BaseControl for supplementary materials.

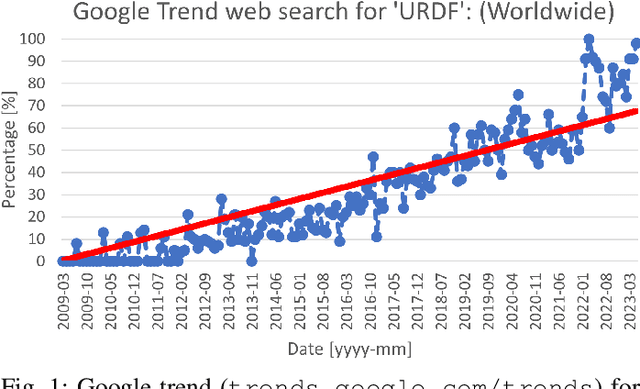

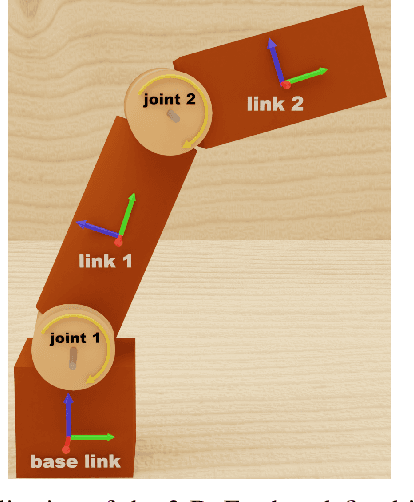

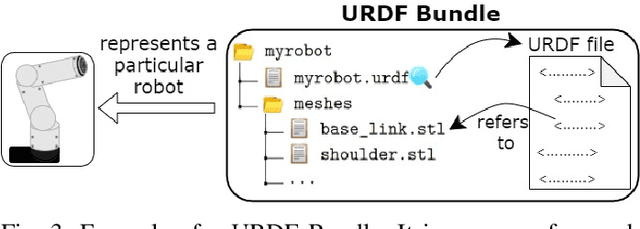

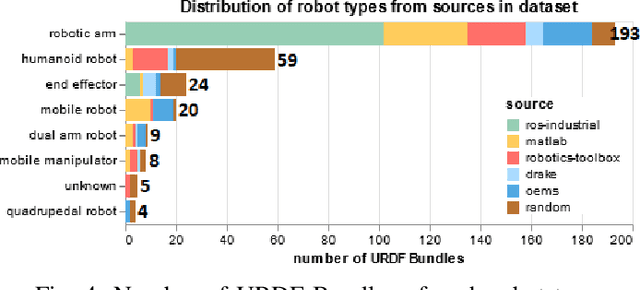

Understanding URDF: A Dataset and Analysis

Aug 01, 2023

Abstract:As the complexity of robot systems increases, it becomes more effective to simulate them before deployment. To do this, a model of the robot's kinematics or dynamics is required, and the most commonly used format is the Unified Robot Description Format (URDF). This article presents, to our knowledge, the first dataset of URDF files from various industrial and research organizations, with metadata describing each robot, its type, manufacturer, and the source of the model. The dataset contains 322 URDF files of which 195 are unique robot models, meaning the excess URDFs are either of a robot that is multiply defined across sources or URDF variants of the same robot. We analyze the files in the dataset, where we, among other things, provide information on how they were generated, which mesh file types are most commonly used, and compare models of multiply defined robots. The intention of this article is to build a foundation of knowledge on URDF and how it is used based on publicly available URDF files. Publishing the dataset, analysis, and the scripts and tools used enables others using, researching or developing URDFs to easily access this data and use it in their own work.
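
To make the kind of per-file analysis described above more concrete, here is a minimal sketch that parses a URDF document and tallies its links, joint types, and mesh file extensions using only the Python standard library. The toy robot in `EXAMPLE_URDF` and the helper `summarize_urdf` are invented for this illustration; they are not part of the dataset or the authors' published scripts.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from pathlib import PurePosixPath

# Hypothetical toy robot, written only for this example.
EXAMPLE_URDF = """<robot name="toy_arm">
  <link name="base">
    <visual><geometry><mesh filename="package://toy/meshes/base.dae"/></geometry></visual>
    <collision><geometry><mesh filename="package://toy/meshes/base.stl"/></geometry></collision>
  </link>
  <link name="arm">
    <visual><geometry><mesh filename="package://toy/meshes/arm.STL"/></geometry></visual>
  </link>
  <joint name="shoulder" type="revolute">
    <parent link="base"/><child link="arm"/>
  </joint>
</robot>"""


def summarize_urdf(urdf_text):
    """Return basic counts for one URDF document given as a string."""
    root = ET.fromstring(urdf_text)
    # Mesh references may appear under <visual> or <collision>; count both,
    # lower-casing the extension so ".STL" and ".stl" are grouped together.
    mesh_types = Counter(
        PurePosixPath(mesh.get("filename", "")).suffix.lower()
        for mesh in root.iter("mesh")
    )
    joint_types = Counter(joint.get("type") for joint in root.findall("joint"))
    return {
        "name": root.get("name"),
        "links": len(root.findall("link")),
        "joint_types": dict(joint_types),
        "mesh_types": dict(mesh_types),
    }


summary = summarize_urdf(EXAMPLE_URDF)
```

Running the same function over many files and summing the `mesh_types` counters would give a simple view of which mesh formats are most common, under the assumption that every file is well-formed XML.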

Robotic Vision for Human-Robot Interaction and Collaboration: A Survey and Systematic Review

Jul 28, 2023

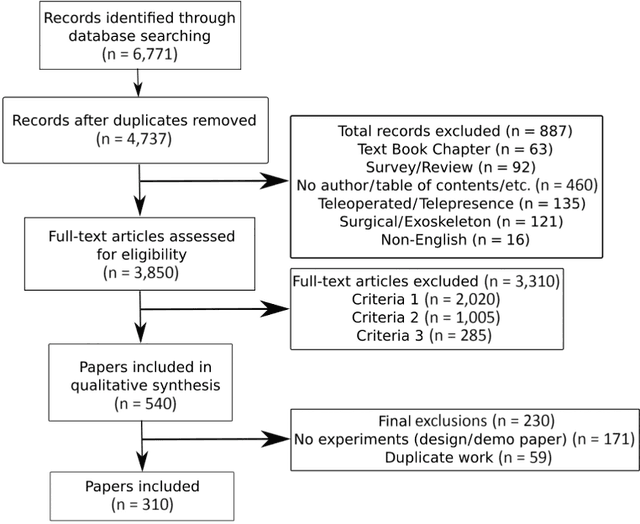

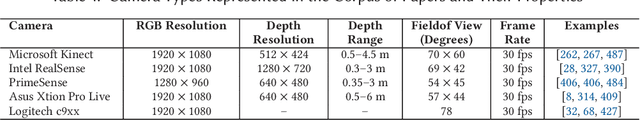

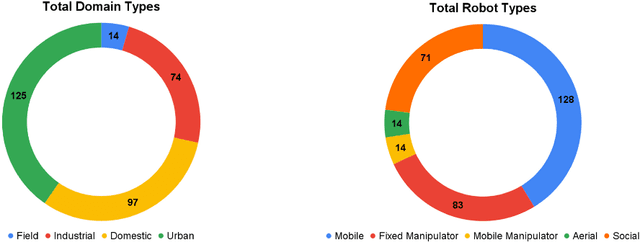

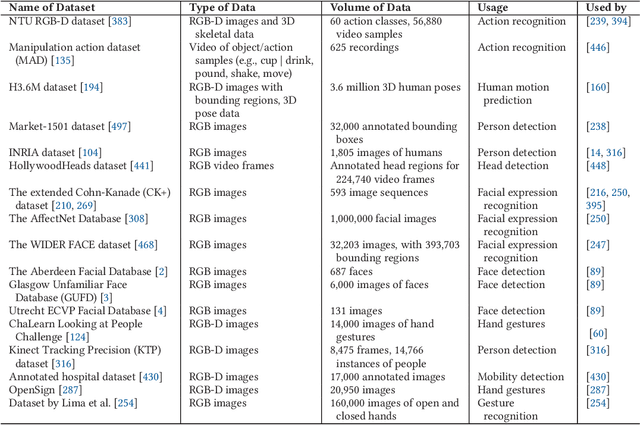

Abstract:Robotic vision for human-robot interaction and collaboration is a critical process for robots to collect and interpret detailed information related to human actions, goals, and preferences, enabling robots to provide more useful services to people. This survey and systematic review presents a comprehensive analysis on robotic vision in human-robot interaction and collaboration over the last 10 years. From a detailed search of 3850 articles, systematic extraction and evaluation was used to identify and explore 310 papers in depth. These papers described robots with some level of autonomy using robotic vision for locomotion, manipulation and/or visual communication to collaborate or interact with people. This paper provides an in-depth analysis of current trends, common domains, methods and procedures, technical processes, data sets and models, experimental testing, sample populations, performance metrics and future challenges. This manuscript found that robotic vision was often used in action and gesture recognition, robot movement in human spaces, object handover and collaborative actions, social communication and learning from demonstration. Few high-impact and novel techniques from the computer vision field had been translated into human-robot interaction and collaboration. Overall, notable advancements have been made on how to develop and deploy robots to assist people.

Enabling Failure Recovery for On-The-Move Mobile Manipulation

May 15, 2023

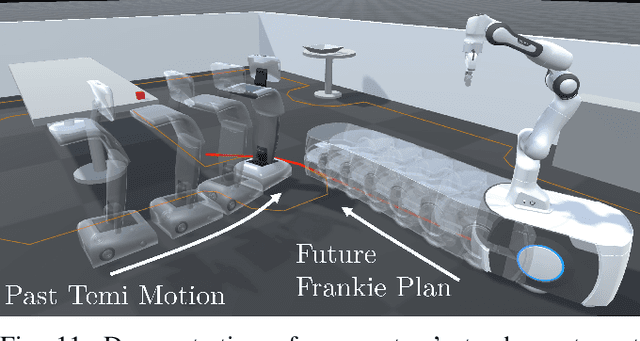

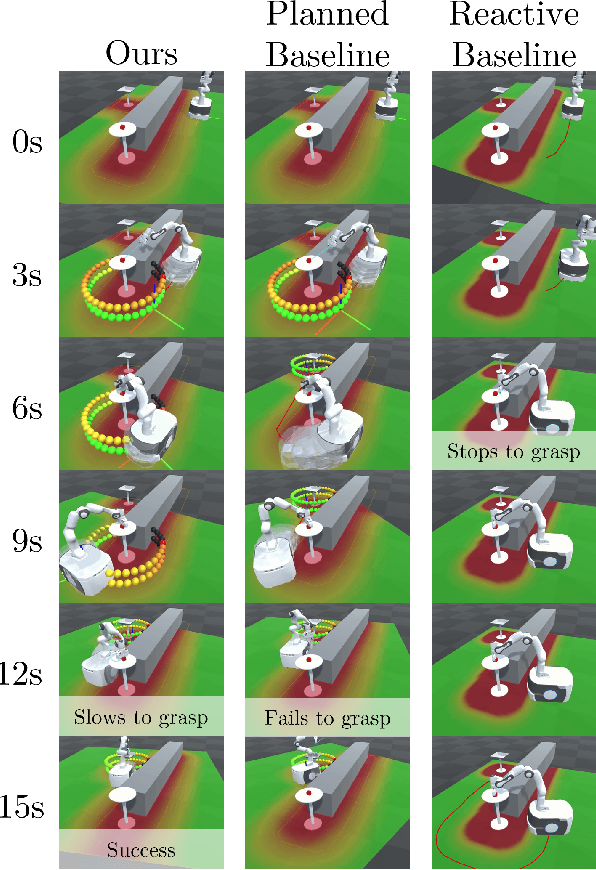

Abstract:We present a robot base placement and control method that enables a mobile manipulator to gracefully recover from manipulation failures while performing tasks on-the-move. A mobile manipulator in motion has a limited window to complete a task, unlike when stationary where it can make repeated attempts until successful. Existing approaches to manipulation on-the-move are typically based on open-loop execution of planned trajectories which does not allow the base controller to react to manipulation failures, slowing down or stopping as required. To overcome this limitation, we present a reactive base control method that repeatedly evaluates the best base placement given the robot's current state, the immediate manipulation task, as well as the next part of a multi-step task. The result is a system that retains the reliability of traditional mobile manipulation approaches where the base comes to a stop, but leverages the performance gains available by performing manipulation on-the-move. The controller keeps the base in range of the target for as long as required to recover from manipulation failures while making as much progress as possible toward the next objective. See https://benburgesslimerick.github.io/MotM-FailureRecovery for videos of experiments.

The Need for Inherently Privacy-Preserving Vision in Trustworthy Autonomous Systems

Mar 29, 2023

Abstract:Vision is a popular and effective sensor for robotics from which we can derive rich information about the environment: the geometry and semantics of the scene, as well as the age, gender, identity, activity and even emotional state of humans within that scene. This raises important questions about the reach, lifespan, and potential misuse of this information. This paper is a call to action to consider privacy in the context of robotic vision. We propose a specific form of privacy preservation in which no images are captured or could be reconstructed by an attacker even with full remote access. We present a set of principles by which such systems can be designed, and through a case study in localisation demonstrate in simulation a specific implementation that delivers an important robotic capability in an inherently privacy-preserving manner. This is a first step, and we hope to inspire future works that expand the range of applications open to sighted robotic systems.

Understanding URDF: A Survey Based on User Experience

Mar 12, 2023

Abstract:With the increasing complexity of robot systems, it is necessary to simulate them before deployment. To do this, a model of the robot's kinematics or dynamics is required. One of the most commonly used formats for modeling robots is the Unified Robot Description Format (URDF). The goal of this article is to understand how URDF is currently used, what challenges people face when working with it, and how the community sees the future of URDF. The outcome can potentially be used to guide future research. This article presents the results from a survey based on 510 anonymous responses from robotic developers of different backgrounds and levels of experience. We find that 96.8% of the participants have simulated robots before, and of them 95.5% had used URDF. We identify a number of challenges and limitations that complicate the use of URDF, such as the inability to model parallel linkages and closed-chain systems, no real standard, lack of documentation, and a limited number of dynamic parameters to model the robot. Future perspectives for URDF are also determined, where 53.5% believe URDF will be more commonly used in the future, 12.2% believe other standards or tools will make URDF obsolete, and 34.4% are not sure what the future of URDF will be. Most participants agree that there is a need for better tooling to ensure URDF's future use.

Re-evaluating Parallel Finger-tip Tactile Sensing for Inferring Object Adjectives: An Empirical Study

Mar 12, 2023

Abstract:Finger-tip tactile sensors are increasingly used for robotic sensing to establish stable grasps and to infer object properties. Promising performance has been shown in a number of works for inferring adjectives that describe the object, but there remains a question about how each taxel contributes to the performance. This paper explores this question with empirical experiments, leading to insights for future finger-tip tactile sensor usage and design.
