Nicole Robinson

A Brief Wellbeing Training Session Delivered by a Humanoid Social Robot: A Pilot Randomized Controlled Trial

Aug 12, 2023

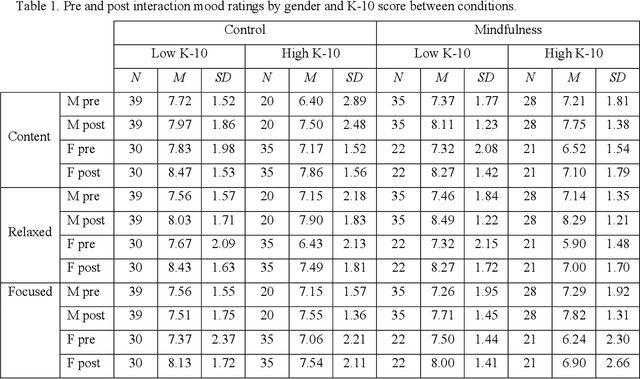

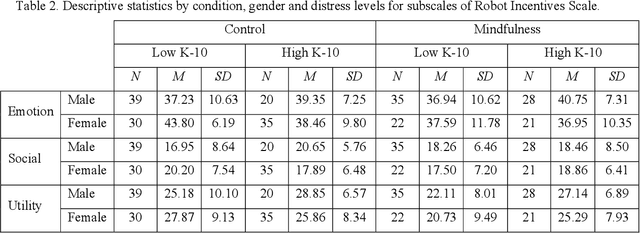

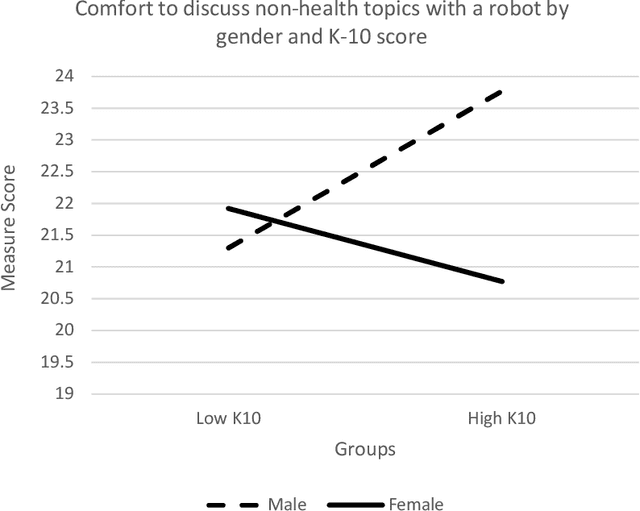

Abstract: Mental health and psychological distress are rising in adults, showing the importance of wellbeing promotion, support, and technique practice that is effective and accessible. Interactive social robots have been tested to deliver health programs but have not been explored in detail for delivering wellbeing technique training. A pilot randomised controlled trial was conducted to explore the feasibility of an autonomous humanoid social robot delivering a brief mindful breathing technique to promote information around wellbeing. It contained two conditions: brief technique training (Technique) and a control designed as a simple wait-list activity representing a relationship-building discussion (Simple Rapport). This trial also explored willingness to discuss health-related topics with a robot. Recruitment uptake rate through convenience sampling was high (53%). A total of 230 participants took part (mean age = 29 years), with 71% being higher education students. There were moderate ratings of technique enjoyment, perceived usefulness, and likelihood of repeating the technique. Interaction effects were found across measures, with scores varying across gender and distress levels. Males with high distress and females with low distress who received the simple rapport activity reported greater comfort discussing non-health topics than males with low distress and females with high distress. This trial marks a notable step towards the design and deployment of an autonomous wellbeing intervention to investigate the impact of a brief robot-delivered mindfulness training program for a sub-clinical population.

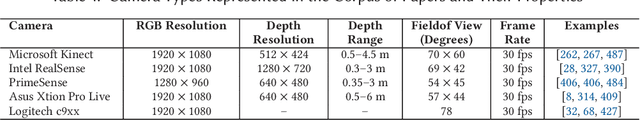

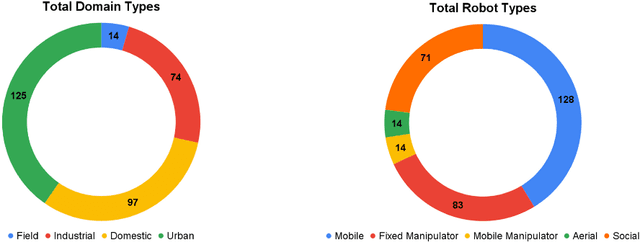

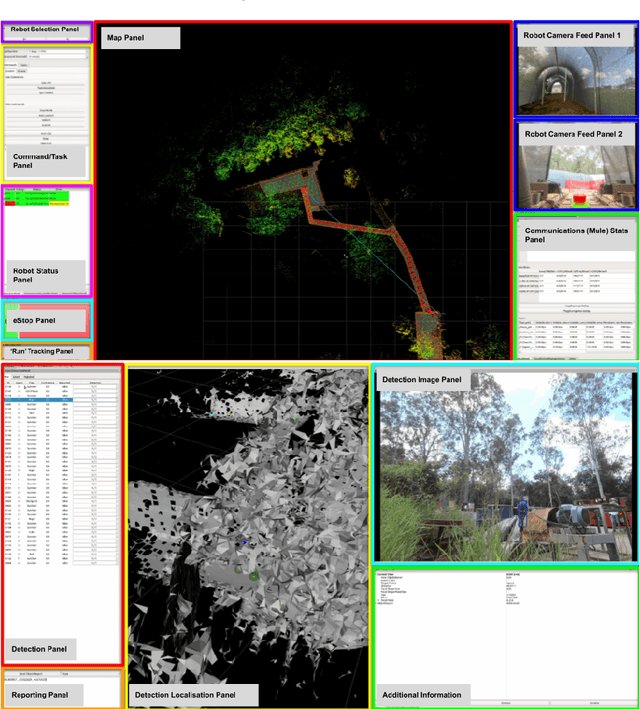

Robotic Vision for Human-Robot Interaction and Collaboration: A Survey and Systematic Review

Jul 28, 2023

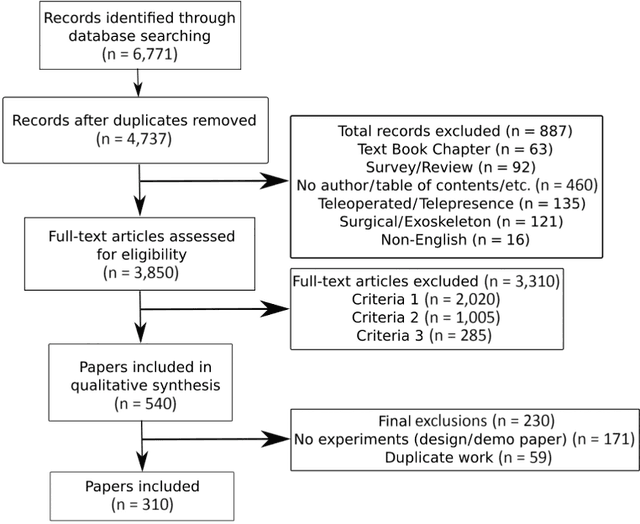

Abstract: Robotic vision for human-robot interaction and collaboration is a critical process for robots to collect and interpret detailed information related to human actions, goals, and preferences, enabling robots to provide more useful services to people. This survey and systematic review presents a comprehensive analysis of robotic vision in human-robot interaction and collaboration over the last 10 years. From a detailed search of 3850 articles, systematic extraction and evaluation were used to identify and explore 310 papers in depth. These papers described robots with some level of autonomy using robotic vision for locomotion, manipulation and/or visual communication to collaborate or interact with people. This paper provides an in-depth analysis of current trends, common domains, methods and procedures, technical processes, data sets and models, experimental testing, sample populations, performance metrics and future challenges. The review found that robotic vision was often used in action and gesture recognition, robot movement in human spaces, object handover and collaborative actions, social communication and learning from demonstration. Few high-impact and novel techniques from the computer vision field had been translated into human-robot interaction and collaboration. Overall, notable advancements have been made on how to develop and deploy robots to assist people.

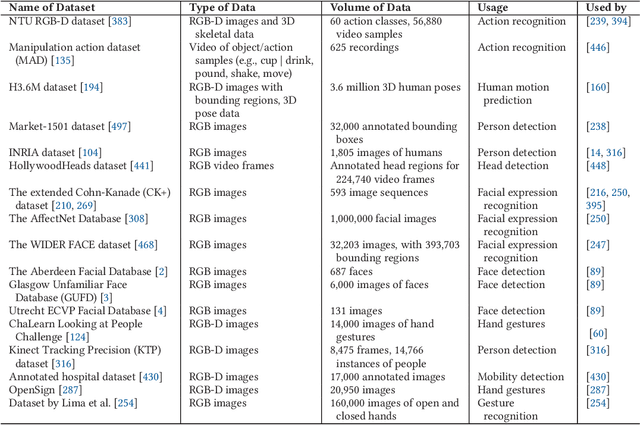

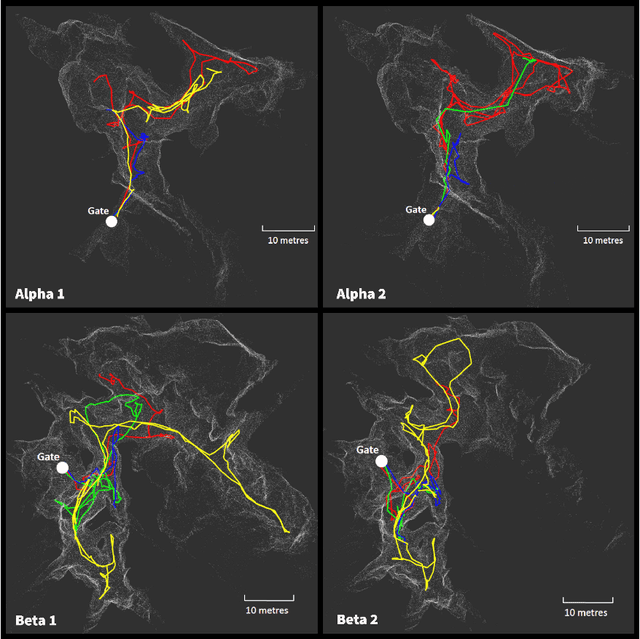

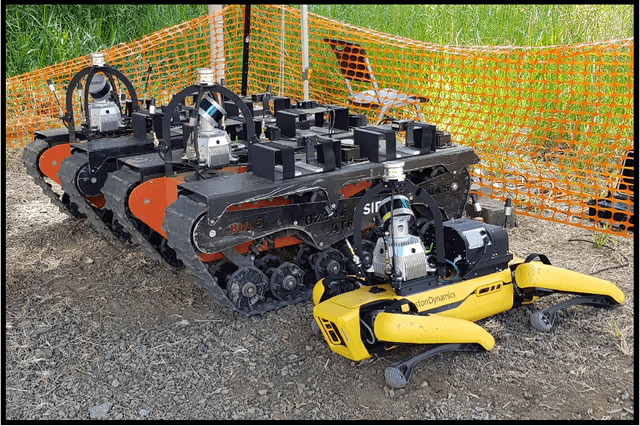

Human-Robot Team Performance Compared to Full Robot Autonomy in 16 Real-World Search and Rescue Missions: Adaptation of the DARPA Subterranean Challenge

Dec 11, 2022

Abstract: Human operators in human-robot teams are commonly perceived to be critical for mission success. To explore the direct and perceived impact of operator input on task success and team performance, 16 real-world missions (10 hours) were conducted based on the DARPA Subterranean Challenge. These missions deployed a heterogeneous team of robots for a search task to locate and identify artifacts such as climbing rope, drills and mannequins representing human survivors. Two conditions were evaluated: human operators who could control the robot team with state-of-the-art autonomy (Human-Robot Team) compared to autonomous missions without human operator input (Robot-Autonomy). Human-Robot Teams were often in directed autonomy mode (70% of mission time), found more items, traversed more distance, covered more unique ground, and had a higher time between safety-related events. Human-Robot Teams were faster at finding the first artifact, but slower to respond to information from the robot team. In routine conditions, scores were comparable for artifacts, distance, and coverage. Reasons for intervention included creating waypoints to prioritise high-yield areas and navigating through error-prone spaces. After observing robot autonomy, operators reported increases in robot competency and trust, but also that robot behaviour was not always transparent and understandable, even after high mission performance.

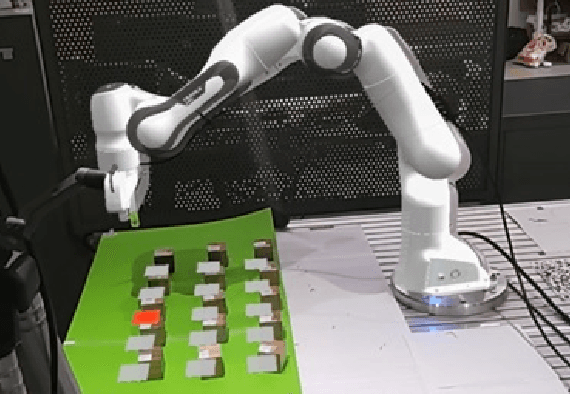

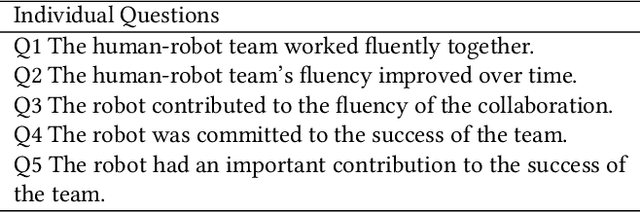

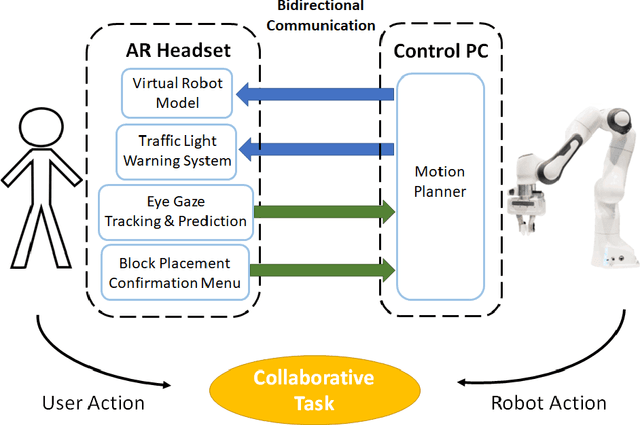

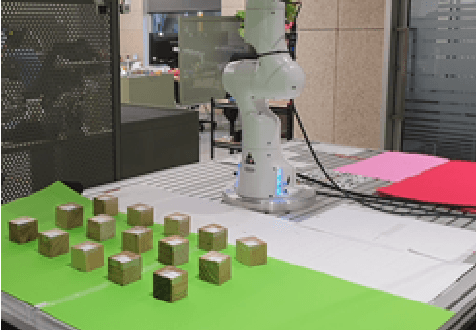

Design and Implementation of a Human-Robot Joint Action Framework using Augmented Reality and Eye Gaze

Aug 25, 2022

Abstract: When humans work together to complete a joint task, each person builds an internal model of the situation and how it will evolve. Efficient collaboration depends on how these individual models overlap to form a shared mental model among team members, which is important for collaborative processes in human-robot teams. The development and maintenance of an accurate shared mental model requires bidirectional communication of individual intent and the ability to interpret the intent of other team members. To enable effective human-robot collaboration, this paper presents the design and implementation of a novel joint action framework for human-robot team collaboration, utilizing augmented reality (AR) technology and user eye gaze to enable bidirectional communication of intent. We tested our new framework through a user study with 37 participants, and found that our system improves task efficiency, trust, and task fluency. Therefore, using AR and eye gaze to enable bidirectional communication is a promising means to improve core components that influence collaboration between humans and robots.

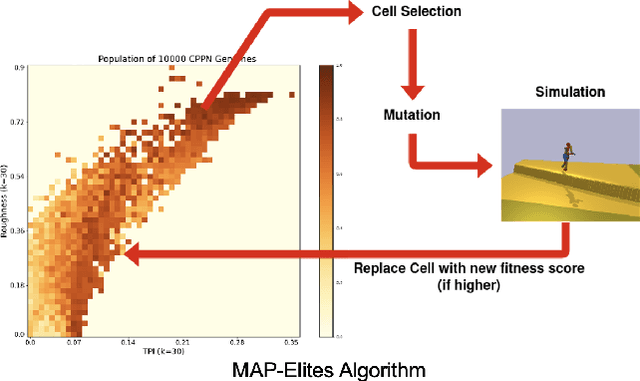

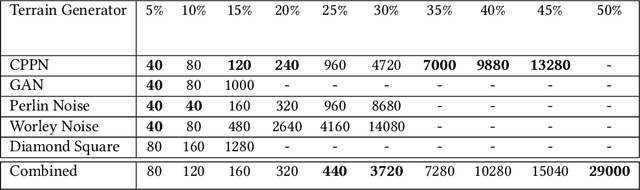

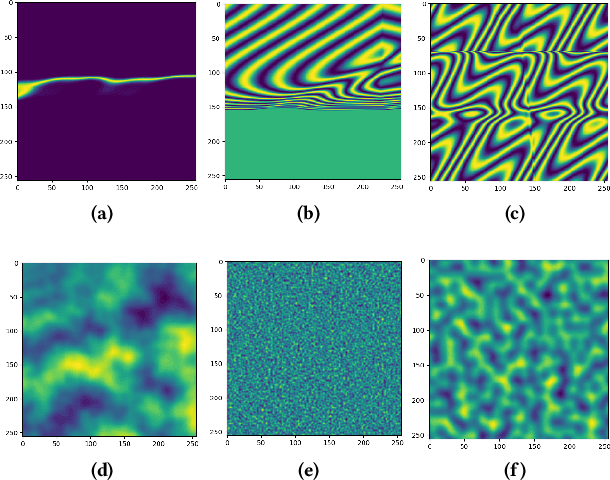

Assessing Evolutionary Terrain Generation Methods for Curriculum Reinforcement Learning

Mar 29, 2022

Abstract: Curriculum learning allows complex tasks to be mastered via incremental progression over 'stepping stone' goals towards a final desired behaviour. Typical implementations learn locomotion policies for challenging environments through gradual complexification of a terrain mesh generated through a parameterised noise function. To date, researchers have predominantly generated terrains from a limited range of noise functions, and the effect of the generator on the learning process is underrepresented in the literature. We compare popular noise-based terrain generators to two indirect encodings, CPPN and GAN. To allow direct comparison between both direct and indirect representations, we assess the impact of a range of representation-agnostic MAP-Elites feature descriptors that compute metrics directly from the generated terrain meshes. Next, performance and coverage are assessed when training a humanoid robot in a physics simulator using the PPO algorithm. Results describe key differences between the generators that inform their use in curriculum learning, and present a range of useful feature descriptors for uptake by the community.
