Jesse Haviland

ROS2WASM: Bringing the Robot Operating System to the Web

Sep 16, 2024

Abstract: The Robot Operating System (ROS) has become the de facto standard middleware in robotics, widely adopted across domains ranging from education to industrial applications. The RoboStack distribution has extended ROS's accessibility by facilitating installation across all major operating systems and architectures, integrating seamlessly with scientific tools such as PyTorch and Open3D. This paper presents ROS2WASM, a novel integration of RoboStack with WebAssembly, enabling the execution of ROS 2 and its associated software directly within web browsers, without requiring local installations. This approach significantly enhances reproducibility and shareability of research, lowers barriers to robotics education, and leverages WebAssembly's robust security framework to protect against malicious code. We detail our methodology for cross-compiling ROS 2 packages into WebAssembly, the development of a specialized middleware for ROS 2 communication within browsers, and the implementation of a web platform available at www.ros2wasm.dev that allows users to interact with ROS 2 environments. Additionally, we extend support to the Robotics Toolbox for Python and adapt its Swift simulator for browser compatibility. Our work paves the way for unprecedented accessibility in robotics, offering scalable, secure, and reproducible environments that have the potential to transform educational and research paradigms.

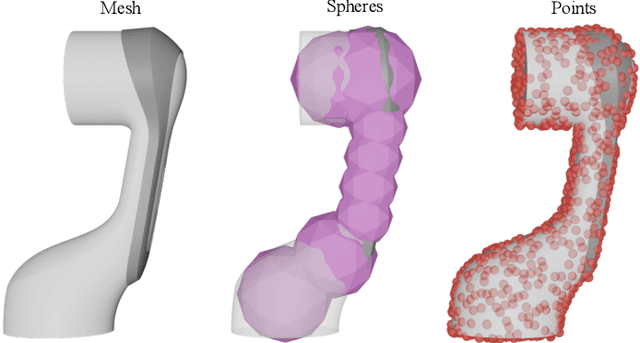

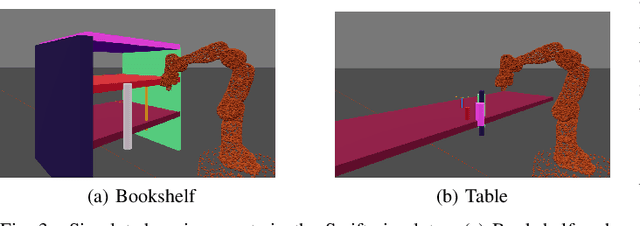

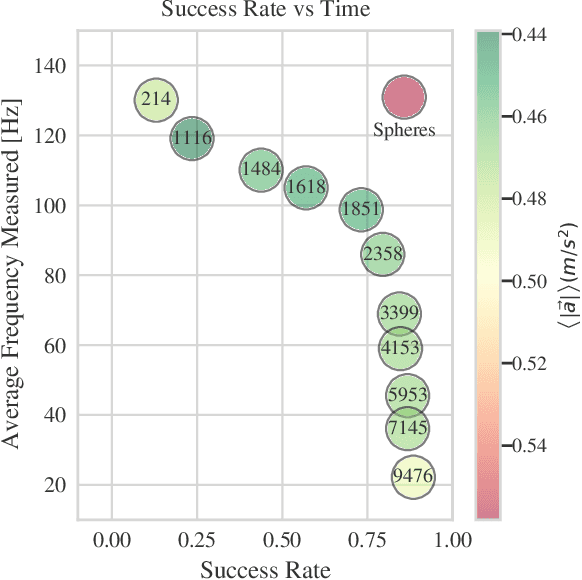

RMMI: Enhanced Obstacle Avoidance for Reactive Mobile Manipulation using an Implicit Neural Map

Aug 29, 2024

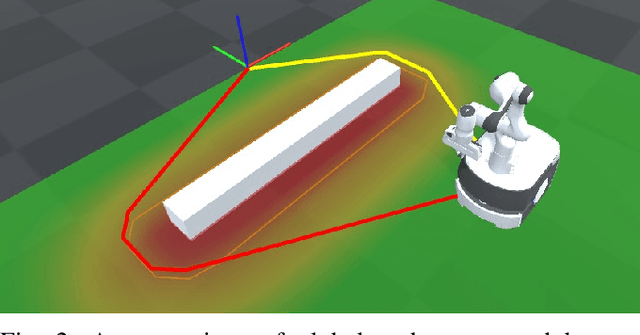

Abstract: We introduce RMMI, a novel reactive control framework for mobile manipulators operating in complex, static environments. Our approach leverages a neural Signed Distance Field (SDF) to model intricate environment details and incorporates this representation as inequality constraints within a Quadratic Program (QP) to coordinate robot joint and base motion. A key contribution is the introduction of an active collision avoidance cost term that maximises the total robot distance to obstacles during the motion. We first evaluate our approach in a simulated reaching task, outperforming previous methods that rely on representing both the robot and the scene as a set of primitive geometries. Compared with the baseline, we improve the task success rate by 25% in total, of which 10% comes from using the active collision cost. We also demonstrate our approach on a real-world platform, showing its effectiveness in reaching target poses in cluttered and confined spaces using environment models built directly from sensor data. For additional details and experiment videos, visit https://rmmi.github.io/.
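To illustrate how a distance field can enter a QP as an inequality constraint, the sketch below builds one constraint row for a single point on the robot. It is an illustration under stated assumptions, not the RMMI formulation: the abstract does not give the form of the constraint, an analytic sphere SDF stands in for the neural SDF, and the velocity-damper style bound along with the names `sphere_sdf`, `collision_constraint`, `xi`, `d_influence` and `d_stop` are all hypothetical.

```python
import math


def sphere_sdf(p, centre, radius):
    """Signed distance and unit gradient for a sphere (stand-in for a neural SDF).

    Assumes p does not coincide with the sphere centre.
    """
    dx, dy = p[0] - centre[0], p[1] - centre[1]
    norm = math.hypot(dx, dy)
    return norm - radius, (dx / norm, dy / norm)


def collision_constraint(p, Jp, centre, radius,
                         xi=1.0, d_influence=1.9, d_stop=0.1):
    """Return (row, bound) such that row . qd <= bound, or None if far away.

    p  : 2D position of a point on the robot
    Jp : 2 x n Jacobian mapping joint velocities qd to the velocity of p
    The distance rate is n^T Jp qd, so requiring it to stay above
    -xi * (d - d_stop) / (d_influence - d_stop) gives the row below.
    """
    d, n = sphere_sdf(p, centre, radius)
    if d > d_influence:
        return None  # obstacle too far away to constrain the motion
    n_joints = len(Jp[0])
    row = [-(n[0] * Jp[0][k] + n[1] * Jp[1][k]) for k in range(n_joints)]
    bound = xi * (d - d_stop) / (d_influence - d_stop)
    return row, bound
```

Each active point would contribute one such row to the inequality block of the QP; the closer the point is to `d_stop`, the tighter the bound on motion towards the obstacle.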

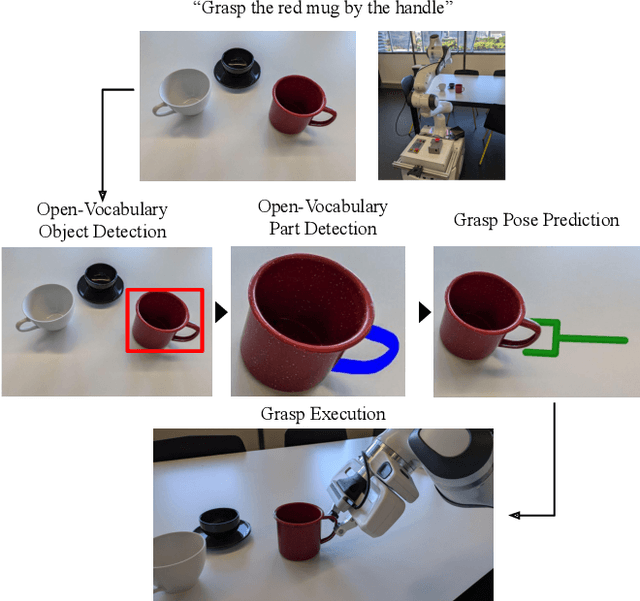

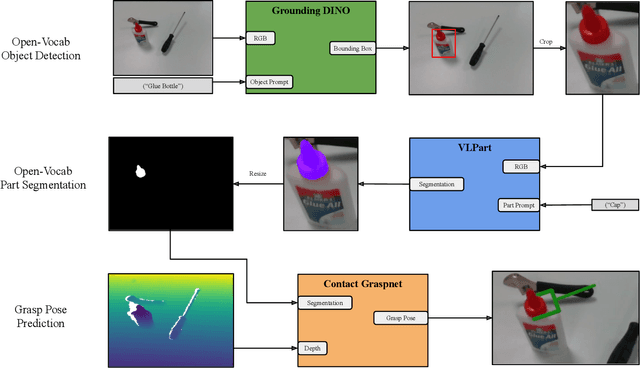

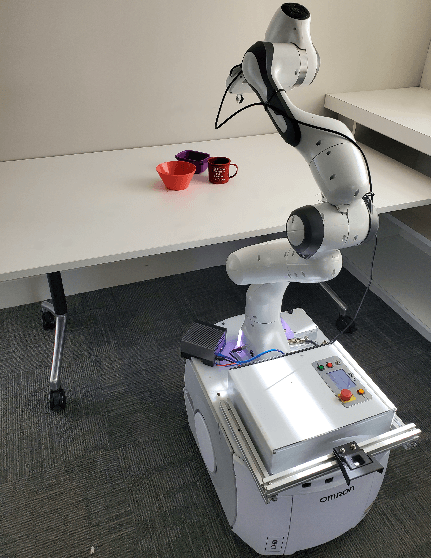

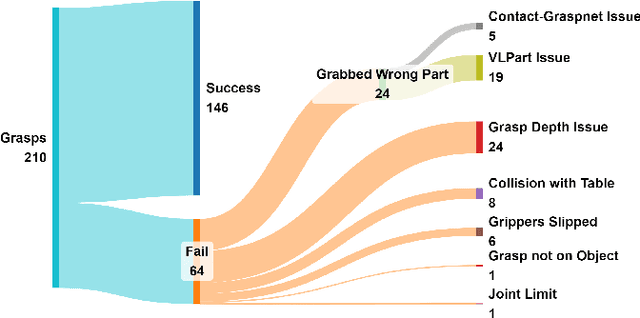

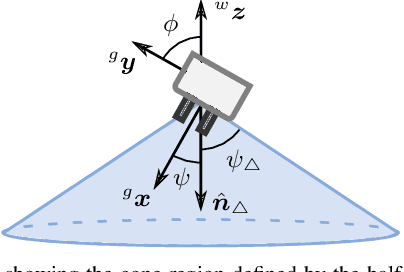

Open-Vocabulary Part-Based Grasping

Jun 10, 2024

Abstract: Many robotic applications require grasping objects not arbitrarily but at a very specific object part. This is especially important for manipulation tasks beyond simple pick-and-place scenarios or in robot-human interactions, such as object handovers. We propose AnyPart, a practical system that combines open-vocabulary object detection, open-vocabulary part segmentation and 6DOF grasp pose prediction to infer a grasp pose on a specific part of an object in 800 milliseconds. We contribute two new datasets for the task of open-vocabulary part-based grasping: a hand-segmented dataset containing 1014 object-part segmentations, and a dataset of real-world scenarios gathered during our robot trials for individual objects and table-clearing tasks. We evaluate AnyPart on a mobile manipulator robot using a set of 28 common household objects over 360 grasping trials. AnyPart produces successful grasps 69.52% of the time; when ignoring robot-based grasp failures, it predicts a grasp location on the correct part 88.57% of the time.

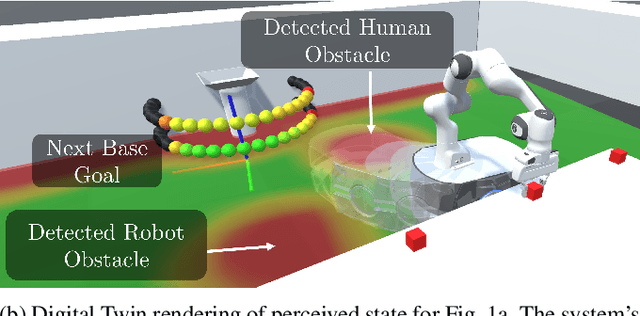

Reactive Base Control for On-The-Move Mobile Manipulation in Dynamic Environments

Sep 17, 2023

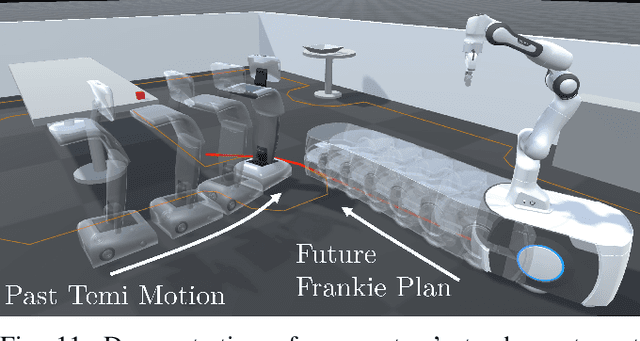

Abstract: We present a reactive base control method that enables high-performance mobile manipulation on-the-move in environments with static and dynamic obstacles. Performing manipulation tasks while the mobile base remains in motion can significantly decrease the time required to perform multi-step tasks, as well as improve the gracefulness of the robot's motion. Existing approaches to manipulation on-the-move either ignore the obstacle avoidance problem or rely on the execution of planned trajectories, which is not suitable in environments with dynamic objects and obstacles. The presented controller addresses both of these deficiencies and demonstrates robust performance of pick-and-place tasks in dynamic environments. The performance is evaluated on several simulated and real-world tasks. On a real-world task with static obstacles, we outperform an existing method by 48% in terms of total task time. Further, we present real-world examples of our robot performing manipulation tasks on-the-move while avoiding a second autonomous robot in the workspace. See https://benburgesslimerick.github.io/MotM-BaseControl for supplementary materials.

SayPlan: Grounding Large Language Models using 3D Scene Graphs for Scalable Task Planning

Jul 12, 2023

Abstract: Large language models (LLMs) have demonstrated impressive results in developing generalist planning agents for diverse tasks. However, grounding these plans in expansive, multi-floor, and multi-room environments presents a significant challenge for robotics. We introduce SayPlan, a scalable approach to LLM-based, large-scale task planning for robotics using 3D scene graph (3DSG) representations. To ensure the scalability of our approach, we: (1) exploit the hierarchical nature of 3DSGs to allow LLMs to conduct a semantic search for task-relevant subgraphs from a smaller, collapsed representation of the full graph; (2) reduce the planning horizon for the LLM by integrating a classical path planner; and (3) introduce an iterative replanning pipeline that refines the initial plan using feedback from a scene graph simulator, correcting infeasible actions and avoiding planning failures. We evaluate our approach on two large-scale environments spanning up to 3 floors, 36 rooms and 140 objects, and show that our approach is capable of grounding large-scale, long-horizon task plans from abstract, natural language instructions for a mobile manipulator robot to execute.

Manipulator Differential Kinematics Part I: Kinematics, Velocity, and Applications

Jul 05, 2022

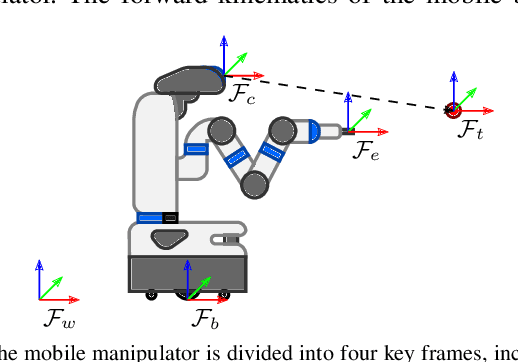

Abstract: Manipulator kinematics is concerned with the motion of each link within a manipulator without considering mass or force. In this article, which is the first in a two-part tutorial, we provide an introduction to modelling manipulator kinematics using the elementary transform sequence (ETS). Then we formulate the first-order differential kinematics, which leads to the manipulator Jacobian, which is the basis for velocity control and inverse kinematics. We describe essential classical techniques which rely on the manipulator Jacobian before exhibiting some contemporary applications. Part II of this tutorial provides a formulation of second and higher-order differential kinematics, introduces the manipulator Hessian, and illustrates advanced techniques, some of which improve the performance of techniques demonstrated in Part I. We have provided Jupyter Notebooks to accompany each section within this tutorial. The Notebooks are written in Python code and use the Robotics Toolbox for Python, and the Swift Simulator to provide examples and implementations of algorithms. While not absolutely essential, for the most engaging and informative experience, we recommend working through the Jupyter Notebooks while reading this article. The Notebooks and setup instructions can be accessed at https://github.com/jhavl/dkt.
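As a small illustration of Jacobian-based velocity control, the sketch below drives a planar two-link arm to a target position by repeatedly solving J(q) q̇ = v. It is a minimal pure-Python example and not taken from the tutorial's Notebooks: the link lengths, gain, time step and the function names `fkine`, `jacobian`, `solve2` and `rrmc` are all assumptions made for illustration.

```python
import math

L1, L2 = 1.0, 1.0  # link lengths (hypothetical values)


def fkine(q):
    """End-effector position (x, y) of a planar two-link arm."""
    x = L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1])
    y = L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1])
    return x, y


def jacobian(q):
    """2x2 Jacobian mapping joint velocities to end-effector velocity."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return ((-L1 * s1 - L2 * s12, -L2 * s12),
            (L1 * c1 + L2 * c12, L2 * c12))


def solve2(J, v):
    """Solve the 2x2 linear system J qd = v by Cramer's rule."""
    (a, b), (c, d) = J
    det = a * d - b * c
    if abs(det) < 1e-9:
        raise ValueError("Jacobian is singular at this configuration")
    return ((d * v[0] - b * v[1]) / det, (a * v[1] - c * v[0]) / det)


def rrmc(q, target, gain=2.0, dt=0.01, steps=1000):
    """Step the joints so the end-effector moves straight towards target."""
    q = list(q)
    for _ in range(steps):
        x, y = fkine(q)
        # Desired end-effector velocity: proportional to the position error
        v = (gain * (target[0] - x), gain * (target[1] - y))
        qd = solve2(jacobian(q), v)
        q[0] += qd[0] * dt
        q[1] += qd[1] * dt
    return q


q_final = rrmc((0.3, 1.0), target=(1.0, 1.0))
```

Calling `fkine(q_final)` afterwards should give a position close to the target. The direct 2x2 solve fails at singular configurations (arm fully stretched or folded), which is why the example raises an error there rather than returning a velocity.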

Manipulator Differential Kinematics Part II: Acceleration and Advanced Applications

Jul 05, 2022

Abstract: This is the second and final article in the tutorial on manipulator differential kinematics. In Part I, we described a method of modelling kinematics using the elementary transform sequence (ETS), before formulating forward kinematics and the manipulator Jacobian. We then described some basic applications of the manipulator Jacobian including resolved-rate motion control (RRMC), inverse kinematics (IK), and some manipulator performance measures. In this article, we formulate the second-order differential kinematics, leading to a definition of the manipulator Hessian. We then describe the differential kinematics' analytical forms, which are essential to dynamics applications. Subsequently, we provide a general formula for higher-order derivatives. The first application we consider is advanced velocity control. In this section, we extend resolved-rate motion control to perform sub-tasks while still achieving the goal before redefining the algorithm as a quadratic program to enable greater flexibility and additional constraints. We then take another look at numerical inverse kinematics with an emphasis on adding constraints. Finally, we analyse how the manipulator Hessian can help to escape singularities. We have provided Jupyter Notebooks to accompany each section within this tutorial. The Notebooks are written in Python code and use the Robotics Toolbox for Python, and the Swift Simulator to provide examples and implementations of algorithms. While not absolutely essential, for the most engaging and informative experience, we recommend working through the Jupyter Notebooks while reading this article. The Notebooks and setup instructions can be accessed at https://github.com/jhavl/dkt.
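One classical way to add a sub-task to resolved-rate motion control on a redundant arm is null-space projection, where a secondary joint velocity is projected so that it cannot disturb the end-effector motion. The sketch below shows this for a planar three-link arm with a 2D position task. It is an assumed illustration rather than the tutorial's own code: the abstract does not name the technique, and the link lengths and the names `jacobian`, `pinv` and `rrmc_with_subtask` are hypothetical.

```python
import math

LINKS = (1.0, 1.0, 1.0)  # link lengths (hypothetical values)


def jacobian(q):
    """2x3 position Jacobian of a planar three-link arm."""
    J = [[0.0] * 3, [0.0] * 3]
    for j in range(3):
        for i in range(j, 3):
            angle = sum(q[: i + 1])  # absolute angle of link i
            J[0][j] -= LINKS[i] * math.sin(angle)
            J[1][j] += LINKS[i] * math.cos(angle)
    return J


def pinv(J):
    """Right pseudoinverse J^T (J J^T)^-1 of a full-rank 2x3 matrix."""
    a = sum(J[0][k] * J[0][k] for k in range(3))
    b = sum(J[0][k] * J[1][k] for k in range(3))
    d = sum(J[1][k] * J[1][k] for k in range(3))
    det = a * d - b * b
    if abs(det) < 1e-12:
        raise ValueError("Jacobian has lost rank")
    inv = ((d / det, -b / det), (-b / det, a / det))
    return [[J[0][k] * inv[0][c] + J[1][k] * inv[1][c] for c in range(2)]
            for k in range(3)]


def rrmc_with_subtask(q, v, qd0):
    """Joint velocity achieving end-effector velocity v, biased towards qd0.

    Computes qd = J+ v + (I - J+ J) qd0, so the qd0 contribution lies in the
    null space of J and leaves the end-effector velocity unchanged.
    """
    J = jacobian(q)
    Jp = pinv(J)
    primary = [Jp[k][0] * v[0] + Jp[k][1] * v[1] for k in range(3)]
    J_qd0 = [sum(J[r][k] * qd0[k] for k in range(3)) for r in range(2)]
    projected = [qd0[k] - (Jp[k][0] * J_qd0[0] + Jp[k][1] * J_qd0[1])
                 for k in range(3)]
    return [primary[k] + projected[k] for k in range(3)]
```

Here `qd0` would typically be the gradient of some secondary objective, such as moving joints away from their limits. Recasting the same problem as a quadratic program, as the abstract describes, additionally allows inequality constraints, which this closed-form projection cannot express.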

Visibility Maximization Controller for Robotic Manipulation

Feb 25, 2022

Abstract: Occlusions caused by a robot's own body are a common problem for closed-loop control methods employed in eye-to-hand camera setups. We propose an optimization-based reactive controller that minimizes self-occlusions while achieving a desired goal pose. The approach allows coordinated control between the robot's base, arm and head by encoding the line-of-sight visibility to the target as a soft constraint along with other task-related constraints, and solving for feasible joint and base velocities. The generalizability of the approach is demonstrated in simulated and real-world experiments, on robots with fixed or mobile bases, with moving or fixed objects, and multiple objects. The experiments revealed a trade-off between occlusion rates and other task metrics. While a planning-based baseline achieved lower occlusion rates than the proposed controller, it came at the expense of highly inefficient paths and a significant drop in task success. On the other hand, the proposed controller is shown to improve visibility of the target object(s) without sacrificing too much task success and efficiency. Videos and code can be found at: rhys-newbury.github.io/projects/vmc/.

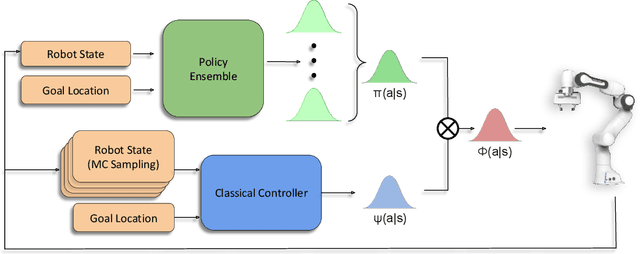

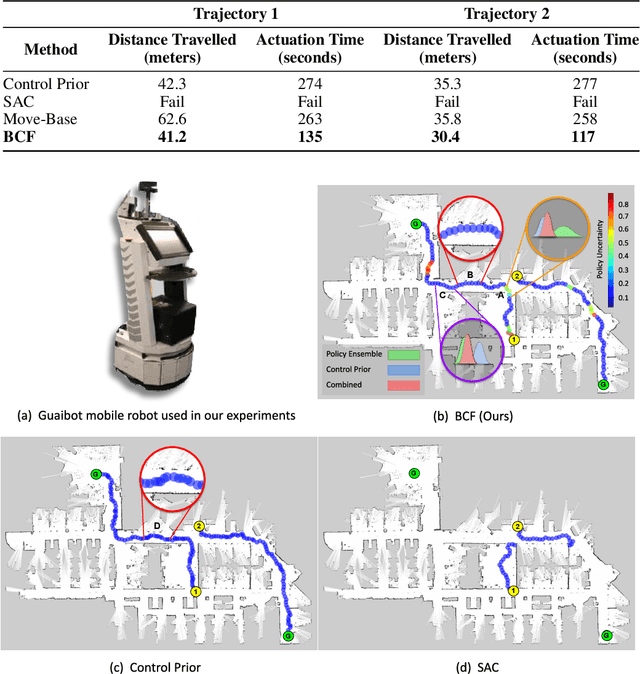

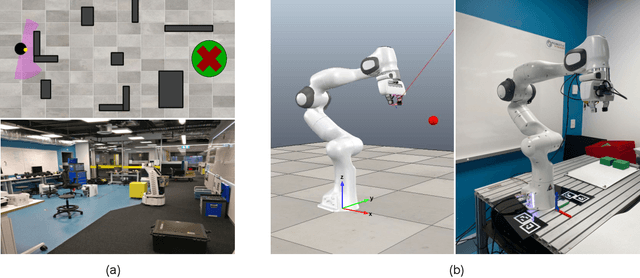

Zero-Shot Uncertainty-Aware Deployment of Simulation Trained Policies on Real-World Robots

Dec 10, 2021

Abstract: While deep reinforcement learning (RL) agents have demonstrated incredible potential in attaining dexterous behaviours for robotics, they tend to make errors when deployed in the real world due to mismatches between the training and execution environments. In contrast, the classical robotics community have developed a range of controllers that can safely operate across most states in the real world given their explicit derivation. These controllers, however, lack the dexterity required for complex tasks given limitations in analytical modelling and approximations. In this paper, we propose Bayesian Controller Fusion (BCF), a novel uncertainty-aware deployment strategy that combines the strengths of deep RL policies and traditional handcrafted controllers. In this framework, we can perform zero-shot sim-to-real transfer, where our uncertainty-based formulation allows the robot to reliably act within out-of-distribution states by leveraging the handcrafted controller while gaining the dexterity of the learned system otherwise. We show promising results on two real-world continuous control tasks, where BCF outperforms both the standalone policy and controller, surpassing what either can achieve independently. A supplementary video demonstrating our system is provided at https://bit.ly/bcf_deploy.
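To give a feel for uncertainty-aware fusion of two action sources, the sketch below combines two one-dimensional Gaussian action estimates by a precision-weighted product. This is a generic illustration and an assumption on our part: the abstract does not state the exact form BCF uses, and the function name `fuse_gaussians` and the example numbers are hypothetical.

```python
def fuse_gaussians(mu_policy, var_policy, mu_ctrl, var_ctrl):
    """Fuse two Gaussian action estimates by multiplying their densities.

    The result is again Gaussian: precisions (inverse variances) add, and
    the fused mean is the precision-weighted average of the two means.
    """
    precision = 1.0 / var_policy + 1.0 / var_ctrl
    var = 1.0 / precision
    mu = var * (mu_policy / var_policy + mu_ctrl / var_ctrl)
    return mu, var


# Equal confidence: the fused action lies midway between the two sources.
mu_equal, var_equal = fuse_gaussians(0.0, 1.0, 1.0, 1.0)

# Policy very uncertain (e.g. an out-of-distribution state): the fused
# action is pulled almost entirely towards the controller's suggestion.
mu_ood, var_ood = fuse_gaussians(0.0, 100.0, 1.0, 0.01)
```

The behaviour matches the intuition in the abstract: when the learned policy reports high uncertainty the handcrafted controller dominates, and when the policy is confident its action carries more weight.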

A Holistic Approach to Reactive Mobile Manipulation

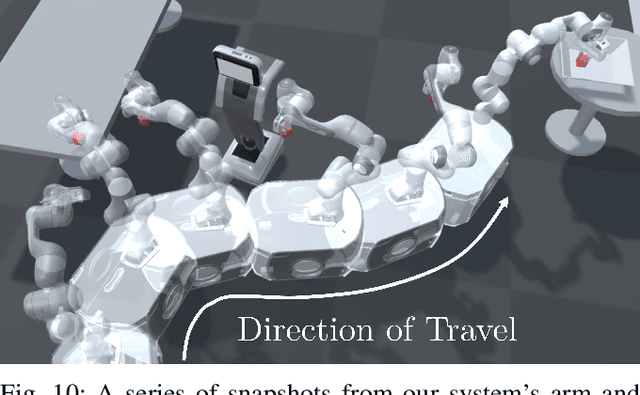

Sep 10, 2021

Abstract: We present the design and implementation of a taskable reactive mobile manipulation system. Contrary to related work, we treat the arm and base degrees of freedom as a holistic structure which greatly improves the speed and fluidity of the resulting motion. At the core of this approach is a robust and reactive motion controller which can achieve a desired end-effector pose while avoiding joint position and velocity limits, and ensuring the mobile manipulator is manoeuvrable throughout the trajectory. This can support sensor-based behaviours such as closed-loop visual grasping. As no planning is involved in our approach, the robot is never stationary thinking about what to do next. We show the versatility of our holistic motion controller by implementing a pick-and-place system using behaviour trees and demonstrate this task on a 9-degree-of-freedom mobile manipulator. Additionally, we provide an open-source implementation of our motion controller for both non-holonomic and omnidirectional mobile manipulators.
