Silvio Traversaro

Istituto Italiano di Tecnologia, Genova, Italy

Pixi: Unified Software Development and Distribution for Robotics and AI

Nov 06, 2025

Abstract: The reproducibility crisis in scientific computing constrains robotics research. Existing studies report that up to 70% of robotics algorithms cannot be reproduced by independent teams, while many others never reach deployment because creating shareable software environments remains prohibitively complex. These challenges stem from fragmented, multi-language toolchains spanning hardware and software, which lead to dependency hell. We present Pixi, a unified package-management framework that addresses these issues by capturing exact dependency states in project-level lockfiles, ensuring bit-for-bit reproducibility across platforms. Its high-performance SAT solver achieves up to 10x faster dependency resolution than comparable tools, and its integration of the conda-forge and PyPI ecosystems removes the need for multiple package managers. Adopted in over 5,300 projects since 2023, Pixi reduces setup times from hours to minutes and lowers technical barriers for researchers worldwide. By enabling scalable, reproducible, and collaborative research infrastructure, Pixi accelerates progress in robotics and AI.
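
The abstract attributes Pixi's speed to a SAT solver and its reproducibility to lockfiles. As a much simpler stand-in, the sketch below resolves a tiny, hypothetical package index by plain backtracking search (not SAT solving, and not Pixi's actual algorithm) and then hashes a canonical serialization of the result, so identical resolutions always yield identical digests. The index contents and the names `resolve` and `lock_digest` are illustrative assumptions, and the output does not follow Pixi's lockfile format.

```python
import hashlib
import json

# Hypothetical index: package -> version -> {dependency: set of allowed versions}
INDEX = {
    "app": {"1.0": {"numpy": {"1.26", "2.0"}, "robotlib": {"0.3"}}},
    "robotlib": {"0.3": {"numpy": {"1.26"}}},
    "numpy": {"2.0": {}, "1.26": {}},
}


def version_key(version):
    # Compare versions numerically, e.g. "1.26" < "2.0"
    return tuple(int(part) for part in version.split("."))


def resolve(pending, pinned=None):
    """Backtracking search: pick one version per package so every constraint holds."""
    pinned = dict(pinned or {})
    if not pending:
        return pinned
    (name, allowed), rest = pending[0], pending[1:]
    if name in pinned:
        # Already chosen: only check this constraint against the earlier choice
        return resolve(rest, pinned) if pinned[name] in allowed else None
    # Try candidate versions newest-first and backtrack on conflicts
    for version in sorted(INDEX[name], key=version_key, reverse=True):
        if version not in allowed:
            continue
        deps = list(INDEX[name][version].items())
        result = resolve(rest + deps, {**pinned, name: version})
        if result is not None:
            return result
    return None


def lock_digest(pinned):
    """Hash a canonical serialization so identical resolutions give identical digests."""
    canonical = json.dumps(pinned, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


lock = resolve([("app", {"1.0"})])
print(lock)  # {'app': '1.0', 'numpy': '1.26', 'robotlib': '0.3'}
print(lock_digest(lock))
```

Here `numpy` 2.0 is tried first but rejected, because `robotlib` 0.3 only accepts 1.26, so the search backtracks. A real solver handles far larger indexes by learning from conflicts instead of re-exploring them.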

Physics-Informed Learning for the Friction Modeling of High-Ratio Harmonic Drives

Oct 16, 2024

Abstract: This paper presents a scalable method for friction identification in robots equipped with electric motors and high-ratio harmonic drives, using Physics-Informed Neural Networks (PINN). The approach eliminates the need for dedicated setups and joint torque sensors by leveraging the robot's intrinsic model and state data. We present a comprehensive pipeline that includes data acquisition, preprocessing, ground truth generation, and model identification. The effectiveness of the PINN-based friction identification is validated through extensive testing on two different joints of the humanoid robot ergoCub, comparing its performance against traditional static friction models such as the Coulomb-viscous and Stribeck-Coulomb-viscous models. Integrating the identified PINN-based friction models into a two-layer torque control architecture enhances real-time friction compensation. The results demonstrate significant improvements in control performance and reductions in energy losses, highlighting the scalability and robustness of the proposed method, including its application to the large number of joints found in humanoid robots.
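
The two static baselines named above have standard closed forms. The sketch below evaluates them for a joint velocity `omega`; the Stribeck term uses one common parameterization whose shape exponent `delta` is an assumption, all parameter values are hypothetical, and the PINN model itself is not reproduced here.

```python
import math


def sign(x):
    # sign(0) = 0, so no friction torque is reported at exactly zero velocity
    return (x > 0) - (x < 0)


def coulomb_viscous(omega, f_c, f_v):
    """tau_f = F_c * sign(omega) + F_v * omega."""
    return f_c * sign(omega) + f_v * omega


def stribeck_coulomb_viscous(omega, f_c, f_s, f_v, omega_s, delta=2.0):
    """tau_f = [F_c + (F_s - F_c) * exp(-(|omega| / omega_s)**delta)] * sign(omega) + F_v * omega."""
    stribeck = (f_s - f_c) * math.exp(-((abs(omega) / omega_s) ** delta))
    return (f_c + stribeck) * sign(omega) + f_v * omega


# Hypothetical parameters: Coulomb 1.0 Nm, static 1.5 Nm, viscous 0.5 Nm s/rad
for w in (0.01, 0.1, 1.0):
    print(w, coulomb_viscous(w, 1.0, 0.5), stribeck_coulomb_viscous(w, 1.0, 1.5, 0.5, 0.1))
```

Near zero velocity the Stribeck model approaches the static level `f_s`; at high velocity it collapses to the Coulomb-viscous model, which is why the two baselines differ mainly in the low-speed regime.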

Online DNN-driven Nonlinear MPC for Stylistic Humanoid Robot Walking with Step Adjustment

Oct 10, 2024

Abstract: This paper presents a three-layered architecture that enables stylistic locomotion with online contact location adjustment. Our method combines an autoregressive Deep Neural Network (DNN), acting as a trajectory generation layer, with model-based trajectory adjustment and trajectory control layers. The DNN produces centroidal and postural references that serve as an initial guess and regularizer for the other layers. Because the DNN is trained on human motion capture data, the resulting robot motion exhibits locomotion patterns resembling a human walking style. The trajectory adjustment layer uses nonlinear optimization to ensure dynamically feasible center of mass (CoM) motion while handling step adjustments. We compare two implementations of the trajectory adjustment layer: a receding horizon planner (RHP) and a model predictive controller (MPC). To enhance MPC performance, we introduce a Kalman filter to reduce measurement noise; its parameters are tuned automatically with a Genetic Algorithm. Experimental results on the ergoCub humanoid robot demonstrate the system's ability to prevent falls, replicate human walking styles, and withstand disturbances of up to 68 N.

Website: https://sites.google.com/view/dnn-mpc-walking
YouTube video: https://www.youtube.com/watch?v=x3tzEfxO-xQ
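
The abstract does not state the filter's model. As a minimal sketch, assuming a scalar signal with a random-walk process model, a Kalman filter alternates a prediction step with a correction whose gain depends on the process noise `q` and the measurement noise `r`. Treating `q` and `r` as the parameters a genetic algorithm would tune is an assumption made here for illustration, not the paper's stated parameterization.

```python
import random


def kalman_1d(measurements, q, r, x0=0.0, p0=1.0):
    """Filter scalar measurements with a random-walk process model."""
    x, p = x0, p0
    estimates = []
    for z in measurements:
        # Predict: the state is assumed constant, its variance grows by q
        p = p + q
        # Update: blend prediction and measurement using the Kalman gain
        k = p / (p + r)
        x = x + k * (z - x)
        p = (1.0 - k) * p
        estimates.append(x)
    return estimates


# Hypothetical noisy measurements of a constant signal (std 0.2, so r = 0.04)
rng = random.Random(0)
truth = 1.0
noisy = [truth + rng.gauss(0.0, 0.2) for _ in range(200)]
smoothed = kalman_1d(noisy, q=1e-4, r=0.04)
mse_raw = sum((z - truth) ** 2 for z in noisy[100:]) / 100
mse_filtered = sum((x - truth) ** 2 for x in smoothed[100:]) / 100
print(mse_raw, mse_filtered)
```

A small `q` relative to `r` trusts the model more and smooths harder at the cost of lag; a tuner such as a genetic algorithm would search this trade-off against a closed-loop performance metric.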

ROS2WASM: Bringing the Robot Operating System to the Web

Sep 16, 2024

Abstract: The Robot Operating System (ROS) has become the de facto standard middleware in robotics, widely adopted across domains ranging from education to industrial applications. The RoboStack distribution has extended ROS's accessibility by facilitating installation across all major operating systems and architectures, integrating seamlessly with scientific tools such as PyTorch and Open3D. This paper presents ROS2WASM, a novel integration of RoboStack with WebAssembly, enabling the execution of ROS 2 and its associated software directly within web browsers, without requiring local installations. This approach significantly enhances reproducibility and shareability of research, lowers barriers to robotics education, and leverages WebAssembly's robust security framework to protect against malicious code. We detail our methodology for cross-compiling ROS 2 packages into WebAssembly, the development of a specialized middleware for ROS 2 communication within browsers, and the implementation of a web platform available at www.ros2wasm.dev that allows users to interact with ROS 2 environments. Additionally, we extend support to the Robotics Toolbox for Python and adapt its Swift simulator for browser compatibility. Our work paves the way for unprecedented accessibility in robotics, offering scalable, secure, and reproducible environments that have the potential to transform educational and research paradigms.

XBG: End-to-end Imitation Learning for Autonomous Behaviour in Human-Robot Interaction and Collaboration

Jun 22, 2024

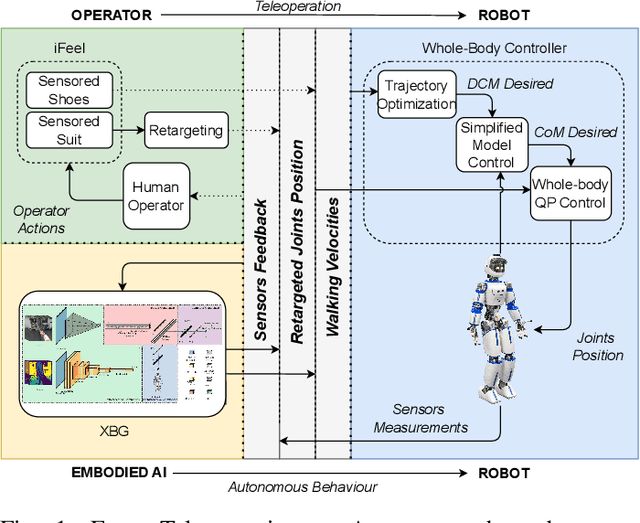

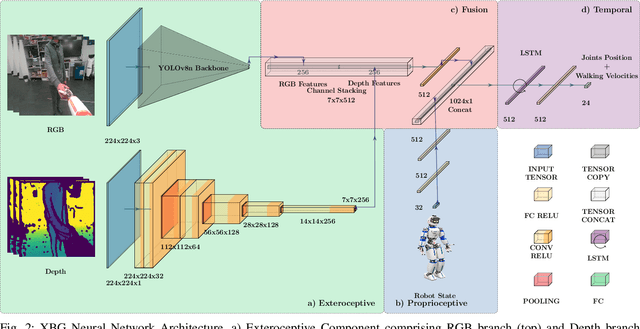

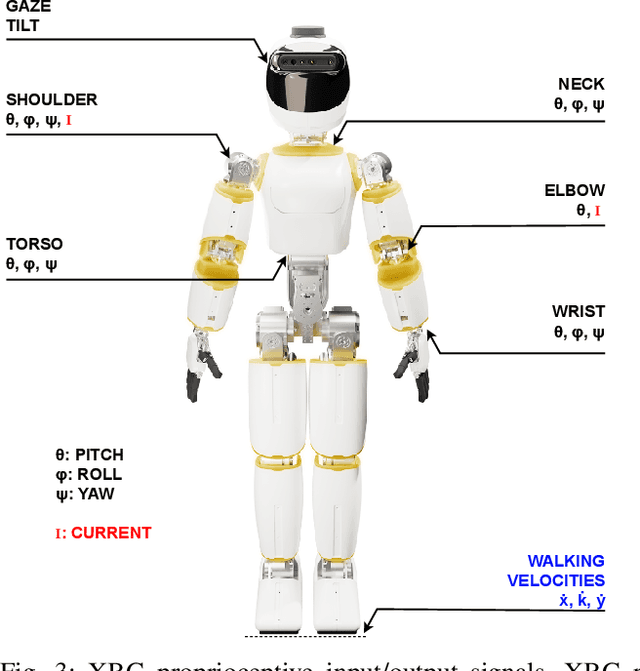

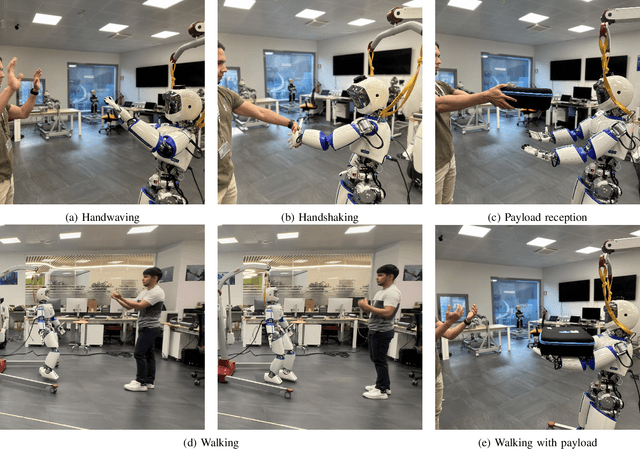

Abstract: This paper presents XBG (eXteroceptive Behaviour Generation), a multimodal end-to-end Imitation Learning (IL) system for a whole-body autonomous humanoid robot used in real-world Human-Robot Interaction (HRI) scenarios. The main contribution of this paper is an architecture for learning HRI behaviours using a data-driven approach. Through teleoperation, a diverse dataset is collected, comprising demonstrations across multiple HRI scenarios, including handshaking, handwaving, payload reception, walking, and walking with a payload. After synchronizing, filtering, and transforming the data, several Deep Neural Network (DNN) models are trained. The final system integrates multiple modalities, combining exteroceptive and proprioceptive sources of information to give the robot an understanding of its environment and of its own actions. The robot takes sequences of images (RGB and depth) and joint state information during the interactions and reacts accordingly, demonstrating the learned behaviours. By fusing multimodal signals over time, we encode new autonomous capabilities into the robotic platform, allowing it to understand context changes over time. The models are deployed on ergoCub, a real-world humanoid robot, and their performance is measured by the success rate of the robot's behaviour in the scenarios above.
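
The abstract says multimodal signals are fused over time but not how. One simple way to present a sequence to a network is a fixed-length sliding window over per-step feature vectors. The sketch below illustrates only that buffering step; the class name, window length, and the short feature vectors standing in for RGB, depth, and joint-state inputs are hypothetical, and this is not the XBG architecture.

```python
from collections import deque


class TemporalFusionBuffer:
    """Keeps the last `window` multimodal frames and flattens them into one input vector."""

    def __init__(self, window):
        self.window = window
        self.frames = deque(maxlen=window)  # oldest frames are dropped automatically

    def push(self, rgb_features, depth_features, joint_state):
        # Concatenate exteroceptive (RGB, depth) and proprioceptive (joints) data for one step
        self.frames.append(list(rgb_features) + list(depth_features) + list(joint_state))

    def ready(self):
        return len(self.frames) == self.window

    def model_input(self):
        if not self.ready():
            raise ValueError("not enough frames buffered yet")
        # Oldest-to-newest order, flattened into a single vector
        return [value for frame in self.frames for value in frame]


buffer = TemporalFusionBuffer(window=2)
buffer.push([0.1], [0.2], [0.3])
buffer.push([0.4], [0.5], [0.6])
buffer.push([0.7], [0.8], [0.9])
print(buffer.model_input())  # [0.4, 0.5, 0.6, 0.7, 0.8, 0.9]
```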

Codesign of Humanoid Robots for Ergonomy Collaboration with Multiple Humans via Genetic Algorithms and Nonlinear Optimization

Dec 12, 2023

Abstract: Ergonomics is a key factor to consider when designing control architectures for effective physical collaboration between humans and humanoid robots. However, ergonomic indexes are often overlooked in the robot design phase, which leads to suboptimal performance in physical human-robot interaction tasks. This paper proposes a novel methodology for optimizing the design of humanoid robots with respect to ergonomic indicators associated with the interaction of multiple agents. Our approach leverages a dynamic and kinematic parameterization of the robot links and motor specifications to search for optimal robot designs using a bilevel optimization approach. Specifically, a genetic algorithm first generates robot designs by selecting the link and motor characteristics. Then, we use nonlinear optimization to evaluate interaction ergonomics indexes during collaborative payload lifting with different humans and weights. To assess the effectiveness of our approach, we compare the optimal design obtained using bilevel optimization against the design obtained using nonlinear optimization. Our results show that the proposed approach significantly improves ergonomics in terms of energy expenditure, calculated in two reference scenarios involving static and dynamic robot motions. We plan to apply our methodology to drive the design of the ergoCub2 robot, a humanoid intended for optimal physical collaboration with humans in diverse environments.
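
The bilevel structure can be sketched with a toy problem: an outer genetic algorithm proposes designs, and each design is scored by the optimum of an inner continuous optimization. Everything below is a hypothetical stand-in: a single scalar design variable replaces link and motor characteristics, the inner cost J(q) = (q - design)^2 + (q - 1)^2 replaces the ergonomics indexes, and plain gradient descent replaces the paper's nonlinear optimization. Its optimal inner value is (design - 1)^2 / 2, so the best design is 1.

```python
import random


def inner_cost(design, iters=200, step=0.1):
    """Inner level: minimize the toy cost J(q) = (q - design)^2 + (q - 1)^2 over q."""
    q = 0.0
    for _ in range(iters):
        grad = 2.0 * (q - design) + 2.0 * (q - 1.0)
        q -= step * grad  # gradient descent converges to q = (design + 1) / 2
    return (q - design) ** 2 + (q - 1.0) ** 2


def genetic_search(pop_size=20, generations=40, bounds=(0.0, 3.0), seed=0):
    """Outer level: evolve scalar designs to minimize the optimal inner cost."""
    rng = random.Random(seed)
    lo, hi = bounds
    population = [rng.uniform(lo, hi) for _ in range(pop_size)]
    for _ in range(generations):
        # Rank designs by the cost returned by the inner optimization
        population.sort(key=inner_cost)
        parents = population[: pop_size // 2]  # elitist selection keeps the best half
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            child = 0.5 * (a + b) + rng.gauss(0.0, 0.1)  # averaging crossover + Gaussian mutation
            children.append(min(hi, max(lo, child)))  # keep designs within bounds
        population = parents + children
    return min(population, key=inner_cost)


best = genetic_search()
print(best, inner_cost(best))
```

The genetic algorithm only needs a score per design, which is what makes it a natural outer loop when the design space mixes discrete choices (such as motor models) with continuous ones.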

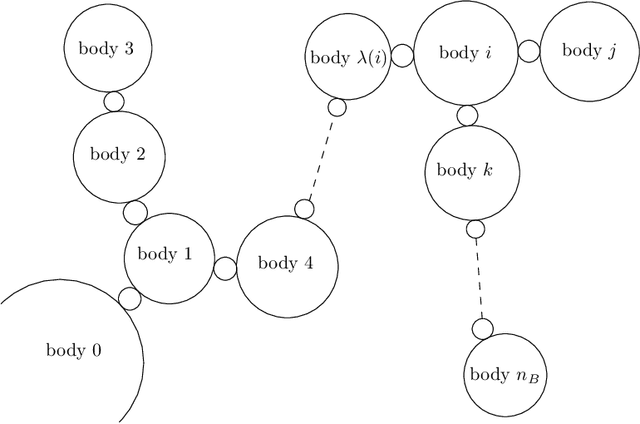

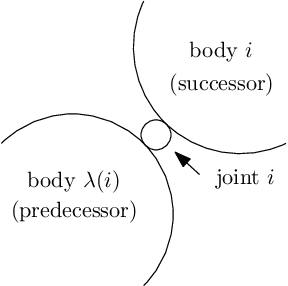

A Flexible MATLAB/Simulink Simulator for Robotic Floating-base Systems in Contact with the Ground

Nov 17, 2022

Abstract: Physics simulators are widely used in robotics, from mechanical design to dynamic simulation and controller design. This paper presents an open-source MATLAB/Simulink simulator for rigid-body articulated systems, including manipulators and floating-base robots. Thanks to MATLAB/Simulink features such as MATLAB System classes and Simulink Function blocks, the simulator combines a programmatic and a block-based approach, resulting in a flexible design in which different parts, including the physics engine, the robot-ground interaction model, and the state evolution algorithm, are easily accessible and editable. Moreover, through the use of Simulink dynamic mask blocks, the simulation framework supports robot models with open-chain and closed-chain kinematics and any desired number of links interacting with the ground. The simulator can also integrate second-order actuator dynamics. Furthermore, it offers a one-line installation and an easy-to-use Simulink interface.
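
To make concrete what a swappable robot-ground interaction model and state evolution algorithm look like, the sketch below drops a point mass onto compliant ground modeled as a unilateral spring-damper and integrates it with semi-implicit Euler. It is written in Python rather than MATLAB, and the contact law, the gains `k` and `d`, and the step size are assumptions, not the simulator's physics engine or defaults.

```python
def contact_force(z, v, k=2.0e4, d=300.0):
    """Unilateral spring-damper ground: active only while penetrating (z < 0), never pulling."""
    if z >= 0.0:
        return 0.0
    return max(0.0, -k * z - d * v)


def simulate_drop(h0=0.5, mass=1.0, g=9.81, dt=1e-4, t_end=2.0):
    """Drop a point mass from height h0; return its final height and vertical velocity."""
    z, v = h0, 0.0
    for _ in range(int(round(t_end / dt))):
        a = contact_force(z, v) / mass - g
        # Semi-implicit Euler: update velocity first, then position with the new velocity
        v += a * dt
        z += v * dt
    return z, v


z_final, v_final = simulate_drop()
print(z_final, v_final)  # settles at the static penetration -m*g/k
```

Keeping `contact_force` and the integration loop separate mirrors the idea of editable components: either can be replaced (for example with a different contact law or integrator) without touching the other.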

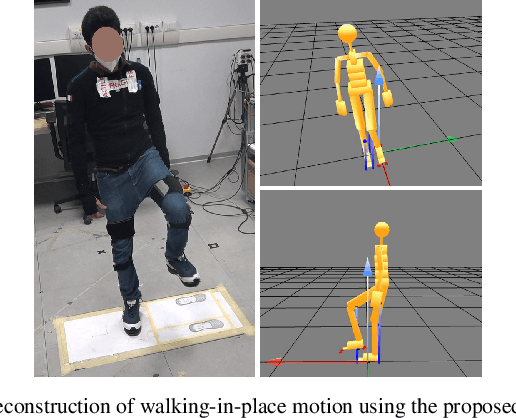

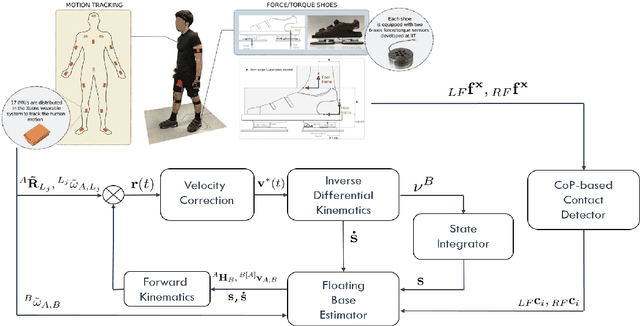

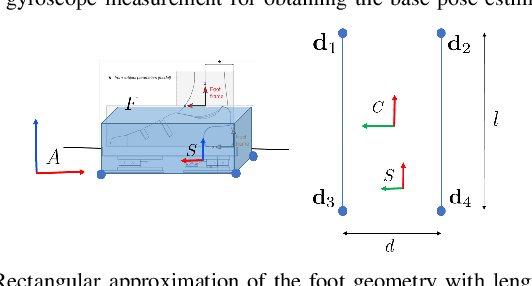

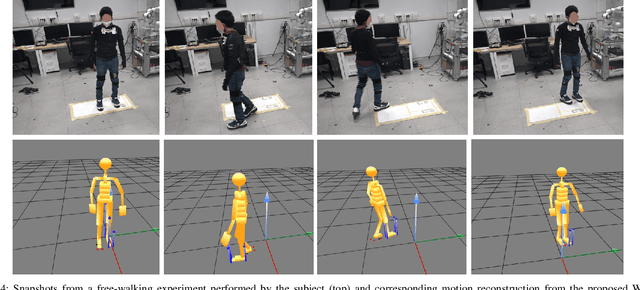

Whole-Body Human Kinematics Estimation using Dynamical Inverse Kinematics and Contact-Aided Lie Group Kalman Filter

May 16, 2022

Abstract: Full-body motion estimation of a human through wearable sensing technologies is challenging in the absence of position sensors. This paper contributes to the development of a model-based whole-body kinematics estimation algorithm using wearable distributed inertial and force-torque sensing. This is done by extending an existing dynamical optimization-based Inverse Kinematics (IK) approach for joint state estimation with two components in cascade: a center of pressure-based contact detector and a contact-aided Kalman filter on Lie groups for floating base pose estimation. The proposed method is tested in an experimental scenario where a human wearing a sensorized suit and shoes performs walking motions, and it is shown to provide a reliable reconstruction of whole-body human motion.
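
A center of pressure-based contact detector can be sketched from a single foot wrench. Assuming force and torque are expressed in a frame lying on the sole plane with its z axis normal to it, a normal load f_z applied at (p_x, p_y) produces tau_x = p_y f_z and tau_y = -p_x f_z, so the CoP is p_x = -tau_y / f_z and p_y = tau_x / f_z. The load threshold, the rectangular sole dimensions, and the function names are hypothetical; the paper's detector may use different criteria.

```python
def center_of_pressure(force, torque):
    """CoP on the sole plane from a wrench expressed at a point on that plane (z normal)."""
    _, _, fz = force
    tx, ty, _ = torque
    return (-ty / fz, tx / fz)


def is_in_contact(force, torque, fz_min=20.0, half_length=0.12, half_width=0.05):
    """Hypothetical rule: enough normal load and the CoP inside an assumed rectangular sole."""
    fz = force[2]
    if fz < fz_min:  # too little normal load to trust the contact
        return False
    px, py = center_of_pressure(force, torque)
    return abs(px) <= half_length and abs(py) <= half_width


# A 400 N load applied at (0.05, -0.02) m gives torque (p_y*f_z, -p_x*f_z, 0) = (-8, -20, 0)
print(center_of_pressure((0.0, 0.0, 400.0), (-8.0, -20.0, 0.0)))
print(is_in_contact((0.0, 0.0, 400.0), (-8.0, -20.0, 0.0)))  # True
```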

An Experimental Comparison of Floating Base Estimators for Humanoid Robots with Flat Feet

May 16, 2022

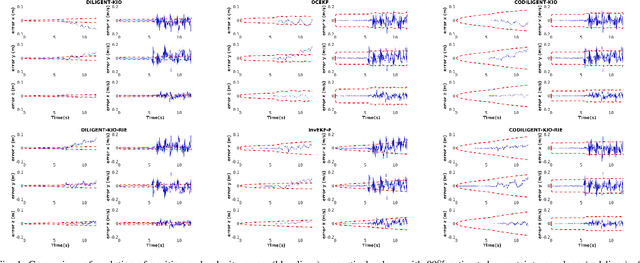

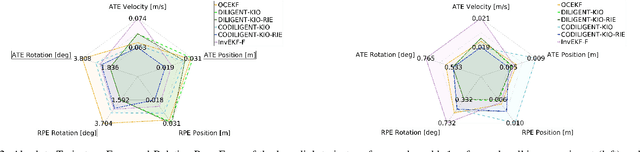

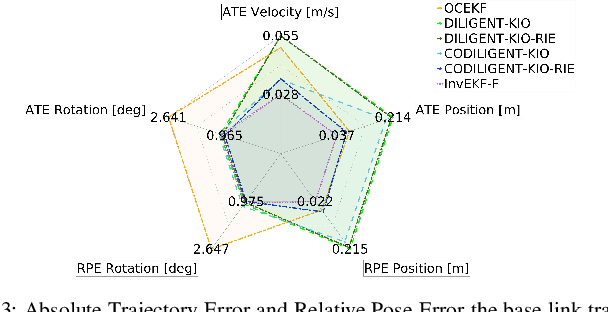

Abstract: Extended Kalman filtering is a common approach to floating base estimation for humanoid robots. These filters rely on measurements from an Inertial Measurement Unit (IMU) and relative forward kinematics to estimate the base position-and-orientation and its linear velocity, along with the augmented states of feet position-and-orientation, hence their name, flat-foot filters. However, the availability of only partial measurements often raises the question of consistency in the filter design. In this paper, we perform an experimental comparison of state-of-the-art flat-foot filters that differ in their choice of representation for the state, observation, matrix Lie group error, and system dynamics, evaluating them for filter consistency and trajectory errors. The comparison is performed over simulated and real-world experiments conducted on the iCub humanoid platform.
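
Filters on matrix Lie groups keep the orientation as a rotation matrix and express errors through the exponential and logarithm maps of SO(3). A minimal NumPy sketch of these maps follows, together with one common error convention, the right-invariant error R_est R_true^T mapped to a rotation vector. Which convention each compared filter uses is exactly the kind of design choice the paper evaluates, so this particular choice is an illustrative assumption.

```python
import numpy as np


def hat(w):
    """Skew-symmetric matrix such that hat(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(w):
    """Rodrigues' formula: exponential map from a rotation vector to SO(3)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3) + hat(w)  # first-order approximation near the identity
    K = hat(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K


def log_so3(R):
    """Logarithm map from SO(3) to a rotation vector (valid for angles below pi)."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    if theta < 1e-12:
        return np.zeros(3)
    # vee(R - R^T) equals 2 sin(theta) times the unit rotation axis
    w = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    return theta / (2.0 * np.sin(theta)) * w


def right_invariant_error(R_est, R_true):
    """Orientation error R_est @ R_true.T expressed as a rotation vector."""
    return log_so3(R_est @ R_true.T)


R_true = exp_so3(np.array([0.5, 0.4, -0.2]))
R_est = exp_so3(np.array([0.1, -0.2, 0.3])) @ R_true
print(right_invariant_error(R_est, R_true))  # approximately [0.1, -0.2, 0.3]
```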

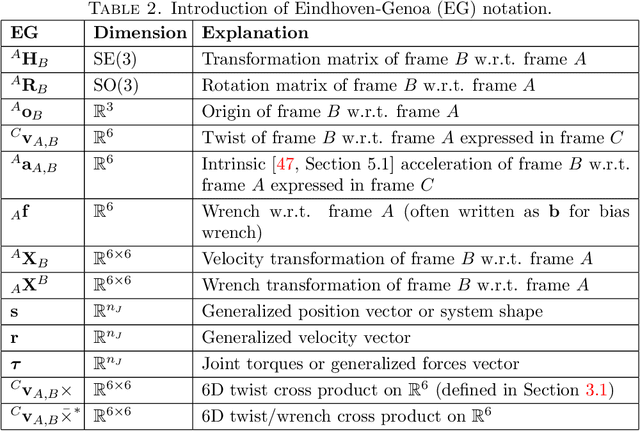

Efficient Geometric Linearization of Moving-Base Rigid Robot Dynamics

Apr 11, 2022

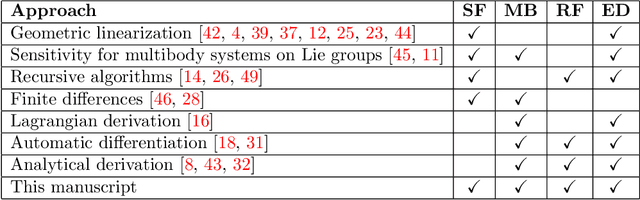

Abstract: The linearization of the equations of motion of a robotic system about a given state-input trajectory, including a controlled equilibrium state, is a valuable tool for model-based planning, closed-loop control, gain tuning, and state estimation. Contrary to the case of fixed-base manipulators with prismatic or rotary joints, the state space of moving-base robotic systems such as humanoids, quadruped robots, or aerial manipulators cannot be globally parametrized by a finite number of independent coordinates. This impossibility is a direct consequence of the fact that the state of these systems includes the system's global orientation, formally described as an element of the special orthogonal group SO(3). As a consequence, the linearization of the equations of motion for these systems is, in practice, typically obtained by locally parametrizing the system's attitude by means of, e.g., Euler or Cardan angles. This, however, has the drawback of introducing artificial parametrization singularities and extra derivative computations. In this contribution, we show that it is possible to define a notion of linearization that does not require a local parametrization of the system's orientation, obtaining a mathematically elegant, recursive, and singularity-free linearization for moving-base robot systems. Recursiveness, in particular, is obtained by proposing a nontrivial modification of existing recursive algorithms to allow for computations of the geometric derivatives of the inverse dynamics and of the inverse of the mass matrix of the robotic system. The correctness of the proposed algorithm is validated by means of a numerical comparison with results obtained via geometric finite differences.
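
The idea of a parametrization-free derivative, and of validating it with geometric finite differences, can be illustrated on a much simpler map than inverse dynamics. Assuming right (body-frame) perturbations R exp(hat(w)), the derivative of f(R) = R p at w = 0 is R hat(w) p = -R hat(p) w, which involves no Euler or Cardan angles. The sketch compares this analytic Jacobian with central differences taken along the group; the function names and test values are illustrative, and this is not the paper's recursive algorithm.

```python
import numpy as np


def hat(w):
    """Skew-symmetric matrix such that hat(w) @ v == np.cross(w, v)."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def exp_so3(w):
    """Rodrigues' formula: exponential map from a rotation vector to SO(3)."""
    theta = np.linalg.norm(w)
    if theta < 1e-12:
        return np.eye(3) + hat(w)
    K = hat(w / theta)
    return np.eye(3) + np.sin(theta) * K + (1.0 - np.cos(theta)) * K @ K


def analytic_jacobian(R, p):
    # d/dw [R exp(hat(w)) p] at w = 0 equals R hat(w) p = -R hat(p) w
    return -R @ hat(p)


def geometric_finite_difference(f, R, eps=1e-6):
    """Central differences along right perturbations R exp(hat(+-eps * e_i))."""
    columns = []
    for i in range(3):
        e = np.zeros(3)
        e[i] = eps
        columns.append((f(R @ exp_so3(e)) - f(R @ exp_so3(-e))) / (2.0 * eps))
    return np.column_stack(columns)


R = exp_so3(np.array([0.3, -0.5, 0.8]))
p = np.array([0.2, 0.1, -0.4])
J_fd = geometric_finite_difference(lambda X: X @ p, R)
print(np.max(np.abs(J_fd - analytic_jacobian(R, p))))  # small, on the order of roundoff
```

Because the perturbation is applied through the exponential map, the comparison is valid at any orientation, including those where Euler or Cardan angles would be singular.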
