Ines Sorrentino

Physics-Informed Learning for the Friction Modeling of High-Ratio Harmonic Drives

Oct 16, 2024

Abstract: This paper presents a scalable method for friction identification in robots equipped with electric motors and high-ratio harmonic drives, utilizing Physics-Informed Neural Networks (PINN). This approach eliminates the need for dedicated setups and joint torque sensors by leveraging the robot's intrinsic model and state data. We present a comprehensive pipeline that includes data acquisition, preprocessing, ground truth generation, and model identification. The effectiveness of the PINN-based friction identification is validated through extensive testing on two different joints of the humanoid robot ergoCub, comparing its performance against traditional static friction models such as the Coulomb-viscous and Stribeck-Coulomb-viscous models. Integrating the identified PINN-based friction models into a two-layer torque control architecture enhances real-time friction compensation. The results demonstrate significant improvements in control performance and reductions in energy losses, highlighting the scalability and robustness of the proposed method, including for applications with a large number of joints, as in humanoid robots.
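For readers unfamiliar with the two baseline models named in the abstract, the following is a minimal sketch of their standard textbook forms. It is not the paper's implementation: the function names, parameter names (`f_c`, `f_s`, `v_s`, `f_v`, `delta`), and the default exponent are assumptions chosen for illustration.

```python
import math


def _sign(velocity):
    """Return -1.0, 0.0 or 1.0 depending on the direction of motion."""
    if velocity > 0.0:
        return 1.0
    if velocity < 0.0:
        return -1.0
    return 0.0


def coulomb_viscous(velocity, f_c, f_v):
    """Coulomb-viscous friction: a constant term opposing motion plus a linear viscous term.

    f_c: Coulomb friction level (assumed name), f_v: viscous coefficient (assumed name).
    """
    return f_c * _sign(velocity) + f_v * velocity


def stribeck_coulomb_viscous(velocity, f_c, f_s, v_s, f_v, delta=2.0):
    """Stribeck-Coulomb-viscous friction in its common exponential form.

    Near zero velocity the level approaches the static friction f_s; it decays
    towards the Coulomb level f_c as |velocity| grows past the Stribeck velocity v_s.
    The exponent delta is an illustrative default, not a value from the paper.
    """
    decay = math.exp(-(abs(velocity / v_s) ** delta))
    level = f_c + (f_s - f_c) * decay
    return level * _sign(velocity) + f_v * velocity
```

Both functions are static maps from joint velocity to friction torque, which is the class of model the abstract compares the PINN against.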

Online DNN-driven Nonlinear MPC for Stylistic Humanoid Robot Walking with Step Adjustment

Oct 10, 2024

Abstract: This paper presents a three-layered architecture that enables stylistic locomotion with online contact location adjustment. Our method combines an autoregressive Deep Neural Network (DNN) acting as a trajectory generation layer with model-based trajectory adjustment and trajectory control layers. The DNN produces centroidal and postural references that serve as an initial guess and regularizer for the other layers. Because the DNN is trained on human motion capture data, the resulting robot motion exhibits locomotion patterns that resemble a human walking style. The trajectory adjustment layer utilizes non-linear optimization to ensure dynamically feasible center of mass (CoM) motion while addressing step adjustments. We compare two implementations of the trajectory adjustment layer: one as a receding horizon planner (RHP) and the other as a model predictive controller (MPC). To enhance MPC performance, we introduce a Kalman filter to reduce measurement noise. The filter parameters are automatically tuned with a Genetic Algorithm. Experimental results on the ergoCub humanoid robot demonstrate the system's ability to prevent falls, replicate human walking styles, and withstand disturbances up to 68 N. Website: https://sites.google.com/view/dnn-mpc-walking YouTube video: https://www.youtube.com/watch?v=x3tzEfxO-xQ

UKF-Based Sensor Fusion for Joint-Torque Sensorless Humanoid Robots

Feb 28, 2024

Abstract: This paper proposes a novel sensor fusion method based on Unscented Kalman Filtering for the online estimation of joint torques of humanoid robots without joint-torque sensors. At the feature level, the proposed approach considers multimodal measurements (e.g., currents and accelerations) and non-directly measurable effects, such as external contacts, thus leading to joint torques readily usable in control architectures for human-robot interaction. The proposed sensor fusion can also integrate distributed, non-collocated force/torque sensors, making it a flexible framework with respect to the underlying robot sensor suite. To validate the approach, we show how the proposed sensor fusion can be integrated into a two-level torque control architecture aiming at task-space torque control. The performance of the proposed approach is shown through extensive tests on the new humanoid robot ergoCub, currently being developed at Istituto Italiano di Tecnologia. We also compare our strategy with the existing state-of-the-art approach based on the recursive Newton-Euler algorithm. Results demonstrate that our method achieves low root mean square errors in torque tracking, ranging from 0.05 Nm to 2.5 Nm, even in the presence of external contacts.

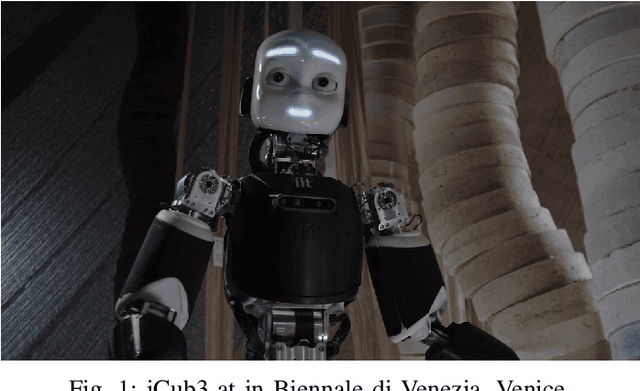

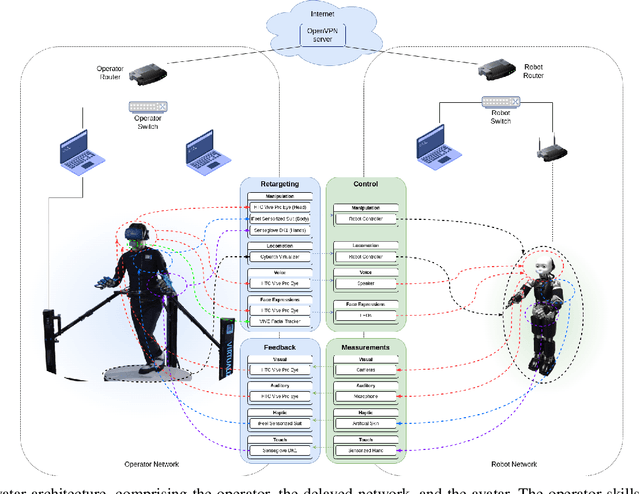

iCub3 Avatar System

Mar 14, 2022

Abstract: We present an avatar system that enables a human operator to visit a remote location via iCub3, a new humanoid robot developed at the Italian Institute of Technology (IIT), paving the way for the next generation of the iCub platforms. On the one hand, we present the humanoid iCub3 that plays the role of the robotic avatar. Particular attention is paid to the differences between iCub3 and the classical iCub humanoid robot. On the other hand, we present the set of technologies of the avatar system at the operator side. They are mainly composed of iFeel, namely, IIT lightweight non-invasive wearable devices for motion tracking and haptic feedback, and of non-IIT technologies designed for virtual reality ecosystems. Finally, we show the effectiveness of the avatar system by describing a demonstration involving real-time teleoperation of the iCub3. The robot is located at the Biennale di Venezia in Venice, while the human operator is more than 290 km away at IIT in Genoa. Using a standard fiber optic internet connection, the avatar system transports the operator's locomotion, manipulation, voice, and facial expressions to the iCub3 with visual, auditory, haptic and touch feedback.

Online Non-linear Centroidal MPC for Humanoid Robot Locomotion with Step Adjustment

Mar 10, 2022

Abstract: This paper presents a Non-Linear Model Predictive Controller for humanoid robot locomotion with online step adjustment capabilities. The proposed controller considers the Centroidal Dynamics of the system to compute the desired contact forces and torques and contact locations. Unlike bipedal walking architectures based on simplified models, the presented approach considers the reduced centroidal model, thus allowing the robot to perform highly dynamic movements while keeping the control problem tractable online. We show that the proposed controller can automatically adjust the contact location both in single and double support phases. The overall approach is then tested with a simulation of one-leg and two-leg systems performing jumping and running tasks, respectively. We finally validate the proposed controller on the position-controlled humanoid robot iCub. Results show that the proposed strategy prevents the robot from falling while walking and being pushed with external forces up to 40 N for 1 second applied at the robot arm.

* Paper accepted in ICRA 2022

In Situ Translational Hand-Eye Calibration of Laser Profile Sensors using Arbitrary Objects

Mar 22, 2021

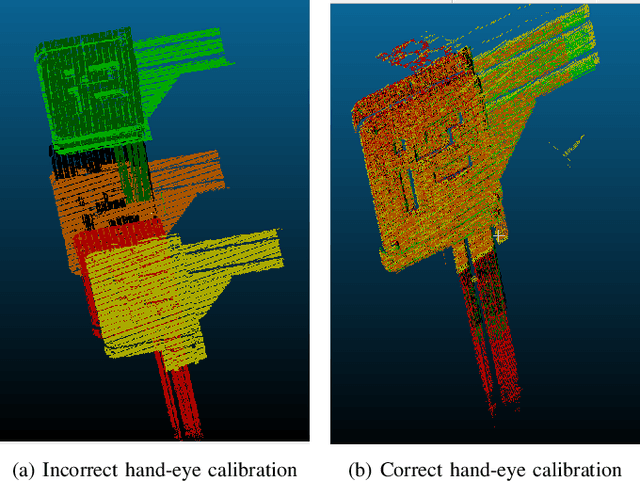

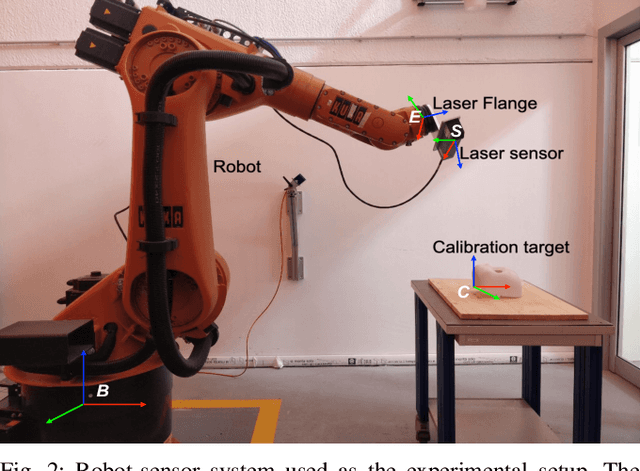

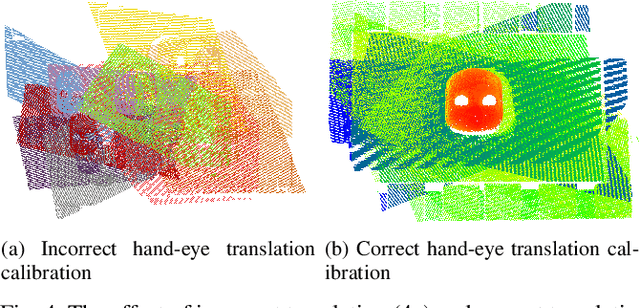

Abstract: Hand-eye calibration of laser profile sensors is the process of extracting the homogeneous transformation between the laser profile sensor frame and the end-effector frame of a robot in order to express the data extracted by the sensor in the robot's global coordinate system. For laser profile scanners this is a challenging procedure, as they provide data only in two dimensions and state-of-the-art calibration procedures require the use of specialised calibration targets. This paper presents a novel method to extract the translation part of the hand-eye calibration matrix, with the rotation part known a priori, in a target-agnostic way. Our methodology is applicable to any 2D image or 3D object as a calibration target and can also be performed in situ in the final application. The method is experimentally validated on a real robot-sensor setup with 2D and 3D targets.
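To make the role of the hand-eye matrix concrete, here is a minimal sketch of how a sensor measurement is mapped into the robot's global frame once the calibration is known. It only illustrates the chain of homogeneous transformations described in the abstract, not the paper's calibration method. The helper names, the frame names, and the convention that the profile lies in the sensor's x-z plane are all assumptions.

```python
def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    return [list(rotation[i]) + [translation[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def compose(a, b):
    """Matrix product a @ b of two 4x4 homogeneous transforms."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def transform_point(transform, point):
    """Apply a 4x4 homogeneous transform to a 3D point."""
    hom = [point[0], point[1], point[2], 1.0]
    return tuple(sum(transform[i][k] * hom[k] for k in range(4)) for i in range(3))


def profile_point_to_base(base_T_ee, ee_T_sensor, x, z):
    """Express a 2D profile measurement (x, z) in the robot base frame.

    base_T_ee comes from the robot's forward kinematics; ee_T_sensor is the
    hand-eye calibration matrix. Placing the profile in the sensor's x-z plane
    (y = 0) is an assumed convention for this sketch.
    """
    return transform_point(compose(base_T_ee, ee_T_sensor), (x, 0.0, z))


# Hypothetical values: end effector rotated 90 degrees about z and shifted 1 m along x;
# sensor mounted 0.1 m along the end-effector z axis with no extra rotation.
ROT_Z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
base_T_ee = make_transform(ROT_Z_90, (1.0, 0.0, 0.0))
ee_T_sensor = make_transform(IDENTITY, (0.0, 0.0, 0.1))
point_in_base = profile_point_to_base(base_T_ee, ee_T_sensor, 0.2, 0.3)
```

In this picture, the method in the abstract estimates only the translation column of `ee_T_sensor`, with its rotation block taken as given.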
