Riccardo Grieco

Whole-Body Human Kinematics Estimation using Dynamical Inverse Kinematics and Contact-Aided Lie Group Kalman Filter

May 16, 2022

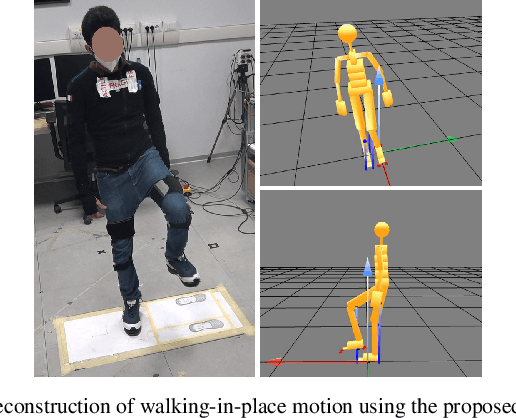

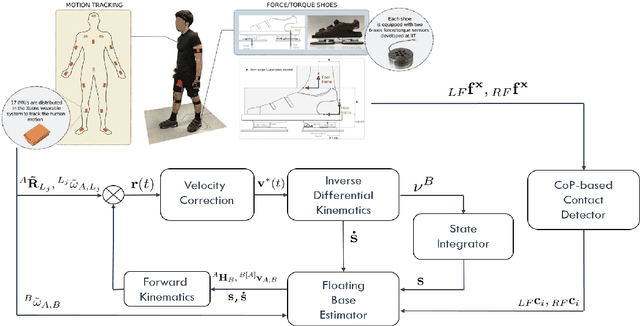

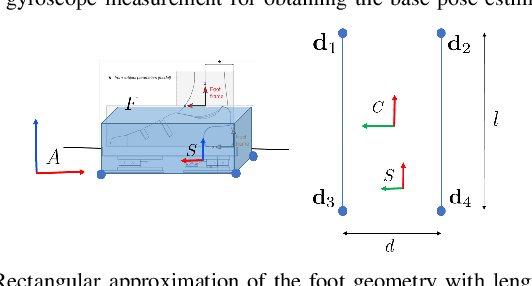

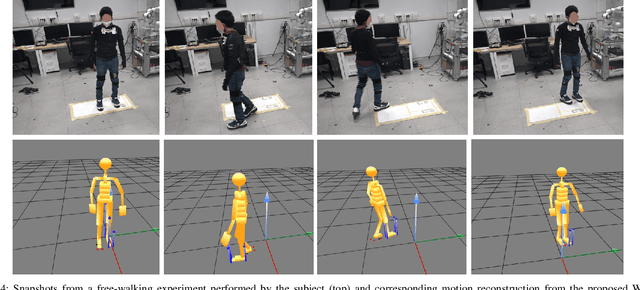

Abstract: Full-body motion estimation of a human through wearable sensing technologies is challenging in the absence of position sensors. This paper contributes to the development of a model-based whole-body kinematics estimation algorithm using wearable distributed inertial and force-torque sensing. This is done by extending the existing dynamical optimization-based Inverse Kinematics (IK) approach for joint state estimation, cascading it with a center of pressure-based contact detector and a contact-aided Kalman filter on Lie groups for floating-base pose estimation. The proposed method is tested in an experimental scenario in which a human wearing a sensorized suit and shoes performs walking motions, and it is shown to obtain a reliable reconstruction of the whole-body human motion.
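The abstract mentions a center of pressure (CoP)-based contact detector feeding the Lie group filter. The sketch below only illustrates the general idea under stated assumptions and is not the authors' detector. It assumes each shoe's force-torque wrench is expressed in a frame with its origin on the sole surface and its z axis normal to the ground, with `fz > 0` meaning the ground pushes on the foot. Under that assumption the CoP is `(-ty / fz, tx / fz)`. A foot is declared in contact when the normal force exceeds a threshold and the CoP lies inside a rectangular sole. The names `Wrench`, `center_of_pressure`, and `in_contact`, the rectangular sole model, and all threshold values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Wrench:
    """Ground reaction wrench on the foot, in a hypothetical sole frame:
    origin on the sole surface, z axis normal to the ground (pointing up)."""
    fx: float  # force components [N]
    fy: float
    fz: float
    tx: float  # torque components [N*m]
    ty: float
    tz: float


def center_of_pressure(w: Wrench) -> tuple[float, float]:
    """Planar CoP (x, y) in metres.

    Valid only when the wrench is measured at a point on the contact plane:
    tau = p x f with p = (px, py, 0) gives tx = py*fz and ty = -px*fz.
    """
    if w.fz <= 0.0:
        raise ValueError("CoP is undefined without a positive normal force")
    return (-w.ty / w.fz, w.tx / w.fz)


def in_contact(w: Wrench,
               half_length: float = 0.12,       # hypothetical sole half-length [m]
               half_width: float = 0.05,        # hypothetical sole half-width [m]
               min_normal_force: float = 30.0,  # hypothetical force threshold [N]
               ) -> bool:
    """Declare contact if the normal force is large enough and the CoP
    lies inside a rectangular approximation of the sole."""
    if w.fz < min_normal_force:
        return False
    px, py = center_of_pressure(w)
    return abs(px) <= half_length and abs(py) <= half_width


if __name__ == "__main__":
    stance = Wrench(0.0, 0.0, 400.0, 8.0, -12.0, 0.0)
    swing = Wrench(0.0, 0.0, 5.0, 0.0, 0.0, 0.0)
    print(center_of_pressure(stance), in_contact(stance), in_contact(swing))
```

Checking the CoP against the support polygon, rather than thresholding force alone, rejects cases such as heel strike or toe-off where force is present but the foot is rolling over an edge. A detector of this kind would then supply the contact flags that tell a contact-aided filter which foot can be treated as stationary.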

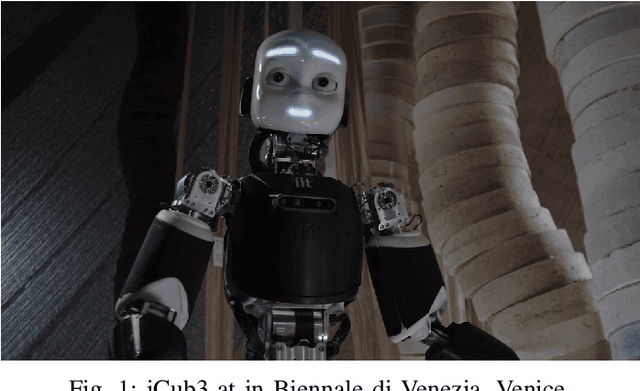

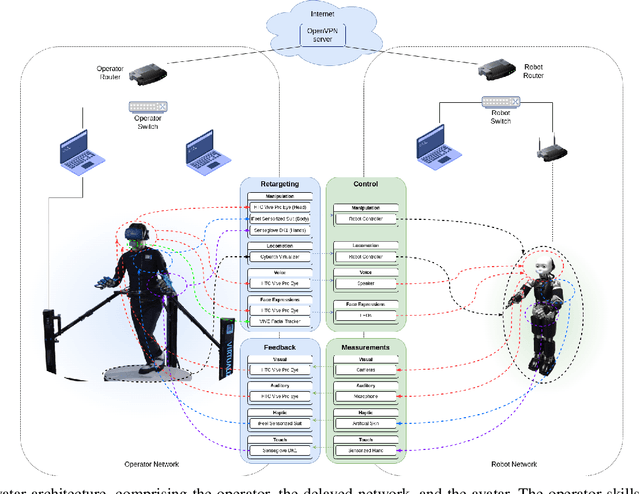

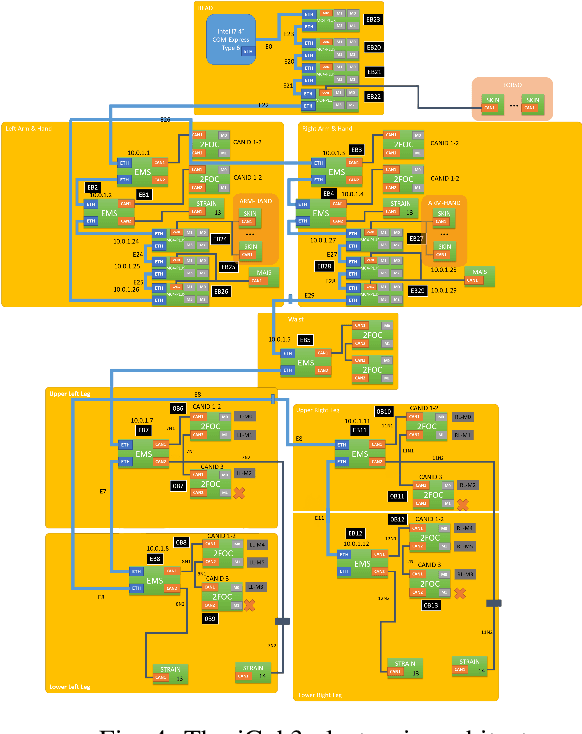

iCub3 Avatar System

Mar 14, 2022

Abstract: We present an avatar system that enables a human operator to visit a remote location via iCub3, a new humanoid robot developed at the Italian Institute of Technology (IIT) that paves the way for the next generation of iCub platforms. On the one hand, we present the iCub3 humanoid, which plays the role of the robotic avatar, paying particular attention to the differences between iCub3 and the classical iCub humanoid robot. On the other hand, we present the operator-side technologies of the avatar system. These mainly consist of iFeel, IIT's lightweight, non-invasive wearable devices for motion tracking and haptic feedback, and of non-IIT technologies designed for virtual reality ecosystems. Finally, we show the effectiveness of the avatar system by describing a demonstration involving real-time teleoperation of iCub3. The robot is located in Venice, at the Biennale di Venezia, while the human operator is more than 290 km away, at IIT in Genoa. Using a standard fiber-optic internet connection, the avatar system transports the operator's locomotion, manipulation, voice, and facial expressions to iCub3, with visual, auditory, haptic, and touch feedback.