Yeshasvi Tirupachuri

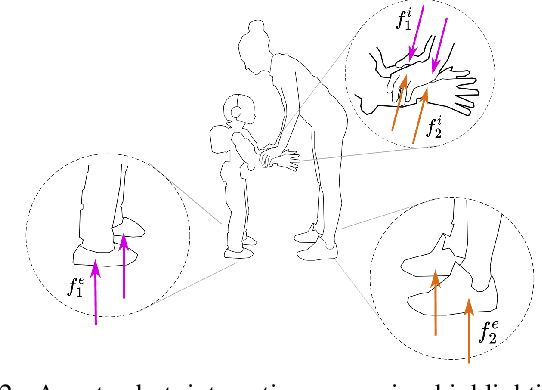

A Control Approach for Human-Robot Ergonomic Payload Lifting

May 15, 2023

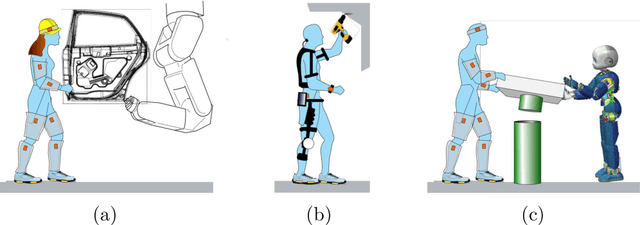

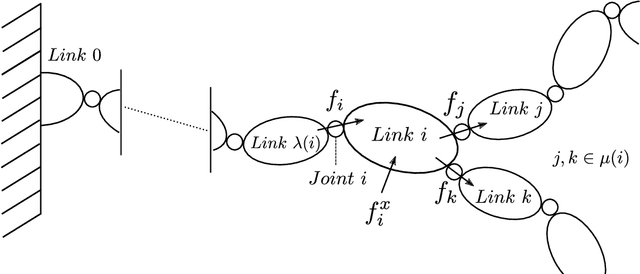

Abstract: Collaborative robots can relieve human operators from excessive effort during payload lifting activities. Modelling the human partner allows the design of safe and efficient collaborative strategies. In this paper, we present a control approach for human-robot collaboration based on human monitoring through whole-body wearable sensors, and interaction modelling through coupled rigid-body dynamics. Moreover, a trajectory advancement strategy is proposed, allowing for online adaptation of the robot trajectory depending on the human motion. The resulting framework allows us to perform payload lifting tasks, taking into account the ergonomic requirements of the agents. Validation has been performed in an experimental scenario using the iCub3 humanoid robot and a human subject sensorized with the iFeel wearable system.

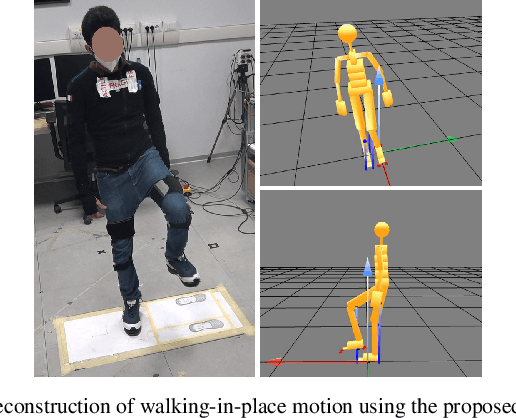

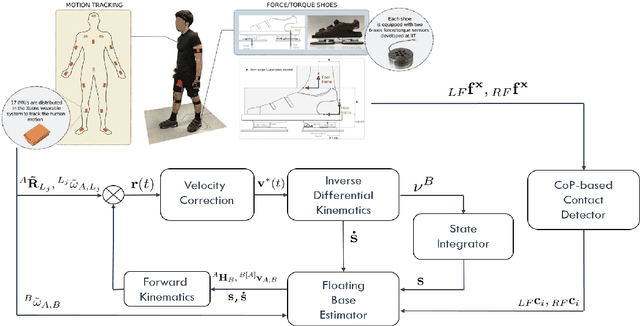

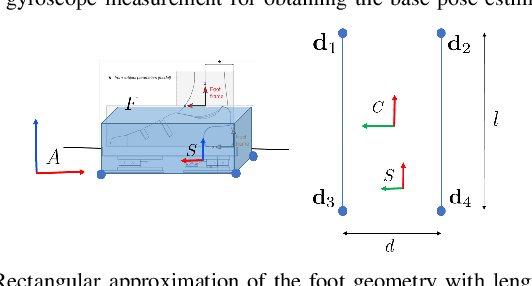

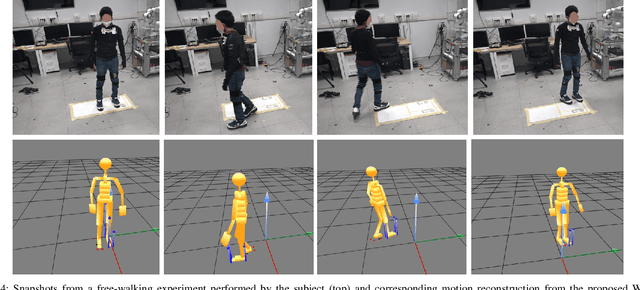

Whole-Body Human Kinematics Estimation using Dynamical Inverse Kinematics and Contact-Aided Lie Group Kalman Filter

May 16, 2022

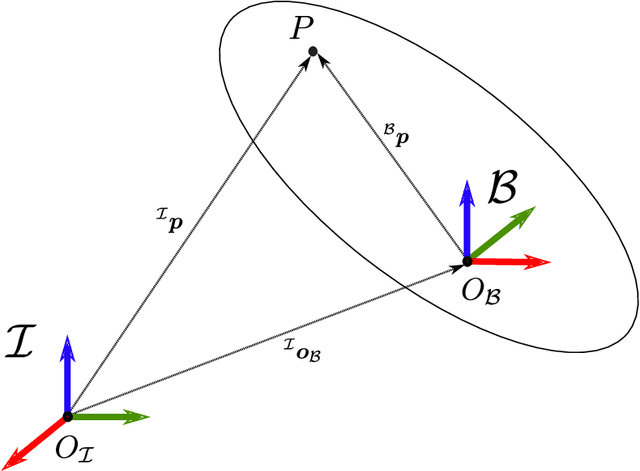

Abstract: Full-body motion estimation of a human through wearable sensing technologies is challenging in the absence of position sensors. This paper contributes to the development of a model-based whole-body kinematics estimation algorithm using wearable distributed inertial and force-torque sensing. This is done by extending the existing dynamical optimization-based Inverse Kinematics (IK) approach for joint state estimation, in cascade, to include a center of pressure-based contact detector and a contact-aided Kalman filter on Lie groups for floating base pose estimation. The proposed method is tested in an experimental scenario where a human equipped with a sensorized suit and shoes performs walking motions. The proposed method is demonstrated to obtain a reliable reconstruction of the whole-body human motion.

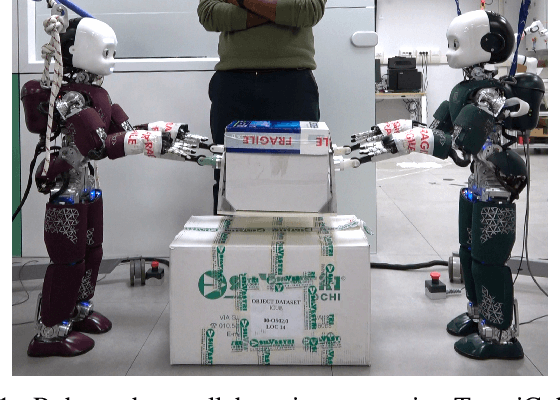

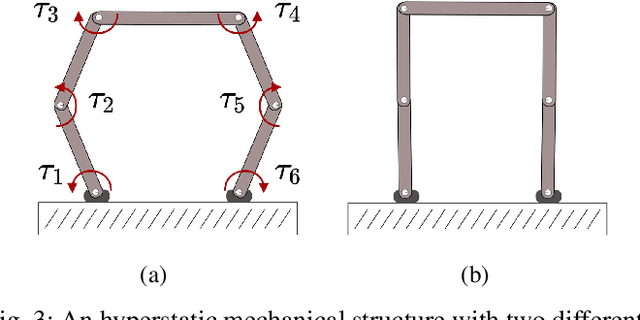

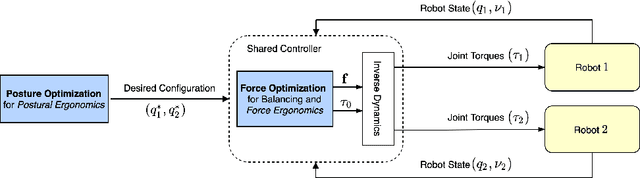

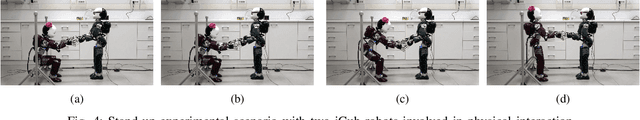

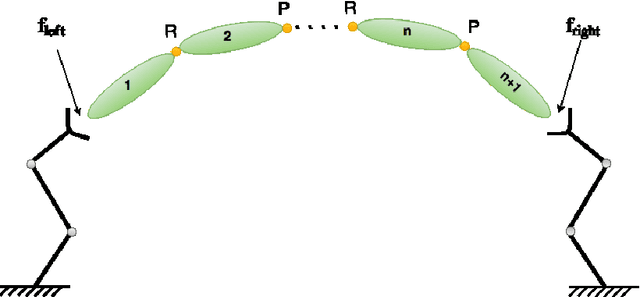

Shared Control of Robot-Robot Collaborative Lifting with Agent Postural and Force Ergonomic Optimization

Apr 28, 2021

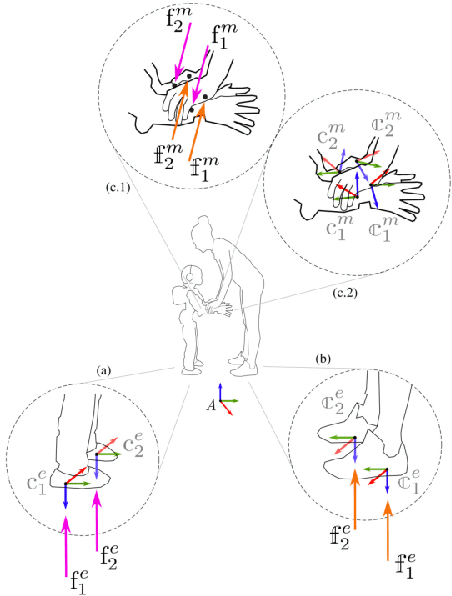

Abstract: Humans show specialized strategies for efficient collaboration. Transferring similar strategies to humanoid robots can improve their capability to interact with other agents, leading the way to complex collaborative scenarios with multiple agents acting on a shared environment. In this paper, we present a control framework for robot-robot collaborative lifting. The proposed shared controller takes into account the joint action of both robots thanks to a centralized controller that communicates with them and solves the whole-system optimization. Efficient collaboration is ensured by taking into account the ergonomic requirements of the robots through the optimization of posture and contact forces. The framework is validated in an experimental scenario with two iCub humanoid robots performing different payload lifting sequences.

Enabling Human-Robot Collaboration via Holistic Human Perception and Partner-Aware Control

Apr 22, 2020

Abstract: As robotic technology advances, the barriers to the coexistence of humans and robots are slowly coming down. Application domains like elderly care, collaborative manufacturing, and collaborative manipulation are considered the need of the hour, and progress in robotics holds the potential to address many societal challenges. Future socio-technical systems will consist of a blended workforce with a symbiotic relationship between human and robot partners working collaboratively. This thesis attempts to address some of the research challenges in enabling human-robot collaboration. In particular, a holistic perception of the human partner, which continuously communicates their intentions and needs in real-time to the robot partner, is crucial for the successful realization of a collaborative task. Towards that end, we present a holistic human perception framework for real-time monitoring of whole-body human motion and dynamics. In addition, leveraging assistance from the human partner can lead to improved human-robot collaboration. In this direction, we attempt to methodically define what constitutes assistance from a human partner and propose partner-aware robot control strategies to endow robots with the capacity to meaningfully engage in a collaborative task.

Recent Advances in Human-Robot Collaboration Towards Joint Action

Jan 02, 2020

Abstract: Robots have existed as separate entities until now, but a symbiotic human-robot partnership is on the horizon. Despite all the recent technical advances in terms of hardware, robots are still not endowed with desirable relational skills that ensure a social component in their existence. This article draws from our experience as roboticists in Human-Robot Collaboration (HRC) with humanoid robots and presents some of the recent advances made towards realizing intuitive robot behaviors and partner-aware control involving physical interactions.

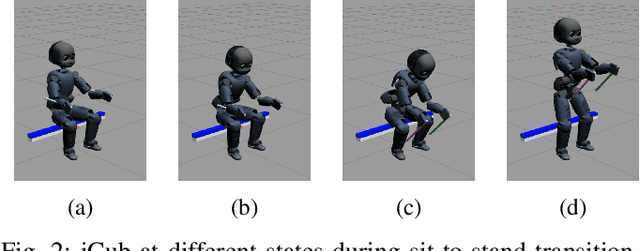

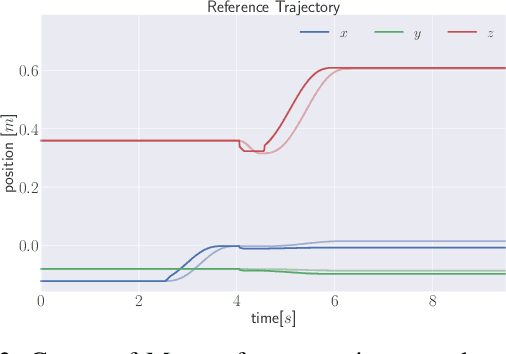

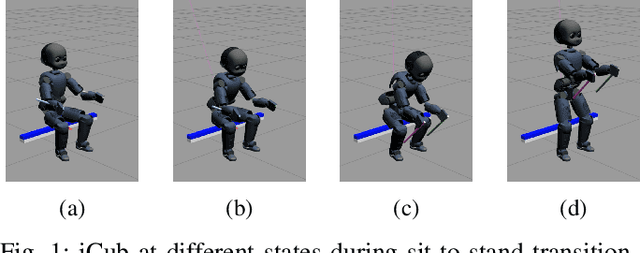

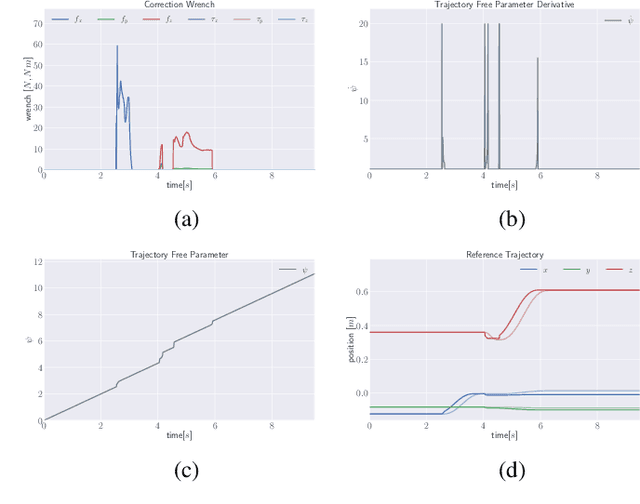

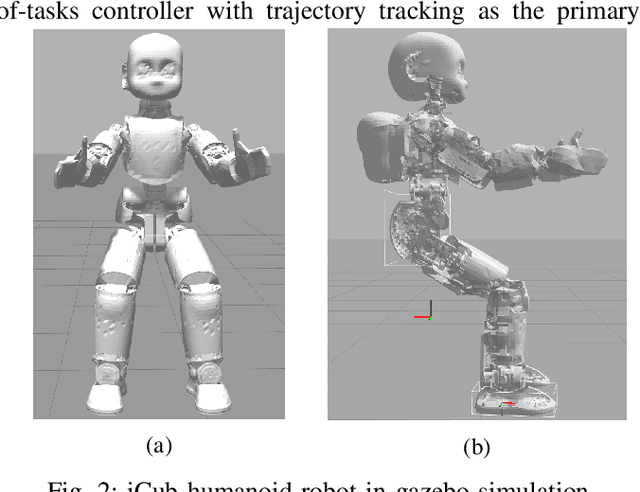

Trajectory Advancement for Robot Stand-up with Human Assistance

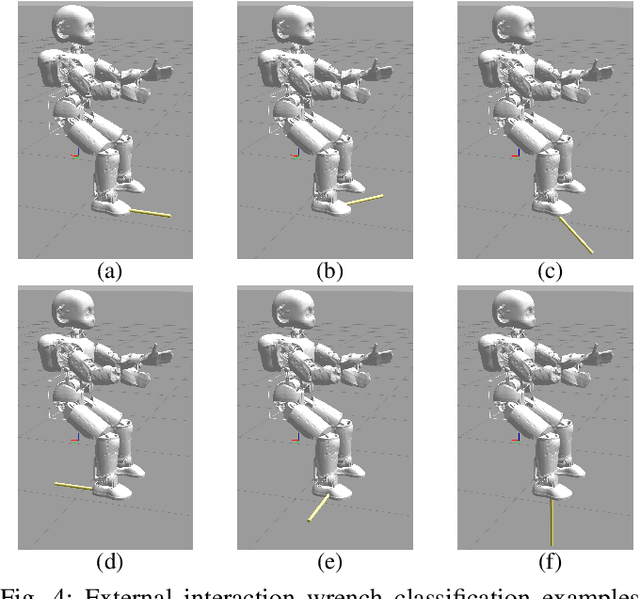

Oct 14, 2019

Abstract: Physical interactions are an inevitable part of human-robot collaboration tasks, and rather than exhibiting simple reactive behaviors to human interactions, collaborative robots need to be endowed with intuitive behaviors. This paper proposes a trajectory advancement approach that facilitates advancement along a reference trajectory by leveraging assistance from the helpful interaction wrench present during human-robot collaboration. We validate our approach through experiments in simulation with iCub.

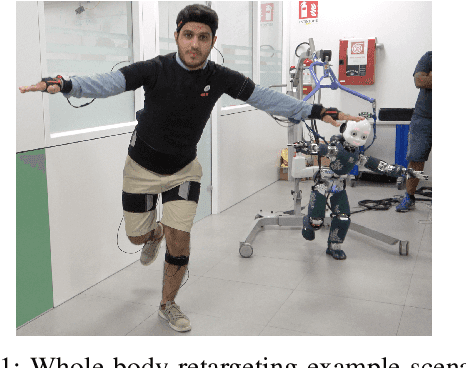

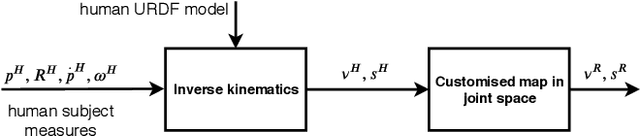

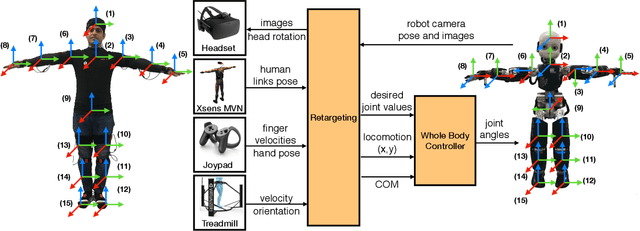

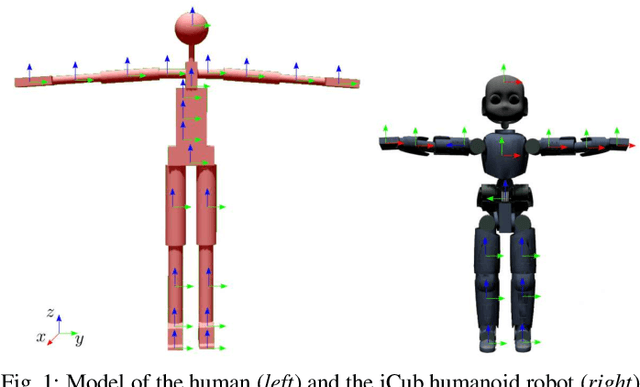

Whole-Body Geometric Retargeting for Humanoid Robots

Sep 22, 2019

Abstract: Humanoid robot teleoperation allows humans to integrate their cognitive capabilities with the apparatus to perform tasks that need high strength, manoeuvrability and dexterity. This paper presents a framework for teleoperation of humanoid robots using a novel approach for motion retargeting through inverse kinematics over the robot model. The proposed method enhances scalability for retargeting, i.e., it allows teleoperating different robots by different human users with minimal changes to the proposed system. Our framework enables an intuitive and natural interaction between the human operator and the humanoid robot at the configuration space level. We validate our approach by demonstrating whole-body retargeting with multiple robot models. Furthermore, we present experimental validation through teleoperation experiments using two state-of-the-art whole-body controllers for humanoid robots.

* Equal author contribution from Kourosh Darvish and Yeshasvi Tirupachuri

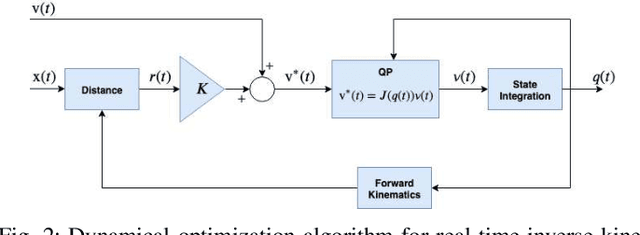

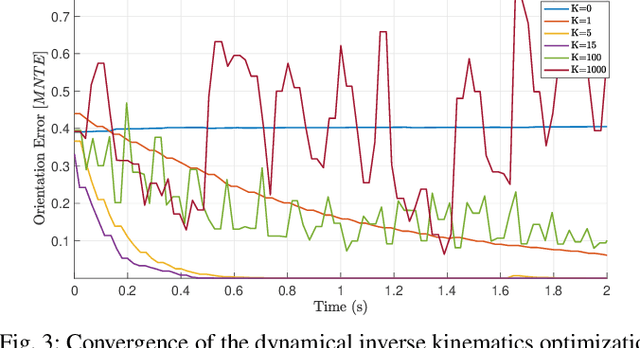

Model-Based Real-Time Motion Tracking using Dynamical Inverse Kinematics

Sep 17, 2019

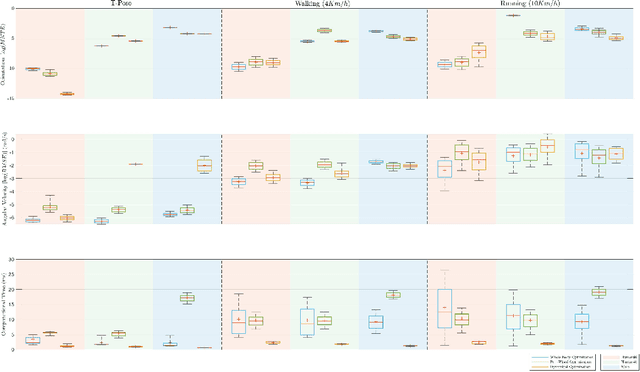

Abstract: This paper contributes towards the development of motion tracking algorithms for time-critical applications, proposing an infrastructure for dynamically solving the inverse kinematics of human models. We present a method based on the integration of the differential kinematics, whose convergence is proved using Lyapunov analysis. The method is tested in an experimental scenario where the motion of a subject is tracked in static and dynamic configurations, and the inverse kinematics is solved for both human and humanoid models. The architecture is evaluated in terms of both accuracy and computational load, and compared to iterative optimization algorithms.
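As a rough illustration of what "integration of the differential kinematics" can look like, the sketch below applies a generic closed-loop inverse kinematics scheme to a planar two-link arm. It is not taken from the paper: the arm, the link lengths, the gain, the time step, and every function name are assumptions made for the example. For a static target, the joint velocity is chosen so that the task-space error e obeys e_dot = -K e in continuous time, which makes V = 0.5 * e^T e a Lyapunov function away from singular configurations.

```python
import math

# Hypothetical planar two-link arm; link lengths are illustrative assumptions.
L1, L2 = 1.0, 1.0


def forward_kinematics(q):
    """End-effector position (x, y) for joint angles q = (q1, q2)."""
    x = L1 * math.cos(q[0]) + L2 * math.cos(q[0] + q[1])
    y = L1 * math.sin(q[0]) + L2 * math.sin(q[0] + q[1])
    return (x, y)


def jacobian(q):
    """2x2 Jacobian of the end-effector position with respect to q."""
    s1, c1 = math.sin(q[0]), math.cos(q[0])
    s12, c12 = math.sin(q[0] + q[1]), math.cos(q[0] + q[1])
    return ((-L1 * s1 - L2 * s12, -L2 * s12),
            (L1 * c1 + L2 * c12, L2 * c12))


def solve_2x2(a, b):
    """Solve a @ v = b for a 2x2 matrix a using Cramer's rule."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if abs(det) < 1e-9:
        raise ValueError("Jacobian is singular at this configuration")
    return ((a[1][1] * b[0] - a[0][1] * b[1]) / det,
            (a[0][0] * b[1] - a[1][0] * b[0]) / det)


def track(target, q0, gain=10.0, dt=0.01, steps=500):
    """Integrate q_dot = J(q)^-1 * gain * (target - x(q)) with forward Euler."""
    q = list(q0)
    for _ in range(steps):
        x = forward_kinematics(q)
        # Task-space velocity that drives the error to zero (static target,
        # so there is no feedforward velocity term).
        v = (gain * (target[0] - x[0]), gain * (target[1] - x[1]))
        dq = solve_2x2(jacobian(q), v)
        q[0] += dt * dq[0]
        q[1] += dt * dq[1]
    return q


if __name__ == "__main__":
    q_final = track(target=(1.0, 1.0), q0=(0.3, 0.5))
    print(q_final, forward_kinematics(q_final))
```

Each step costs one Jacobian evaluation and one small linear solve, with no inner iteration loop, which is the kind of property that makes integration-based schemes attractive for time-critical tracking. A moving target would additionally need its velocity as a feedforward term.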

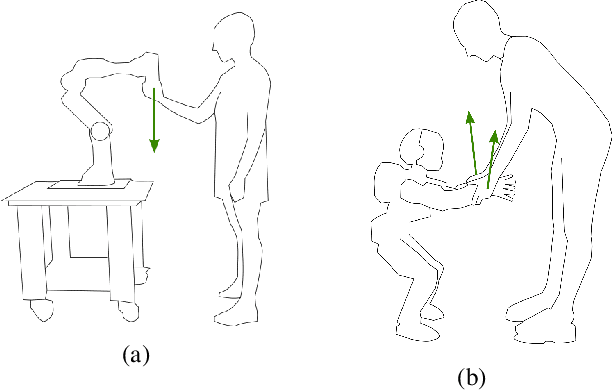

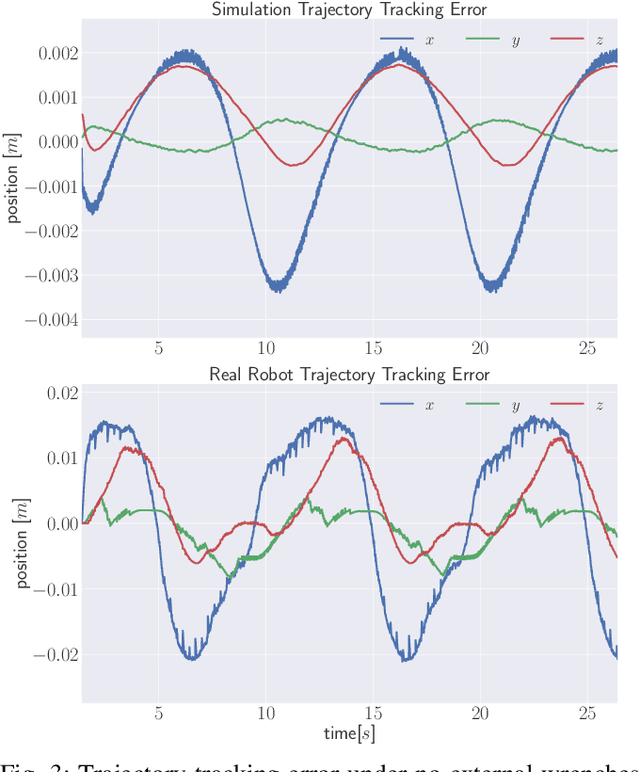

Trajectory Advancement during Human-Robot Collaboration

Jul 31, 2019

Abstract: As technology advances, the barriers to the coexistence of humans and robots are slowly coming down. Physical interactions will undeniably play a prominent role in collaboration and cooperation between humans and robots. Rather than exhibiting simple reactive behaviors to human interactions, it is desirable to endow robots with augmented capabilities of exploiting human interactions for successful task completion. Towards that goal, in this paper, we propose a trajectory advancement approach in which we mathematically derive the conditions that facilitate advancing along a reference trajectory by leveraging assistance from the helpful interaction wrench present during human-robot collaboration. We validate our approach through experiments conducted with the iCub humanoid robot both in simulation and on the real robot.

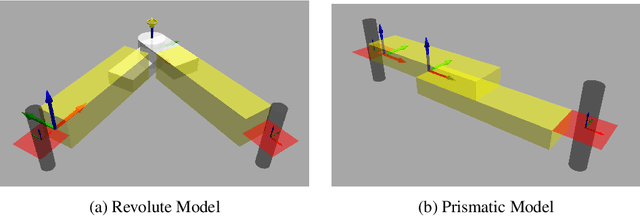

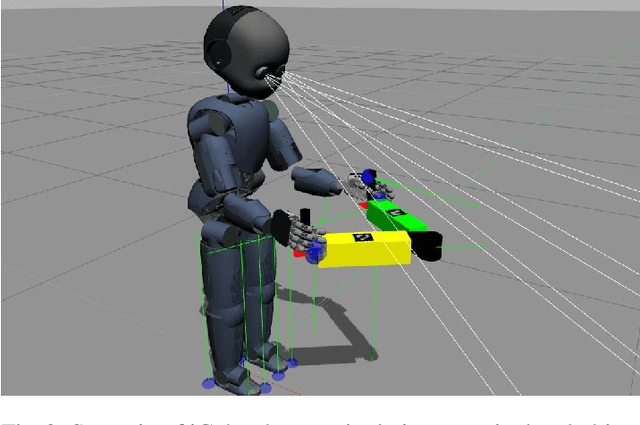

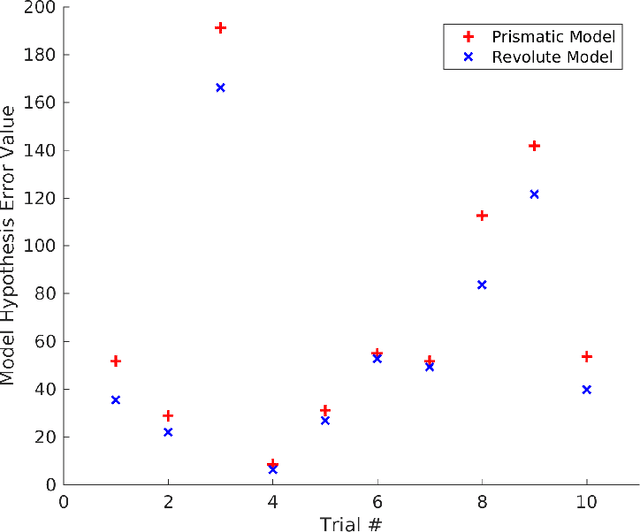

Momentum-Based Topology Estimation of Articulated Objects

Mar 20, 2019

Abstract: Articulated objects like doors, drawers, valves, and tools are pervasive in our everyday unstructured dynamic environments. Articulation models describe the joint nature between the different parts of an articulated object. As most of these objects are passive, a robot has to interact with them to infer all the articulation models to understand the object topology. We present a general algorithm to estimate the inherent articulation models by exploiting the momentum of the articulated system along with the interaction wrench while manipulating the object. We validate our approach with experiments in a simulation environment.
